NVMe Namespace


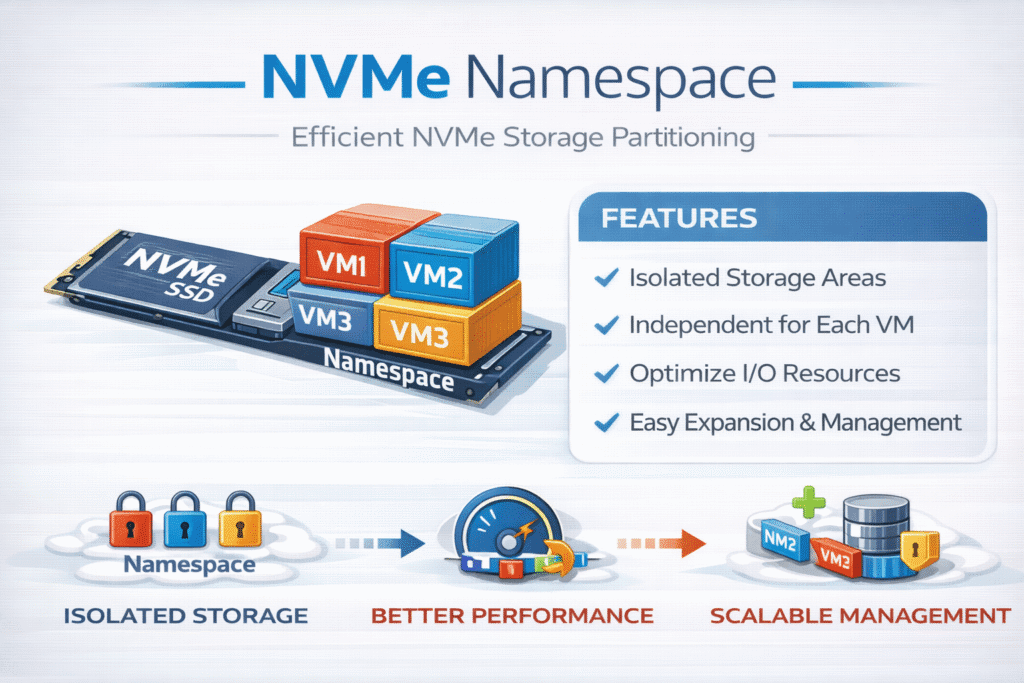

An NVMe namespace is a logical range of block addresses that an NVMe controller presents to a host as a usable block device. In Linux, each namespace typically shows up as its own block device, for example /dev/nvme0n1, which you can format, mount, or hand to LVM.
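To make the Linux naming concrete, here is a minimal Python sketch that splits a device name such as nvme0n1 into its numeric parts. The helper and field names are illustrative, not part of any tool. The sketch assumes the common nvmeXnY[pZ] pattern; the number after "n" usually tracks the namespace but is not guaranteed to equal the NSID, and multipath setups can expose additional name forms that this pattern does not cover.

```python
import re
from typing import NamedTuple, Optional

# Matches names such as "nvme0n1" or "nvme2n3p1" (optional partition suffix).
_NVME_NAME = re.compile(r"^nvme(\d+)n(\d+)(?:p(\d+))?$")


class NvmeBlockName(NamedTuple):
    instance: int             # number after "nvme" (controller or subsystem instance)
    ns_index: int             # number after "n" (usually, not always, the NSID)
    partition: Optional[int]  # number after "p", or None for the whole namespace


def parse_nvme_name(name: str) -> Optional[NvmeBlockName]:
    """Split a Linux NVMe block device name into its numeric parts.

    Accepts either a bare name ("nvme0n1") or a path ("/dev/nvme0n1").
    Returns None when the name does not follow the nvmeXnY[pZ] pattern.
    """
    base = name.rsplit("/", 1)[-1]
    match = _NVME_NAME.match(base)
    if match is None:
        return None
    instance, ns_index, partition = match.groups()
    return NvmeBlockName(
        int(instance),
        int(ns_index),
        int(partition) if partition is not None else None,
    )
```

For example, parse_nvme_name("/dev/nvme2n3p1") yields instance 2, namespace index 3, partition 1, while a non-NVMe name such as "sda" returns None.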

Executives usually care about namespaces for one reason: they let teams carve up high-performance flash into clear units with clear owners, which supports predictable SLAs for Kubernetes Storage and Software-defined Block Storage.

NVMe Namespace Basics for Storage Architects

A namespace gives you isolation at the logical block level, not “physical partition walls”: namespaces on the same device still share its controller and flash. The controller uses a namespace identifier (NSID) to map host I/O to that logical space. Many vendors treat a namespace like an NVMe-era LUN, especially in shared storage environments.

Namespaces also create a clean boundary for multi-tenancy and policy. You can align one namespace to one application tier, one cluster, one database, or one tenant. That setup makes it easier to manage performance, capacity, and change control than a single device that mixes workloads.


How Namespaces Show Up in Kubernetes Storage

Kubernetes Storage uses abstractions such as PersistentVolumes and StorageClasses. When your backend exposes block devices as namespaces, your CSI driver can map each claim to a volume that targets a namespace boundary, depending on the driver and platform design.
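As a rough illustration of the claim side of that mapping, the Python sketch below builds a PersistentVolumeClaim that asks for a raw block volume. The claim name and the StorageClass name (nvme-tcp-fast) are hypothetical, and whether the resulting volume is backed one-to-one by an NVMe namespace depends entirely on the CSI driver behind that class.

```python
import json


def block_pvc_manifest(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim manifest that requests a raw block volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # "Block" hands the pod a raw device instead of a mounted filesystem.
            "volumeMode": "Block",
            # Hypothetical class name; use one defined in your cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    manifest = block_pvc_manifest("orders-db-data", "nvme-tcp-fast", "100Gi")
    # Kubernetes accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

The application only sees the claim; the StorageClass is where a platform team would attach the namespace-related policy.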

That mapping matters for Software-defined Block Storage because the namespace becomes the unit you can size, move, snapshot, throttle, or isolate, while Kubernetes keeps the application interface consistent.

NVMe/TCP and Namespace Access Across the Network

NVMe/TCP extends NVMe-oF over standard TCP/IP networks, so you can export namespaces beyond a single PCIe host while keeping routable Ethernet and familiar tools. This transport choice often fits disaggregated designs where compute and storage scale independently, which many platform teams prefer for large Kubernetes Storage estates.

From a control standpoint, NVMe/TCP makes namespace boundaries more useful. You can assign namespaces per tenant, then apply QoS and limits at the namespace level instead of fighting noisy neighbors inside one shared device.
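One common way to express a per-namespace limit is a token bucket keyed by NSID. The sketch below is only an illustration of that idea: the class and method names are invented here, and it does not describe how simplyblock or any NVMe target actually enforces QoS.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class IopsBucket:
    rate: float          # tokens (I/Os) refilled per second
    burst: float         # maximum tokens the bucket can hold
    tokens: float = 0.0  # tokens currently available
    last: float = 0.0    # timestamp of the previous refill, in seconds

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class NamespaceLimiter:
    """Holds one bucket per NSID; namespaces without a bucket are unlimited."""

    def __init__(self) -> None:
        self._buckets: Dict[int, IopsBucket] = {}

    def set_limit(self, nsid: int, iops: float, burst: float) -> None:
        # Start with a full bucket so an idle namespace can burst immediately.
        self._buckets[nsid] = IopsBucket(rate=iops, burst=burst, tokens=burst)

    def admit(self, nsid: int, now: float) -> bool:
        bucket = self._buckets.get(nsid)
        return True if bucket is None else bucket.allow(now)
```

Because each namespace has its own bucket, a burst from one tenant drains only that tenant's tokens and leaves the others untouched.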

Benchmarking Namespace-Level Performance Without Guesswork

Namespace benchmarking works best when you test the same namespace under both steady-state load and “busy platform” conditions. Kubernetes documentation notes that storage performance and behavior depend on the class of storage and how you provision it.

To keep tests repeatable, many teams use standard Linux NVMe tools and fio-style patterns. If you create or change namespaces during evaluation, nvme-cli's create-ns command reports the assigned namespace identifier after a successful create operation.
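A repeatable test usually starts with a fixed fio command line. The Python sketch below only assembles such a command as a list of arguments and does not run it. The device path, job name, and default values are placeholders chosen for illustration; the job is a read-only random-read pattern so it does not overwrite data on the namespace.

```python
from typing import List


def fio_randread_cmd(
    device: str,
    runtime_s: int = 60,
    iodepth: int = 32,
    numjobs: int = 4,
    block_size: str = "4k",
) -> List[str]:
    """Assemble a read-only fio random-read job against one namespace device."""
    return [
        "fio",
        "--name=ns-randread",        # job name (arbitrary label)
        f"--filename={device}",      # namespace block device, e.g. /dev/nvme0n1
        "--readonly",                # safety check: refuse to issue writes
        "--ioengine=libaio",
        "--direct=1",                # bypass the page cache
        "--rw=randread",
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",              # run for the full runtime
        "--group_reporting",         # aggregate results across jobs
        "--output-format=json",      # machine-readable results
    ]


if __name__ == "__main__":
    print(" ".join(fio_randread_cmd("/dev/nvme0n1")))
```

Keeping the command in one function makes it easy to rerun the identical job during quiet hours and during busy periods, then compare the two JSON reports.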

When you report results to leadership, highlight p95 and p99 latency, plus CPU cost per I/O. Tail latency is what breaks database SLOs, and CPU waste is what drives up cluster cost.
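The two reporting metrics can be computed from raw samples with a few lines of Python. This sketch uses the nearest-rank percentile definition, which is one of several conventions, and the function names are illustrative; benchmarking tools may interpolate differently and report slightly different values.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def cpu_seconds_per_io(cpu_seconds: float, completed_ios: int) -> float:
    """CPU time consumed during the test divided by the I/Os completed."""
    if completed_ios <= 0:
        raise ValueError("completed_ios must be positive")
    return cpu_seconds / completed_ios


if __name__ == "__main__":
    latencies_us = [110, 120, 95, 105, 900, 130, 100, 115, 125, 98]
    print("p95 (us):", percentile(latencies_us, 95))
    print("p99 (us):", percentile(latencies_us, 99))
```

In the small sample above, a single 900 µs outlier sets both p95 and p99, which is why tail percentiles expose problems that an average hides.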

Practical Ways to Tune Namespaces for Predictable Latency

Namespace design affects performance most when teams pair it with clear workload placement and clean resource boundaries. In mixed clusters, a namespace-per-workload pattern often limits the blast radius during spikes.

- Size namespaces to match growth and avoid frequent resize churn during peak business hours.

- Align one namespace to one performance class, then map that class to a dedicated StorageClass in Kubernetes.

- Separate noisy batch I/O from latency-sensitive databases by using different namespaces, not just different PVC names.

- Track rebuild and maintenance windows, and rerun latency tests during those periods, not only during quiet hours.

- Use an efficient data path where possible; user-space, zero-copy techniques reduce overhead in high IOPS environments.
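For the sizing point in the list above, a simple growth projection can help pick a namespace size up front. The Python sketch below assumes compound monthly growth plus a fixed headroom factor and converts the result to logical blocks, since namespace sizes are expressed in blocks. The function name, the growth model, and the default values are all assumptions for illustration, not a recommendation.

```python
import math


def projected_size_blocks(
    current_bytes: int,
    monthly_growth: float,
    months: int,
    block_size: int = 4096,
    headroom: float = 0.2,
) -> int:
    """Project capacity forward and return the size in logical blocks.

    monthly_growth and headroom are fractions (0.05 means 5 percent).
    """
    if current_bytes < 0 or months < 0 or block_size <= 0:
        raise ValueError("invalid sizing input")
    projected = current_bytes * (1 + monthly_growth) ** months
    padded = projected * (1 + headroom)
    # Round up so the namespace never ends up smaller than the projection.
    return math.ceil(padded / block_size)


if __name__ == "__main__":
    one_tib = 1024 ** 4
    blocks = projected_size_blocks(one_tib, monthly_growth=0.05, months=12)
    print("blocks:", blocks, "bytes:", blocks * 4096)
```

Sizing once for the planning horizon is what avoids resize operations during peak hours; the exact growth rate and headroom should come from your own capacity data.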

Decision Matrix – NVMe Namespace vs Partition vs LUN

A quick comparison helps teams pick the right abstraction for Software-defined Block Storage and Kubernetes Storage designs.

| Item | NVMe Namespace | Disk Partition | LUN |
|---|---|---|---|
| Where it lives | NVMe controller concept | OS-level layout | Storage array concept |
| Typical purpose | Logical NVMe block device boundary | Split one device locally | Present storage to hosts |
| Operational fit | Strong for multi-tenant NVMe and NVMe/TCP | Good for single-host cases | Common in SAN workflows |
| “Unit of control” | NSID and namespace policies | Partition table | Array volume policies |

Predictable Namespace-Based NVMe/TCP with Simplyblock™

Simplyblock™ focuses on predictable Software-defined Block Storage for Kubernetes Storage, including NVMe-centric designs and NVMe/TCP topologies. Use NVMe/TCP Kubernetes Storage to align namespace boundaries with tenant boundaries, then apply QoS so one workload cannot dominate shared infrastructure.

For platform teams that run bare-metal clusters, a namespace-first model pairs well with disaggregated storage because it keeps the control plane clean: Kubernetes asks for volumes, and the storage layer maps policy and isolation to namespace-level constructs.

Where NVMe Namespaces Are Going Next

The NVMe roadmap continues to expand namespace-related capabilities, including Zoned Namespaces (ZNS) and other feature work called out in newer NVMe base specification releases.

For executives, the key takeaway is simple: as devices add more per-namespace controls, teams can tighten governance and reduce risk without adding extra storage silos.


Questions and Answers

NVMe namespaces allow multiple isolated storage volumes to exist on the same physical NVMe device, making them ideal for multi-tenant Kubernetes workloads. They help ensure predictable performance and better I/O separation across tenants.

When paired with NVMe over TCP, namespaces let hosts reach separate volumes in parallel, which can reduce contention. For workloads like databases and analytics engines, that can translate into higher throughput and lower latency.

While logical volumes are managed by software like LVM, NVMe namespaces are natively supported by the SSD hardware and NVMe protocol. In software-defined storage, this leads to reduced overhead and faster provisioning.

Modern CSI drivers can leverage NVMe namespaces for dynamic volume provisioning. This enables storage backends to deliver high-performance persistent volumes for stateful containerized applications.

In shared infrastructure, NVMe namespaces can be combined with encryption at rest and access control to ensure secure, isolated storage per tenant or workload. This is critical for regulatory compliance and performance isolation.