NVMe-oF target on DPU

Terms related to simplyblock

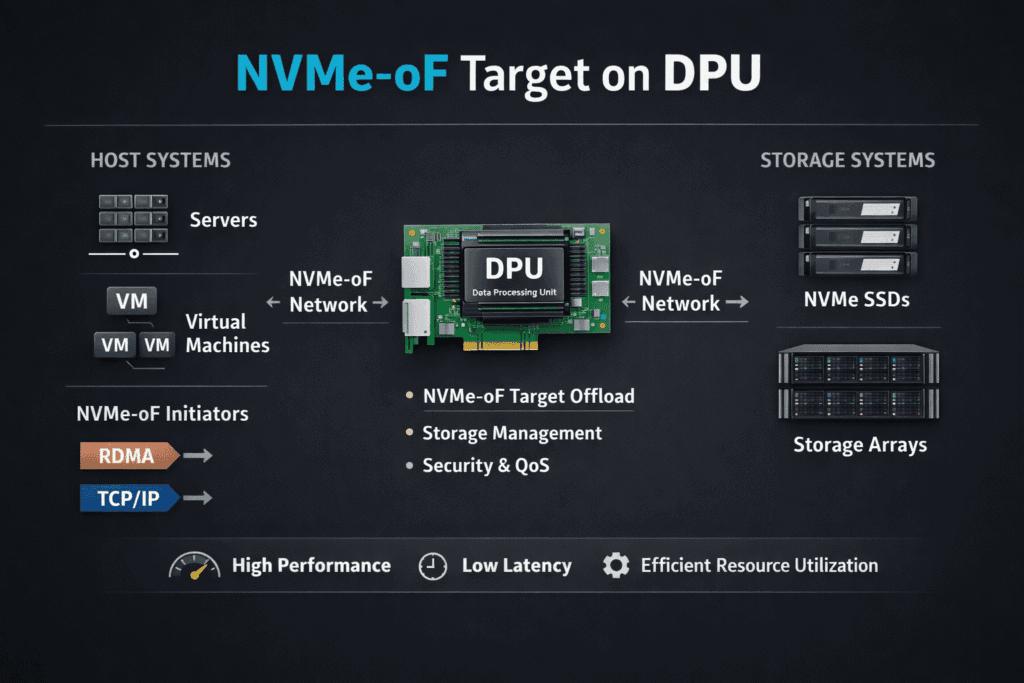

An NVMe-oF target on DPU is a storage target endpoint that runs on a data processing unit and exposes NVMe namespaces to hosts over a fabric transport. Instead of terminating NVMe-oF on the host CPU (or on a general-purpose storage server), the DPU terminates the storage protocol, handles data movement, and can enforce policies closer to the network edge.

For environments standardizing on NVMe/TCP and Kubernetes Storage, this pattern is usually about CPU efficiency, tighter isolation, and predictable tail latency for multi-tenant workloads.

What is the “target” in NVMe-oF, and why place it on a DPU?

In NVMe-oF, the “target” is the side that exports storage (namespaces) to clients (initiators/hosts). Placing the target on a DPU shifts protocol processing and I/O path work away from the host’s general-purpose cores. In practice, that can reduce context switching, lower CPU overhead, and make it easier to keep data-plane work isolated from application noise, which matters when the same cluster runs latency-sensitive databases next to background jobs.

This is also a clean architectural fit for disaggregated storage designs, where compute and storage scale independently, and where NVMe/TCP is used to carry NVMe semantics over standard Ethernet.

🚀 Run NVMe-oF Targets on DPUs with NVMe/TCP

Use Simplyblock to offload the storage data path and keep latency predictable in Kubernetes Storage.

👉 Use Simplyblock for NVMe-oF and SPDK →

How a DPU-based target fits into Kubernetes Storage

Kubernetes Storage adds two pressures that DPUs help with: noisy-neighbor I/O and operational sprawl. A DPU-based target can provide a more deterministic data path, while Kubernetes consumes storage through CSI, StorageClasses, and PersistentVolumeClaims. The Kubernetes control plane stays in charge of lifecycle and placement, while the DPU handles the fast path.

With simplyblock, the intent is to keep this consumption model consistent, whether you deploy hyper-converged, disaggregated, or mixed clusters. That means you can standardize on Software-defined Block Storage semantics and use the right fabric transport (NVMe/TCP broadly, and RDMA where justified) without changing the way applications request volumes.

Why SPDK matters for NVMe-oF targets on DPUs

DPUs are most valuable when the storage stack is efficient enough to justify offload. SPDK’s user-space model is designed to reduce kernel overhead and deliver microsecond-level behavior in the right conditions, which aligns with a DPU’s role as a data-plane processor. In operational terms, the goal is fewer CPU cycles per I/O, better tail latency behavior under load, and more headroom for application compute on the host.

Simplyblock leans on SPDK fundamentals as part of its performance approach, so teams can get SPDK-class characteristics with platform-level lifecycle, observability, and policy controls rather than hand-assembling an SPDK environment.

NVMe/TCP vs RDMA when the target is offloaded

A DPU does not automatically imply RDMA, and many enterprises standardize first on NVMe/TCP because it works with existing Ethernet networks and avoids the operational constraints of lossless fabrics.

When workloads demand tighter p99 latency, RDMA (for example, RoCE) can be introduced selectively, including within the same Kubernetes cluster. The important architectural point is that the platform should not force a forklift redesign just to adopt RDMA where it makes sense.

NVMe-oF Target Placement Comparison

The choice is rarely “DPU or not,” it is about where you terminate the storage protocol, where you enforce QoS, and where you spend CPU cycles. This table summarizes common trade-offs.

| Approach | Where the NVMe-oF target runs | Strengths | Typical trade-offs | Best fit |

| Host-based target | Application/compute host CPU | Lowest hardware complexity, fast iteration | CPU overhead on hosts, weaker isolation under contention | Small clusters, early proofs of concept |

| DPU-based target | DPU on the data path | Strong isolation, improved CPU efficiency, policy closer to network | Requires DPU operational model, hardware qualification | Multi-tenant platforms, strict SLOs, dense clusters |

| Storage-server target | Dedicated storage nodes (x86/ARM) | Operational familiarity, easy capacity scaling | More host CPU use than DPU offload, added hop in some topologies | General enterprise SAN alternative at scale |

Simplyblock™ for NVMe-oF Targets on DPUs

Simplyblock uses an SPDK-based, user-space, zero-copy data path that fits DPU-centric NVMe-oF target designs where CPU efficiency and consistent latency matter. It helps keep storage protocol work off host cores, which is useful when dense clusters run mixed workloads. The result is a cleaner separation between application compute and the storage fast path.

It supports NVMe/TCP for standard Ethernet environments and NVMe/RoCEv2 when RDMA is justified. In Kubernetes Storage, simplyblock keeps operations consistent across hyper-converged and disaggregated deployments with multi-tenancy and QoS.

Common deployment patterns

The following are typical ways a DPU-based target shows up in production designs for Software-defined Block Storage and Kubernetes Storage, with NVMe/TCP as the baseline transport.

- In a disaggregated design, storage nodes export volumes over NVMe/TCP, and DPUs handle target termination and policy enforcement at the edge of the storage network, improving isolation and CPU efficiency on compute nodes.

- In a hyper-converged design, DPUs can be used on storage-capable worker nodes to keep the data plane stable even when pods churn, and to reduce I/O interference with application scheduling.

- In a mixed design, general workloads use NVMe/TCP, while a subset of nodes (often GPU or low-latency pools) uses RDMA for stricter tail latency targets, still managed under one storage control plane.

Related Terms

These glossary terms are commonly reviewed alongside NVMe-oF target on DPU designs in NVMe/TCP and Kubernetes Storage environments.

Data Processing Unit (DPU)

Zero-copy I/O

Storage Virtualization

What Is a Storage Controller?

Questions and Answers

Running an NVMe-oF target on a DPU offloads the full data path from the CPU, minimizing software stack overhead. This reduces round-trip latency and enables direct, high-speed access to storage—especially valuable in low-latency Kubernetes environments.

Using a DPU reduces latency, increases IOPS, and offloads protocol handling from the CPU. It enables better scalability and performance in software-defined storage environments without requiring custom hardware or proprietary appliances.

NVMe-oF benefits from DPU acceleration by achieving near-local NVMe performance over standard Ethernet. The DPU handles protocol translation, I/O processing, and security, making it ideal for distributed systems needing efficient remote storage access.

Yes, NVMe-oF over TCP on a DPU avoids the complexity and cost of RDMA or Fibre Channel setups. It runs on standard Ethernet and delivers high performance without special NICs—making it ideal for scalable deployments, especially when paired with NVMe over TCP.

Absolutely. A DPU-based NVMe-oF target minimizes storage latency and protocol overhead, leading to faster persistent volumes in Kubernetes. It’s especially powerful when integrated with a CSI-compliant storage layer like Simplyblock’s Kubernetes-native platform.