NVMe over TCP SAN Alternative


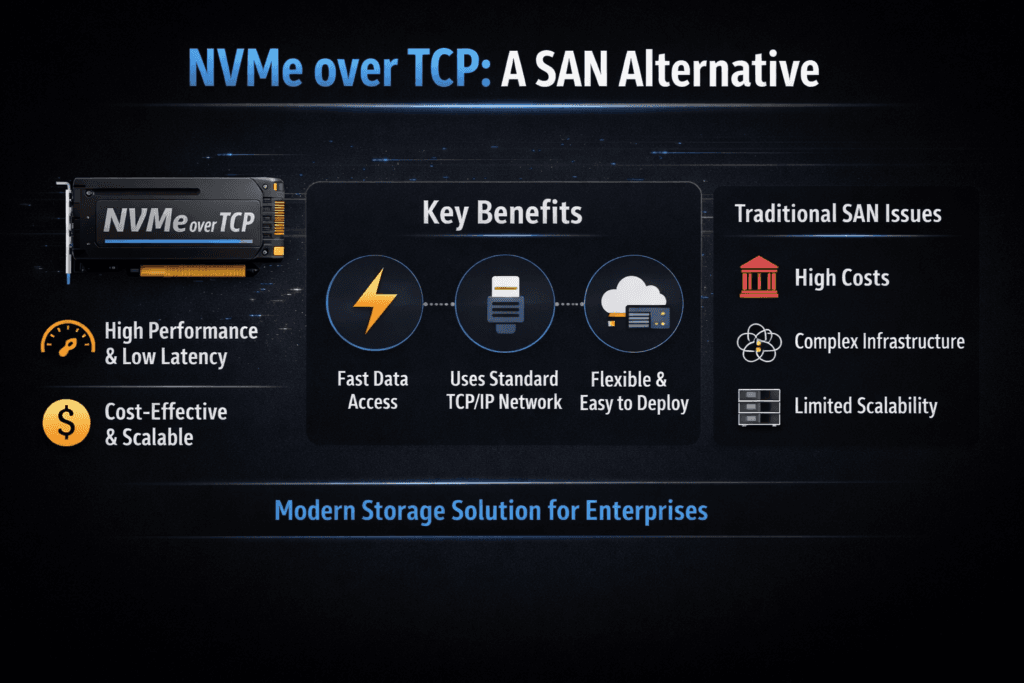

An NVMe over TCP SAN Alternative replaces traditional shared block storage built on Fibre Channel or legacy iSCSI arrays with NVMe-oF over standard Ethernet. You still deliver centralized block volumes to many hosts, but you do it with NVMe/TCP on the same IP network your teams already operate.

This model matters because many SAN estates carry high costs, long change cycles, and tight vendor lock-in. Teams also struggle to line up SAN workflows with cloud-native automation. A SAN alternative built on Software-defined Block Storage can scale out on commodity servers, keep upgrades predictable, and fit better with platform engineering goals.

What “SAN alternative” should deliver in enterprise terms

A real SAN alternative must meet the same business needs as a SAN. It must provide shared block access, fast recovery from node loss, and stable latency under load. It must also support growth without forcing a forklift refresh.

Most buyers evaluate these areas first: the performance envelope (including tail latency), the blast radius of failures, the day-2 burden, and how well the platform supports audit, change control, and tenant isolation. If the system cannot hold steady p99 latency while multiple teams run jobs at once, it will create hidden costs even if peak IOPS looks strong.


Where the NVMe over TCP SAN Alternative fits best

An NVMe over TCP SAN Alternative works best when you want SAN-like shared storage on Ethernet and want to avoid making specialized fabrics the default. It also fits when you plan to run both bare-metal and virtualized workloads and want one block layer across them.

Common fit signals include scale-out app fleets, database clusters, AI feature stores, and log pipelines that need consistent block performance across many nodes. It also fits when teams need a SAN alternative that aligns with GitOps and automated rollouts, instead of manual zoning and per-host mapping steps.

Kubernetes Storage patterns that influence SAN replacement design

Kubernetes changes how teams consume storage. Apps ask for a volume through a claim, and the platform binds it through CSI and policy. That shift favors systems that can provision fast, support topology rules, and keep per-tenant behavior stable.
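As a minimal sketch of that claim-based flow, the snippet below builds a PersistentVolumeClaim manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The claim name, size, and the storage class name `nvme-tcp-block` are hypothetical placeholders, not names defined by any particular product.

```python
import json


def build_pvc(name, storage_class, size, namespace="default"):
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical class name; use the one your CSI driver exposes.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # All names and sizes here are illustrative assumptions.
    pvc = build_pvc("db-data", "nvme-tcp-block", "100Gi")
    print(json.dumps(pvc, indent=2))
```

Keeping the manifest as data makes it easy to template per tenant or per tier from the same pipeline that manages the rest of the platform.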

Two patterns show up often. Some teams choose a hyper-converged layout to keep traffic local and simplify wiring. Others choose a disaggregated layout to scale compute and storage independently. Many environments land in a mixed model that pins high-IO tiers close to apps while placing bulk tiers on storage-heavy nodes. A SAN alternative should support these patterns without forcing separate stacks.

NVMe over TCP SAN Alternative and NVMe/TCP performance basics

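NVMe/TCP is the NVMe-oF transport that carries NVMe commands over TCP/IP, which makes deployment simpler than RDMA-first designs because it does not require RDMA-capable NICs or a specially configured fabric. Standard Ethernet also makes operations easier, but because TCP processing consumes host CPU cycles, it raises the importance of CPU efficiency and clean networking. That is where user-space, zero-copy I/O paths can matter, especially when load climbs and queue depth grows.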
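For orientation, a Linux host typically attaches to an NVMe/TCP target with `nvme-cli`. The sketch below only assembles the command line and does not run it. The target address and subsystem NQN are made-up placeholders, and 4420 is the port conventionally used for NVMe-oF I/O connections; your target may listen elsewhere.

```python
import shlex


def nvme_tcp_connect_cmd(traddr, nqn, trsvcid=4420):
    """Build an `nvme connect` argument list for an NVMe/TCP target."""
    return [
        "nvme", "connect",
        "--transport", "tcp",        # select the TCP transport
        "--traddr", traddr,          # target IP address
        "--trsvcid", str(trsvcid),   # target TCP port
        "--nqn", nqn,                # subsystem NVMe Qualified Name
    ]


if __name__ == "__main__":
    # Placeholder address (documentation range) and placeholder NQN.
    cmd = nvme_tcp_connect_cmd("192.0.2.10", "nqn.2024-01.com.example:vol1")
    print(shlex.join(cmd))
```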

When you compare options, focus on more than peak speed. Look at tail latency, cross-node fairness, and how the system behaves during rebuilds. Those factors decide whether the platform feels “fast” in real life.

Benchmarking and proof points that matter for SAN alternatives

Treat testing like a capacity and risk exercise, not a lab contest. Start with a clean baseline, then add concurrency until you hit your p99 target. Run the same profile across nodes, and record variance between runs.
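One way to frame that ramp is as a search for the highest concurrency level that still meets the p99 target, plus a run-to-run spread check. The sketch below works on numbers you have already measured; the sample figures and the 1.0 ms target are invented for illustration.

```python
from statistics import mean, pstdev


def max_concurrency_within_target(p99_by_concurrency, target_ms):
    """Return the highest tested concurrency whose p99 meets the target.

    Stops at the first level that misses the target, so a later level
    that happens to pass does not mask an earlier failure.
    """
    best = None
    for level in sorted(p99_by_concurrency):
        if p99_by_concurrency[level] > target_ms:
            break
        best = level
    return best


def coefficient_of_variation(values):
    """Run-to-run spread as a fraction of the mean (0.0 means identical)."""
    avg = mean(values)
    return pstdev(values) / avg if avg else 0.0


if __name__ == "__main__":
    # Illustrative p99 latencies in ms, keyed by concurrency level.
    measured = {1: 0.21, 4: 0.35, 16: 0.62, 64: 1.40}
    print(max_concurrency_within_target(measured, target_ms=1.0))
    # Illustrative p99 values from three repeated runs at one level.
    print(round(coefficient_of_variation([0.60, 0.62, 0.64]), 3))
```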

Use one stable set of measures:

- IOPS, throughput, and average latency

- p95 and p99 latency

- CPU use per GB/s

- Rebuild impact while serving I/O
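The measures above can be computed from raw samples roughly as follows. This is a sketch for your own post-processing, using the nearest-rank percentile method; the function and field names are hypothetical, and benchmark tools may use a slightly different percentile definition.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_us, cpu_cores_busy, throughput_gb_per_s):
    """Collect average, tail latency, and CPU cost per GB/s into one record."""
    return {
        "avg_us": sum(latencies_us) / len(latencies_us),
        "p95_us": percentile(latencies_us, 95),
        "p99_us": percentile(latencies_us, 99),
        "cores_per_gb_per_s": cpu_cores_busy / throughput_gb_per_s,
    }


def rebuild_impact(baseline_p99, rebuild_p99):
    """Ratio of p99 during rebuild to steady-state p99 (1.0 means no impact)."""
    return rebuild_p99 / baseline_p99
```

Recording the same record for every run makes it straightforward to compare a steady-state run against one taken while a rebuild is in progress.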

In Kubernetes, run tests through real PVCs so the data path matches production. For bare metal, run the same profiles with the same host pinning approach. Keep job files under version control and rerun them after every major change.
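A job file kept in version control could be generated along these lines. This sketch renders an INI-style fio job as text. The option names are standard fio options, while the job name, target path, and chosen values are assumptions to adapt to your own workload.

```python
def fio_job(name, filename, rw="randread", bs="4k", iodepth=32,
            numjobs=4, runtime_s=120, size="10G"):
    """Render a fio job file as text."""
    lines = [
        "[global]",
        "ioengine=libaio",    # Linux native asynchronous I/O
        "direct=1",           # bypass the page cache
        "time_based",         # run for the full runtime
        f"runtime={runtime_s}",
        "group_reporting",    # aggregate results across jobs
        "",
        f"[{name}]",
        f"filename={filename}",
        f"size={size}",
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical path: a file on the volume mounted from the PVC under test.
    print(fio_job("randread-4k", "/mnt/testvol/fio.dat"), end="")
```

Generating the file from parameters keeps Kubernetes and bare-metal runs on the same profile, with only the target path changing.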

Practical steps to improve results without hiding real problems

Start by keeping storage traffic predictable on the network. Avoid oversubscription where possible, and separate noisy east-west app flows from storage flows when you can. Next, align compute and NIC placement. NUMA mismatch can hurt latency even on great hardware.
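To check NIC and compute alignment on Linux, the kernel exposes a device's NUMA node through sysfs. The sketch below reads that value for a network interface; the interface name and the worker node number are placeholders, and the `sysfs_root` parameter exists only to make the function easy to test.

```python
from pathlib import Path


def nic_numa_node(iface, sysfs_root="/sys"):
    """Return the NUMA node of a NIC from sysfs, or None if unknown.

    Linux reports -1 when the device has no NUMA affinity.
    """
    path = Path(sysfs_root) / "class" / "net" / iface / "device" / "numa_node"
    try:
        node = int(path.read_text().strip())
    except (OSError, ValueError):
        return None
    return node if node >= 0 else None


def is_numa_aligned(nic_node, worker_node):
    """True when the NIC node is known and matches the worker's node."""
    return nic_node is not None and nic_node == worker_node


if __name__ == "__main__":
    # "eth0" and node 0 are placeholders for your storage NIC and pinned CPUs.
    node = nic_numa_node("eth0")
    print(node, is_numa_aligned(node, 0))
```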

Then tune concurrency with intent. Low queue depth underuses the fabric. Very high queue depth can inflate tail latency and produce numbers that no real application would see. Match your settings to the workload class you plan to run.
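Little's Law gives a quick sanity check here: the average number of I/Os in flight equals IOPS multiplied by average latency. The sketch below applies it in both directions; the IOPS, latency, and queue depth figures are illustrative, not measurements.

```python
def outstanding_ios(iops, avg_latency_s):
    """Little's Law: average I/Os in flight = arrival rate x time in system."""
    return iops * avg_latency_s


def iops_ceiling(total_queue_depth, avg_latency_s):
    """Upper bound on IOPS for a fixed number of in-flight I/Os."""
    return total_queue_depth / avg_latency_s


if __name__ == "__main__":
    # Illustrative: 200k IOPS at 250 microseconds average latency.
    print(outstanding_ios(200_000, 250e-6))
    # Illustrative: 8 jobs at queue depth 4 with the same latency.
    print(iops_ceiling(8 * 4, 250e-6))
```

If the configured queue depth is far above what the target IOPS and latency imply, the extra requests mostly wait in queues and show up as tail latency.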

Finally, address multi-tenant pressure. QoS and isolation stop one team’s batch job from causing another team’s database to miss latency targets. That single feature can change how confident you feel about consolidation.
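To make the idea of a per-tenant limit concrete, here is a generic token-bucket sketch. It illustrates the general technique only and is not a description of how simplyblock or any other product implements QoS; the class name, rates, and burst sizes are hypothetical.

```python
class TokenBucket:
    """Per-tenant IOPS limiter. Time is passed in so behavior is deterministic."""

    def __init__(self, rate_iops, burst):
        self.rate = float(rate_iops)    # tokens added per second
        self.capacity = float(burst)    # maximum stored tokens
        self.tokens = float(burst)      # start with a full bucket
        self.last = 0.0                 # timestamp of the last refill

    def allow(self, now, cost=1.0):
        """Refill by elapsed time, then admit the I/O if enough tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    # One bucket per tenant; the batch tenant gets a lower hypothetical limit.
    limits = {"database": TokenBucket(50_000, 1_000), "batch": TokenBucket(5_000, 100)}
    print(limits["batch"].allow(now=0.0))
```

With one bucket per tenant, a batch job that exhausts its own tokens is throttled without consuming the budget reserved for the database tenant.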

Side-by-side comparison for SAN replacement choices

The table below summarizes common trade-offs when teams compare SAN paths and Ethernet-based NVMe-oF options.

| Option | Network Needs | Ops Model | Typical Strength | Typical Risk |
|---|---|---|---|---|
| Fibre Channel SAN | Dedicated FC fabric | Zoning, LUN workflows | Predictable legacy ops | High cost, slow change |
| iSCSI SAN | Standard Ethernet | Familiar IP storage | Broad support | Higher overhead, weaker tails |
| NVMe/TCP with Software-defined Block Storage | Standard Ethernet | Automation-friendly | Strong scale-out fit | Needs CPU-aware tuning |
| NVMe/RDMA NVMe-oF | RDMA-ready fabric | More fabric rules | Lowest latency ceiling | More moving parts |

How simplyblock™ stabilizes latency at scale

Simplyblock™ targets SAN alternative goals with Software-defined Block Storage designed for Kubernetes Storage and bare metal. It supports NVMe/TCP, and it uses an SPDK-based, user-space design to improve CPU efficiency and reduce data path overhead.

In practice, teams use simplyblock to standardize shared block delivery across clusters, apply multi-tenant controls, and keep performance consistent as they add nodes. That combination supports growth without forcing the SAN-style operational load that slows platform teams down.

NVMe over TCP SAN Alternative roadmap trends

NVMe over TCP SAN alternatives continue to move toward policy-driven control. Buyers want per-volume QoS, clean observability, and safe automation for upgrades. Offload hardware such as DPUs and IPUs can also shift protocol work away from host CPUs, which can reduce jitter at scale.

At the same time, more teams benchmark with p99-first targets and multi-node runs that reflect real app fan-out. This trend pushes vendors to prove not only peak results, but also stable behavior during failure, rebuild, and noisy-neighbor events.


Questions and Answers

NVMe over TCP offers SAN-like performance over standard Ethernet, eliminating the need for proprietary Fibre Channel networks. It enables low-latency, high-throughput access to storage, making it an ideal replacement for traditional SAN systems.

NVMe over TCP provides similar or better performance at a fraction of the cost by running over IP networks. It supports scalable, distributed architectures, unlike Fibre Channel SANs that require dedicated hardware and are less flexible in cloud-native environments.

Features like multi-queue support, microsecond latency, and compatibility with CSI make NVMe over TCP ideal for modern workloads. Simplyblock enhances this by providing enterprise-grade capabilities such as encryption, snapshots, and replication.

With support for block-based protocols, high availability, and advanced data services, NVMe/TCP platforms like Simplyblock can replace traditional SANs without compromising on performance or durability, especially for stateful applications.

Simplyblock offers software-defined, scale-out block storage using NVMe over TCP. It integrates with Kubernetes via CSI and supports features like multi-tenant isolation, volume encryption, and high performance—positioning it as a drop-in SAN alternative for modern infrastructure.