NVMe SSD Endurance

NVMe SSD endurance tells you how much data you can write to a drive before NAND wear raises the risk of errors and early failure. Write-heavy workloads such as databases, logs, CI pipelines, and cache rebuilds consume endurance quickly. Many teams size by capacity first, then get surprised when a small set of volumes burns through the write budget.

Endurance planning works best when you treat it like an SLO input. Your app writes “logical” data, while the SSD often writes more “physical” data during garbage collection and wear leveling. That extra work increases wear and can shorten service life if you ignore it.
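The gap between logical and physical writes is usually expressed as a write amplification factor (WAF): physical NAND bytes written divided by host bytes written. The sketch below is a minimal illustration, assuming you can read both counters for the same time window. Physical write counters are often vendor-specific, and the function names here are hypothetical.

```python
TB = 10**12  # decimal terabyte, the unit drive vendors use


def write_amplification(host_bytes: int, nand_bytes: int) -> float:
    """Return WAF = physical (NAND) bytes written / logical (host) bytes written."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


def nand_bytes_per_day(host_bytes_per_day: float, waf: float) -> float:
    """Estimate daily physical writes from daily host writes and a measured WAF."""
    return host_bytes_per_day * waf


# Illustrative numbers only: 10 TB written by the host, 25 TB written to NAND.
waf = write_amplification(host_bytes=10 * TB, nand_bytes=25 * TB)
print(f"WAF: {waf:.2f}")  # WAF: 2.50
print(f"NAND TB/day at 2 TB/day host writes: {nand_bytes_per_day(2 * TB, waf) / TB:.1f}")  # 5.0
```

A WAF near 1 means the drive writes roughly what the app asks for. Higher values mean the same application load wears the NAND faster.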

In shared platforms, endurance also becomes a fairness problem. One tenant can drive most of the write load, and everyone shares the operational risk unless you add controls.

NVMe SSD Endurance Metrics – TBW, DWPD, and P/E Cycles

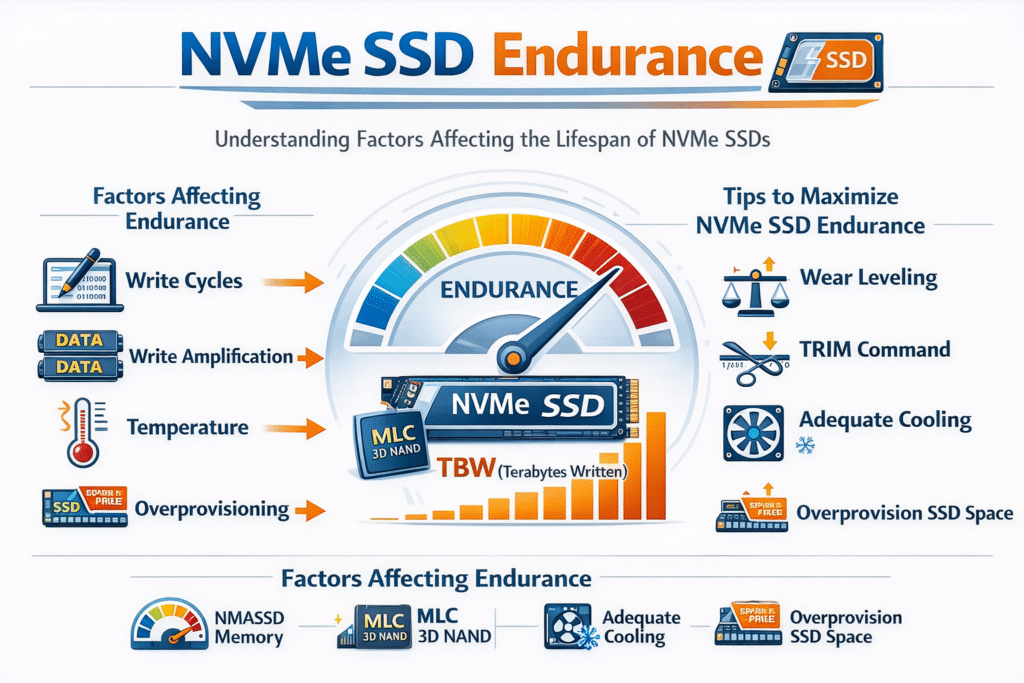

Vendors describe endurance with a few core specs. TBW (terabytes written) states the total amount of data the drive is rated to absorb within the warranty period. DWPD (drive writes per day) states how many times you can write the drive's full capacity each day across the warranty window. P/E cycles (program/erase cycles) indicate how many times a NAND cell can be programmed and erased, which depends heavily on the NAND type.

Use TBW and DWPD to compare drives, but validate them against your real write pattern. Small random writes, frequent snapshots, and high metadata churn can wear NAND faster than large sequential writes. Workload behavior matters more than any single spec.
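TBW and DWPD describe the same write budget in different units, so you can convert between them when capacity and warranty length are known. The sketch below uses the common relation TBW = DWPD × capacity (TB) × 365 × warranty years. The drive figures in the example are illustrative, not taken from any datasheet.

```python
DAYS_PER_YEAR = 365


def dwpd_to_tbw(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Convert a DWPD rating into total terabytes written over the warranty."""
    return dwpd * capacity_tb * DAYS_PER_YEAR * warranty_years


def tbw_to_dwpd(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Convert a TBW rating into drive writes per day over the warranty."""
    return tbw / (capacity_tb * DAYS_PER_YEAR * warranty_years)


def years_until_tbw(tbw: float, host_tb_per_day: float) -> float:
    """Years until the rated TBW is reached at a steady daily write volume."""
    if host_tb_per_day <= 0:
        raise ValueError("host_tb_per_day must be positive")
    return tbw / (host_tb_per_day * DAYS_PER_YEAR)


# Hypothetical drive: 1.92 TB capacity, 1 DWPD, 5-year warranty.
tbw = dwpd_to_tbw(dwpd=1.0, capacity_tb=1.92, warranty_years=5)
print(f"Rated budget: {tbw:.0f} TBW")
print(f"Years at 4 TB/day: {years_until_tbw(tbw, 4.0):.1f}")
```

The projection assumes a steady write rate and ignores workload-dependent write amplification, so treat it as a first estimate and then check it against measured wear.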

For Software-defined Block Storage, add a second lens: system write amplification. Rebuilds, resync, and background tasks can add write load even when the application stays quiet.

NVMe SSD Endurance in Kubernetes Storage Planning

Kubernetes Storage changes endurance math because it adds automation and churn. Dynamic provisioning creates and deletes volumes often. StatefulSets drive steady writes. Cluster events like rollouts, node drains, and rescheduling can stack write bursts on the same media.

Good planning starts with hotspot mapping. Focus on write-ahead logs, compaction paths, index updates, and snapshot delta activity. Those paths drive wear, even if cluster-wide write volume looks normal.

Storage policies help when you align them with write intensity. Teams often split storage classes by workload type, isolate heavy writers, and reserve faster media for hot write paths. That approach supports SAN-alternative designs where you scale compute and storage without tying endurance to a single node.

NVMe SSD Endurance and NVMe/TCP Data Paths

NVMe/TCP carries NVMe commands over standard Ethernet, so teams can share NVMe performance across a fleet without specialized fabrics. Endurance still depends on writes, but the data path influences how smoothly the platform handles load.

A copy-heavy or interrupt-heavy stack can create backpressure during spikes. Backpressure can trigger retries, flush storms, and bursty write patterns. Those patterns can raise write amplification at the SSD layer and make wear harder to predict.

SPDK-style user-space processing reduces overhead and keeps I/O flow steady at higher concurrency. A steadier flow helps you control tail latency, and it often keeps write behavior closer to what the app intends.

NVMe/TCP also fits multiple deployment models. You can run hyper-converged, disaggregated, or hybrid layouts for bare-metal clusters, depending on where you want to place hot writers and how you want to scale.

Measuring and Benchmarking Drive Wear in Production

Treat endurance measurement as a routine, not a one-time sizing task. Track three layers: application writes, storage-layer writes, and device wear signals.

At the drive layer, use SMART wear indicators and total bytes written. At the platform layer, watch per-volume write IOPS, bandwidth, queue depth, and tail latency. Add workload context such as compaction windows, checkpoint cadence, and snapshot frequency, so you can explain the spikes.
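At the drive layer, two fields from the NVMe SMART / Health Information log cover most of the tracking: Data Units Written (each unit is 1,000 blocks of 512 bytes) and Percentage Used (the vendor's estimate of consumed life). The sketch below is a rough illustration, assuming you already collect those two values periodically, for example with smartctl or nvme-cli. The function names and sample values are hypothetical, and the linear extrapolation is a simplification.

```python
from typing import Optional

BYTES_PER_DATA_UNIT = 512 * 1000  # NVMe SMART log: one data unit = 1,000 x 512 bytes


def data_units_to_tb(data_units_written: int) -> float:
    """Convert the NVMe 'Data Units Written' counter to decimal terabytes."""
    return data_units_written * BYTES_PER_DATA_UNIT / 1e12


def projected_days_to_wearout(
    pct_used_then: float, pct_used_now: float, days_between: float
) -> Optional[float]:
    """Linearly extrapolate 'Percentage Used' to 100%.

    Returns None when no wear was observed in the window, since no rate
    can be derived from it.
    """
    if days_between <= 0:
        raise ValueError("days_between must be positive")
    delta = pct_used_now - pct_used_then
    if delta <= 0:
        return None
    rate_per_day = delta / days_between
    return max(0.0, (100.0 - pct_used_now) / rate_per_day)


# Illustrative samples taken 30 days apart.
print(f"Host writes so far: {data_units_to_tb(2_000_000_000):.0f} TB")
days = projected_days_to_wearout(pct_used_then=10, pct_used_now=12, days_between=30)
print(f"Projected days to 100% used: {days:.0f}")
```

Percentage Used has coarse resolution, so longer sampling windows give more stable projections than day-to-day deltas.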

Benchmarking works when it mirrors production. Use fio profiles that match your block size, read/write mix, and concurrency. Include “event” tests too, such as rolling updates, rebalancing, rebuilds, and backup windows, because those moments often decide real wear outcomes.

Approaches That Extend Drive Life Without Slowing Apps

Most endurance problems come from avoidable write amplification and uncontrolled bursts. Start with a small set of changes, measure impact, and keep what helps.

- Reduce small random writes by tuning flush and compaction settings, where the app allows it.

- Limit noisy neighbors with per-volume or per-tenant IOPS and bandwidth caps.

- Use snapshot and clone methods that avoid full-copy rewrites, especially in CI and test flows.

- Tier cold blocks off NVMe, so you save write budget for hot data paths.
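The per-volume and per-tenant caps in the list above are commonly built on a token bucket: each tenant earns write budget at a fixed rate and can burst up to a fixed ceiling. The sketch below is a minimal, single-threaded illustration of that idea. It is not how any particular storage platform implements QoS, and the class name, tenant name, and limits are hypothetical.

```python
class WriteBudget:
    """Token bucket that caps write bandwidth for one tenant or volume."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float, now: float = 0.0):
        self.rate = rate_bytes_per_s   # sustained refill rate
        self.capacity = burst_bytes    # maximum burst size
        self.tokens = burst_bytes      # start with a full bucket
        self.last = now                # timestamp of the last refill

    def allow(self, nbytes: int, now: float) -> bool:
        """Refill by elapsed time, then admit the write if enough budget remains."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


# Hypothetical limit: 100 MB/s sustained, 200 MB burst for one tenant.
MB = 10**6
limits = {"tenant-a": WriteBudget(rate_bytes_per_s=100 * MB, burst_bytes=200 * MB)}

print(limits["tenant-a"].allow(200 * MB, now=0.0))  # burst fits: True
print(limits["tenant-a"].allow(1 * MB, now=0.0))    # bucket empty: False
print(limits["tenant-a"].allow(100 * MB, now=1.0))  # refilled after 1 s: True
```

Passing `now` explicitly keeps the example deterministic. A real limiter would read a monotonic clock and queue or delay rejected writes rather than drop them.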

Choosing the Right NVMe SSD Endurance Strategy

The table below compares several common approaches by endurance impact, predictability under load, and operational effort. Use it to pick a fit for shared platforms, regulated environments, and fast-moving Kubernetes teams.

| Option | Endurance Impact | Predictability Under Load | Operational Effort | Notes |
|---|---|---|---|---|
| Buy higher-endurance SSDs (higher DWPD) | High | High | Low | Costs more, simplifies ops |
| Add overprovisioning and keep free space | Medium | Medium | Medium | Helps garbage collection, needs discipline |
| Enforce QoS and per-tenant limits | Medium | High | Medium | Prevents bursts and protects neighbors |
| Tier cold data off NVMe | Medium | Medium | Medium | Cuts wasted writes on premium media |
| Use heavy protection everywhere | Low to Medium | Medium | High | Adds write overhead, improves durability |

Keeping Endurance Predictable with Simplyblock™

Simplyblock™ provides Software-defined Block Storage for Kubernetes Storage where teams need steady latency and predictable performance. The platform supports NVMe/TCP and NVMe/RoCEv2, so teams can start on Ethernet and add RDMA where it fits.

Simplyblock uses an SPDK-based, user-space design to reduce CPU overhead in the I/O path. Lower overhead helps the system keep a smoother write rate under concurrency, which supports both performance stability and endurance planning.

Multi-tenancy and QoS give teams enforceable limits. Those limits reduce noisy-neighbor write bursts and keep critical stateful services from absorbing someone else’s write pressure.

What Changes Next – NAND, Telemetry, and Offload

Higher-density NAND, including QLC, pushes teams to adopt tighter write-aware policies and better placement. Richer telemetry will also improve forecasting, which helps ops teams schedule replacements before risk rises. DPUs and IPUs will take on more I/O work over time, which can reduce host CPU variance and smooth NVMe/TCP pipelines.

As disaggregated designs grow, endurance management will shift toward policy and automation. That shift helps teams hold steady results across large fleets instead of tuning drive-by-drive.

Related Terms

Teams often review these glossary pages alongside NVMe SSD Endurance when they set write-aware policies for Kubernetes Storage and Software-defined Block Storage.

Volume Snapshotting in Kubernetes

Snapshot vs Clone in Storage

Storage Tiering

Thin Provisioning

Questions and Answers

Endurance defines how much data can be written to an SSD over its lifetime. In enterprise environments like Database-as-a-Service, poor endurance can lead to premature device failure, performance drops, or increased replacement costs, especially under write-heavy workloads.

Endurance is typically measured in DWPD (Drive Writes Per Day) or TBW (Terabytes Written). Tools like smartctl or vendor-specific software allow ongoing monitoring. Platforms like Simplyblock also provide integrated health checks for storage durability and lifecycle management.

QLC NAND offers higher density and lower cost than TLC or MLC, but it has lower endurance. It is best suited to read-heavy workloads or secondary storage tiers. For performance-critical apps, pairing QLC with automated data placement helps preserve longevity and efficiency.

Software-defined storage can help extend SSD life. Advanced SDS solutions use wear-leveling algorithms, caching strategies, and tiering to reduce write amplification. These techniques help balance workloads across devices and preserve SSD health over time, which is especially useful in write-heavy environments.

Limit unnecessary writes, enable filesystem trimming, and avoid logging to high-IO partitions. With CSI-managed storage, you can also use policies to isolate ephemeral from persistent data, preserving the SSD’s lifespan while maintaining performance.