OpenShift CSI Driver Operator


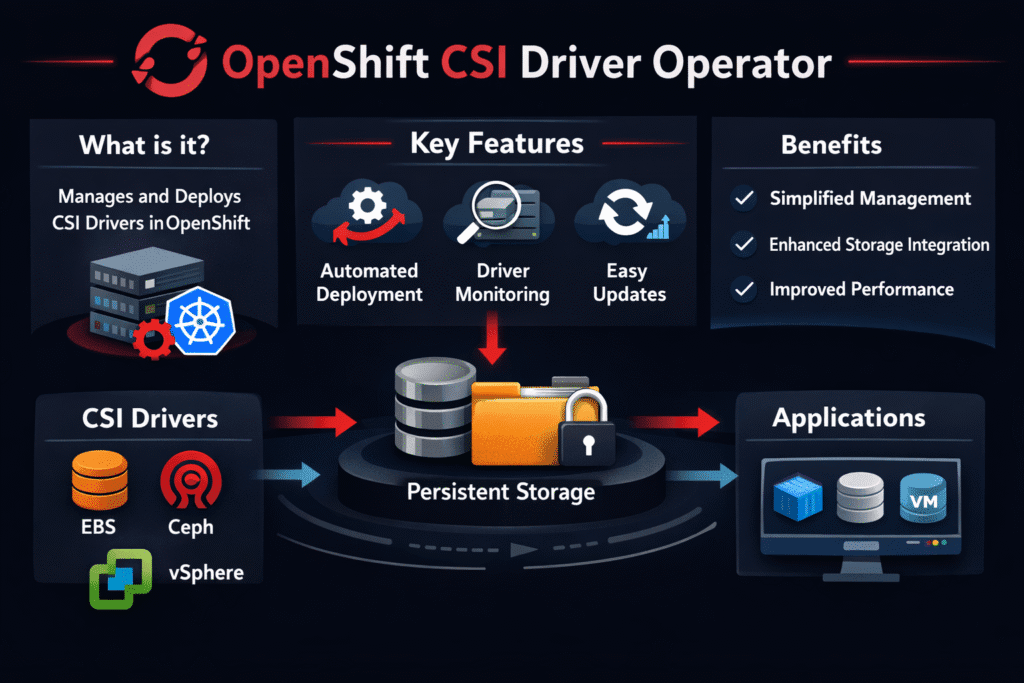

The OpenShift CSI Driver Operator manages the lifecycle of CSI storage components on OpenShift. It installs, updates, and keeps CSI pods in the expected state, so platform teams avoid the configuration drift that comes with manual changes. That control matters most for Kubernetes Storage, because the CSI path sits on every attach, mount, read, and write for stateful apps.

In day-two operations, the operator becomes the “traffic cop” between cluster upgrades and storage stability. It helps teams roll forward in smaller steps, reduces broken mounts after node drains, and keeps StorageClass behavior consistent across worker pools.

Why operators change the storage risk profile

Operators turn storage into a managed service inside the cluster. That sounds simple, but it changes how incidents show up. A manual CSI install fails in obvious ways. An operator install can fail in quieter ways, such as mismatched sidecar versions, wrong SCC settings, or pods that restart in a loop during upgrades.
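One way to make these quieter failures visible is to scan the CSI pods for containers that are stuck or restarting. The sketch below assumes you have already exported pod status as JSON (for example with `oc get pods -n <namespace> -o json`) and parsed it into a dictionary. The pod names, the container names, and the restart threshold are illustrative assumptions, not values taken from any specific driver.

```python
def find_unhealthy_containers(pod_list, restart_threshold=3):
    """Return (pod, container, reason) tuples for containers that look stuck.

    pod_list is the parsed output of a pod listing in JSON form.
    restart_threshold is an assumed cut-off; tune it for your cluster.
    """
    findings = []
    for pod in pod_list.get("items", []):
        pod_name = pod.get("metadata", {}).get("name", "<unknown>")
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting") or {}
            reason = waiting.get("reason", "")
            restarts = status.get("restartCount", 0)
            if reason == "CrashLoopBackOff" or restarts >= restart_threshold:
                findings.append(
                    (pod_name, status.get("name", "<unknown>"),
                     reason or f"{restarts} restarts")
                )
    return findings


# Hypothetical sample data shaped like a pod listing.
SAMPLE = {
    "items": [
        {
            "metadata": {"name": "example-csi-controller-0"},
            "status": {"containerStatuses": [
                {"name": "csi-driver", "restartCount": 0,
                 "state": {"running": {}}},
                {"name": "csi-provisioner", "restartCount": 7,
                 "state": {"waiting": {"reason": "CrashLoopBackOff"}}},
            ]},
        },
        {
            "metadata": {"name": "example-csi-node-abcde"},
            "status": {"containerStatuses": [
                {"name": "csi-driver", "restartCount": 4,
                 "state": {"running": {}}},
            ]},
        },
    ]
}

if __name__ == "__main__":
    for pod, container, reason in find_unhealthy_containers(SAMPLE):
        print(f"{pod}/{container}: {reason}")
```

Running a check like this before and after each upgrade gives a simple signal for restart loops. It does not detect sidecar version mismatches or SCC problems on its own; those still need a look at image tags and pod events.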

The best teams treat the operator as part of the storage SLO. They watch its events, set clear change windows, and test node drains as part of every release.


OpenShift CSI Driver Operator in Kubernetes Storage design

OpenShift CSI Driver Operator affects how you plan StorageClasses, node pools, and failure domains. If your workloads span zones, you need topology-aware behavior that keeps volumes close to the pods that use them. If your cluster runs mixed tenants, you need consistent limits and isolation so one team does not crowd out another.
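As a rough illustration of topology-aware intent, the sketch below builds a StorageClass manifest as a Python dictionary and prints it as JSON, which `oc apply -f -` accepts alongside YAML. The provisioner name `csi.example.com`, the class name, and the `tier` parameter are hypothetical placeholders. The zone label key is the well-known Kubernetes one, but a given driver may report its own topology key, so treat that as an assumption to verify.

```python
import json


def storage_class(name, provisioner, zones=None, parameters=None):
    """Build a StorageClass manifest that delays binding until a pod is scheduled."""
    manifest = {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # WaitForFirstConsumer lets the scheduler pick the node first,
        # so the volume is provisioned in a topology the pod can reach.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",
        "parameters": dict(parameters or {}),
    }
    if zones:
        # Restrict provisioning to the listed zones.
        manifest["allowedTopologies"] = [{
            "matchLabelExpressions": [{
                "key": "topology.kubernetes.io/zone",
                "values": list(zones),
            }]
        }]
    return manifest


if __name__ == "__main__":
    sc = storage_class(
        name="fast-tier",                 # hypothetical class name
        provisioner="csi.example.com",    # hypothetical CSI driver name
        zones=["zone-a", "zone-b"],
        parameters={"tier": "fast"},      # hypothetical driver parameter
    )
    print(json.dumps(sc, indent=2))
```

Generating the manifest from one function is a simple way to keep StorageClass behavior identical across clusters, since only the arguments change per environment.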

Software-defined Block Storage helps here because it can enforce performance tiers and QoS without forcing a single hardware stack. That approach fits platform-style OpenShift operations. You define intent once, then reuse it across clusters and environments.

What to watch during upgrades and node drains

Upgrades and drains stress the CSI path more than steady-state traffic does. During a drain, the node plugin has to unmount cleanly, detach the volume, and allow a new node to attach it again. If any step takes too long, the app sees a stall. If the operator rolls a controller update at the same time, you can also get timing issues that look like random storage flakiness.

Good observability keeps these events readable. Track attach time, mount time, and the rate of failed publish or unpublish operations. Pair those signals with app p95 and p99 latency so you can see the impact, not just logs.
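The signals named above can be reduced to a few numbers per rollout. The following is a minimal sketch that assumes you already collect attach durations in seconds and counts of publish operations from your own metrics source. The sample values are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(attach_seconds, publish_total, publish_failed):
    """Summarize attach latency and the publish failure rate for one window."""
    failure_rate = publish_failed / publish_total if publish_total else 0.0
    return {
        "attach_p50_s": percentile(attach_seconds, 50),
        "attach_p95_s": percentile(attach_seconds, 95),
        "attach_p99_s": percentile(attach_seconds, 99),
        "publish_failure_rate": failure_rate,
    }


if __name__ == "__main__":
    # Illustrative attach times from a drain test; one slow outlier.
    attach = [1.2, 1.4, 1.1, 1.3, 9.8, 1.2, 1.5, 1.3, 1.4, 1.2]
    print(summarize(attach, publish_total=200, publish_failed=3))
```

The median hides the single slow attach in this sample, while the p95 and p99 values expose it. That is the reason to pair tail percentiles with application latency rather than relying on averages.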

OpenShift CSI Driver Operator with NVMe/TCP and Software-defined Block Storage

NVMe/TCP gives OpenShift clusters a low-latency block path over standard Ethernet. That matters when you run disaggregated storage nodes, or when you want a SAN alternative without the usual lock-in. When the CSI operator manages a driver that talks to NVMe/TCP storage, you get two wins: a stable lifecycle for the driver and a fast transport for the data path.

An SPDK-based design improves the result further. SPDK keeps I/O in user space and cuts extra kernel work. That can free CPU on busy nodes, especially when stateful apps compete for cores during peak load. In practice, this combination helps Kubernetes Storage stay predictable while the platform keeps changing.

Measuring performance without chasing the wrong problem

Start with placement and lifecycle, then measure speed. If pods wait on volume binding, you have a control-plane issue. If volumes attach quickly but latency climbs later, you likely have contention, weak QoS, or a network with too little headroom.
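The triage order described here can be written down as a small decision function. This is only a sketch: the budgets and the growth factor are hypothetical thresholds chosen for illustration, and real values depend on your workloads and SLOs.

```python
def triage(binding_wait_s, attach_s, idle_p99_ms, loaded_p99_ms,
           binding_budget_s=30.0, attach_budget_s=15.0, growth_factor=2.0):
    """Classify a storage complaint by checking lifecycle first, then latency.

    All thresholds are assumed defaults, not recommendations.
    """
    # Pods stuck waiting for a bound volume point at the control plane.
    if binding_wait_s > binding_budget_s:
        return "control-plane: pods wait on volume binding"
    # Fast attach but growing tail latency points at the data path.
    if attach_s <= attach_budget_s and loaded_p99_ms > growth_factor * idle_p99_ms:
        return "data-path: check contention, QoS, and network headroom"
    return "no clear signal: collect more data"


if __name__ == "__main__":
    print(triage(binding_wait_s=90, attach_s=5, idle_p99_ms=1.0, loaded_p99_ms=1.2))
    print(triage(binding_wait_s=4, attach_s=6, idle_p99_ms=0.8, loaded_p99_ms=4.0))
```

Checking binding before latency keeps teams from tuning the data path when the real delay sits in provisioning or scheduling.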

For benchmarks, match the workload. Databases often behave like mixed random I/O with bursts. Queue systems can spike writes. Analytics can push long reads. Use a tool like fio with the right block sizes and queue depth, and report latency percentiles, not just average throughput.
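To keep benchmark runs comparable, it can help to generate the fio command line from a named workload profile. The sketch below only builds the argument list and does not run fio. The profile values (block sizes, queue depths, read mix) and the target file path are illustrative assumptions, so replace them with numbers that reflect your own applications.

```python
import shlex

# Hypothetical workload profiles; adjust to match real application behavior.
PROFILES = {
    "database": {"rw": "randrw", "rwmixread": 70, "bs": "8k", "iodepth": 32},
    "queue": {"rw": "write", "bs": "64k", "iodepth": 16},
    "analytics": {"rw": "read", "bs": "1m", "iodepth": 8},
}


def fio_args(profile, filename, runtime_s=120, size="10g"):
    """Build a fio argument list that reports latency percentiles as JSON."""
    p = PROFILES[profile]
    args = [
        "fio",
        f"--name={profile}",
        f"--filename={filename}",
        f"--size={size}",
        "--ioengine=libaio",
        "--direct=1",            # bypass the page cache
        "--time_based",
        f"--runtime={runtime_s}",
        f"--rw={p['rw']}",
        f"--bs={p['bs']}",
        f"--iodepth={p['iodepth']}",
        "--percentile_list=50:95:99:99.9",
        "--output-format=json",
    ]
    if "rwmixread" in p:
        args.append(f"--rwmixread={p['rwmixread']}")
    return args


if __name__ == "__main__":
    # /mnt/test/fio.dat is a placeholder path on a mounted test volume.
    print(shlex.join(fio_args("database", "/mnt/test/fio.dat")))
```

Keeping profiles in one table makes it easier to re-run the same test after an upgrade and compare percentiles from the JSON output directly.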

One checklist that improves steady storage behavior

- Separate StorageClasses by tier, and keep latency-critical apps off general pools.

- Set clear QoS limits per tenant so noisy neighbors cannot dominate shared bandwidth.

- Test node drains and rollouts as part of every release, not only during incidents.

- Validate NVMe/TCP network headroom under peak traffic, then re-test after changes.

- Keep topology rules consistent so volumes stay close to the pods that use them.

Side-by-side comparison for CSI lifecycle control

The table below compares common ways teams manage CSI on OpenShift, with a focus on upgrade safety and operational effort.

| Approach | Strength | Trade-off | Best fit |
|---|---|---|---|
| Operator-managed CSI (OpenShift model) | Consistent lifecycle, safer upgrades | Needs good observability and policy | Regulated platforms, many clusters |
| Manual YAML or Helm installs | Fast for labs, easy to tweak | Drift and version mismatch risk | Short-lived dev clusters |
| Vendor operator in a namespace | Tight vendor support path | Extra RBAC, SCC, and tuning work | Single-vendor standard stacks |
| SDS with strong CSI + QoS controls | Stable tiers, clear isolation | Requires tier design up front | Multi-tenant Kubernetes Storage |

Simplyblock™ for operator-driven CSI at scale

Simplyblock supports Kubernetes Storage with NVMe/TCP and Software-defined Block Storage, which pairs well with OpenShift’s operator-based lifecycle model. You can run hyper-converged, disaggregated, or mixed layouts and still keep StorageClass intent consistent across clusters.

Simplyblock uses an SPDK-based architecture to keep the data path efficient. That helps when clusters run dense workloads and need stable p99 latency during rollouts. Multi-tenancy and QoS controls also help reduce performance swings when multiple teams share the same storage plane.

Roadmap signals for OpenShift CSI driver lifecycle management

OpenShift keeps pushing toward safer upgrades and clearer policy. CSI operators will continue to tighten version control, reduce drift, and standardize how clusters report storage health. At the same time, more teams will adopt NVMe/TCP as a default transport for disaggregated designs, because it fits standard networks and scales cleanly.

Expect a stronger focus on topology behavior, attach-time visibility, and per-tenant performance controls. Those areas decide whether stateful apps feel “boring” or “fragile” on the platform.

Related Terms

Teams often pair these glossary pages with the OpenShift CSI Driver Operator when they review driver lifecycle, attach behavior, and storage isolation on OpenShift.

- CSIDriver Object Basics

- CSI Controller Plugin Fundamentals

- CSI Snapshot Controller Fundamentals

- QoS Policy in CSI

Questions and Answers

The OpenShift CSI Driver Operator automates the deployment and lifecycle management of CSI drivers within OpenShift clusters. It ensures proper configuration, RBAC setup, and upgrades. CSI-based storage backends like Simplyblock offer native CSI support that integrates seamlessly via the operator model.

By abstracting the complexity of CSI deployment, the operator enables storage vendors to plug in dynamic provisioning, volume expansion, and snapshot capabilities. This is essential for platforms like Simplyblock that deliver production-grade Kubernetes storage into OpenShift environments.

Operators in OpenShift follow a declarative model, which allows controlled updates and rollbacks. This keeps CSI components such as the controller and node plugin compatible with OpenShift’s storage architecture and in line with security and performance expectations.

The operator can configure topology-aware provisioning by passing zone labels and StorageClass parameters. Simplyblock uses this to offer high-performance, zone-aware block volumes across OpenShift clusters, which improves latency and resilience.

While not strictly required, using the operator is the recommended way to deploy and maintain CSI drivers in OpenShift. It ensures full compatibility and simplifies integration with features like encryption at rest and dynamic volume provisioning.