OpenShift Data Foundation (ODF)

Terms related to simplyblock

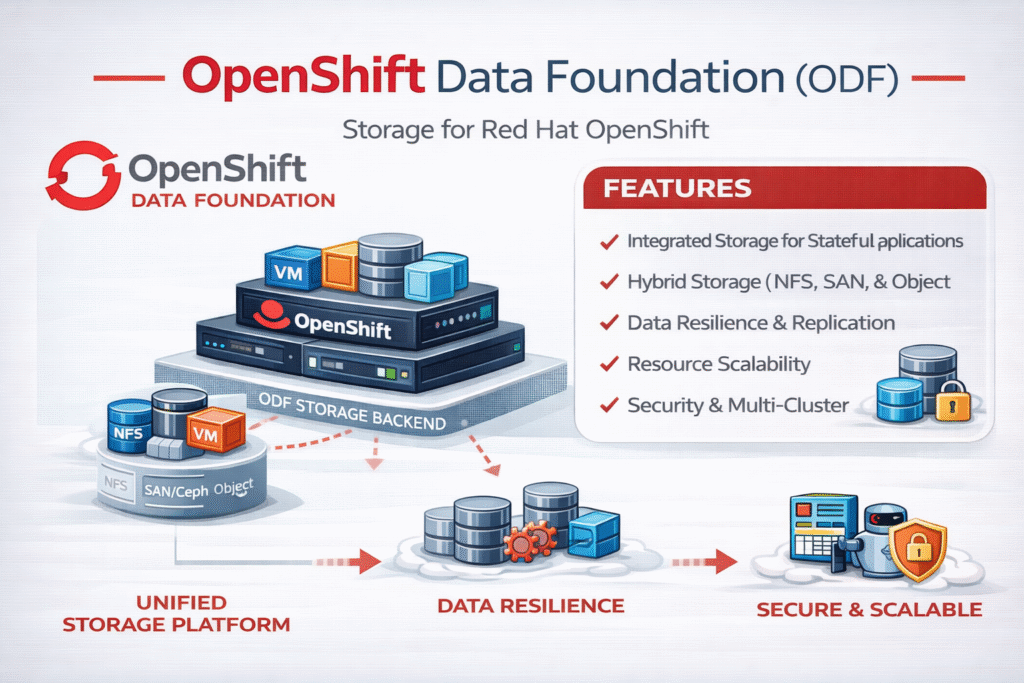

OpenShift Data Foundation (ODF) is Red Hat’s integrated storage layer for OpenShift. It delivers block, file, and object services through Kubernetes-native constructs like StorageClasses, PersistentVolumeClaims, and CSI drivers. ODF is built on Ceph and is deployed and managed by Operators (including Rook-Ceph and, for object services, the NooBaa-based Multicloud Object Gateway). This gives teams scale-out storage with replication, failure domains, and automated day-2 operations.

ODF standardizes how stateful workloads consume storage on OpenShift while staying aligned with the Operator lifecycle for upgrades, patching, and policy control. This makes ODF appealing as a SAN alternative for OpenShift, especially when an organization prefers software-defined storage on bare-metal or converged infrastructure over external arrays.

Tuning OpenShift Data Foundation (ODF) for Enterprise-Grade Operations

ODF performs best when teams design storage as a first-class part of the platform. In practice, most reliability and performance issues trace back to three causes: shared-cluster contention, congested networks, and failure domains that do not match the physical layout.
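A failure-domain mismatch is often easy to spot before it causes trouble. The minimal Python sketch below uses a hypothetical node-to-rack inventory (`NODE_DOMAIN` and both helper functions are illustrative names, not ODF APIs). It checks two things: whether a set of replicas lands in distinct failure domains, and how evenly storage nodes are spread. Ceph's CRUSH rules perform the actual placement, so this only inspects the outcome.

```python
from collections import Counter

# Hypothetical inventory: storage node -> failure-domain value. In a real
# cluster this mapping would come from a node topology label (for example
# topology.kubernetes.io/zone), not from a hard-coded dict.
NODE_DOMAIN = {
    "storage-0": "rack-a",
    "storage-1": "rack-b",
    "storage-2": "rack-c",
    "storage-3": "rack-a",
}


def replicas_span_domains(replica_nodes: list[str], node_domain: dict[str, str]) -> bool:
    """True if every replica sits in a different failure domain."""
    domains = [node_domain[node] for node in replica_nodes]
    return len(set(domains)) == len(domains)


def nodes_per_domain(node_domain: dict[str, str]) -> dict[str, int]:
    """Count storage nodes per failure domain to expose lopsided layouts."""
    return dict(Counter(node_domain.values()))


if __name__ == "__main__":
    print(replicas_span_domains(["storage-0", "storage-1", "storage-2"], NODE_DOMAIN))  # True
    print(replicas_span_domains(["storage-0", "storage-3", "storage-1"], NODE_DOMAIN))  # False
    print(nodes_per_domain(NODE_DOMAIN))  # {'rack-a': 2, 'rack-b': 1, 'rack-c': 1}
```

An uneven count like `rack-a` here is the kind of layout that turns a single rack failure into an outsized rebuild.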

Executives usually face one key choice: should ODF serve as the default shared storage substrate for most teams, or should the platform include a dedicated Software-defined Block Storage tier with strict QoS for performance-critical apps? Platform teams can turn that choice into repeatable patterns by standardizing node roles, reserving CPU and memory for storage services, and enforcing workload-aware placement.

How OpenShift Data Foundation (ODF) Fits into Kubernetes Storage Workflows

ODF plugs into Kubernetes Storage through CSI, so application teams request capacity through PVCs and StorageClasses rather than vendor tooling. That approach speeds up provisioning and standardizes operations, but it can hide the mechanics that drive latency and throughput, such as NVMe placement, daemon CPU pressure, interrupt load, and network queue behavior.

In OpenShift environments, teams commonly use ODF for RWO block volumes that back databases, RWX file volumes that support shared access patterns, and object interfaces that support backup and data pipeline workflows. The main benefit is consistent lifecycle control that fits the OpenShift Operator model.
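As an illustration, an application team might request an RWO or RWX volume with a manifest like the one this Python sketch builds. The StorageClass names are the ones an internal-mode ODF install commonly creates. Treat them as assumptions and confirm them with `oc get storageclass`. `build_pvc` is a hypothetical helper. Object storage is requested through a different resource and is left out here.

```python
import json

# Assumed default StorageClass names from an internal-mode ODF install;
# they can differ per cluster (for example in external mode).
STORAGE_CLASSES = {
    "block": "ocs-storagecluster-ceph-rbd",  # RBD-backed, used for RWO volumes
    "file": "ocs-storagecluster-cephfs",     # CephFS-backed, used for RWX volumes
}


def build_pvc(name: str, namespace: str, kind: str, size_gi: int) -> dict:
    """Build a PersistentVolumeClaim manifest for an ODF-backed volume."""
    if kind not in STORAGE_CLASSES:
        raise ValueError(f"unsupported kind: {kind!r}")
    access_mode = "ReadWriteOnce" if kind == "block" else "ReadWriteMany"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
            "storageClassName": STORAGE_CLASSES[kind],
        },
    }


if __name__ == "__main__":
    # Hypothetical database volume; the JSON can be piped to `oc apply -f -`.
    print(json.dumps(build_pvc("pg-data", "db", "block", 50), indent=2))
```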

Where NVMe/TCP Changes the ODF Storage Conversation

NVMe/TCP is an NVMe-oF transport that carries NVMe commands over standard TCP/IP Ethernet, so platform teams can pursue higher performance without building an RDMA-only fabric. Many organizations also choose NVMe/TCP because it works with routable networks, network segmentation, and existing operational tooling.

OpenShift teams often use NVMe/TCP to support disaggregated designs where storage targets scale separately from compute nodes. For latency-sensitive workloads, pairing NVMe/TCP with SPDK-based datapaths can cut kernel overhead and improve CPU efficiency, which matters in dense clusters that run both apps and storage services.
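In a disaggregated design, a compute node attaches a remote NVMe/TCP namespace, typically with nvme-cli on Linux. The Python sketch below only assembles the `nvme connect` arguments and does not run them. The target address and NQN are hypothetical, `nvme_tcp_connect_argv` is an illustrative helper, and in Kubernetes a CSI driver normally performs this step itself.

```python
import ipaddress
import shlex

NVME_OF_DEFAULT_PORT = 4420  # IANA-assigned port for NVMe-oF


def nvme_tcp_connect_argv(traddr: str, nqn: str, port: int = NVME_OF_DEFAULT_PORT) -> list[str]:
    """Return nvme-cli arguments for attaching an NVMe/TCP subsystem.

    Nothing is executed here; on a host with nvme-cli installed and the
    nvme-tcp kernel module loaded, the list could be passed to
    subprocess.run(argv, check=True).
    """
    ipaddress.ip_address(traddr)  # raises ValueError for a malformed address
    if not nqn.startswith("nqn."):
        raise ValueError(f"not an NVMe qualified name: {nqn!r}")
    return ["nvme", "connect", "-t", "tcp", "-a", traddr, "-s", str(port), "-n", nqn]


if __name__ == "__main__":
    # Hypothetical target on a routed storage subnet.
    print(shlex.join(nvme_tcp_connect_argv("10.20.30.40", "nqn.2023-01.io.example:vol1")))
```

Because the transport is plain TCP/IP, the target address can sit on a different, routed subnet than the compute node. That routability is what makes NVMe/TCP fit segmented networks.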

Building a Repeatable Method to Test OpenShift Data Foundation (ODF) Performance

Benchmarking ODF should focus on what applications feel under load. Tail latency and stability under contention matter more than peak throughput, and rebuild events matter as much as steady state.
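A small, hypothetical example shows why the mean hides what applications feel. The Python sketch computes nearest-rank percentiles over made-up latency samples in which 1.5% of I/Os stall, as they might while a rebuild competes for the same disks.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile of latency samples, with 0 < p <= 100."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank of the sample to report
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical run: 985 I/Os at 0.2 ms and 15 stalls at 25 ms.
    latencies_ms = [0.2] * 985 + [25.0] * 15
    print(f"mean={statistics.fmean(latencies_ms):.3f} ms")  # mean=0.572 ms
    print(f"p95={percentile(latencies_ms, 95)} ms")  # p95=0.2 ms
    print(f"p99={percentile(latencies_ms, 99)} ms")  # p99=25.0 ms
```

The mean stays under 0.6 ms while p99 reaches 25 ms. That gap is what applications experience as intermittent slow requests.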

A practical method runs controlled FIO jobs from dedicated benchmark pods and correlates the results with OpenShift monitoring. For each I/O profile, track p95 and p99 latency, IOPS and bandwidth at defined block sizes, and CPU cost per I/O. Then repeat the runs in degraded mode during planned failures, such as node drains or disk loss. This approach also helps teams separate application limits from storage limits during incident reviews.
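The method can be sketched in Python, assuming the benchmark pod mounts an ODF volume at a hypothetical `/data` path. The sketch does two things: it renders an fio job file from standard fio options, and it pulls IOPS, bandwidth, and completion-latency percentiles out of `fio --output-format=json` output. `fio_job` and `summarize` are illustrative helpers, and the profile values are placeholders to adjust per workload.

```python
import json


def fio_job(name: str, directory: str, rw: str = "randread", bs: str = "4k",
            iodepth: int = 32, numjobs: int = 4, size: str = "4G",
            runtime_s: int = 300) -> str:
    """Render an fio job file for a benchmark pod with a volume at `directory`."""
    options = {
        "ioengine": "libaio",
        "direct": 1,  # bypass the page cache so results reflect the storage path
        "time_based": 1,  # keep running for runtime_s even after `size` is covered
        "runtime": runtime_s,
        "group_reporting": 1,  # aggregate all numjobs into one result
        "rw": rw,
        "bs": bs,
        "iodepth": iodepth,
        "numjobs": numjobs,
        "size": size,  # per job, so the volume needs numjobs * size free
        "directory": directory,
        "percentile_list": "95:99:99.9",
    }
    return "\n".join([f"[{name}]"] + [f"{k}={v}" for k, v in options.items()]) + "\n"


def summarize(fio_json: str, op: str = "read") -> dict:
    """Extract IOPS, bandwidth, and completion-latency percentiles for one
    direction ("read" or "write") from fio's JSON output."""
    stats = json.loads(fio_json)["jobs"][0][op]
    pct = stats["clat_ns"].get("percentile", {})
    return {
        "iops": stats["iops"],
        "bw_mib_s": stats["bw"] / 1024,  # fio reports bw in KiB/s
        "p95_ms": pct.get("95.000000", 0) / 1e6,  # percentiles are in ns
        "p99_ms": pct.get("99.000000", 0) / 1e6,
    }


if __name__ == "__main__":
    print(fio_job("odf-randread-4k", "/data"), end="")
```

Running the same job during steady state and again during a planned drain gives directly comparable numbers. fio only sees the client side, so storage-daemon CPU still has to come from OpenShift monitoring.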

Practical Levers That Typically Improve ODF Throughput and Tail Latency

Most ODF bottlenecks come from architecture choices and resource boundaries. Teams usually improve results when they align CPU, networking, and placement rules with the workload profile, and when they test during recovery scenarios rather than only during quiet periods.

- Reserve resources for storage daemons, or use storage-dedicated nodes, so application spikes do not starve storage CPU.

- Isolate storage traffic with dedicated NICs or VLANs, then validate MTU and congestion behavior end-to-end.

- Match failure domains and placement rules to the physical topology to reduce cross-rack or cross-zone chatter.

- Standardize media classes (NVMe vs SATA/SAS SSD) and keep BlueStore WAL/DB placement consistent with latency targets.

- Validate SLOs with tail latency during rebuild, rebalance, and backfill events, not only during steady-state runs.
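The last point can be turned into an automated gate. This Python sketch assumes each benchmark phase has already been reduced to a p99 value and an IOPS figure. The `Run` type, `check_slo`, and both thresholds are hypothetical placeholders for real business SLOs.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One benchmark run reduced to the numbers an SLO cares about."""
    phase: str  # e.g. "steady", "rebuild", "rebalance", "backfill"
    p99_ms: float
    iops: float


def check_slo(runs: list[Run], p99_limit_ms: float, min_iops_ratio: float = 0.7) -> list[str]:
    """Return SLO violations; an empty list means every phase passed.

    Each phase must keep p99 at or under the limit, and degraded phases must
    retain at least min_iops_ratio of steady-state IOPS (both hypothetical).
    """
    steady = next((r for r in runs if r.phase == "steady"), None)
    if steady is None:
        raise ValueError("a steady-state baseline run is required")
    violations = []
    for r in runs:
        if r.p99_ms > p99_limit_ms:
            violations.append(f"{r.phase}: p99 {r.p99_ms} ms > {p99_limit_ms} ms")
        if r.phase != "steady" and r.iops < min_iops_ratio * steady.iops:
            violations.append(f"{r.phase}: IOPS at {r.iops / steady.iops:.0%} of steady state")
    return violations


if __name__ == "__main__":
    runs = [
        Run("steady", p99_ms=1.2, iops=50_000),
        Run("rebuild", p99_ms=6.5, iops=30_000),
        Run("rebalance", p99_ms=2.8, iops=41_000),
    ]
    for v in check_slo(runs, p99_limit_ms=5.0):
        print(v)
```

In this made-up data, the steady-state run passes comfortably while the rebuild phase breaks both limits. A quiet-period benchmark alone would miss exactly this.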

When the organization needs tighter predictability, a dedicated Software-defined Block Storage tier with multi-tenant isolation and explicit QoS often reduces variance compared to shared-everything designs.
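A token bucket is one common way to express explicit per-tenant QoS. The generic Python sketch below is not how simplyblock or ODF implement QoS. The tenant names and limits are hypothetical, and `FakeClock` exists only to make the demo deterministic. It shows how a per-tenant cap keeps one tenant's burst from consuming capacity another tenant needs.

```python
import time


class TokenBucket:
    """Admits up to `rate` I/Os per second with bursts of at most `burst`."""

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so a tenant can burst immediately
        self.clock = clock
        self.last = clock()

    def try_acquire(self, n: float = 1.0) -> bool:
        """Consume n tokens if available; otherwise reject (caller queues or retries)."""
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False


class FakeClock:
    """Deterministic clock for the demo; real code would use time.monotonic."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


if __name__ == "__main__":
    clock = FakeClock()
    # Hypothetical per-tenant limits: each tenant gets its own bucket.
    tenants = {
        "noisy": TokenBucket(rate=1000, burst=10, clock=clock),
        "steady": TokenBucket(rate=1000, burst=10, clock=clock),
    }
    admitted_noisy = sum(tenants["noisy"].try_acquire() for _ in range(50))
    admitted_steady = sum(tenants["steady"].try_acquire() for _ in range(5))
    print(admitted_noisy, admitted_steady)  # 10 5
```

The noisy tenant is held to its burst allowance while the other tenant's requests all pass. That isolation is what reduces variance compared to a shared-everything pool.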

Decision Matrix – ODF vs simplyblock vs Traditional SAN

Before selecting a platform standard, compare how each approach supports OpenShift operations, latency targets, and scale requirements.

| Category | OpenShift Data Foundation (ODF) | Simplyblock | Traditional SAN / iSCSI arrays |
|---|---|---|---|
| OpenShift integration model | Operator-driven, CSI-native | CSI-native, OpenShift-ready | External provisioning, added integration layers |
| Data-path efficiency focus | Scale-out; results depend on topology and tuning | SPDK-based, user-space, zero-copy architecture | Controller-centric, appliance-bound |
| Network transport options | Ceph network protocol over Ethernet; design varies by deployment | NVMe/TCP and NVMe/RoCEv2 support | iSCSI/FC common; NVMe-oF support varies by vendor |
| Multi-tenancy and QoS | Supported, but depends on configuration | Built-in multi-tenancy and granular QoS | Vendor-specific; often array-centric |
| Deployment flexibility | Hyper-converged or disaggregated patterns | Hyper-converged, disaggregated, or mixed/hybrid | External arrays; disaggregated by design |

Making ODF-Like Predictability Practical with Simplyblock™

OpenShift clusters that run databases, analytics, and latency-sensitive pipelines often need consistent tail latency under contention, not just higher peak numbers. Simplyblock targets predictable performance with an SPDK-based design that reduces kernel overhead and improves CPU efficiency, which helps in clusters where storage services share cores with application workloads.

From a platform perspective, Simplyblock provides Software-defined Block Storage for Kubernetes Storage and OpenShift with NVMe/TCP as a first-class transport and support for RDMA options when the environment justifies it. It supports hyper-converged, disaggregated, and mixed deployments, so teams can keep one operational control plane while they right-size infrastructure for bare-metal, edge, and centralized clusters. Tenant-aware QoS reduces noisy-neighbor effects across namespaces and helps align storage performance with business SLOs.

What’s Next for OpenShift Data Foundation (ODF) and the OpenShift Storage Stack

ODF and the OpenShift storage ecosystem are moving toward stronger control of tail latency, better visibility into rebuild impact, and clearer guidance that separates “platform services storage” from “tier-1 application storage.” Expect more NVMe-centric designs, wider use of NVMe-oF transports such as NVMe/TCP, deeper automation for placement and recovery workflows, and more offload options through DPUs or IPUs that reduce host CPU load.

Many enterprises will end up with a tiered model: ODF covers broad shared needs, while a dedicated NVMe/TCP-capable Software-defined Block Storage tier supports workloads that demand predictable tail latency.

Related Terms

Teams often review these glossary pages alongside OpenShift Data Foundation (ODF) when they set targets for Kubernetes Storage and Software-defined Block Storage on OpenShift.

OpenShift Container Storage

CRUSH Maps

Storage High Availability

Asynchronous Storage Replication

OpenShift Data Foundation vs Ceph

OpenShift Data Resiliency

Questions and Answers

ODF delivers persistent storage to OpenShift using a CSI-compliant interface. It supports block, file, and object storage, making it compatible with stateful Kubernetes workloads like databases and analytics platforms.

ODF is tightly integrated with OpenShift, providing seamless automation, monitoring, and lifecycle management. Unlike external storage, it’s optimized for cloud-native operations and works well with dynamic volume provisioning.

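Yes, NVMe over TCP can be used alongside ODF. It can attach remote NVMe devices to storage nodes, or it can serve as a dedicated Software-defined Block Storage tier for latency-sensitive volumes. Either way, it can improve performance for high-throughput applications running on OpenShift, especially in data-intensive environments.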

Absolutely. ODF provides persistent, highly available storage with replication and snapshot support. It works with Kubernetes-native primitives such as StatefulSets, PVCs, and VolumeSnapshots to support production-grade databases like PostgreSQL and MongoDB.

ODF is built for cloud-native infrastructure. Unlike traditional SAN or NAS, it offers automated scaling, self-healing, and seamless integration with Kubernetes, making it ideal for software-defined storage environments.