OpenShift Data Foundation vs Ceph

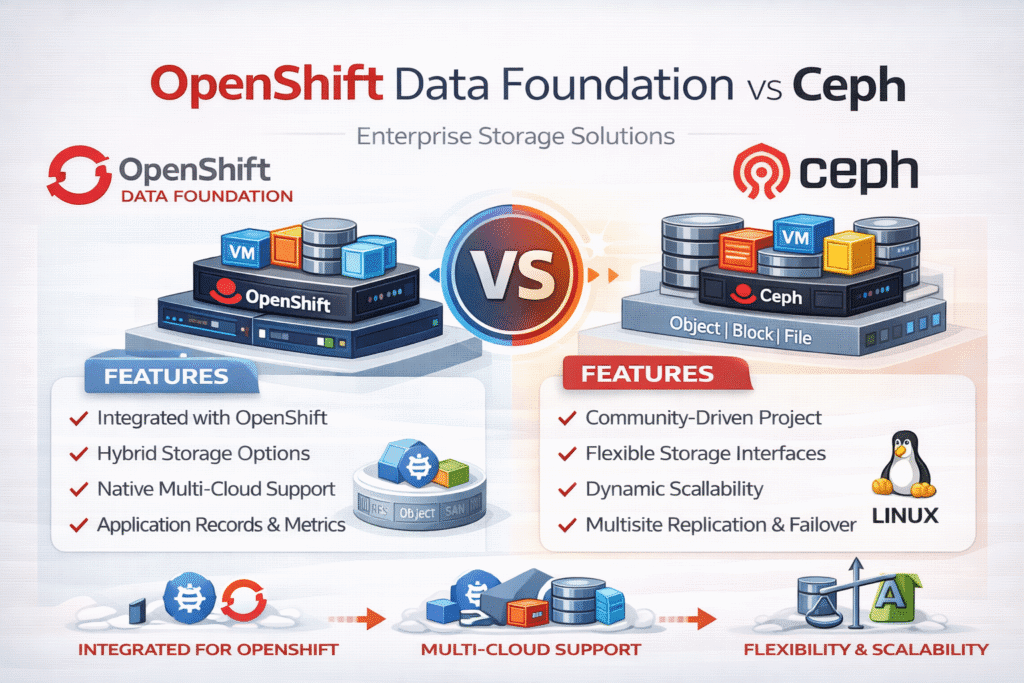

OpenShift Data Foundation (ODF) is built on Ceph, but the two lead to different ownership models in production. Red Hat positions and documents ODF as the storage layer for the OpenShift ecosystem.

Ceph is the upstream, open-source storage platform behind many software-defined storage stacks. The Ceph project describes itself as a unified, distributed storage system, and it supports block, file, and object interfaces.

For executives, this decision often comes down to lifecycle risk and support coverage. For platform teams, it comes down to day-2 operations, rebuild behavior, and tail latency when Kubernetes Storage serves as Software-defined Block Storage.

What OpenShift Data Foundation Brings Beyond Upstream Ceph

ODF targets OpenShift operations first. It leans on the Operator model, curated versions, and a vendor support path that fits change control and audit needs. Red Hat’s ODF documentation emphasizes deployment, management, updates, monitoring, and troubleshooting as OpenShift-native workflows.

That packaging reduces integration work when many teams share one cluster. It also shortens upgrade planning because Red Hat publishes supported combinations and guidance. Your team still runs the platform, but the vendor owns more of the validation burden.

What Running “Ceph Direct” Changes for Platform Teams

Upstream Ceph gives storage teams more control over timing and tuning. Many Kubernetes users deploy Ceph with Rook, which provides an Operator pattern to help manage Ceph using Kubernetes resources.

This path can work well when you have strong in-house skills. It also raises the operational load, since your team owns version alignment, pre-prod testing, and incident playbooks end-to-end.

Kubernetes Storage Reality – CSI Unifies Consumption, Not Outcomes

Both approaches typically expose storage through CSI, so application teams request volumes using PVCs and StorageClasses. CSI defines the interface that allows container platforms to provision and attach storage consistently across implementations.
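To make the shared CSI surface concrete, here is a minimal sketch that builds a PersistentVolumeClaim manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper `make_pvc` and the StorageClass names `example-odf-rbd` and `example-rook-rbd` are hypothetical; real StorageClass names depend on how the cluster was installed.

```python
import json


def make_pvc(name: str, storage_class: str, size: str, namespace: str = "default") -> dict:
    """Build a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical StorageClass names, used only for illustration.
pvc_odf = make_pvc("db-data", "example-odf-rbd", "100Gi")
pvc_rook = make_pvc("db-data", "example-rook-rbd", "100Gi")

# The application-facing request differs only in the StorageClass it names.
print(json.dumps(pvc_odf, indent=2))
```

From the application team's point of view, the two claims are identical apart from `storageClassName`, which is why the interface alone says little about latency or rebuild behavior.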

Even with the same CSI surface, the data path still decides what your apps feel. CPU pressure, network congestion, and recovery work often drive p95 and p99 latency more than “ODF vs Ceph” branding. A busy OpenShift cluster makes those effects visible fast, especially for databases that rely on small-block random I/O.

Performance Drivers That Separate “Works” From “Predictable”

Ceph performance depends on placement and topology. Teams often tune CRUSH placement rules to match racks, zones, and media types, which helps the cluster avoid unnecessary cross-domain traffic.

Rebuild behavior matters just as much as steady-state results. When a node or disk fails, the cluster must restore redundancy, and that background work can raise latency for apps that share the same CPU and network.
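A back-of-the-envelope estimate can make the rebuild trade-off easier to reason about. The sketch below assumes recovery time is bounded only by an aggregate recovery bandwidth, which is a simplification; the function name and the 8 TB and bandwidth figures are illustrative assumptions, not measurements from any cluster.

```python
def rebuild_hours(lost_data_tb: float, recovery_gbps: float) -> float:
    """Lower-bound estimate of the time to restore redundancy.

    lost_data_tb:  data that must be re-replicated, in terabytes (10**12 bytes)
    recovery_gbps: aggregate bandwidth allowed for recovery, in gigabits per second
    """
    bits_to_move = lost_data_tb * 8e12
    seconds = bits_to_move / (recovery_gbps * 1e9)
    return seconds / 3600


# Illustrative numbers only: the same failure with full-speed vs throttled recovery.
fast = rebuild_hours(lost_data_tb=8, recovery_gbps=10)
throttled = rebuild_hours(lost_data_tb=8, recovery_gbps=2.5)

print(f"full-speed recovery: {fast:.1f} h")
print(f"throttled recovery:  {throttled:.1f} h")
```

Throttling recovery leaves more CPU and network for applications, but it lengthens the window in which the cluster runs with reduced redundancy. Sizing decisions have to balance both.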

NVMe/TCP – A Transport Choice That Can Shift the Result

Many evaluations assume the same transport and focus only on the storage stack. In practice, the transport can change both latency and operational fit. NVMe/TCP runs NVMe-oF over standard TCP/IP networks, which helps teams keep routable Ethernet designs while moving toward lower latency and higher throughput.

When the goal is predictable Software-defined Block Storage, teams often add a dedicated NVMe/TCP tier for tier-1 databases. That approach also supports disaggregated designs where storage scales on its own schedule.

How to Benchmark an ODF vs Ceph Decision So the Data Holds Up

Start with workload-fit tests. Use repeatable I/O profiles that match your real block sizes, read/write mix, and concurrency. Then rerun the same tests during events you will hit in production, such as node drains, disk loss, and rolling updates.

Track tail latency and CPU cost per I/O, not just peak IOPS. For teams that push NVMe hard, SPDK often matters because it targets a user-space, low-overhead data path for storage I/O.
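As a rough illustration of why peak or average numbers can mislead, the sketch below computes nearest-rank percentiles and CPU cost per I/O from raw samples. The helper names and the sample values are made up for the example; in practice the inputs would come from your benchmark tool's output and from node CPU accounting.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a list of latency samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def cpu_us_per_io(cpu_seconds, total_ios):
    """CPU microseconds consumed per completed I/O."""
    return cpu_seconds * 1_000_000 / total_ios


# Made-up latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [0.4] * 90 + [2.0] * 8 + [25.0] * 2

mean_ms = sum(latencies_ms) / len(latencies_ms)
print(f"mean: {mean_ms:.2f} ms")
for pct in (50, 95, 99):
    print(f"p{pct}: {percentile(latencies_ms, pct)} ms")

# Made-up accounting: 12 CPU-seconds spent serving 3 million I/Os.
print(f"CPU cost: {cpu_us_per_io(12.0, 3_000_000):.1f} us per I/O")
```

In this made-up sample, the median and the mean both look healthy while p99 lands on the slow outliers, which is the pattern that tends to hurt databases most.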

- Choose ODF when you want vendor-backed upgrades, tested combinations, and OpenShift-aligned Operator workflows.

- Choose upstream Ceph when your storage team wants full control over releases, tuning, and automation.

- Add NVMe/TCP when you need routable Ethernet and a clean path to disaggregated scale.

- Use tenant controls and QoS when many teams share one cluster and p99 latency drives business risk.

- Evaluate SPDK-aligned designs when CPU cost and tail latency matter as much as throughput.

Decision Matrix for OpenShift Data Foundation vs Ceph

This table summarizes what platform owners usually compare: lifecycle load, operational effort, and how predictably Kubernetes Storage behaves when it serves as Software-defined Block Storage.

| Category | OpenShift Data Foundation (ODF) | Upstream Ceph (often via Rook) |
|---|---|---|
| Lifecycle ownership | Vendor guidance, support boundaries | Team-owned cadence and validation |
| OpenShift fit | Tight OpenShift packaging and workflows | Strong flexibility, more integration work |
| Day-2 operations | Standardized processes and docs | More tuning freedom, more runbook work |
| Kubernetes Storage interface | CSI-driven consumption | CSI-driven consumption |
| Failure and rebuild impact | Depends on sizing and topology | Depends on sizing and topology |
| Placement control | Platform policies plus topology design | Deep control, CRUSH tuning becomes central |

Predictable Software-defined Block Storage with Simplyblock™

When ODF vs Ceph turns into a latency problem, teams often need a more direct block path for tier-1 apps. Simplyblock™ offers OpenShift-ready storage with NVMe/TCP, and it positions itself as an alternative to ODF or Ceph for high-performance block workloads on OpenShift.

From an ops view, this approach supports hyper-converged and disaggregated layouts, while keeping the storage surface native to Kubernetes Storage. It also fits teams that want a SAN alternative without pulling storage out of the cluster lifecycle.

Where OpenShift Storage Is Heading Next

OpenShift storage roadmaps keep moving toward NVMe-centric transports, clearer SLO-driven ops, and better visibility into rebuild impact. Many teams also look at offload paths and more efficient data paths to protect CPU for applications.

As more organizations standardize on NVMe/TCP for Ethernet-friendly deployments, platform teams will likely split storage into tiers: broad coverage for shared services, plus a low-latency Software-defined Block Storage tier for databases and other I/O-heavy workloads.

Related Terms

Teams often review these glossary pages alongside OpenShift Data Foundation vs Ceph when they set standards for Kubernetes Storage and Software-defined Block Storage.

Local Node Affinity

Synchronous Storage Replication

Storage Area Network

Persistent Storage

Questions and Answers

OpenShift Data Foundation (ODF) is based on Ceph but tailored for seamless integration with OpenShift. It includes Kubernetes-native features like CSI support, dynamic provisioning, and better lifecycle management for stateful workloads.

ODF offers a fully integrated experience within OpenShift, with built-in monitoring, automation, and updates. Upstream Ceph leaves more of the setup and tuning to your team, so ODF simplifies operations for Kubernetes-native environments.

ODF can leverage high-performance storage backends such as NVMe over TCP, which can improve throughput and latency. Compared to standard Ceph setups, this can enable better performance for I/O-intensive applications.

For OpenShift users, ODF is typically the better fit: it offers tighter integration, Operator-based deployment, and support for persistent Kubernetes storage. Ceph is more flexible outside Kubernetes but requires more manual management.

ODF offers core performance similar to Ceph's, but with better integration and automation in Kubernetes. When combined with software-defined storage and fast protocols like NVMe/TCP, ODF can deliver more consistent performance in containerized environments.