PCIe-Based DPU

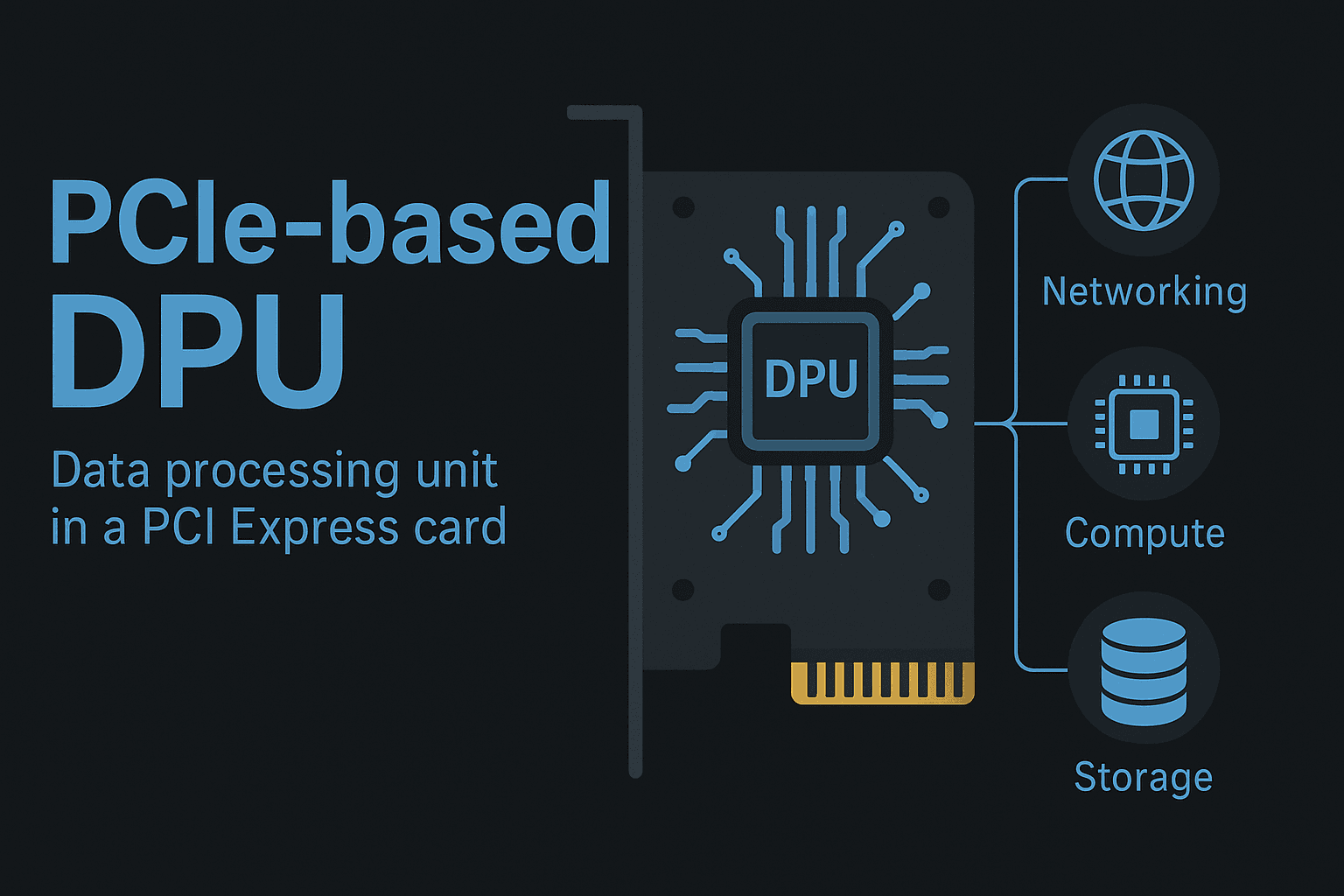

A PCIe-based DPU (Data Processing Unit) is a hardware accelerator that connects directly to a server through the PCI Express bus. Unlike traditional NICs, a PCIe-based DPU includes its own compute cores, memory, and specialized engines that allow it to manage networking, storage, and security tasks independently of the host CPU.

By operating on the PCIe bus, the DPU gains fast and predictable access to system resources while remaining isolated from application workloads. This design makes PCIe-based DPUs a strong choice for environments that demand consistent performance and lower CPU overhead.

How a PCIe-Based DPU Interacts with the Host System

A PCIe-based DPU functions as a peer device rather than a simple peripheral. It communicates directly over PCIe lanes, enabling low-latency data movement between the DPU, system memory, and connected storage or network interfaces.

This allows the DPU to process traffic before it reaches the CPU. Tasks such as packet inspection, encryption, storage I/O handling, and virtualization can be executed entirely on the DPU, keeping application workloads isolated from infrastructure processing.
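From the host's side, a DPU appears as one or more PCIe functions that the kernel enumerates like any other device. The minimal Python sketch below assumes a Linux host. It walks `/sys/bus/pci/devices` and decodes each function's 24-bit class code, so network, NVMe storage, and accelerator functions exposed by a card can be told apart. The helper names are hypothetical, and which functions a given DPU exposes depends on the vendor and its firmware configuration.

```python
from pathlib import Path
from typing import Iterator, Tuple

# Base class codes (upper byte of the 24-bit PCI class code) relevant to DPUs.
BASE_CLASSES = {
    0x01: "mass storage",
    0x02: "network",
    0x0B: "processor",
    0x12: "processing accelerator",
}


def describe_class(class_code: str) -> str:
    """Turn a sysfs class string such as '0x010802' into a readable label."""
    value = int(class_code, 16)
    base = (value >> 16) & 0xFF
    subclass = (value >> 8) & 0xFF
    prog_if = value & 0xFF
    # Class 01h / subclass 08h / prog-if 02h identifies an NVMe controller.
    if (base, subclass, prog_if) == (0x01, 0x08, 0x02):
        return "mass storage (NVMe)"
    return BASE_CLASSES.get(base, f"other (0x{base:02x})")


def list_pci_functions(root: str = "/sys/bus/pci/devices") -> Iterator[Tuple[str, str, str]]:
    """Yield (PCI address, vendor ID, class label) for each enumerated function."""
    base_dir = Path(root)
    if not base_dir.is_dir():
        return  # not a Linux host, or sysfs is unavailable
    for dev in sorted(base_dir.iterdir()):
        vendor = (dev / "vendor").read_text().strip()
        class_code = (dev / "class").read_text().strip()
        yield dev.name, vendor, describe_class(class_code)
```

On a Linux host, `list(list_pci_functions())` returns one tuple per PCIe function. A DPU typically shows up as several entries sharing a bus address prefix.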

Why PCIe-Based DPUs Are Seeing Wider Adoption

As servers take on more networking and storage responsibilities, CPUs often become a bottleneck. PCIe-based DPUs reduce this pressure by shifting infrastructure work to dedicated hardware.

Their PCIe connection provides stable bandwidth and predictable latency, which is critical for environments running storage-heavy workloads or large distributed systems. This makes them particularly useful when performance consistency matters more than raw compute power.
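The raw per-direction bandwidth of a PCIe link follows from its transfer rate, lane count, and line encoding, which puts numbers on the bandwidth claim above. The sketch below covers PCIe 3.0 through 5.0, which all use 128b/130b encoding. It ignores transaction-layer packet overhead, so achievable throughput is somewhat lower. The 200 Gb/s port is only an illustrative figure, and the function names are hypothetical.

```python
# Per-lane transfer rates in gigatransfers per second for PCIe generations
# that use 128b/130b encoding.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Raw bandwidth per direction in GB/s after 128b/130b line encoding."""
    if generation not in TRANSFER_RATE_GT_S:
        raise ValueError(f"unsupported PCIe generation: {generation}")
    if lanes < 1:
        raise ValueError(f"lane count must be positive: {lanes}")
    gbits_per_lane = TRANSFER_RATE_GT_S[generation] * 128 / 130
    return gbits_per_lane * lanes / 8  # bits -> bytes


def link_fits(generation: int, lanes: int, port_gbits: float) -> bool:
    """Check whether a network port's line rate fits within the PCIe link."""
    return port_gbits / 8 <= pcie_bandwidth_gbytes(generation, lanes)


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"PCIe {gen}.0 x16: {pcie_bandwidth_gbytes(gen, 16):.1f} GB/s per direction")
    print("200 Gb/s port on PCIe 4.0 x16:", link_fits(4, 16, 200))
```

With these figures, a 200 Gb/s port (25 GB/s) fits within a PCIe 4.0 x16 link, about 31.5 GB/s, but would be constrained by an x8 link. This is one reason slot width matters when placing a DPU.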

Core Capabilities of PCIe-Based DPUs

PCIe-based DPUs provide more than simple acceleration. Their capabilities allow infrastructure tasks to run independently from application workloads while maintaining strong performance.

- Full Infrastructure Offload: Networking, storage processing, and security functions run on the DPU instead of the CPU.

- Secure and Isolated Data Paths: Infrastructure services remain separated from application workloads, improving stability and security.

- Direct PCIe Memory Access: The DPU reads and writes host memory via DMA over PCIe, which reduces latency and avoids routing data transfers through the CPU.

- Predictable Performance at Scale: Systems can grow without CPU usage increasing at the same rate.
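The scaling point in the list above can be made concrete with a back-of-the-envelope model. Host cores spent on infrastructure equal the operation rate times the cycles each operation costs, minus the share handled on the DPU. This is a simplifying assumption, not a vendor formula. All parameter values below are hypothetical and chosen for easy arithmetic, not measurements from any device.

```python
def host_cores_for_infra(ops_per_sec: float, cycles_per_op: float,
                         offload_fraction: float, core_hz: float = 3.0e9) -> float:
    """Estimate host CPU cores consumed by infrastructure work.

    offload_fraction is the hypothetical share of per-operation work
    (0.0 = none, 1.0 = all) that runs on the DPU instead of the host.
    """
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be between 0 and 1")
    host_cycles_per_sec = ops_per_sec * cycles_per_op * (1.0 - offload_fraction)
    return host_cycles_per_sec / core_hz


if __name__ == "__main__":
    # Hypothetical workload: the operation rate doubles while per-op cost stays fixed.
    for ops in (1_000_000, 2_000_000):
        without = host_cores_for_infra(ops, 30_000, offload_fraction=0.0)
        with_dpu = host_cores_for_infra(ops, 30_000, offload_fraction=0.9)
        print(f"{ops:>9,} ops/s: {without:.1f} cores without offload, {with_dpu:.1f} with")
```

In this model, host cost still grows linearly with load, but with a much smaller slope: doubling the operation rate adds 10 host cores without offload and only 1 with it.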

These capabilities help organizations scale infrastructure while keeping compute resources focused on applications.

Where PCIe-Based DPUs Are Commonly Used

PCIe-based DPUs are often deployed in environments with heavy infrastructure demands. These include cloud platforms that require strong tenant isolation, distributed storage systems handling replication and encryption, virtualized infrastructures with constant network traffic, Kubernetes clusters with significant east–west communication, and enterprise data centers modernizing legacy designs.

In each case, the DPU serves as a dedicated engine for infrastructure processing.

PCIe-Based DPU vs NIC-Based Offload

NIC-based offload solutions focus primarily on networking tasks. PCIe-based DPUs provide a broader scope by handling networking, storage, and security through a single acceleration layer.

| Feature | NIC-Based Offload | PCIe-Based DPU |
| --- | --- | --- |
| Connection Type | Network interface | PCIe bus |
| Offload Scope | Mostly networking | Networking, storage, security |
| System Access | Limited | Direct memory and device access |
| Isolation Level | Moderate | Strong |
| Best Fit | Network-heavy workloads | Full infrastructure offload |

This distinction becomes important in systems that rely on consistent I/O performance.

PCIe-Based DPUs in Storage and Data Path Acceleration

Storage workflows benefit significantly from PCIe-based DPUs because data processing happens closer to where traffic enters the system. Encryption, compression, replication logic, and protocol handling can all occur on the DPU without consuming CPU resources.

This improves I/O responsiveness, lowers latency, and keeps performance steady during peak usage. For storage platforms, it also simplifies scaling by avoiding CPU saturation as capacity grows.
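One simplified way to picture the storage write path is as an ordered list of stages, each of which either runs on a DPU engine or falls back to the host. The Python sketch below is hypothetical. It executes every stage in-process and only records where each stage would be placed. The Python standard library has no block cipher, so a CRC32 integrity check stands in for the encryption stage, and replication is modeled as copying the processed block to a fixed number of targets.

```python
import zlib
from typing import Callable, Dict, List, Set, Tuple

Stage = Callable[[bytes], bytes]


def compress(block: bytes) -> bytes:
    return zlib.compress(block)


def add_checksum(block: bytes) -> bytes:
    # Append a big-endian CRC32 so a receiver can verify the payload.
    return block + zlib.crc32(block).to_bytes(4, "big")


# Ordered write-path stages (names are illustrative, not a real API).
WRITE_PATH: List[Tuple[str, Stage]] = [
    ("compression", compress),
    ("integrity", add_checksum),
]


def run_write_path(block: bytes, dpu_engines: Set[str],
                   replicas: int = 3) -> Tuple[List[bytes], Dict[str, str]]:
    """Apply each stage in order and record whether it would run on the DPU or the host."""
    placement: Dict[str, str] = {}
    for name, stage in WRITE_PATH:
        placement[name] = "dpu" if name in dpu_engines else "host"
        block = stage(block)
    placement["replication"] = "dpu" if "replication" in dpu_engines else "host"
    copies = [block] * replicas  # one identical payload per replica target
    return copies, placement


if __name__ == "__main__":
    data = b"example block " * 512
    copies, placement = run_write_path(data, {"compression", "integrity", "replication"})
    print(placement, len(copies), "copies of", len(copies[0]), "bytes")
```

When every stage name appears in `dpu_engines`, nothing in the placement map falls back to the host. This mirrors the goal of keeping storage processing off the CPU.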

How Simplyblock Complements PCIe-Based DPU Deployments

Simplyblock aligns well with PCIe-based DPU architectures by keeping storage operations efficient and offload-friendly.

- CPU Offload Alignment: Storage traffic follows optimized paths that avoid pushing work back onto the host CPU.

- Stable Latency Under Load: Performance remains consistent even when I/O and network activity increase.

- Simpler Scaling Across Nodes: Storage scales without adding operational complexity or tuning overhead.

- Efficient Data Movement: Data paths match the performance characteristics expected from PCIe-attached acceleration.

Together, these capabilities help PCIe-based DPU environments maintain predictable performance while reducing the operational burden on compute nodes.

What PCIe-Based DPUs Mean for Future Infrastructure

As infrastructure continues to separate application compute from system services, PCIe-based DPUs will play a larger role in server design. Their ability to handle complex infrastructure tasks while remaining tightly integrated with the host makes them a practical foundation for next-generation data centers.

They allow organizations to scale storage and networking performance without redesigning core compute architectures.

Related Terms

Teams often review these glossary pages alongside the PCIe-based DPU entry when they assess PCIe bandwidth, host-to-device isolation, and which networking or storage functions to offload to the card.

DPU (Data Processing Unit)

PCI Express

Storage offload on DPUs

NVMe-oF target on DPU

Questions and Answers

A PCIe-based DPU offloads networking, storage, and security tasks from the host CPU by operating directly over the PCIe bus. This reduces CPU load, lowers latency, and makes performance more predictable for application workloads.

PCIe-based DPUs are ideal for accelerating virtualization, secure networking, distributed storage, and cloud-native environments. They help optimize workloads such as Kubernetes, NFV, and high-density VMs by handling infrastructure services independently.

While SmartNICs offload portions of networking tasks, PCIe-based DPUs include dedicated compute cores that can run full storage, security, and virtualization services. This allows deeper infrastructure offload and greater isolation from the host CPU.

Organizations need available PCIe slots, compatible server platforms, and software that supports DPU offload features. Proper integration with orchestration tools and network configurations is also essential for maximizing performance gains.
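One practical integration check is whether the card trained at the link speed and width it supports. A DPU in a narrower slot or behind a slower root port silently loses bandwidth. The sketch below assumes a Linux host that exposes the standard `current_link_speed`, `max_link_speed`, `current_link_width`, and `max_link_width` attributes under `/sys/bus/pci/devices/<address>/`. The helper names are hypothetical. Some devices lower their link speed while idle to save power, so a speed drop on its own is a prompt to re-check under load rather than proof of a problem.

```python
from pathlib import Path
from typing import Dict


def parse_link_speed(text: str) -> float:
    """Parse sysfs speed strings such as '16.0 GT/s PCIe' or '8 GT/s' into GT/s."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return 0.0  # e.g. 'Unknown' when the kernel cannot report a speed


def read_link(dev_dir: str) -> Dict[str, float]:
    """Read current and maximum link speed/width for one PCIe function."""
    d = Path(dev_dir)
    return {
        "cur_speed": parse_link_speed((d / "current_link_speed").read_text()),
        "max_speed": parse_link_speed((d / "max_link_speed").read_text()),
        "cur_width": float((d / "current_link_width").read_text()),
        "max_width": float((d / "max_link_width").read_text()),
    }


def is_degraded(link: Dict[str, float]) -> bool:
    """True if the link runs below its maximum speed or width."""
    return link["cur_speed"] < link["max_speed"] or link["cur_width"] < link["max_width"]
```

For a card at a given PCI address, `is_degraded(read_link("/sys/bus/pci/devices/<address>"))` flags a link that came up narrower or slower than the device supports.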

Challenges include managing new hardware components, updating drivers, and integrating DPUs into existing workflows. Teams may also need new operational skills to monitor and control DPU-accelerated networking and storage functions.