Rancher vs OpenShift


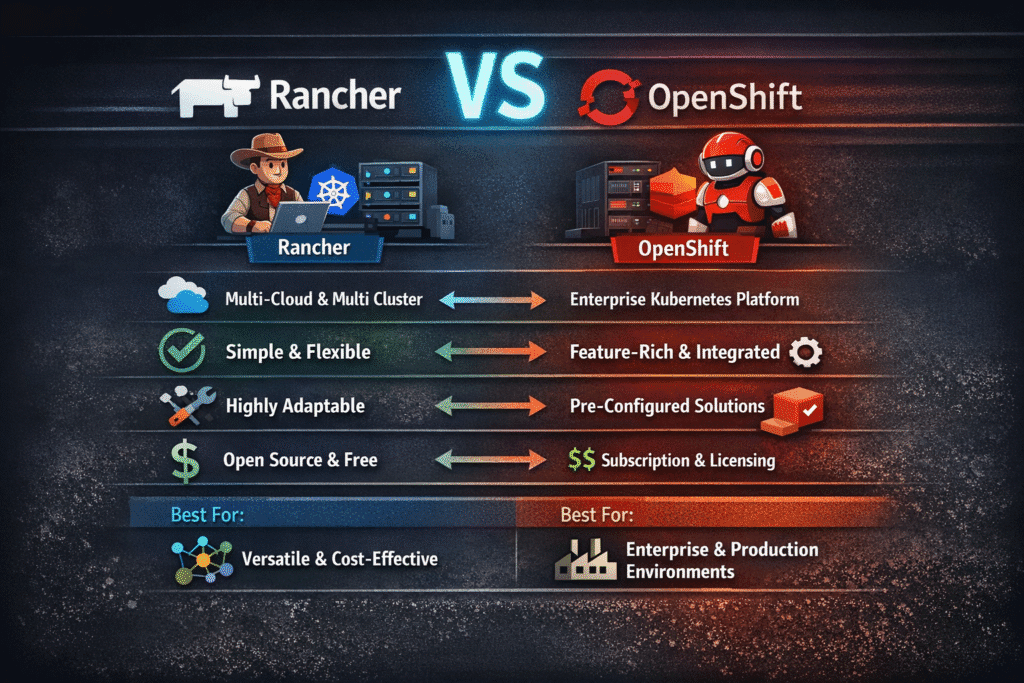

Rancher and Red Hat OpenShift both run Kubernetes, but they solve different problems. Rancher focuses on multi-cluster lifecycle management across many upstream Kubernetes distributions (including RKE2 and k3s), while OpenShift ships a tightly integrated Kubernetes platform with built-in security controls, an operator-centric app model, and opinionated day-2 operations.

For executives, the key question in Rancher vs OpenShift usually comes down to risk, standardization, and operating model. For platform teams, it often comes down to upgrade paths, policy enforcement, and how storage, networking, and observability behave under load.

Stateful workloads make the trade-offs visible fast. That’s where Kubernetes Storage, NVMe/TCP, and Software-defined Block Storage decide whether clusters feel stable or fragile.

Optimizing Rancher vs OpenShift with Platform-Ready Storage Patterns

Rancher simplifies fleet management, but storage behavior still varies by cluster distro, CNI choices, and node profiles. OpenShift standardizes more of the stack, yet that stack can add layers between applications and storage.

To reduce surprises, teams treat storage as a shared platform service with clear SLOs and policy guardrails. A storage layer that supports both hyper-converged and disaggregated layouts helps because cluster topology changes over time. This matters when you add GPU nodes, edge sites, or separate “build” clusters from “prod” clusters.

When you plan Rancher vs OpenShift for stateful apps, optimize for predictable latency, consistent throughput, and clean operational controls like snapshots, replication, and QoS, not just raw IOPS.

🚀 Run Rancher and OpenShift with NVMe/TCP Storage, Natively in Kubernetes

Use Simplyblock to standardize persistent volumes across clusters and cut tail latency under load.


Rancher vs OpenShift in Kubernetes Storage

Rancher clusters often run across diverse environments, so storage teams must handle mixed node types, mixed network gear, and mixed failure domains. That makes CSI behavior, topology hints, and scheduling rules critical. OpenShift pushes deeper platform integration, and that can help with guardrails, but it can also increase coupling to specific operators and platform workflows.

In both cases, CSI remains the contract. You still need the same fundamentals: PV/PVC lifecycle, StorageClasses, volume expansion, and safe upgrade procedures. If you want portability between Rancher-managed and OpenShift-managed clusters, keep storage policies consistent and map them to workload tiers (database, analytics, CI, logs).
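One way to keep storage policies consistent is to generate the per-tier StorageClass manifests from a single table and apply the same output to every cluster. The sketch below is illustrative only: the provisioner name `csi.example.com` and the keys under `parameters` are placeholders rather than any real driver's documented options, and the tier values are made up.

```python
import json

# Hypothetical tier definitions. The parameter keys and values are placeholders,
# not options of any specific CSI driver. StorageClass parameters must be strings.
TIERS = {
    "database":  {"qos_iops": "20000", "replicas": "3"},
    "analytics": {"qos_iops": "10000", "replicas": "2"},
    "ci":        {"qos_iops": "3000",  "replicas": "1"},
    "logs":      {"qos_iops": "1000",  "replicas": "1"},
}


def storage_class(tier, params, provisioner="csi.example.com"):
    """Build a storage.k8s.io/v1 StorageClass manifest for one workload tier."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": f"tier-{tier}", "labels": {"workload-tier": tier}},
        "provisioner": provisioner,  # placeholder driver name
        "parameters": dict(params),
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",
        # Delay binding until a pod is scheduled so topology can be respected.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def render_all(tiers=TIERS):
    """Return one manifest per tier, sorted by tier name for stable output."""
    return [storage_class(name, params) for name, params in sorted(tiers.items())]


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(render_all(), indent=2))
```

Because the manifests come from one source, a Rancher-managed cluster and an OpenShift cluster can receive identical tier names, which keeps PVCs portable between them.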

A strong fit here is Software-defined Block Storage that exposes the same primitives everywhere, so teams can lift workloads between clusters without rewriting storage assumptions.

Rancher vs OpenShift and NVMe/TCP

NVMe/TCP matters in Rancher vs OpenShift because it delivers NVMe-oF performance characteristics over standard Ethernet, without forcing RDMA hardware everywhere. That gives platform teams a clear path: start on existing 25/50/100GbE, then add RDMA only where a specific tier needs it.

In practice, NVMe/TCP helps when you separate compute from storage, scale stateful services horizontally, and keep latency stable during node churn. It also reduces the odds that storage becomes the “special snowflake” part of the platform.

If you run mixed clusters, NVMe/TCP lets you apply one transport baseline across environments and still keep room for NVMe/RoCEv2 on performance-critical pools.

Measuring and Benchmarking Rancher vs OpenShift Performance

Benchmarking Rancher vs OpenShift for stateful apps works best when you test the storage path, not just the control plane. Use real workloads or close proxies, and measure p50, p95, and p99 latency, not only averages.
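To see why averages are not enough, here is a minimal sketch that summarizes latency samples with the nearest-rank percentile method. The sample values are invented for illustration; in practice they would come from your benchmark tool's per-I/O latency output.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(latencies_us):
    """Return mean, p50, p95, and p99 for a list of latencies in microseconds."""
    return {
        "mean": sum(latencies_us) / len(latencies_us),
        "p50": percentile(latencies_us, 50),
        "p95": percentile(latencies_us, 95),
        "p99": percentile(latencies_us, 99),
    }


# Made-up data: 97 fast I/Os at 200 us and 3 slow outliers at 5000 us.
samples = [200] * 97 + [5000] * 3
print(summarize(samples))
```

With this data the mean is 344 µs while p50 and p95 stay at 200 µs and p99 jumps to 5000 µs, so the average hides exactly the outliers that stateful applications feel.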

Common patterns include fio-based tests for block volumes, plus app-level tests for databases and streaming platforms. Track queue depth, block size, read/write mix, CPU per I/O, and network drops. Then rerun the same tests during upgrades, node drains, and failure events, because those are the moments when storage regressions show up.
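The parameters listed above map directly onto fio options, so one way to keep runs comparable is to build the command from a small function and reuse it on both platforms and during disruption tests. This is a sketch under assumptions: the target path `/mnt/pvc/fio.dat` and the default values are hypothetical and should be replaced with numbers that match your real workload.

```python
import shlex


def fio_command(target, *, block_size="4k", read_pct=70, iodepth=32,
                numjobs=4, runtime_s=120, size="10G"):
    """Return an fio argv list for a mixed random read/write test against a file or device."""
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    return [
        "fio",
        "--name=tier-check",
        f"--filename={target}",
        f"--size={size}",
        "--ioengine=libaio",
        "--direct=1",                 # bypass the page cache
        "--rw=randrw",
        f"--rwmixread={read_pct}",    # read/write mix
        f"--bs={block_size}",         # block size
        f"--iodepth={iodepth}",       # queue depth per job
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
        "--output-format=json",       # machine-readable, includes latency percentiles
    ]


# Hypothetical path where a PVC is mounted inside the test pod.
cmd = fio_command("/mnt/pvc/fio.dat", block_size="16k", read_pct=50)
print(shlex.join(cmd))
```

Running the identical command before, during, and after an upgrade or node drain makes the JSON results directly comparable.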

For OpenShift, include operator-driven changes in the test plan. For Rancher, include cluster distro variance (RKE2 vs upstream) and multi-cluster differences.

Approaches for Improving Rancher vs OpenShift Performance

Teams usually get the biggest gains by tightening the I/O path and enforcing a consistent storage policy. Here is a focused checklist you can apply to either platform:

- Set workload-tier StorageClasses with clear QoS targets and limits

- Keep latency-sensitive volumes on NVMe/TCP pools with consistent MTU and ECN settings

- Use topology-aware placement, so replicas align with zones, racks, and failure domains

- Right-size fio and app tests to match real concurrency, not synthetic extremes

- Watch tail latency under disruption events, then tune timeouts and retry behavior

- Standardize snapshot and replication policies to reduce ad hoc “backup scripts”
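The topology item in the checklist can be verified with a small audit. The sketch below flags volumes whose replicas share a zone, using the well-known node label `topology.kubernetes.io/zone`. The volume names, node names, and the replica-to-node mapping are hypothetical inputs; how you obtain that mapping depends on your storage backend.

```python
from collections import defaultdict

ZONE_LABEL = "topology.kubernetes.io/zone"  # well-known Kubernetes node label


def zones_shared_by_replicas(replica_nodes, node_labels):
    """Return {volume: {zone: [nodes]}} for every zone that holds more than one replica.

    Nodes without a zone label are grouped under "unknown", so two unlabeled
    nodes hosting the same volume are also reported.
    """
    violations = {}
    for volume, nodes in replica_nodes.items():
        by_zone = defaultdict(list)
        for node in nodes:
            zone = node_labels.get(node, {}).get(ZONE_LABEL, "unknown")
            by_zone[zone].append(node)
        crowded = {zone: ns for zone, ns in by_zone.items() if len(ns) > 1}
        if crowded:
            violations[volume] = crowded
    return violations


# Hypothetical inventory for illustration.
node_labels = {
    "node-a": {ZONE_LABEL: "zone-1"},
    "node-b": {ZONE_LABEL: "zone-2"},
    "node-c": {ZONE_LABEL: "zone-1"},
}
replica_nodes = {
    "pg-data": ["node-a", "node-b"],    # spread across two zones
    "kafka-log": ["node-a", "node-c"],  # both replicas in zone-1
}
print(zones_shared_by_replicas(replica_nodes, node_labels))
```

The same pattern extends to racks or other failure domains by swapping the label key.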

Rancher vs OpenShift at a Glance for Stateful Kubernetes Workloads

Before you decide, it helps to compare how each platform affects day-2 operations and storage outcomes for Kubernetes Storage.

| Area | Rancher | OpenShift |
|---|---|---|
| Primary focus | Multi-cluster Kubernetes management | Integrated enterprise Kubernetes platform |
| Kubernetes distro model | Manages many distros (RKE2, k3s, upstream) | Ships a curated Kubernetes stack |
| Upgrade experience | Varies by distro and cluster profile | More standardized, platform-driven |
| Security posture | Depends on distro hardening and policy setup | Strong defaults and integrated controls |
| App delivery model | Flexible, GitOps-friendly | Operator-first, curated workflows |
| Storage integration | CSI-based, depends on chosen backend | CSI plus deep platform workflows |
| Best fit | Heterogeneous fleets, multi-cloud ops | Standardized enterprise platform needs |

Predictable Storage for Rancher vs OpenShift with simplyblock™

Simplyblock positions storage as a consistent control surface across both environments. With SPDK-based, user-space I/O, simplyblock reduces kernel overhead and supports a zero-copy architecture that helps CPU efficiency when I/O load rises. That matters when clusters run hot, nodes churn, and platform upgrades happen.

For Kubernetes Storage, simplyblock provides Software-defined Block Storage that fits hyper-converged, disaggregated, or mixed designs in the same fleet. For transports, simplyblock supports NVMe/TCP as a practical baseline, while still allowing NVMe/RoCEv2 where specific workloads justify RDMA.

In OpenShift environments, simplyblock integrates through CSI and aligns with operator-style operations, so teams can provision, monitor, and scale volumes without bolting on extra tooling. In Rancher fleets, simplyblock helps standardize storage behavior across clusters, even when the underlying distros differ.

What’s Next for Rancher vs OpenShift

Rancher vs OpenShift decisions will keep shifting toward policy automation, platform portability, and performance isolation. Multi-tenancy controls, storage QoS enforcement, and workload-aware placement will carry more weight as enterprises move databases, AI pipelines, and real-time analytics onto Kubernetes.

On the storage side, expect broader NVMe-oF adoption, more disaggregated designs, and increasing use of DPUs/IPUs to offload parts of the data path. Platforms that keep the storage interface stable while letting the hardware mix change will reduce long-term risk.


Questions and Answers

Rancher is a lightweight Kubernetes management platform that operates on top of any CNCF-conformant Kubernetes cluster, while OpenShift is a full enterprise Kubernetes distribution with opinionated defaults and built-in developer tooling. Both platforms support CSI-based storage integration for dynamic volume provisioning.

Both platforms can run stateful workloads, but OpenShift includes stricter security defaults and integrated CI/CD, making it ideal for regulated environments. Rancher offers flexibility across cloud and edge use cases. Simplyblock supports stateful Kubernetes storage on both platforms with high-performance block volumes.

Rancher uses Helm and cluster templates for CSI deployment, while OpenShift uses an Operator-based model. Simplyblock’s CSI driver architecture is compatible with both methods, ensuring seamless integration regardless of platform preference.

Yes. OpenShift includes integrated authentication, SCCs (Security Context Constraints), and encrypted communication out of the box. Rancher relies more on the underlying distribution and on explicit configuration for comparable controls. Simplyblock complements both with data-at-rest encryption (DARE) for persistent volumes.

Rancher is often preferred for multi-cloud and hybrid deployments due to its lightweight footprint and provider-agnostic design. Combined with Simplyblock’s multi-cloud block storage capabilities, Rancher becomes a powerful choice for flexible infrastructure strategies.