RoCEv2


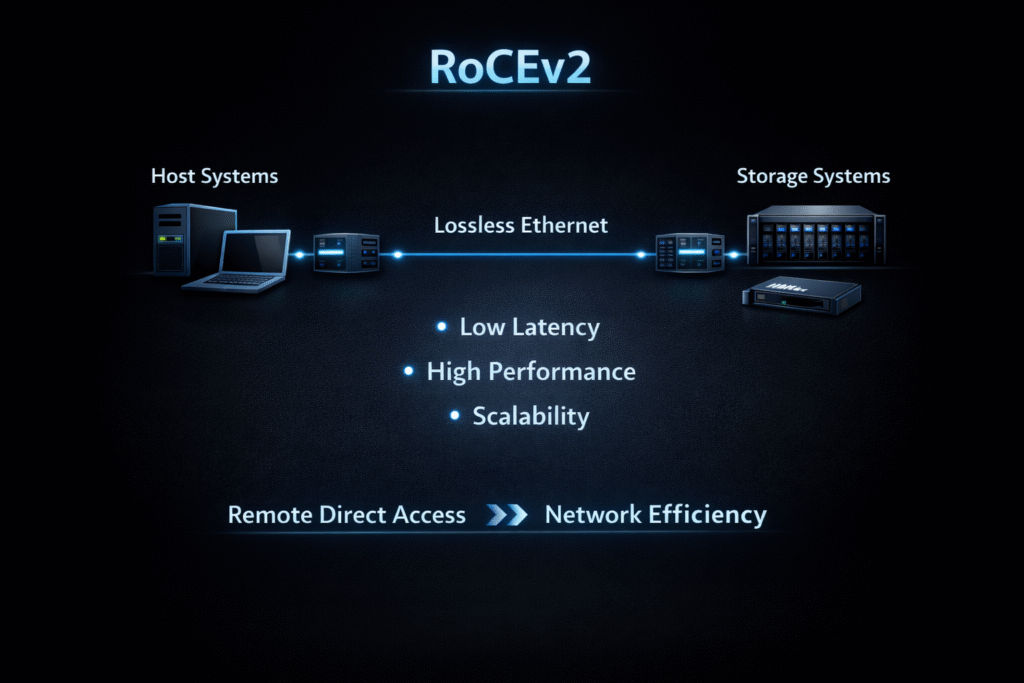

RoCEv2 (RDMA over Converged Ethernet v2) carries Remote Direct Memory Access traffic over standard Ethernet by encapsulating the InfiniBand transport in UDP/IP (UDP destination port 4791), so packets can be routed across L3 boundaries. Teams pick it when they need very low latency and low CPU overhead across a routed data center fabric, especially for NVMe-oF targets, AI clusters, and latency-sensitive databases. You can think of RoCEv2 as “RDMA that fits inside an IP network,” which scales better than L2-only designs such as RoCEv1.

RoCEv2 commonly shows up in storage as NVMe over RoCE, where hosts send NVMe commands over an RDMA-capable Ethernet network. That path competes with NVMe/TCP when teams weigh raw latency against operational simplicity.

Building a RoCEv2 Fabric That Stays Stable Under Load

RoCEv2 rewards good network hygiene. The fabric needs consistent QoS markings, predictable buffering behavior, and congestion controls that keep queues short. Switches and NICs typically rely on Explicit Congestion Notification (ECN) and RoCE congestion control (often DCQCN) to slow senders before queues overflow. Priority Flow Control (PFC) can also make the RDMA traffic class lossless, but misconfigured priorities or buffer thresholds can turn PFC into an outage amplifier.
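The ECN and DCQCN loop has three roles: switches mark packets as queues grow, the receiving NIC turns those marks into Congestion Notification Packets (CNPs), and the sending NIC cuts its rate. The Python sketch below models the switch marking curve and a simplified sender. It follows the rate-cut and alpha-update rules of the published DCQCN design but omits its timers, byte counters, and additive or hyper-increase stages; the Kmin, Kmax, Pmax, and `g` values are illustrative assumptions, not vendor defaults.

```python
from dataclasses import dataclass


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float, pmax: float) -> float:
    """RED-style switch marking curve used with DCQCN-like schemes.

    No marking below Kmin, a linear ramp up to Pmax at Kmax, and always mark above Kmax.
    """
    if queue_kb <= kmin_kb:
        return 0.0
    if queue_kb > kmax_kb:
        return 1.0
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


@dataclass
class DcqcnSender:
    """Simplified DCQCN reaction point (sending NIC). Timers and byte counters are omitted."""

    line_rate_gbps: float
    g: float = 1 / 256  # alpha gain; illustrative value only
    alpha: float = 1.0  # running estimate of congestion severity
    rc: float = 0.0  # current sending rate (Gb/s)
    rt: float = 0.0  # target rate to recover toward (Gb/s)

    def __post_init__(self) -> None:
        self.rc = self.rt = self.line_rate_gbps

    def on_cnp(self) -> None:
        """A CNP arrived: remember the current rate as the target, then cut by alpha/2."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self) -> None:
        """No CNP during the last period: decay alpha and recover halfway to the target."""
        self.alpha *= 1 - self.g
        self.rc = (self.rt + self.rc) / 2


sender = DcqcnSender(line_rate_gbps=100.0)
sender.on_cnp()           # alpha starts at 1.0, so the rate halves from 100 to 50 Gb/s
sender.on_quiet_period()  # fast recovery moves halfway back toward 100 Gb/s
print(sender.rc)          # 75.0
```

The marking curve is why ECN thresholds matter: if Kmin sits too high, queues grow long enough to trigger PFC before any sender slows down.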

To keep results repeatable, standardize these decisions early: which VLANs carry RDMA, how you mark traffic classes, and how you prevent “pause storms.” Locking those down early helps teams avoid late-stage surprises during scale tests.
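Once the standard exists, drift is the usual failure mode. The Python sketch below compares hypothetical, vendor-neutral snapshots of host and switch settings against one reference and reports mismatched RDMA VLANs, DSCP-to-priority mappings, and PFC scope. The `RdmaQosConfig` structure and the DSCP 26 to priority 3 mapping are example assumptions, not any switch or NIC API; in practice you would fill these snapshots from your own inventory tooling.

```python
from dataclasses import dataclass


@dataclass
class RdmaQosConfig:
    """Hypothetical, vendor-neutral snapshot of one host's or switch's RDMA settings."""

    name: str
    rdma_vlan: int
    dscp_to_priority: dict[int, int]
    pfc_priorities: set[int]


def find_drift(reference: RdmaQosConfig, devices: list[RdmaQosConfig], rdma_dscp: int) -> list[str]:
    """Report every device whose RDMA VLAN, DSCP mapping, or PFC scope differs from the reference."""
    expected_prio = reference.dscp_to_priority[rdma_dscp]
    problems = []
    for dev in devices:
        if dev.rdma_vlan != reference.rdma_vlan:
            problems.append(f"{dev.name}: RDMA VLAN {dev.rdma_vlan}, expected {reference.rdma_vlan}")
        prio = dev.dscp_to_priority.get(rdma_dscp)
        if prio != expected_prio:
            problems.append(f"{dev.name}: DSCP {rdma_dscp} -> priority {prio}, expected {expected_prio}")
        # PFC should cover only the RDMA priority; extra lossless classes widen the pause blast radius.
        if dev.pfc_priorities != {expected_prio}:
            problems.append(f"{dev.name}: PFC on {sorted(dev.pfc_priorities)}, expected [{expected_prio}]")
    return problems


# Example values only; substitute your own standard.
ref = RdmaQosConfig("standard", rdma_vlan=100, dscp_to_priority={26: 3}, pfc_priorities={3})
fleet = [
    RdmaQosConfig("host-a", 100, {26: 3}, {3}),
    RdmaQosConfig("host-b", 100, {26: 3}, {3, 4}),
    RdmaQosConfig("leaf-1", 200, {26: 3}, {3}),
]
for line in find_drift(ref, fleet, rdma_dscp=26):
    print(line)
```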

🚀 Deploy RoCEv2-Ready NVMe-oF Storage on Ethernet

Use Simplyblock to run NVMe/RDMA (RoCEv2) alongside NVMe/TCP for Kubernetes Storage with SPDK acceleration.


Where RoCEv2 Fits in Software-defined Block Storage Architectures

RoCEv2 helps most when CPU cycles matter as much as IOPS. RDMA NICs place data directly into registered application buffers and bypass the kernel network stack, so each I/O needs fewer copies, fewer interrupts, and less host CPU. That efficiency matters in Software-defined Block Storage designs that push high parallelism across many volumes and tenants.

Still, RoCEv2 does not win by default. Many platforms standardize on NVMe/TCP for broad deployability, then reserve RoCEv2 for workloads that demand the lowest latency or the highest IOPS per core. That “two-transport” posture also reduces risk when teams operate mixed fleets across bare-metal and virtualized nodes.
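CPU per I/O and IOPS per core are two views of the same number, and the arithmetic shows why a few microseconds per I/O matter at scale. The Python sketch below converts a per-I/O CPU cost into cores consumed and into a per-core IOPS ceiling. The per-I/O costs in the usage lines are hypothetical placeholders for illustration, not measurements of RoCEv2 or NVMe/TCP; plug in figures from your own profiling.

```python
def cores_needed(iops: float, cpu_us_per_io: float) -> float:
    """CPU cores kept busy = I/Os per second x CPU microseconds per I/O / 1e6."""
    return iops * cpu_us_per_io / 1_000_000


def iops_per_core(cpu_us_per_io: float) -> float:
    """Upper bound on I/Os one fully busy core can drive at a given per-I/O cost."""
    return 1_000_000 / cpu_us_per_io


# Hypothetical per-I/O costs for illustration only; measure your own.
for label, cost_us in (("transport A", 2.0), ("transport B", 5.0)):
    print(label, cores_needed(1_000_000, cost_us), iops_per_core(cost_us))
```

At one million IOPS, every extra microsecond of CPU per I/O costs one more fully busy core, which is the budget RDMA offload tries to give back.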

RoCEv2 in Kubernetes Storage Without Creating a Network Silo

In Kubernetes Storage, storage traffic competes with service traffic, east-west app traffic, and telemetry. RoCEv2 can coexist with that mix, but the cluster team must treat it as a first-class network service, not an afterthought.

Kubernetes adds churn. Nodes drain, pods reschedule, and IPs rotate. A RoCEv2 design works best when you automate the network intent and keep the RDMA settings consistent across racks. For many teams, this becomes the dividing line: RoCEv2 fits best when the org already runs disciplined data center networking, and NVMe/TCP fits best when the org wants simpler ops and faster rollout.

RoCEv2 vs NVMe/TCP for NVMe-oF Targets

RoCEv2 usually delivers lower latency and lower host CPU overhead than TCP-based transports. NVMe/TCP often delivers “good enough” latency with easier routing, troubleshooting, and change control on commodity Ethernet. When your storage team needs clean operations across many clusters, NVMe/TCP often wins on time-to-value.

When your platform team needs the smallest tail latency for a narrow set of high-value workloads, RoCEv2 can justify its fabric requirements. Many orgs run both: NVMe/TCP for the broad fleet, and RoCEv2 for the tightest SLOs.
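The “NVMe/TCP by default, RoCEv2 where the SLO demands it” posture reduces to a small placement rule. The Python sketch below is a hypothetical policy function, not a simplyblock or Kubernetes API; the `Workload` fields, the transport labels, and the idea of feeding in a measured NVMe/TCP p99 are assumptions. It also assumes RoCEv2 meets any SLO that NVMe/TCP misses, which you would still need to verify by measurement.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    p99_slo_us: float  # p99 latency target for this workload's volumes, in microseconds
    node_rdma_ready: bool  # node has an RDMA-capable NIC on the tuned RoCEv2 fabric


def pick_transport(w: Workload, measured_tcp_p99_us: float) -> str:
    """Default to NVMe/TCP; reserve NVMe/RDMA for workloads whose SLO TCP cannot meet."""
    if measured_tcp_p99_us <= w.p99_slo_us:
        return "nvme-tcp"
    if w.node_rdma_ready:
        return "nvme-rdma"
    return "nvme-tcp (SLO at risk: no RDMA path)"


# Hypothetical numbers: NVMe/TCP measured at 400 us p99 on this fabric.
fleet = [
    Workload("web-cache", p99_slo_us=1000, node_rdma_ready=False),
    Workload("orders-db", p99_slo_us=200, node_rdma_ready=True),
    Workload("risk-engine", p99_slo_us=200, node_rdma_ready=False),
]
for w in fleet:
    print(w.name, pick_transport(w, measured_tcp_p99_us=400))
```

Surfacing the “SLO at risk” case explicitly keeps a missing RDMA path from silently degrading a tight-SLO workload.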

Measuring RoCEv2 Performance the Right Way

Measure RoCEv2 storage performance with a full-path view: application node, NIC queues, switch behavior, target CPU, and media. Track IOPS and throughput, then focus on p95 and p99 latency because congestion and microbursts show up there first.
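Averages hide exactly the congestion effects this section warns about. The Python sketch below computes nearest-rank percentiles from raw latency samples; the sample data is synthetic and chosen only to show how two slow outliers barely move the mean and p95 but dominate p99. Tools such as fio report their own percentiles, so treat this as an illustration of the calculation rather than a replacement.

```python
import math


def percentile(samples_us: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples_us:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples_us)
    rank = min(len(ordered), max(1, math.ceil(p * len(ordered) / 100)))
    return ordered[rank - 1]


def summarize(samples_us: list[float]) -> dict[str, float]:
    return {
        "mean": sum(samples_us) / len(samples_us),
        "p50": percentile(samples_us, 50),
        "p95": percentile(samples_us, 95),
        "p99": percentile(samples_us, 99),
    }


# Synthetic data: 98 fast I/Os and two slow ones, e.g. from a microburst.
samples = [100.0] * 98 + [900.0, 5000.0]
print(summarize(samples))  # {'mean': 157.0, 'p50': 100.0, 'p95': 100.0, 'p99': 900.0}
```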

Use fio profiles that match real workloads, and keep test inputs stable across runs. Change one thing at a time: queue depth, block size, read/write mix, or number of clients. In Kubernetes, run tests through the same CSI path your apps use so you capture scheduling and network variance.
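The “change one thing at a time” rule is easy to enforce if job files are generated from a single baseline. The Python sketch below renders fio job sections using standard fio options (`ioengine`, `direct`, `rw`, `rwmixread`, `bs`, `iodepth`, `numjobs`, `runtime`, `time_based`, `filename`) and varies exactly one of them per job. The device path and all values are hypothetical. `numjobs` only adds parallelism on one host; varying the number of client hosts needs fio's client/server mode or a separate orchestrator.

```python
BASELINE = {
    "ioengine": "libaio",
    "direct": "1",
    "rw": "randrw",
    "rwmixread": "70",
    "bs": "4k",
    "iodepth": "32",
    "numjobs": "4",
    "runtime": "60",
    "time_based": "1",
    # Hypothetical path; in Kubernetes, a raw block PVC attached to the test pod.
    # Write mixes overwrite this device, so point it at a scratch volume only.
    "filename": "/dev/nvme1n1",
}

# Each sweep varies exactly one parameter; everything else stays at the baseline.
SWEEPS = {
    "iodepth": ["1", "8", "32", "128"],
    "bs": ["4k", "16k", "128k"],
    "rwmixread": ["100", "70", "0"],
    "numjobs": ["1", "4", "16"],
}


def render_job(name: str, params: dict[str, str]) -> str:
    """Render one fio job section in fio's INI-style job file format."""
    body = "\n".join(f"{key}={value}" for key, value in params.items())
    return f"[{name}]\n{body}\n"


def one_factor_sweep(baseline: dict[str, str], sweeps: dict[str, list[str]]) -> dict[str, str]:
    """Return {job_name: job_text}, one job per (parameter, value) pair."""
    jobs = {}
    for key, values in sweeps.items():
        for value in values:
            params = dict(baseline, **{key: value})
            jobs[f"{key}-{value}"] = render_job(f"{key}-{value}", params)
    return jobs


jobs = one_factor_sweep(BASELINE, SWEEPS)
print(jobs["bs-16k"])
```

Each rendered section can be saved as its own `.fio` file and run in sequence, so every result differs from the baseline in one known dimension.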

Operational Moves That Improve RoCEv2 Results

- Set clear traffic classes for RDMA, and keep markings consistent across hosts and switches.

- Configure ECN and the matching RoCE congestion control on NICs, then validate behavior under incast tests.

- Limit PFC to the RDMA class only, and verify buffer thresholds to avoid pause spreading.

- Keep MTU consistent end-to-end, and document it as a change-controlled standard.

- Separate noisy tenants with QoS at the storage layer so one workload cannot dominate queues in Kubernetes Storage.
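The MTU bullet hides a RoCE-specific detail: the RDMA path MTU must be one of five fixed sizes (256 to 4096 bytes), and every packet also carries IP and UDP headers, the 12-byte InfiniBand Base Transport Header (BTH), and a 4-byte ICRC. The Python sketch below computes the largest RDMA MTU that fits a given interface (IP) MTU. It counts base headers only; pass `extra_headers` for operation-specific headers such as the 16-byte RETH on RDMA WRITE packets. It is plain arithmetic, not a query of any NIC driver.

```python
IB_MTUS = (256, 512, 1024, 2048, 4096)  # valid InfiniBand/RoCE path MTU sizes in bytes

# Per-packet overhead that must fit inside the IP MTU, in bytes (base headers only).
IPV4_HDR = 20
IPV6_HDR = 40
UDP_HDR = 8
IB_BTH = 12  # InfiniBand Base Transport Header
IB_ICRC = 4  # Invariant CRC trailer


def max_roce_mtu(ip_mtu: int, ipv6: bool = False, extra_headers: int = 0) -> int:
    """Largest RDMA path MTU whose packets still fit in the given interface MTU."""
    overhead = (IPV6_HDR if ipv6 else IPV4_HDR) + UDP_HDR + IB_BTH + IB_ICRC + extra_headers
    usable = ip_mtu - overhead
    fitting = [m for m in IB_MTUS if m <= usable]
    if not fitting:
        raise ValueError(f"IP MTU {ip_mtu} is too small for RoCEv2")
    return max(fitting)


for mtu in (1500, 4096, 9000):
    print(mtu, max_roce_mtu(mtu))  # 1500 -> 1024, 4096 -> 2048, 9000 -> 4096
```

With a standard 1500-byte MTU only a 1024-byte RDMA MTU fits, which is one reason RoCE fabrics commonly standardize on jumbo frames everywhere RDMA traffic flows.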

RoCEv2 Transport Options Compared

The table below frames RoCEv2 alongside common alternatives that storage and platform teams evaluate when they design Software-defined Block Storage for Kubernetes Storage.

| Option | Routable across L3 | Typical latency | CPU cost per I/O | Ops complexity | Notes |
|---|---|---|---|---|---|
| RoCEv1 | No | Very low | Low | High | L2 scope limits scale in routed fabrics |
| RoCEv2 | Yes | Very low | Low | High | Needs disciplined congestion and QoS design |
| iWARP (RDMA over TCP) | Yes | Low | Medium | Medium | Leans on TCP behavior, simpler fabrics |
| NVMe/TCP (non-RDMA baseline) | Yes | Low | Higher | Low–Medium | Broad deployability on standard Ethernet |

Getting RoCEv2-Grade Outcomes with Simplyblock™

Simplyblock runs a high-performance data path built around SPDK concepts and keeps operations Kubernetes-native. Teams can standardize on NVMe/TCP for broad coverage, then evaluate RDMA options, including RoCEv2, where the latency budget demands it. That approach helps platform teams avoid overbuilding the fabric while still giving executives a path to tighter SLOs.

When multi-tenancy matters, simplyblock adds volume-level controls that reduce noisy-neighbor impact, which helps stabilize p99 latency even as clusters grow.
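Volume-level QoS is often built on a token bucket, which caps a volume's sustained IOPS while still allowing short bursts. The Python sketch below is a generic token-bucket limiter offered only to illustrate the technique; it is not simplyblock's implementation, and the rate and burst values are hypothetical. The optional `now` argument lets the example run deterministically without real sleeps.

```python
import time
from typing import Optional


class TokenBucket:
    """Generic per-volume IOPS limiter: refills at rate_iops tokens/s, holds at most burst tokens."""

    def __init__(self, rate_iops: float, burst: float, now: Optional[float] = None) -> None:
        self.rate = rate_iops
        self.capacity = burst
        self.tokens = burst  # start full so a quiet volume can absorb a short burst
        self.last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Admit one I/O if a token is available; otherwise the caller queues or delays it."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical limits: a noisy tenant capped at 1,000 IOPS with a burst of 10.
noisy = TokenBucket(rate_iops=1000, burst=10, now=0.0)
print(sum(noisy.allow(now=0.0) for _ in range(50)))    # 10: the burst drains
print(sum(noisy.allow(now=0.005) for _ in range(50)))  # 5: 5 ms of refill at 1,000/s
```

Because the noisy volume stalls on its own bucket, its excess requests wait at the storage layer instead of filling shared NIC and switch queues, which is what protects other tenants' p99.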

Future Directions and Advancements in RoCEv2

Data center teams keep pushing RoCEv2 toward larger routed domains with better congestion control defaults and clearer operational playbooks. DPUs and SmartNICs also shift work away from host CPUs, which makes RDMA transport choices more about fabric readiness than raw compute budget.

As those offloads mature, more teams will treat RDMA as a selectable “performance lane” inside Kubernetes Storage, rather than a separate, special-purpose stack.

Related Terms

Teams often review these glossary pages alongside RoCEv2 when they plan NVMe-oF transports for Kubernetes Storage and Software-defined Block Storage.

NVMe over RoCE

NVMe/RDMA

SmartNIC

Zero-Copy I/O

Questions and Answers

RoCEv2 (RDMA over Converged Ethernet v2) enables direct memory access between servers over standard, routable Ethernet networks. The NIC handles the transport, bypassing the kernel network stack and avoiding TCP entirely, which reduces latency and CPU usage and improves throughput, especially for high-performance storage such as NVMe-oF.

Yes, RoCEv2 offers significantly lower latency and CPU overhead compared to iSCSI. While iSCSI is widely supported, RoCEv2 is ideal for environments that demand ultra-fast data access, such as distributed databases and real-time analytics.

RoCEv2 is one of the RDMA transports for NVMe over Fabrics; the NVMe-oF specifications also define TCP and Fibre Channel transports. While NVMe/TCP is easier to deploy, RoCEv2 offers lower latency and higher IOPS for latency-critical Kubernetes workloads.

RoCEv2 delivers better performance, but it depends on a carefully tuned fabric, typically lossless Ethernet with PFC, ECN-based congestion control, or both, which adds complexity. NVMe over TCP is more flexible and easier to scale, which makes it preferable in most cloud-native deployments despite slightly higher latency.

Yes, modern DPUs like NVIDIA BlueField support RoCEv2 offloads, enabling hardware acceleration for both networking and NVMe-oF target processing. This boosts performance while reducing host CPU usage in disaggregated architectures.