SPDK Target

Terms related to simplyblock

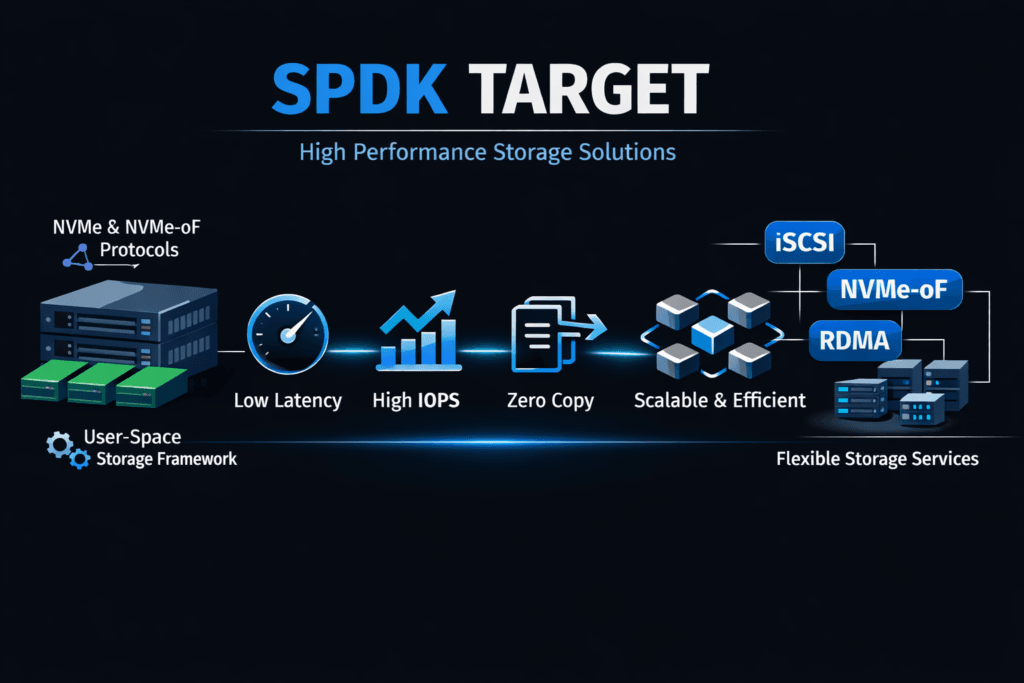

An SPDK Target is a user-space storage target that serves block I/O to remote hosts using NVMe-oF transports, including NVMe/TCP. It runs the data path outside the kernel, which cuts context switches and extra copies. That design helps teams push more IOPS per core and keep p99 latency steadier under load.

In practical terms, an SPDK Target matters when the CPU becomes the bottleneck before SSDs do. It also matters when you run multi-tenant platforms and want predictable behavior during bursts, rebuilds, and mixed traffic.

What makes a target “SPDK-based” in production

A target has two jobs: it exports namespaces, and it turns network requests into local device I/O. With SPDK, the target keeps that fast path in the user space and uses polling and tight CPU affinity to avoid random latency spikes that come from interrupts and scheduler noise.

This approach fits Software-defined Block Storage because the control plane can manage pools, placement, and QoS policies while the SPDK fast path handles high-rate I/O. When those two layers work together, the platform can scale without “mystery jitter” as node count grows.

🚀 Cut Storage CPU Overhead in Kubernetes

Use simplyblock to run Software-defined Block Storage with an SPDK dataplane and predictable QoS.

👉 See simplyblock SPDK + NVMe-oF Features →

SPDK Target in Kubernetes Storage Environments

Kubernetes Storage adds churn. Pods restart, nodes drain, and volume attach and mount steps happen all day. A storage backend needs a clean operational model, not just raw throughput.

An SPDK Target fits Kubernetes when the platform wraps it with CSI automation, stable discovery, and clear failure handling. The target should reconnect fast after a node event, and it should keep paths consistent when the scheduler moves a workload. Multi-tenancy also becomes non-negotiable in shared clusters. Without QoS, one batch job can distort latency for the busiest database pods.

SPDK Target and NVMe/TCP data paths

An SPDK Target commonly exposes storage over NVMe/TCP because NVMe/TCP runs on standard Ethernet and fits most ops teams. It also gives a clean upgrade path. Teams can start on TCP, then add RDMA-capable pools for the strictest latency targets later, without rebuilding their storage model.

The target still needs good “plumbing” to deliver results. CPU pinning, NUMA awareness, and NIC queue alignment often decide whether the platform holds p99 latency under pressure. If the storage layer ignores those details, the system looks fast in a lab and unstable in production.

Measuring SPDK Target performance the right way

A good test plan answers one question: Does the platform hit the latency target while it sustains the needed throughput under real constraints?

Run mixed read/write tests, include small blocks, and track p50, p95, and p99 latency. Then repeat the same tests during failure and recovery events, because rebuild work often exposes weak isolation. In Kubernetes Storage, measure through real PVCs and StorageClasses so you include CSI, networking, and scheduling effects.

Practical tuning levers for lower CPU cost and tighter latency

Use these knobs to improve results without guessing:

- Pin target threads and align them with NIC queues to reduce jitter at high concurrency.

- Keep MTU and congestion behavior consistent across racks, so bursts do not create random tail spikes.

- Use multipathing with clear policies, so a single link loss does not cause a cliff in latency.

- Apply QoS per tenant or tier, so scans do not choke log writes in shared clusters.

- Re-test during rebuilds, because steady-state numbers hide the worst behavior.

Architecture comparison for storage targets

The table below shows how common target approaches compare when you care about CPU efficiency, multi-tenant consistency, and ops fit.

| Target approach | Data path | CPU efficiency | Tail-latency control | Best fit |

|---|---|---|---|---|

| Kernel-based target | Kernel I/O stack | Medium | Medium | Simple, low-scale systems |

| User-space SPDK Target | User space, polled fast path | High | High | Performance-critical clusters |

| Proprietary array target | Vendor-controlled stack | Varies | Varies | Legacy SAN-centric ops |

| Software-defined Block Storage with SPDK dataplane | Control plane + SPDK fast path | High | High | Kubernetes-first, scale-out platforms |

Predictable performance with simplyblock™ SPDK dataplane

Simplyblock™ uses an SPDK-based, user-space, zero-copy dataplane to reduce overhead and keep CPU use efficient under load. That design supports NVMe/TCP, and it aligns well with Kubernetes Storage, where concurrency, churn, and multi-tenant traffic come standard.

As Software-defined Block Storage, simplyblock adds the control-plane features that a raw target does not cover on its own. Teams can run hyper-converged, disaggregated, or hybrid layouts, then apply QoS and policy at the volume level to protect p99 latency across tenants.

Where SPDK Target designs are heading

Targets keep moving closer to the network. DPUs and SmartNICs can take on more storage protocol work, which frees the host CPU for applications and improves consolidation.

At the same time, teams want better observability that ties latency spikes to specific causes, such as queue contention, noisy neighbors, or rebuild pacing. Platforms that combine a low-overhead fast path with strong policy controls will set the pace in large Kubernetes estates.

Related Terms

Teams review these terms when they design an SPDK Target for Kubernetes Storage and Software-defined Block Storage.

Questions and Answers

An SPDK target is a user-space storage service that presents NVMe devices to remote clients over fabrics like TCP or RDMA. Unlike kernel targets, it uses polling and CPU pinning to reduce latency and boost IOPS—key for NVMe over TCP performance in modern storage stacks.

The SPDK target eliminates kernel bottlenecks by processing I/O entirely in user space using DPDK for networking and a lockless architecture. This delivers consistent, ultra-low latency—ideal for software-defined storage platforms operating at scale with NVMe/TCP.

SPDK targets support NVMe-oF protocols, including TCP and RDMA. NVMe over TCP is most commonly used due to its standard Ethernet support and scalability, while RDMA offers lower latency in specialized environments with lossless networking.

Yes, SPDK targets can expose high-performance NVMe volumes to Kubernetes clusters via NVMe-oF. When paired with a CSI-compatible orchestrator like Simplyblock, they enable multi-tenant, persistent storage with low latency and dynamic provisioning.

While Simplyblock does not publicly document SPDK usage, its NVMe over TCP architecture delivers similar outcomes—high IOPS, low latency, and kernel bypass. Features like per-volume encryption and replication echo capabilities are typically built on SPDK-style backends.