Stateful Application in Kubernetes


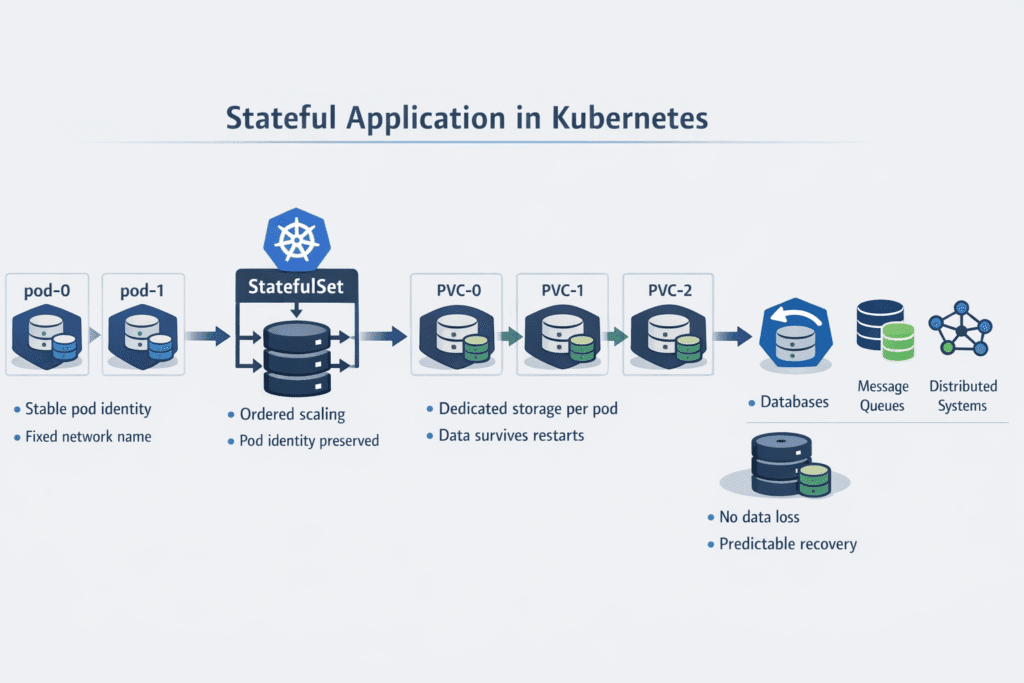

A stateful application in Kubernetes stores data that must survive pod restarts, node drains, and rolling updates. Databases, message queues, and search engines fit this model because they depend on durable storage and stable identity. Kubernetes supports these workloads with StatefulSets plus persistent volumes, so each replica keeps its own name and disk over time.

Stateful apps also amplify storage problems. A small p99 spike can slow queries, trigger retries, and stretch recovery, even when the pods look “Ready.”

What “Stateful” Really Means Day to Day

A stateful workload expects the same data to appear after every restart. It often needs an ordered startup and a stable network name, especially for primary/replica systems. Kubernetes StatefulSets exist for exactly that: they keep a sticky identity for each pod and pair it with persistent storage.
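To make the sticky identity concrete, here is a minimal Python sketch of how StatefulSet pod names and per-pod DNS names are derived. It assumes default ordinals starting at 0, a headless Service, and the default `cluster.local` cluster domain. The names `pg`, `pg-headless`, and `db` are hypothetical.

```python
def stateful_pod_identities(name, service, namespace, replicas,
                            cluster_domain="cluster.local"):
    """Return (pod_name, dns_name) pairs, one per StatefulSet ordinal.

    Assumes ordinals start at 0 and that `service` is the headless
    Service named in the StatefulSet's spec.serviceName.
    """
    identities = []
    for ordinal in range(replicas):
        pod = f"{name}-{ordinal}"
        dns = f"{pod}.{service}.{namespace}.svc.{cluster_domain}"
        identities.append((pod, dns))
    return identities


# Hypothetical example: a three-replica database in namespace "db".
for pod, dns in stateful_pod_identities("pg", "pg-headless", "db", 3):
    print(pod, dns)
```

Because these names do not change when a pod is rescheduled, replicas can keep pointing at the primary by name rather than by IP address.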

Once you treat storage as part of the app, you avoid most production surprises. The platform can reschedule pods, but the data path must stay predictable.


Keeping Identity and Disks Stable When Pods Move

StatefulSets usually create a PVC per replica through a volume claim template. Kubernetes then reattaches the right volume when it recreates or moves the pod. This pattern protects you from “wrong disk” issues during upgrades and node maintenance.
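The per-replica PVC pattern can be sketched as follows. This Python example builds a StatefulSet manifest as a plain dictionary (it can be dumped to JSON, which `kubectl apply -f` accepts) and lists the PVC names Kubernetes would derive from the claim template. The image, mount path, and the `fast-nvme` StorageClass name are placeholder assumptions, not values from any real deployment.

```python
import json


def build_statefulset(name, service, replicas, storage_class, size):
    """Build a minimal StatefulSet manifest with one volume claim template."""
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": service,
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [{
                        "name": name,
                        # Hypothetical image and mount path.
                        "image": "registry.example.com/db:1.0",
                        "volumeMounts": [
                            {"name": "data", "mountPath": "/var/lib/data"}
                        ],
                    }],
                },
            },
            # One PVC is created from this template for every replica.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


def pvc_names(manifest):
    """PVCs are named <template name>-<statefulset name>-<ordinal>."""
    spec = manifest["spec"]
    sts = manifest["metadata"]["name"]
    return [
        f"{tpl['metadata']['name']}-{sts}-{ordinal}"
        for tpl in spec["volumeClaimTemplates"]
        for ordinal in range(spec["replicas"])
    ]


manifest = build_statefulset("pg", "pg-headless", 3, "fast-nvme", "20Gi")
print(json.dumps(manifest, indent=2))
print(pvc_names(manifest))
```

Since the PVC name is tied to the pod's ordinal, pod `pg-1` binds to `data-pg-1` again after a reschedule, which is what prevents the "wrong disk" case.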

Dynamic provisioning makes this easier at scale. A StorageClass + CSI driver can create new volumes on demand as you scale replicas up.
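As a rough illustration, the sketch below builds a StorageClass manifest in Python. The provisioner `csi.example.com` and the class name `fast-nvme` are hypothetical; a real CSI driver documents its own provisioner name and parameters. The `Retain` reclaim policy and `WaitForFirstConsumer` binding mode are conservative choices for this example, not requirements.

```python
import json


def build_storage_class(name, provisioner, parameters=None):
    """Build a StorageClass manifest for dynamic provisioning."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,          # CSI driver name (assumed here)
        "parameters": parameters or {},      # driver-specific, left empty
        "reclaimPolicy": "Retain",           # keep the volume if the PVC is deleted
        "allowVolumeExpansion": True,        # allow growing PVCs later
        # Delay binding until a pod is scheduled, so the volume is
        # created where the pod can actually reach it.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


storage_class = build_storage_class("fast-nvme", "csi.example.com")
print(json.dumps(storage_class, indent=2))
```

A StatefulSet's volume claim template then only needs to reference the class by name, and each new replica gets a freshly provisioned volume.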

Stateful Application in Kubernetes and the Storage Contract

A stateful app requires a clear storage contract, including latency targets, durability rules, and recovery behavior. Access modes also matter, as they determine whether a volume can be attached to one node or multiple nodes. Picking the wrong mode can block scaling or cause attach errors when a pod moves.
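The four Kubernetes access modes can be summarized in a small lookup table. The helper below is an illustrative Python sketch, and the function name is an assumption made for this example. It answers one question: can pods on different nodes share this volume?

```python
# Kubernetes access modes and what they permit.
ACCESS_MODES = {
    "ReadWriteOnce":    {"multi_node": False, "writable": True},   # one node
    "ReadOnlyMany":     {"multi_node": True,  "writable": False},  # many nodes, read-only
    "ReadWriteMany":    {"multi_node": True,  "writable": True},   # many nodes
    "ReadWriteOncePod": {"multi_node": False, "writable": True},   # one pod only
}


def can_share_across_nodes(access_modes, need_write):
    """Return True if any listed mode lets pods on several nodes use the volume."""
    for mode in access_modes:
        props = ACCESS_MODES[mode]  # raises KeyError on an unknown mode
        if props["multi_node"] and (props["writable"] or not need_write):
            return True
    return False


print(can_share_across_nodes(["ReadWriteOnce"], need_write=True))   # False
print(can_share_across_nodes(["ReadWriteMany"], need_write=True))   # True
```

Most databases run one `ReadWriteOnce` volume per replica, so the check mainly matters when several pods are expected to mount the same claim.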

Set expectations early with a simple SLO: “p99 under X ms” plus “recover in Y minutes.” That makes storage choices much easier.
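One way to keep such an SLO honest is to write it down as data and check measurements against it. The Python sketch below assumes a hypothetical `orders-db` service, with made-up targets of 5 ms and 10 minutes standing in for X and Y.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageSLO:
    """A per-service storage SLO: a latency target plus a recovery target."""
    service: str
    p99_latency_ms: float
    recovery_minutes: float

    def is_met(self, observed_p99_ms, observed_recovery_minutes):
        """True only when both observations are at or under their targets."""
        return (observed_p99_ms <= self.p99_latency_ms
                and observed_recovery_minutes <= self.recovery_minutes)


# Hypothetical targets and measurements.
slo = StorageSLO("orders-db", p99_latency_ms=5.0, recovery_minutes=10.0)
print(slo.is_met(observed_p99_ms=3.2, observed_recovery_minutes=7.5))  # True
print(slo.is_met(observed_p99_ms=8.9, observed_recovery_minutes=7.5))  # False
```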

NVMe/TCP Data Paths for Low-Latency State

Many stateful apps feel tail latency more than peak throughput. NVMe/TCP can help because it carries the NVMe block protocol over ordinary TCP/IP Ethernet networks, and it fits disaggregated designs where compute and storage scale separately.

Even with fast transport, contention still hurts. QoS and tenant isolation keep one noisy workload from dragging down the rest of the cluster.

Benchmarking Stateful Workloads the Right Way

Test what your app will actually do. Use the real block sizes, real concurrency, and real background tasks like snapshots, rebuilding, or rebalancing. Then track p95/p99 latency, throughput, and “time to steady” after a forced pod reschedule.
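The two measurements named above can be computed from raw samples with a few lines of Python. This is a sketch under stated assumptions: it uses the nearest-rank percentile method, and it defines "time to steady" as the start of the first run of consecutive measurement windows whose p99 is at or under a threshold. Both the definition and the sample numbers are illustrative, not a standard.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of latency samples (pct in 0..100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def time_to_steady(windows, threshold_ms, stable_windows=3):
    """Seconds after a reschedule until p99 stays at or under the threshold.

    `windows` is a time-ordered list of (seconds_since_reschedule, p99_ms).
    Returns the start time of the first run of `stable_windows` consecutive
    good windows, or None if latency never settles.
    """
    run_start, run_length = None, 0
    for seconds, p99_ms in windows:
        if p99_ms <= threshold_ms:
            if run_length == 0:
                run_start = seconds
            run_length += 1
            if run_length >= stable_windows:
                return run_start
        else:
            run_length = 0
    return None


# Made-up p99 values per 10-second window after a forced pod reschedule.
windows = [(0, 40.0), (10, 12.0), (20, 4.0), (30, 30.0),
           (40, 4.0), (50, 3.0), (60, 4.0)]
print(time_to_steady(windows, threshold_ms=5.0))  # 40
```

Requiring several consecutive good windows avoids declaring recovery on a single quiet interval, such as the dip at 20 seconds in the sample data.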

A benchmark that avoids failure paths lies to you. Add node drain and node loss tests, then watch how quickly the app returns to normal.

Operational Checklist for Stable Stateful Storage

- Define latency + recovery SLOs per service (not one generic “fast storage” goal).

- Pick a StorageClass that matches write rate, growth, and failure domain needs.

- Use QoS limits so one namespace can’t spike everyone’s p99.

- Test node drain, reschedule, and restore in staging, then record the results.

- Validate backup and restore workflows with real data sizes and real load.

Storage Choices for Stateful Apps

This table helps you choose storage for a stateful application in Kubernetes based on latency stability, failover behavior, and day-2 ops. Use it when you plan upgrades, node drains, and recovery tests.

| Storage approach | What you get | Common pain point | Best fit |
|---|---|---|---|
| Node-local disks (Local PV) | Lowest latency | Pod pins to a node; recovery depends on app replicas | Edge, caches, or apps with strong replication |
| Hyper-converged block | Local-ish speed + shared ops | Rebuild/resync can hit p99 | Small to mid clusters with steady growth |
| Disaggregated network block (NVMe/TCP) | Pod mobility + scalable pools | Needs QoS to avoid contention tails | Most production StatefulSets |
| Managed cloud volumes | Easy ops | Tier variance + attach/zone limits | Convenience-first cloud teams |

Stateful Application in Kubernetes with Predictable Performance Using Simplyblock

Simplyblock targets stateful Kubernetes workloads with NVMe-first, Kubernetes-native storage and day-2 controls. Its positioning focuses on keeping latency stable while you scale, drain nodes, and run background work.

If you want predictable outcomes, lock down the basics first: isolation, QoS, and repeatable backup/restore runs. After that, tuning becomes much easier.

What’s Next for Stateful Kubernetes Design

Teams now ship “stateful templates” that bundle StorageClasses, snapshot policies, and SLO dashboards with the app. More clusters also mix hyper-converged nodes for local speed and disaggregated storage for shared scale.

As tooling improves, stateful apps will feel less fragile. Platform teams will treat storage like an API with clear limits, not a mystery box.

Related Terms

Teams often review these glossary pages alongside Stateful Application in Kubernetes when they plan stable rollouts, volume lifecycles, and predictable scaling.

- Kubernetes StatefulSet

- Kubernetes Block Storage

- Dynamic Provisioning in Kubernetes

- Persistent Volume (PV)

- Kubernetes PodDisruptionBudget for Storage

- Kubernetes StatefulSet VolumeClaimTemplates

Questions and Answers

Stateful applications in Kubernetes use StatefulSets, PersistentVolumeClaims, and CSI drivers to keep a stable pod identity, stable network names, and persistent data across pod restarts, which is critical for databases and message queues.

Stateful workloads require persistent, high-performance volumes. Kubernetes provisions them through a StorageClass and a CSI driver, backed by storage that supports dynamic provisioning, replication, and data durability.

NVMe over TCP offers low latency and high IOPS over standard Ethernet, which suits stateful workloads like PostgreSQL or MongoDB that demand consistent and fast access to persistent volumes.

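Stateful apps use CSI-based snapshots or external tools like Velero for backup. The CSI snapshot controller, together with a CSI driver that supports snapshots, enables point-in-time recovery by capturing snapshots of persistent volumes. These snapshots are typically crash-consistent, so flush or quiesce the application first when you need an application-consistent copy.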
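For reference, a CSI snapshot request is just another Kubernetes object. The Python sketch below builds a `VolumeSnapshot` manifest for one PVC. The snapshot name, the PVC name `data-pg-0`, and the class name `csi-snapclass` are hypothetical, and the cluster is assumed to have the snapshot CRDs and controller installed.

```python
import json


def build_volume_snapshot(name, pvc_name, snapshot_class):
    """Build a VolumeSnapshot manifest that snapshots a single PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


snapshot = build_volume_snapshot("pg-0-nightly", "data-pg-0", "csi-snapclass")
print(json.dumps(snapshot, indent=2))
```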

Stateless apps don’t retain data between sessions, while stateful apps rely on persistent data. Running stateful apps requires careful storage planning, often with software-defined storage, to ensure high availability and data integrity.