Storage Composability


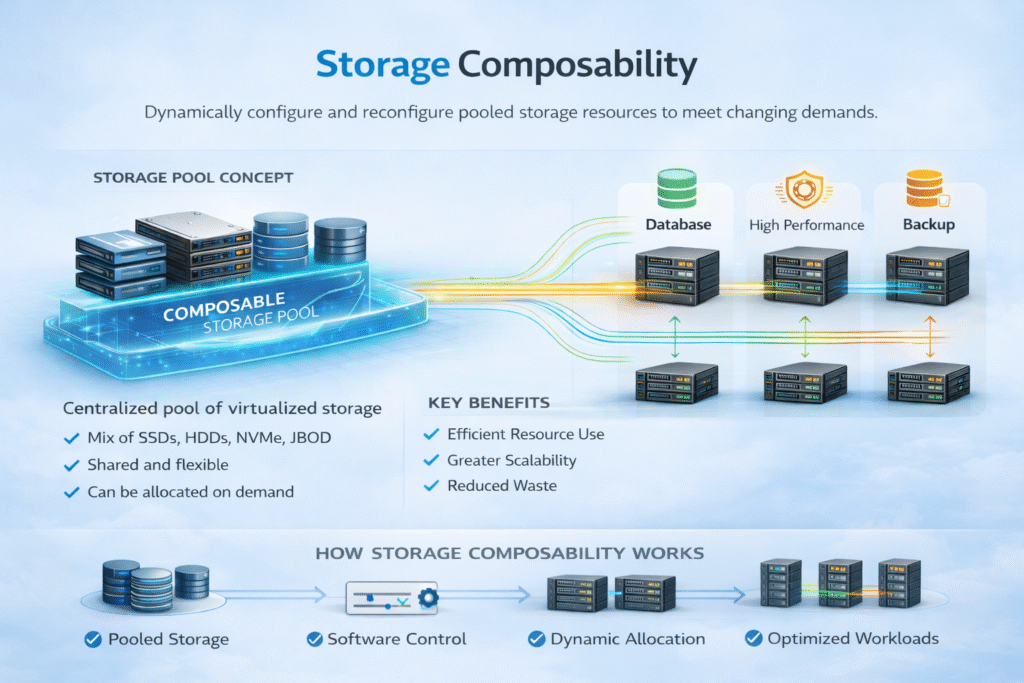

Storage composability is the ability to assemble, change, and reuse storage resources through software, without swapping hardware or rewriting applications. In practice, teams pool NVMe and other SSD capacity, expose it as Software-defined Block Storage, and allocate the right performance and protection profile per workload.

Executives care because composability reduces stranded capacity, speeds up provisioning, and lowers refresh risk. Platform teams care because composability turns storage into an API-driven service with clear guardrails for multi-tenancy, QoS, and failover.

Storage composability often shows up alongside disaggregated designs. Compute scales on one curve, and storage scales on another. That separation helps you avoid overbuying nodes just to get more capacity, and it also supports a SAN alternative that behaves like cloud primitives on baremetal.
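The "overbuying nodes" effect is easiest to see with a little sizing arithmetic. The sketch below assumes hypothetical node shapes (32-core / 20 TB hyper-converged nodes, 32-core compute nodes, 60 TB storage nodes) and only counts nodes; it ignores protection overhead, headroom, and pricing.

```python
import math

# Hypothetical node shapes, chosen only to illustrate the sizing arithmetic.
HCI_CORES, HCI_TB = 32, 20      # hyper-converged node: compute and capacity together
COMPUTE_CORES = 32              # disaggregated compute node
STORAGE_TB = 60                 # disaggregated storage node


def hyperconverged_nodes(cores_needed: int, tb_needed: float) -> int:
    # Each node brings both cores and capacity, so the larger requirement sets the count.
    return max(math.ceil(cores_needed / HCI_CORES), math.ceil(tb_needed / HCI_TB))


def disaggregated_nodes(cores_needed: int, tb_needed: float) -> tuple[int, int]:
    # Compute and storage are sized on independent curves.
    return math.ceil(cores_needed / COMPUTE_CORES), math.ceil(tb_needed / STORAGE_TB)


if __name__ == "__main__":
    cores, tb = 64, 300
    hci = hyperconverged_nodes(cores, tb)
    compute, storage = disaggregated_nodes(cores, tb)
    print(f"hyper-converged: {hci} nodes, {hci * HCI_CORES - cores} idle cores")
    print(f"disaggregated: {compute} compute + {storage} storage nodes")
```

With 64 cores and 300 TB of demand, the capacity requirement forces 15 hyper-converged nodes and leaves 416 cores idle, while the disaggregated layout needs 2 compute and 5 storage nodes.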

Making Storage Resources Composable with Software Control

Modern composable stacks rely on three building blocks – a control plane that enforces policy, a fast data path that keeps latency stable, and a fabric that scales without special handling. When these parts line up, operators can provision volumes in minutes, move them across hosts, and apply the same rules in dev, test, and production.

You get the best outcomes when composability pairs with storage virtualization and automation. A good platform abstracts media details, standardizes storage classes, and keeps placement decisions close to workload intent. It also exposes cost drivers, such as replication level, erasure coding, and tiering, so finance and operations can share the same model.
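Replication level and erasure coding show up in cost mainly as the ratio of raw to usable capacity. A minimal sketch of that ratio follows; it deliberately ignores metadata, spare capacity, and rebuild traffic, and it does not describe any particular product's protection scheme. Erasure coding typically trades lower raw capacity for more CPU and network work on writes and rebuilds.

```python
def raw_per_usable_replication(copies: int) -> float:
    # n-way replication stores every byte n times.
    return float(copies)


def raw_per_usable_ec(data_chunks: int, parity_chunks: int) -> float:
    # k+m erasure coding stores k data chunks plus m parity chunks per stripe.
    return (data_chunks + parity_chunks) / data_chunks


if __name__ == "__main__":
    usable_tb = 100
    schemes = [
        ("3-way replication", raw_per_usable_replication(3)),  # survives 2 lost copies
        ("EC 4+2", raw_per_usable_ec(4, 2)),                    # survives 2 lost chunks
        ("EC 8+2", raw_per_usable_ec(8, 2)),                    # survives 2 lost chunks
    ]
    for label, factor in schemes:
        print(f"{label}: {usable_tb * factor:.0f} TB raw for {usable_tb} TB usable")
```

All three schemes tolerate two failures, yet they need 300, 150, and 125 TB of raw media for the same 100 TB usable.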

For cloud-native shops, composability works best when it integrates with Kubernetes APIs instead of adding a second workflow. That means CSI alignment, predictable behavior during node drains, and clean observability of p95 and p99 latency.


Storage Composability Across Kubernetes Storage Clusters

In Kubernetes Storage, composability becomes a scheduling problem as much as a capacity problem. Pods move. Nodes roll. Storage must keep up without turning every upgrade into a maintenance event.

Composable designs usually map to one of three patterns. Hyper-converged storage keeps data services on worker nodes for short I/O paths and simple footprint sizing. Disaggregated storage runs on dedicated storage nodes and serves volumes over the network for better pooling. Hybrid designs mix both: hot volumes stay on local NVMe close to the workloads that use them, while cold capacity is pooled across the cluster.
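The hybrid rule can be expressed as a small placement function. This is a sketch under assumptions: the `"hot"`/`"cold"` labels, the `place` function, and the per-node free-capacity map are hypothetical and do not represent any product's scheduler.

```python
from dataclasses import dataclass


@dataclass
class VolumeRequest:
    name: str
    size_gib: int
    tier: str  # hypothetical labels: "hot" or "cold"


def place(req: VolumeRequest, node: str, local_free_gib: dict[str, int]) -> str:
    """Hybrid rule: hot volumes land on local NVMe of the consuming node when it has
    room; cold volumes, and hot volumes that do not fit locally, go to the shared pool."""
    if req.tier == "hot" and local_free_gib.get(node, 0) >= req.size_gib:
        local_free_gib[node] -= req.size_gib  # reserve local capacity
        return f"local:{node}"
    return "pool"


if __name__ == "__main__":
    free = {"worker-1": 500}
    for req in [VolumeRequest("orders-db", 400, "hot"),
                VolumeRequest("inventory-db", 400, "hot"),
                VolumeRequest("app-logs", 1000, "cold")]:
        print(req.name, "->", place(req, "worker-1", free))
```

Here `orders-db` lands locally, while `inventory-db` overflows to the pool because only 100 GiB of local NVMe remains.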

Composability also helps you standardize storage classes for different application tiers. A platform team can offer “low-latency OLTP,” “throughput analytics,” and “cost-optimized logs,” and keep the rules consistent across clusters and regions.
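One way to keep those tiers consistent across clusters is to generate the StorageClass objects from a single policy table. In this sketch the provisioner `csi.example.com`, the parameter keys (`maxIops`, `maxMBps`, `replicas`), and the numbers are placeholders; a real CSI driver documents its own parameter names. The surrounding StorageClass fields are standard Kubernetes.

```python
import json

# Placeholder CSI driver name and parameter keys; a real driver documents its own.
PROVISIONER = "csi.example.com"

# Illustrative tier policies, not tuned recommendations.
TIERS = {
    "low-latency-oltp": {"maxIops": 50000, "maxMBps": 800, "replicas": 3},
    "throughput-analytics": {"maxIops": 20000, "maxMBps": 4000, "replicas": 2},
    "cost-optimized-logs": {"maxIops": 2000, "maxMBps": 200, "replicas": 2},
}


def storage_class(name: str, policy: dict) -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        # StorageClass parameters must be string-to-string.
        "parameters": {key: str(value) for key, value in policy.items()},
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",
        # Delay binding until a pod is scheduled so placement can follow the workload.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


manifests = [storage_class(name, policy) for name, policy in TIERS.items()]

if __name__ == "__main__":
    # kubectl accepts JSON manifests, e.g. `kubectl apply -f tiers.json`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Keeping the table in version control and applying it to every cluster is what keeps the rules identical across regions.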

Storage Composability with NVMe/TCP as the Fabric

NVMe/TCP matters because it turns composable storage into a fabric service over standard Ethernet. You keep NVMe command semantics, and you avoid the operational cost of specialized networking in many environments. For organizations that run mixed workloads, NVMe/TCP can serve as the default transport, while NVMe/RDMA can remain a performance tier for select cases.
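On a Linux host, attaching an NVMe/TCP namespace comes down to an `nvme-cli` `connect` call with a transport, target address, service port, and subsystem NQN. The sketch below only builds that command; the address (from the 192.0.2.0/24 documentation range) and the NQN are hypothetical. In Kubernetes, a CSI node plugin typically performs the equivalent step.

```python
import ipaddress
import shlex

NVME_TCP_PORT = 4420  # conventional NVMe/TCP service port for I/O controllers


def nvme_tcp_connect_argv(traddr: str, subnqn: str, port: int = NVME_TCP_PORT) -> list[str]:
    """Build (but do not run) an nvme-cli command that attaches an NVMe/TCP namespace."""
    ipaddress.ip_address(traddr)  # raises ValueError for a malformed address
    if not subnqn.startswith("nqn."):
        raise ValueError("subsystem NQN should start with 'nqn.'")
    return ["nvme", "connect", "-t", "tcp", "-a", traddr, "-s", str(port), "-n", subnqn]


if __name__ == "__main__":
    argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2023-01.com.example:vol-orders")
    print(shlex.join(argv))
```

Running the command for real would need root, the `nvme-tcp` kernel module, and something like `subprocess.run(argv, check=True)`.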

When composability uses NVMe-oF, the network becomes part of the storage design. Teams should track latency, packet loss, and congestion behavior, and they should treat p99 latency as a first-class SLO. CPU cost also matters, especially at high queue depth. A user-space path built around SPDK-style polling can reduce overhead and help keep performance steady under load.

Testing Composable Storage for Tail-Latency Stability

Benchmark composable storage the way production hits it. Run steady-state tests, burst tests, and mixed-workload tests with noisy neighbors. Track IOPS, throughput, average latency, and tail latency. Also track CPU per I/O, because a system that burns cores can look fine in a lab and then fall over when the cluster gets busy.
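A minimal sketch of the metrics above, computed from per-I/O latency samples and a measured CPU time. It uses the nearest-rank percentile definition; benchmarking tools may interpolate instead, so their numbers can differ slightly.

```python
import math


def percentile(samples_us: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples_us:
        raise ValueError("no samples")
    ordered = sorted(samples_us)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples_us: list[float], cpu_core_seconds: float, wall_seconds: float) -> dict:
    ios = len(samples_us)
    return {
        "iops": ios / wall_seconds,
        "avg_us": sum(samples_us) / ios,
        "p95_us": percentile(samples_us, 95),
        "p99_us": percentile(samples_us, 99),
        # CPU time (in microseconds) consumed per completed I/O.
        "cpu_us_per_io": cpu_core_seconds * 1e6 / ios,
    }


if __name__ == "__main__":
    # 98 fast I/Os and 2 slow ones: the average looks fine, p99 does not.
    samples = [100.0] * 98 + [5000.0] * 2
    print(summarize(samples, cpu_core_seconds=0.05, wall_seconds=1.0))
```

In this example the average is 198 µs and p95 is 100 µs, but p99 is 5,000 µs, which is exactly the kind of tail an average hides.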

For Kubernetes, include operational events in your benchmark plan. Volume attach and detach time, reschedules, node drains, and rolling upgrades can change the I/O profile. A good test plan also checks multi-tenant behavior, because performance isolation often decides whether composability works at enterprise scale.
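To fold operational events into the results, one approach is to tag latency samples by whether they fall inside a drain, reschedule, or upgrade window and compare tail latency inside and outside those windows. The sketch assumes timestamped samples and known event windows; the 2x regression threshold is an arbitrary example, not a standard.

```python
import math


def p99(values: list[float]) -> float:
    if not values:
        raise ValueError("no samples in this window")
    ordered = sorted(values)
    return ordered[max(1, math.ceil(99 * len(ordered) / 100)) - 1]


def compare_event_windows(samples, events, max_ratio=2.0):
    """samples: (timestamp_s, latency_us) pairs; events: (start_s, end_s) windows for
    drains, reschedules, or upgrades. max_ratio is an example threshold."""
    def in_event(t):
        return any(start <= t < end for start, end in events)

    baseline = [lat for t, lat in samples if not in_event(t)]
    during = [lat for t, lat in samples if in_event(t)]
    base, event = p99(baseline), p99(during)
    return {"baseline_p99_us": base, "event_p99_us": event,
            "ratio": event / base, "regressed": event / base > max_ratio}


if __name__ == "__main__":
    steady = [(float(t), 200.0) for t in range(100)]
    drain = [(100.0 + t, 900.0) for t in range(10)]
    print(compare_event_windows(steady + drain, events=[(100.0, 110.0)]))
```

In the example, p99 rises from 200 µs to 900 µs during the drain window, a 4.5x ratio that the check flags as a regression.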

Tools like fio help, but your job mix matters more than the tool. Match block size, read/write mix, queue depth, and job count to your databases, streaming systems, and analytics jobs.
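One way to keep the job mix explicit is to generate fio job files from named workload profiles. The options used (`ioengine`, `direct`, `time_based`, `runtime`, `rw`, `rwmixread`, `bs`, `iodepth`, `numjobs`, `percentile_list`, `stonewall`) are standard fio job options. The profile values and the `/dev/nvme1n1` target are examples only, not measured workload profiles. Write tests against a raw device destroy its data, so point them at a scratch volume.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorkloadProfile:
    name: str
    rw: str                          # fio rw mode, e.g. "randrw" or "read"
    bs: str                          # block size, e.g. "8k" or "1m"
    iodepth: int
    numjobs: int
    rwmixread: Optional[int] = None  # read percentage, only for mixed modes


def fio_job_file(profiles: list, device: str, runtime_s: int = 300) -> str:
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",                    # bypass the page cache
        "time_based",
        f"runtime={runtime_s}",
        "group_reporting",
        "percentile_list=95:99:99.9",  # report the tail, not just the mean
        f"filename={device}",
    ]
    for p in profiles:
        # stonewall makes each profile wait for the previous one to finish.
        lines += ["", f"[{p.name}]", "stonewall", f"rw={p.rw}", f"bs={p.bs}",
                  f"iodepth={p.iodepth}", f"numjobs={p.numjobs}"]
        if p.rwmixread is not None:
            lines.append(f"rwmixread={p.rwmixread}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    profiles = [
        WorkloadProfile("oltp", "randrw", "8k", iodepth=32, numjobs=4, rwmixread=70),
        WorkloadProfile("analytics-scan", "read", "1m", iodepth=16, numjobs=2),
    ]
    print(fio_job_file(profiles, "/dev/nvme1n1"))
```

Save the output to a file and run it with `fio <file>`. Adding a noisy-neighbor profile on a second volume in parallel is a natural next step.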

Operating Levers That Improve Composable Performance

Composable systems win when they keep the data path lean and enforce hard boundaries between tenants. Teams usually get the biggest gains from these steps:

- Keep the hot path in user space where possible, and reduce copies and context switches with an SPDK-based design.

- Apply per-volume QoS limits to protect critical workloads and control noisy neighbors.

- Use disaggregated pools for better utilization, and reserve local NVMe for the strictest latency tiers.

- Tune the fabric for storage traffic, keep MTU consistent, and watch congestion signals.

- Validate p95 and p99 latency during operational events, not only during clean benchmarks.
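Per-volume QoS limits are commonly built on a token bucket, where each volume earns I/O credits at a fixed rate up to a burst cap. The sketch below shows that general technique with a per-volume bucket; it is not a description of how any specific storage system enforces QoS, and the rates, volume names, and `FakeClock` helper are hypothetical.

```python
import time


class TokenBucket:
    """Per-volume IOPS limiter: tokens refill at `rate` per second, capped at `burst`."""

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = burst
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never above the burst cap.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller queues or delays this I/O instead of issuing it


class FakeClock:
    """Deterministic clock for the example."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


if __name__ == "__main__":
    clock = FakeClock()
    # Each volume gets its own bucket, so one volume cannot spend another's budget.
    limits = {
        "orders-db": TokenBucket(rate=100, burst=100, clock=clock),
        "batch-etl": TokenBucket(rate=100, burst=100, clock=clock),
    }
    burst = sum(limits["batch-etl"].try_acquire() for _ in range(150))
    clock.t = 0.25
    refill = sum(limits["batch-etl"].try_acquire() for _ in range(40))
    print(f"batch-etl admitted {burst} then {refill}; orders-db still has "
          f"{limits['orders-db'].tokens:.0f} tokens")
```

The noisy `batch-etl` volume gets 100 I/Os immediately and then 25 after 0.25 s, while `orders-db` keeps its full budget.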

Storage Model Trade-Offs for Composable and Traditional Architectures

Storage composability changes how you plan capacity and how you manage change. This table shows the most common options platform teams compare when they need predictable performance and fast provisioning.

| Model | Resource layout | Strengths | Common trade-off |
|---|---|---|---|
| Traditional SAN | Dedicated array and fabric | Clear separation, mature tooling | Slower scaling, higher lock-in risk |
| Hyper-converged SDS | Storage on worker nodes | Short path, simple small clusters | Storage scale often forces compute scale |
| Composable NVMe-oF pool | Shared pool served over NVMe-oF | High utilization, fast allocation, SAN alternative | Requires strong QoS and fabric discipline |

Storage Composability with Simplyblock™ for Deterministic QoS

Simplyblock supports storage composability by turning NVMe media into a pooled service with policy controls for performance and protection. Simplyblock exposes Software-defined Block Storage for cloud and baremetal environments, and it fits both hyper-converged and disaggregated Kubernetes deployment models.

Simplyblock focuses on fast data paths and stable latency under concurrency. Its SPDK-based, user-space architecture helps reduce CPU overhead and avoids extra copies in the I/O path. That design pairs well with NVMe/TCP at scale, especially when teams run many stateful workloads side by side and need multi-tenant QoS to stay strict.

In day-to-day operations, simplyblock integrates with Kubernetes Storage through CSI workflows. Teams can provision, expand, snapshot, and automate volumes using familiar platform primitives, while keeping the storage layer consistent across environments.
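The provision, expand, and snapshot steps map to standard Kubernetes objects: a PersistentVolumeClaim, a patch to its requested size, and a VolumeSnapshot. The sketch builds those manifests; the claim name, the `low-latency-oltp` storage class, and the `example-snapclass` snapshot class are hypothetical and must match classes that actually exist in your cluster.

```python
import json


def pvc(name: str, storage_class: str, size: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def expand_patch(new_size: str) -> dict:
    # Expansion raises spec.resources.requests.storage; the StorageClass
    # must set allowVolumeExpansion: true.
    return {"spec": {"resources": {"requests": {"storage": new_size}}}}


def volume_snapshot(name: str, pvc_name: str, snapshot_class: str) -> dict:
    # Requires the external-snapshotter CRDs and a VolumeSnapshotClass for the driver.
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc("orders-db", "low-latency-oltp", "100Gi"), indent=2))
    print("kubectl patch pvc orders-db --type merge -p '"
          + json.dumps(expand_patch("200Gi")) + "'")
    print(json.dumps(volume_snapshot("orders-db-snap", "orders-db", "example-snapclass"), indent=2))
```

Because these are plain API objects, the same manifests work from GitOps pipelines, operators, or CI jobs without a storage-specific workflow.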

Roadmap – Policy, Offload, and Fabric Options

Composability trends point toward tighter policy control, more offloading, and smarter fabrics. Platform teams increasingly define intent through SLOs, and storage systems enforce those targets with automated placement and QoS.

Offload via DPUs and IPUs can reduce host CPU load and isolate noisy neighbors, which matters as clusters densify. On the fabric side, NVMe/TCP remains a practical default, and many enterprises add NVMe/RDMA tiers when they need the lowest latency.

Related Terms

Teams often review these glossary pages alongside Storage Composability when they design Kubernetes Storage and Software-defined Block Storage with predictable latency.

Disaggregated Storage

Storage Virtualization

Tail Latency

Storage Quality of Service (QoS)

Questions and Answers

Storage composability refers to the ability to dynamically allocate, scale, and manage storage resources independently of compute. In modern cloud-native setups, composable storage allows flexible provisioning through APIs and orchestration layers like Kubernetes, improving agility and infrastructure utilization.

Composable storage enables fine-grained control over persistent volumes in Kubernetes, supporting features like encryption, snapshots, and replication. Solutions like Simplyblock’s CSI driver integrate composable storage natively into Kubernetes for seamless, automated volume management.

Unlike rigid SAN or NAS architectures, composable storage allows you to build elastic, software-defined storage pools that scale on demand. It reduces dependence on specific hardware, can lower costs by cutting stranded capacity, and improves performance, especially when used with protocols like NVMe over TCP.

Composable storage is especially valuable for workloads such as AI, analytics, and databases, where IOPS, latency, and throughput matter. By decoupling storage from compute, you can scale each resource independently and optimize performance without overprovisioning infrastructure.

Composable storage is a key enabler of Software-Defined Storage by providing modular, API-driven control over storage volumes. It supports dynamic provisioning and multi-tenant isolation, making it ideal for modern, scalable SDS environments.