Storage offload on DPUs


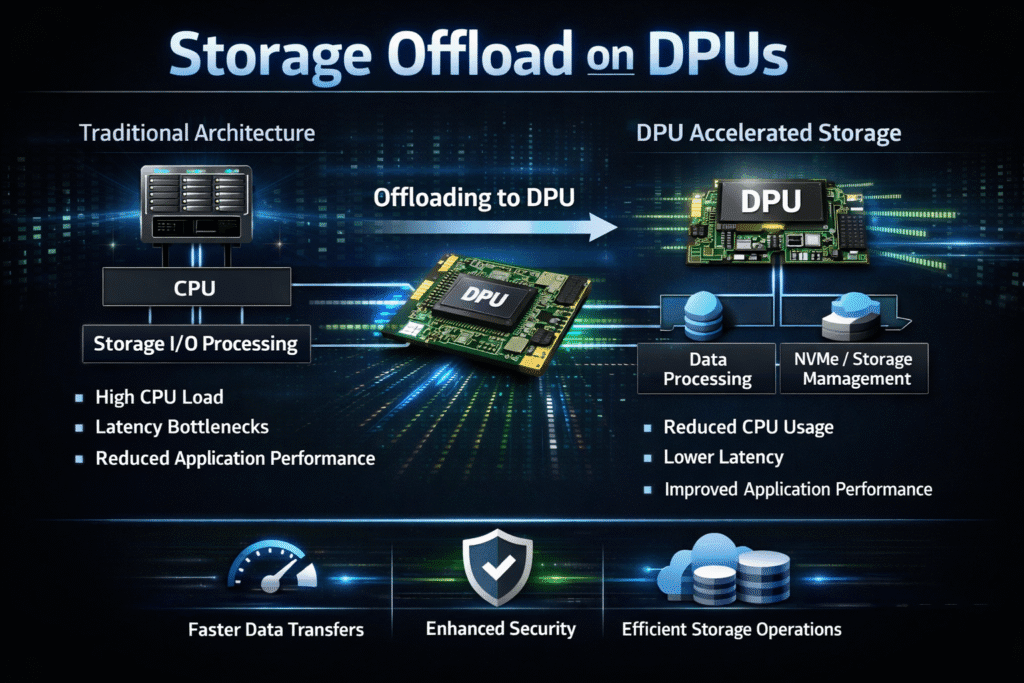

Storage offload on DPUs moves latency-sensitive storage, networking, and security work off the host CPU and onto a dedicated Data Processing Unit (DPU) that sits on the PCIe path, next to the NIC and data plane. The goal is to free host cores for applications while making tail latency for block I/O more consistent.

In practice, this matters most when Kubernetes Storage stacks are saturated by noisy neighbors, encryption, TCP processing, or multi-tenant virtualization overhead, and when Software-defined Block Storage needs to scale without a SAN appliance.

What is storage offload on DPU?

Storage offload on a DPU is the use of on-card compute (often Arm cores plus accelerators) to handle parts of the storage data path, such as NVMe-oF target/initiator processing, TCP/IP work, or inline crypto. As a result, the host sees fewer interrupts, fewer copies, and fewer CPU cycles spent on infrastructure.

A common DPU pattern is “infrastructure isolation,” where the host runs apps, and the DPU runs storage and networking services with stronger separation.


How does DPU storage offload work with NVMe-oF and NVMe/TCP?

With NVMe over Fabrics, the DPU can accelerate the NVMe-oF data path and, in some implementations, offload substantial target-side work in hardware. NVIDIA’s documentation describes NVMe-oF Target Offload, where regular I/O is processed by the adapter and forwarded directly to an NVMe PCIe device via peer-to-peer PCIe. The host CPU then stays involved in NVMe-oF traffic mainly for connection management and error handling.

For NVMe/TCP, vendors also position DPUs as co-processors that offload storage tasks from the host and can handle parts of the storage interface through the DPU’s on-card compute and accelerators.

What gets offloaded, and what usually stays on the host?

Offload coverage varies by vendor and generation, but storage-oriented DPU deployments typically target:

- NVMe-oF target/initiator processing and queue management, plus parts of NVMe/TCP handling

- Inline encryption (in-flight and, depending on design, at-rest primitives), checksums, and data integrity features

- Virtual switching, SR-IOV, and per-tenant isolation primitives that protect shared Kubernetes Storage clusters

Host CPUs typically keep orchestration, control-plane logic, and “slow path” exception handling (connection management, error handling, policy, and scheduling).
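The fast-path/slow-path split described above can be sketched as a small routing table. This is a conceptual illustration only: the event names, the `Placement` enum, and the `place` and `host_share` helpers are hypothetical and do not correspond to any vendor API. Real offload coverage varies by DPU and firmware.

```python
from enum import Enum


class Placement(Enum):
    DPU = "dpu"
    HOST = "host"


# Hypothetical event names, chosen for illustration only.
FAST_PATH = {"read", "write"}  # regular I/O handled on the card
SLOW_PATH = {"connect", "disconnect", "error", "policy_update", "schedule"}


def place(event: str) -> Placement:
    """Return where an event would be handled under the split described above."""
    if event in FAST_PATH:
        return Placement.DPU
    if event in SLOW_PATH:
        return Placement.HOST
    # Assumption: unknown events fall back to the host, which owns exceptions.
    return Placement.HOST


def host_share(events: list[str]) -> float:
    """Fraction of events that still reach the host CPU."""
    if not events:
        return 0.0
    on_host = sum(1 for e in events if place(e) is Placement.HOST)
    return on_host / len(events)


# One connection setup, 100 regular I/Os, one error: 2 of 102 events hit the host.
trace = ["connect"] + ["read", "write"] * 50 + ["error"]
print(f"host handles {host_share(trace):.1%} of events")
```

The point of the sketch is the ratio: when regular I/O dominates the trace, the host only sees the rare control and exception events.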

Why SPDK and zero-copy matter for DPU-ready storage stacks

A DPU can reduce host overhead, but the storage stack still has to be efficient end-to-end. SPDK is built around a user-space, polled-mode NVMe driver designed for zero-copy access and reduced latency variance, which aligns with the same “CPU efficiency” goals that drive offload.

This is one reason SPDK-based storage engines are a strong fit for disaggregated, Ethernet-based NVMe/TCP deployments that need predictable p99 latency.
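To make the polled-mode idea concrete, here is a toy sketch in Python. It is not SPDK code and uses no SPDK API: `CompletionQueue` and `run_poller` are hypothetical names. The point is that a dedicated loop repeatedly checks a completion queue and drains it in batches instead of sleeping until an interrupt arrives.

```python
from collections import deque


class CompletionQueue:
    """Toy stand-in for a device completion queue (not an SPDK structure)."""

    def __init__(self) -> None:
        self._entries: deque[int] = deque()

    def post(self, io_id: int) -> None:
        # Real hardware writes completions itself; here the caller simulates it.
        self._entries.append(io_id)

    def poll(self, max_completions: int) -> list[int]:
        """Drain up to max_completions entries without blocking or sleeping."""
        done: list[int] = []
        while self._entries and len(done) < max_completions:
            done.append(self._entries.popleft())
        return done


def run_poller(cq: CompletionQueue, expected: int, batch: int = 8,
               max_spins: int = 1_000_000) -> tuple[list[int], int]:
    """Spin on the queue until `expected` completions are seen (or we give up)."""
    completed: list[int] = []
    spins = 0
    while len(completed) < expected and spins < max_spins:
        completed.extend(cq.poll(batch))
        spins += 1
    return completed, spins


cq = CompletionQueue()
for io_id in range(20):
    cq.post(io_id)

completed, spins = run_poller(cq, expected=20)
print(len(completed), "completions in", spins, "poll calls")
```

The trade-off this design accepts is a core that stays busy polling in exchange for avoiding interrupt and context-switch latency on each completion.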

Simplyblock™ fit for DPU and IPU-oriented infrastructure

Simplyblock runs as Software-defined Block Storage with NVMe/TCP at its core and an SPDK-accelerated data path for Kubernetes-native environments.

For executives, the strategic angle is that DPU/IPU adoption is usually driven by three outcomes: higher host CPU availability, better multi-tenant isolation, and reduced infrastructure variability. Intel’s IPU positioning explicitly calls out infrastructure offload, storage virtualization, disaggregation, and “storage initiator offload,” including hardware acceleration on NVMe transports.

Operationally, simplyblock aligns with this direction by focusing on protocol-aware performance (including NVMe/TCP and NVMe/RDMA), Kubernetes integration, and tenant isolation/QoS behavior that matters in shared clusters.

Quick comparison of host-only versus DPU/IPU offload

The table below summarizes what changes when storage infrastructure work moves off the host CPU, which is often the deciding factor for SAN alternative designs on bare-metal Kubernetes.

| Approach | Where the storage data path runs | Host CPU impact | Best fit |
| --- | --- | --- | --- |
| Host-only (no offload) | Host kernel/userspace | Highest, especially under mixed infra + app load | Small clusters, low multi-tenancy |
| Host userspace acceleration (SPDK) | Host userspace polling | Lower overhead, better latency variance | High-IOPS NVMe/TCP on commodity servers |
| DPU/IPU-assisted offload | DPU/IPU + host control plane | Lowest host overhead, stronger isolation options | Multi-tenant Kubernetes Storage, security-heavy pipelines |

Offload is not “all or nothing.” Many teams start with host-side SPDK efficiency, then add DPUs where isolation, crypto, or infrastructure overhead becomes the limiting factor.

How Storage Offload on DPUs Changes Kubernetes Storage Design

In Kubernetes, storage is delivered through CSI, which standardizes how block and file systems are exposed to workloads. That abstraction lets you keep the Kubernetes control plane stable while changing the performance and isolation properties underneath, including DPU/IPU adoption.

The practical design choice is whether you place the storage data plane hyper-converged (close to workloads) or disaggregated (on separate storage nodes), and where the DPU sits in that I/O path. DPU offload is usually most valuable when networked block storage is the bottleneck, especially for NVMe/TCP at scale, because without acceleration both storage and networking overhead accumulate on the host.
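A back-of-the-envelope model shows how per-I/O overhead turns into occupied host cores. The sketch below is an assumption-laden estimate, not a benchmark: `host_cores_needed` is a hypothetical helper, and the IOPS, cycles-per-I/O, and clock figures are placeholders picked only to illustrate the arithmetic.

```python
def host_cores_needed(iops: float, cycles_per_io: float, core_hz: float) -> float:
    """Estimate host cores consumed by storage + network processing.

    cores = (I/Os per second * CPU cycles per I/O) / (cycles per second per core)
    """
    if core_hz <= 0:
        raise ValueError("core_hz must be positive")
    return iops * cycles_per_io / core_hz


# Placeholder inputs (assumptions, not measurements):
IOPS = 1_000_000              # target I/O rate per node
CORE_HZ = 3.0e9               # 3 GHz cores
CYCLES_HOST_ONLY = 20_000     # host handles the TCP + NVMe path itself
CYCLES_WITH_OFFLOAD = 2_000   # host only submits and reaps I/O

before = host_cores_needed(IOPS, CYCLES_HOST_ONLY, CORE_HZ)
after = host_cores_needed(IOPS, CYCLES_WITH_OFFLOAD, CORE_HZ)
print(f"host-only: {before:.2f} cores, with offload: {after:.2f} cores")
```

Plugging in measured cycles per I/O for a given stack would turn this into a rough sizing check for whether offload frees enough cores to matter.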

Related Technologies

These terms come up often when designing DPU-accelerated NVMe/TCP data paths for Kubernetes Storage and Software-defined Block Storage.

- What Is Storage Quality of Service (QoS)?

- Zero-copy I/O

- What Is a DPU?

- Infrastructure Processing Unit (IPU)

Questions and Answers

Is a DPU required to run NVMe/TCP?

No. NVMe/TCP is designed to run over standard Ethernet and TCP/IP, and it is widely used without specialized NICs. Offload is a scaling and isolation lever, not a requirement.

Is DPU offload only useful for disaggregated storage?

No. Offload can help hyper-converged storage (where nodes are CPU-contended) and disaggregated storage (where networked storage traffic is dominant). Hybrid clusters can benefit most because they concentrate high-I/O workloads onto the nodes best equipped for them.

How does a DPU differ from an IPU?

Many vendors use IPU as a broader category for infrastructure processors; some product lines overlap in goals (network, storage, and security acceleration) but differ in hardware architecture and software ecosystem.

What can be offloaded to a DPU?

Typical offloads include network and storage protocol termination (NVMe/TCP, NVMe/RDMA, iSCSI), inline crypto (TLS/IPsec/at-rest encryption), packet steering, virtual switching, telemetry, and sometimes parts of RAID/erasure coding or replication pipelines. The exact set depends on the DPU stack and the storage software’s architecture.

When does DPU storage offload help most?

It helps most when hosts are CPU-bound (dense Kubernetes nodes, database fleets, virtualization platforms), when latency consistency matters (tail latency under load), and when you want strong multi-tenant isolation (per-tenant QoS, rate limiting, noisy-neighbor control). It is also valuable when storage traffic is heavy and predictable, and CPU budgeting is hard.