Storage Orchestration

Terms related to simplyblock

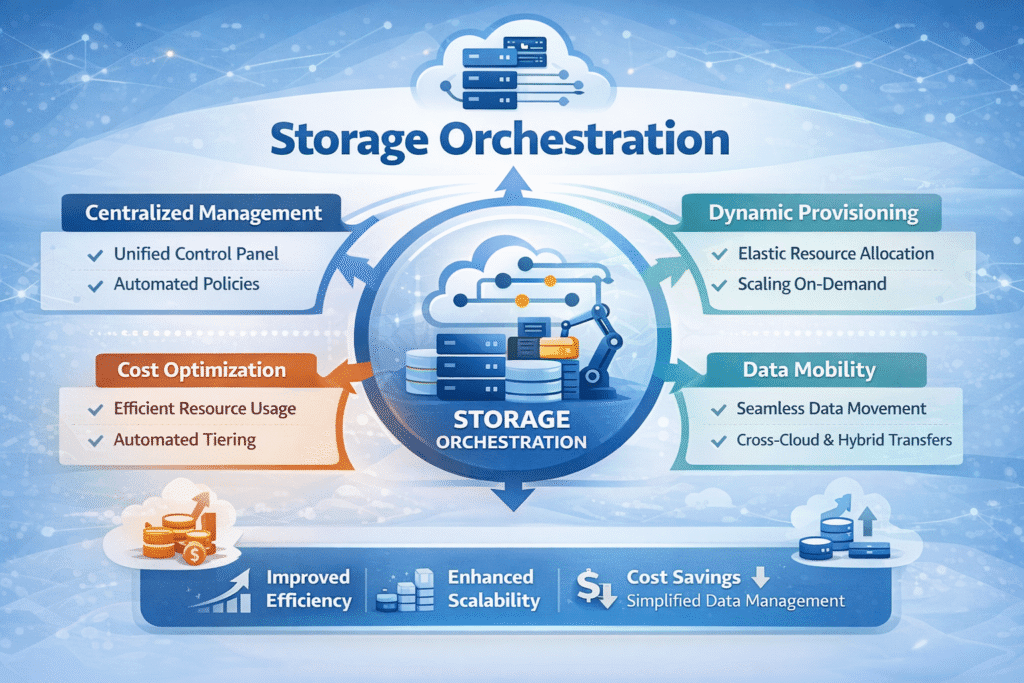

Storage orchestration is how a platform creates, attaches, grows, protects, and cleans up storage automatically across many nodes and workloads. It turns storage into an API: apps ask for capacity and behavior, and the platform executes the steps.

Teams use orchestration to cut manual work, prevent “one-off” setups, and keep performance steady as clusters grow. In Kubernetes, orchestration usually flows through StorageClasses, PVCs, and a CSI driver that connects Kubernetes to the storage system.

Making Storage Workflows Faster with Policy-Driven Platforms

Modern orchestration focuses on speed, safety, and repeatability. It provisions volumes on demand, applies the same rules every time, and keeps lifecycle actions predictable (resize, snapshot, clone, failover).

Strong platforms also separate concerns. The control plane decides what should happen, while the data plane moves I/O with low jitter. That split helps you scale operations without turning every change into a risky manual task.
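The control-plane half of that split can be sketched as a reconcile step. It compares the volumes policy says should exist with the volumes that actually exist, and it emits the actions needed to converge. It never touches I/O, which stays with the data plane. This is a minimal in-memory sketch: `Volume` and `reconcile` are hypothetical names, not simplyblock or Kubernetes APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume:
    # Hypothetical volume record: name and size in GiB.
    name: str
    size_gib: int


def reconcile(desired: dict[str, Volume], actual: dict[str, Volume]) -> list[tuple[str, str]]:
    """Return (action, volume name) pairs that move actual state toward desired state."""
    actions: list[tuple[str, str]] = []
    for name, want in sorted(desired.items()):
        have = actual.get(name)
        if have is None:
            actions.append(("create", name))
        elif have.size_gib < want.size_gib:
            # Only grow: Kubernetes PVC expansion does not support shrinking.
            actions.append(("expand", name))
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


desired = {"db": Volume("db", 100), "logs": Volume("logs", 20)}
actual = {"db": Volume("db", 50), "tmp": Volume("tmp", 10)}
print(reconcile(desired, actual))
```

Running the same step repeatedly is safe. Once actual matches desired, it returns no actions.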

Storage Orchestration Inside Kubernetes Workflows

Kubernetes expresses intent through objects like StorageClass and PersistentVolumeClaim (PVC). A StorageClass describes the “shape” of storage you want—performance tier, protection level, and behavior—and the backend makes that real.
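To make the "shape" concrete, the sketch below builds a StorageClass and a PVC as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts. The field names follow the Kubernetes `storage.k8s.io/v1` and core `v1` APIs. The provisioner string `csi.example.com` and the `tier`/`replicas` parameters are placeholders, because each CSI driver defines its own parameter keys.

```python
import json


def storage_class(name: str, provisioner: str, parameters: dict[str, str]) -> dict:
    """Build a StorageClass manifest; parameter keys are driver-specific strings."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": parameters,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        # Delay binding until a pod is scheduled, so placement can follow the pod.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def pvc(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that requests capacity from a StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical provisioner and parameters; substitute your CSI driver's values.
sc = storage_class("fast-replicated", "csi.example.com", {"tier": "gold", "replicas": "3"})
claim = pvc("postgres-data", sc["metadata"]["name"], "100Gi")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, claim]}, indent=2))
```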

Dynamic provisioning closes the loop. When a PVC appears, the cluster can create a matching volume automatically and bind it to the workload without ticket-based requests or manual steps.
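The loop can be modeled as a toy: find pending claims, create a volume for each through its class's backend, and bind the two. This in-memory sketch uses hypothetical names (`Claim`, `provision_pending`, the `backends` map). In a real cluster, the CSI external-provisioner sidecar does this through the Kubernetes API and CSI `CreateVolume` calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Claim:
    name: str
    storage_class: str
    size_gib: int
    bound_volume: Optional[str] = None  # None means the claim is still Pending.


def provision_pending(claims: list[Claim], backends: dict[str, Callable[[str, int], str]]) -> list[str]:
    """Create and bind a volume for every pending claim whose class has a backend.

    Returns the names bound in this pass; claims with an unknown class stay Pending.
    """
    bound = []
    for claim in claims:
        if claim.bound_volume is not None:
            continue  # Already bound, so repeated passes do no extra work.
        create = backends.get(claim.storage_class)
        if create is None:
            continue
        claim.bound_volume = create(claim.name, claim.size_gib)
        bound.append(claim.name)
    return bound


# Hypothetical backend that just names the volume it "creates".
backends = {"fast": lambda name, size: f"pv-{name}-{size}g"}
claims = [Claim("db", "fast", 100), Claim("cache", "unknown", 5)]
print(provision_pending(claims, backends))  # ['db']
print(claims[0].bound_volume)               # pv-db-100g
```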

CSI acts as the standard contract. It keeps Kubernetes storage portable because the cluster talks to drivers in a consistent way, even when the underlying storage system changes.
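As a simplified sketch, the class below models a few CSI Controller RPCs (`CreateVolume`, `ControllerExpandVolume`, `DeleteVolume`) as Python methods on a `typing.Protocol`. Real CSI drivers implement gRPC services defined by the CSI specification. The snake_case method names are stand-ins, and `InMemoryDriver` is a hypothetical fake. The point is that orchestration code depends only on the contract, so the backend can change underneath it.

```python
from typing import Protocol


class ControllerDriver(Protocol):
    """Simplified stand-in for part of the CSI Controller service."""

    def create_volume(self, name: str, capacity_bytes: int) -> str: ...
    def expand_volume(self, volume_id: str, capacity_bytes: int) -> int: ...
    def delete_volume(self, volume_id: str) -> None: ...


class InMemoryDriver:
    """Hypothetical fake backend; any class with these methods satisfies the Protocol."""

    def __init__(self) -> None:
        self.volumes: dict[str, int] = {}

    def create_volume(self, name: str, capacity_bytes: int) -> str:
        # CSI requires CreateVolume to be idempotent; this fake returns the existing
        # volume for a repeated name (a real driver must also reject incompatible args).
        volume_id = f"vol-{name}"
        self.volumes.setdefault(volume_id, capacity_bytes)
        return volume_id

    def expand_volume(self, volume_id: str, capacity_bytes: int) -> int:
        current = self.volumes[volume_id]
        self.volumes[volume_id] = max(current, capacity_bytes)  # Never shrink.
        return self.volumes[volume_id]

    def delete_volume(self, volume_id: str) -> None:
        # Deleting a missing volume counts as success, which keeps retries safe.
        self.volumes.pop(volume_id, None)


def provision(driver: ControllerDriver, name: str, gib: int) -> str:
    # Caller code sees only the contract, not the backend behind it.
    return driver.create_volume(name, gib * 1024**3)


drv = InMemoryDriver()
vid = provision(drv, "db", 10)
provision(drv, "db", 10)  # Idempotent retry.
print(vid, drv.expand_volume(vid, 20 * 1024**3))
```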

High-Performance Data Paths Over NVMe/TCP

NVMe/TCP carries NVMe commands and queues over standard TCP/IP, so it runs on ordinary Ethernet without RDMA-capable hardware. That makes it a practical fit when you want orchestration plus a clean, standards-based protocol path.
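On a Linux host, attaching an NVMe/TCP volume typically goes through `nvme-cli`. The sketch below only builds the argument list for `nvme connect` and does not run it. It uses the long options `--transport`, `--traddr`, `--trsvcid`, and `--nqn`. The address and subsystem NQN are made-up examples. Port 4420 is the conventional NVMe/TCP I/O port.

```python
import ipaddress
import shlex


def nvme_tcp_connect_argv(traddr: str, subsys_nqn: str, port: int = 4420) -> list[str]:
    """Build (not run) an `nvme connect` command line for an NVMe/TCP target."""
    ipaddress.ip_address(traddr)  # Raises ValueError on a malformed address.
    if not subsys_nqn.startswith("nqn."):
        raise ValueError("subsystem NQN must start with 'nqn.'")
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={traddr}",
        f"--trsvcid={port}",
        f"--nqn={subsys_nqn}",
    ]


# Example values only; in an orchestrated setup these would come from the volume's attach details.
argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2023-01.com.example:vol-db")
print(shlex.join(argv))
```

Because every node attaches through the same standard tooling and options, host behavior stays uniform across the cluster.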

Even with a fast protocol, orchestration must protect the hot path. Provisioning bursts, snapshots, and rebuilds can collide with latency-sensitive workloads if everything shares the same bottleneck. Good orchestration uses limits and guardrails so background work stays in its lane.
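One common guardrail is a token bucket that meters background work, such as rebuild or snapshot I/O, so it cannot crowd out foreground requests. This is a minimal sketch that assumes background jobs ask permission per MiB moved. The rate and burst values are illustrative. The clock is injectable so the behavior can be checked deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow up to `rate` units per second, with bursts of up to `burst` units."""

    def __init__(self, rate: float, burst: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = burst
        self.last = clock()

    def try_take(self, amount: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False  # Caller should defer this background chunk and retry later.


# Fake clock: rebuild traffic capped at 200 MiB/s with a 50 MiB burst.
t = [0.0]
rebuild = TokenBucket(rate=200, burst=50, clock=lambda: t[0])
print(rebuild.try_take(50), rebuild.try_take(1))  # True False (burst spent)
t[0] += 0.125                                      # 125 ms later, 25 MiB refilled
print(rebuild.try_take(25), rebuild.try_take(1))  # True False
```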

How to Benchmark Provisioning, Attach, and Recovery Times

Orchestration performance includes more than raw I/O speed. It also includes how quickly and safely the platform completes lifecycle actions.

Track two sets of metrics. On the data plane, watch p95/p99 latency, IOPS, throughput, and variance during load. On the control plane, measure time to provision a PVC, attach/mount time, expand time, snapshot/restore time, and recovery time after a failure.
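A small helper set covers both metric groups. It computes nearest-rank percentiles for latency samples and provides a context manager that records how long a lifecycle action takes. `time_action` and the action label are hypothetical. In practice you would wrap real steps, such as creating a PVC and waiting for it to bind.

```python
import math
import time
from contextlib import contextmanager
from typing import Iterator


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile (0 < p <= 100) of a non-empty sample list."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


durations: dict[str, float] = {}


@contextmanager
def time_action(label: str) -> Iterator[None]:
    """Record wall-clock seconds for a control-plane action under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        durations[label] = time.perf_counter() - start


latencies_ms = [0.4, 0.5, 0.5, 0.6, 0.7, 0.8, 0.9, 1.2, 2.5, 9.0]
print(percentile(latencies_ms, 50), percentile(latencies_ms, 99))  # 0.7 9.0
with time_action("pvc_provision"):
    time.sleep(0.01)  # Stand-in for "create PVC, wait until Bound".
print(f"pvc_provision took {durations['pvc_provision']:.3f}s")
```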

Run a mixed test, not only a clean one. Add lifecycle events while a steady app workload runs. If p99 jumps during those actions, your orchestration needs tighter isolation.
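One way to turn the mixed test into a pass/fail check is to tag each latency sample with the lifecycle event running at the time, or `None` for the quiet baseline. Then compare each event's p99 against the baseline p99. The 1.5x threshold and the sample data below are illustrative assumptions, not recommended values.

```python
import math
from collections import defaultdict
from typing import Optional


def p99(samples: list[float]) -> float:
    """Nearest-rank 99th percentile of a non-empty list."""
    ordered = sorted(samples)
    return ordered[max(1, math.ceil(99 * len(ordered) / 100)) - 1]


def p99_regressions(
    samples: list[tuple[Optional[str], float]], max_ratio: float = 1.5
) -> dict[str, float]:
    """Return {event: event p99 / baseline p99} for events whose ratio exceeds max_ratio."""
    groups: dict[Optional[str], list[float]] = defaultdict(list)
    for event, latency_ms in samples:
        groups[event].append(latency_ms)
    baseline = p99(groups.pop(None))  # KeyError if no baseline samples were collected.
    ratios = {event: p99(vals) / baseline for event, vals in groups.items()}
    return {event: r for event, r in ratios.items() if r > max_ratio}


steady = [(None, 1.0)] * 98 + [(None, 2.0)] * 2
during_snapshot = [("snapshot", 1.1)] * 98 + [("snapshot", 2.4)] * 2
during_expand = [("expand", 1.0)] * 98 + [("expand", 6.0)] * 2
report = p99_regressions(steady + during_snapshot + during_expand)
print(report)  # {'expand': 3.0}
```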

Practical Tuning Moves for Better Storage Orchestration

- Define StorageClasses as clear contracts (latency tier, durability tier, cost tier) and enforce them consistently.

- Use dynamic provisioning so teams can self-serve volumes and stop waiting on manual steps.

- Cap provisioning and maintenance bursts with rate limits and safe defaults.

- Protect latency-sensitive workloads with per-volume or per-tenant caps on IOPS and bandwidth.

- Test lifecycle actions under load (create, expand, snapshot, restore) and watch p99 during each event.

- Standardize the protocol path (for example, NVMe/TCP) so host behavior stays predictable across nodes.
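For capping provisioning bursts, a concurrency limit is a simple cousin of a rate limit. In the sketch below, requests run through a large thread pool, but a shared semaphore gates the backend call. At most `max_in_flight` creates hit the storage system at once, however many PVCs arrive together. `ProvisionGate` and `fake_create` are hypothetical stand-ins, and the limit of 4 is arbitrary.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


class ProvisionGate:
    """Limit concurrent backend create calls across every caller that shares the gate."""

    def __init__(self, max_in_flight: int) -> None:
        self._sem = threading.BoundedSemaphore(max_in_flight)
        self._lock = threading.Lock()
        self.in_flight = 0
        self.peak = 0  # Highest concurrency observed, for verification.

    def run(self, create: Callable[..., Any], *args: Any) -> Any:
        with self._sem:  # Blocks while max_in_flight calls are already running.
            with self._lock:
                self.in_flight += 1
                self.peak = max(self.peak, self.in_flight)
            try:
                return create(*args)
            finally:
                with self._lock:
                    self.in_flight -= 1


def fake_create(name: str) -> str:
    time.sleep(0.01)  # Stand-in for a slow backend create call.
    return f"vol-{name}"


gate = ProvisionGate(max_in_flight=4)
with ThreadPoolExecutor(max_workers=32) as pool:  # A burst of 32 requests at once.
    volumes = list(pool.map(lambda i: gate.run(fake_create, f"pvc-{i}"), range(32)))
print(len(volumes), gate.peak <= 4)  # 32 True
```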

Side-by-Side Comparison – Storage Orchestration Approaches

Teams often choose an orchestration approach based on how much automation they need and how much performance risk they can accept. This table summarizes the tradeoffs so you can match the model to your cluster size, workload mix, and operational style.

| Orchestration approach | What it automates well | Common pain point | Best fit |
|---|---|---|---|
| Manual scripts + static volumes | Basic creation and mounting | Drift, slow changes, human error | Small clusters, dev/test |
| Cloud-managed block volumes | Easy provisioning, basic policies | Cost and performance swings under contention | General workloads |
| Kubernetes + CSI + software-defined block | Full PV lifecycle via API, strong policy control | Needs good defaults and guardrails | Stateful apps at scale |
| NVMe/TCP-based software-defined block | High performance plus a scalable network path | Must shield hot workloads from background work | I/O-heavy, latency-sensitive clusters |

Simplyblock for Storage Orchestration – Consistent Volumes at Scale

Simplyblock focuses on Kubernetes-native orchestration through CSI, so teams can provision and manage persistent volumes with consistent policies. It also supports NVMe/TCP-based Kubernetes storage for workloads that need stable latency at scale.

The goal stays simple: keep volume lifecycle work repeatable, keep multi-tenant behavior controlled, and keep p99 latency steady even when the cluster runs maintenance and provisioning bursts.

The Future of Storage Automation – More Control, Less Manual Work

Storage orchestration keeps moving toward safer defaults and more automation. Platforms increasingly attach policies to intent (“gold/silver/bronze”), then apply placement, limits, and protection automatically.
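Attaching policy to intent can be as simple as a lookup table that expands a tier name into concrete placement, limit, and protection settings. The tier names come from the text above. Every field and number below (`placement`, `replicas`, `max_iops`, `snapshot_every_h`) is a hypothetical example, not a simplyblock or Kubernetes setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierPolicy:
    placement: str          # Hypothetical media/placement hint.
    replicas: int           # Copies kept for durability.
    max_iops: int           # Per-volume IOPS cap.
    snapshot_every_h: int   # Snapshot interval in hours.


TIERS = {
    "gold": TierPolicy("nvme", replicas=3, max_iops=50_000, snapshot_every_h=1),
    "silver": TierPolicy("nvme", replicas=2, max_iops=10_000, snapshot_every_h=6),
    "bronze": TierPolicy("capacity", replicas=2, max_iops=1_000, snapshot_every_h=24),
}


def resolve(tier: str) -> TierPolicy:
    """Expand a tier name into concrete settings; unknown tiers fail fast instead of defaulting."""
    try:
        return TIERS[tier]
    except KeyError:
        raise ValueError(f"unknown tier {tier!r}; expected one of {sorted(TIERS)}") from None


print(resolve("silver"))
```

Failing fast on an unknown tier keeps the contract explicit. A typo in a claim surfaces immediately instead of silently landing on some default policy.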

Expect tighter links between orchestration and observability. When the platform detects rising tail latency, it can slow background work, adjust placement, or apply stricter limits. Over time, orchestration will feel less like a set of scripts and more like an automated control loop.
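Such a control loop can be sketched with additive-increase/multiplicative-decrease (AIMD), the same idea TCP congestion control uses. When observed p99 exceeds a target, the loop cuts the background-work budget sharply. When latency is healthy, it raises the budget slowly. The target, step, and bounds are illustrative assumptions. This is one possible controller, not how any specific platform implements it.

```python
def next_background_rate(
    current_mb_s: float,
    observed_p99_ms: float,
    target_p99_ms: float = 2.0,
    step_mb_s: float = 10.0,
    backoff: float = 0.5,
    floor_mb_s: float = 10.0,
    ceiling_mb_s: float = 500.0,
) -> float:
    """One AIMD step for the background-work budget (rebuilds, snapshots, rebalancing)."""
    if observed_p99_ms > target_p99_ms:
        proposed = current_mb_s * backoff  # Tail latency is rising: back off hard.
    else:
        proposed = current_mb_s + step_mb_s  # Healthy: probe for more headroom slowly.
    return min(ceiling_mb_s, max(floor_mb_s, proposed))


rate = 200.0
for p99_ms in [1.2, 1.4, 3.5, 2.8, 1.9, 1.5]:  # Hypothetical per-interval measurements.
    rate = next_background_rate(rate, p99_ms)
    print(f"p99={p99_ms}ms -> background budget {rate:.0f} MB/s")
```

The floor keeps rebuilds from stalling entirely. Without it, a long latency spike could leave data under-protected indefinitely.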

Related Terms

Teams often review these glossary pages alongside Storage Orchestration when they standardize storage workflows, reduce manual ops, and keep Kubernetes volumes consistent at scale.

- Container Storage Interface (CSI)

- CSI Snapshot Controller

- Dynamic Provisioning in Kubernetes

- Persistent Volume Claim (PVC)

- NetApp Trident

Questions and Answers

Storage orchestration automates the provisioning, scaling, and management of storage resources for containerized applications. In Kubernetes, this is handled via CSI drivers to dynamically manage persistent volumes.

Storage orchestration ensures stateful workloads get the right storage automatically, based on access patterns, performance needs, and capacity. It reduces manual operations and helps ensure availability.

Storage orchestration is especially useful in software-defined storage setups, where orchestration tools abstract complexity across clouds and automate data placement, failover, and tiering across heterogeneous infrastructure.

NVMe over TCP pairs well with storage orchestration: it carries high-speed block I/O over standard networks, so orchestrated volumes can be provisioned and scaled quickly in dynamic cloud-native environments.

Storage orchestration enables intelligent data placement and automated tiering, moving cold data to cheaper storage and keeping hot data on fast media. This is crucial for cloud cost optimization at scale.