Storage virtualization on DPU

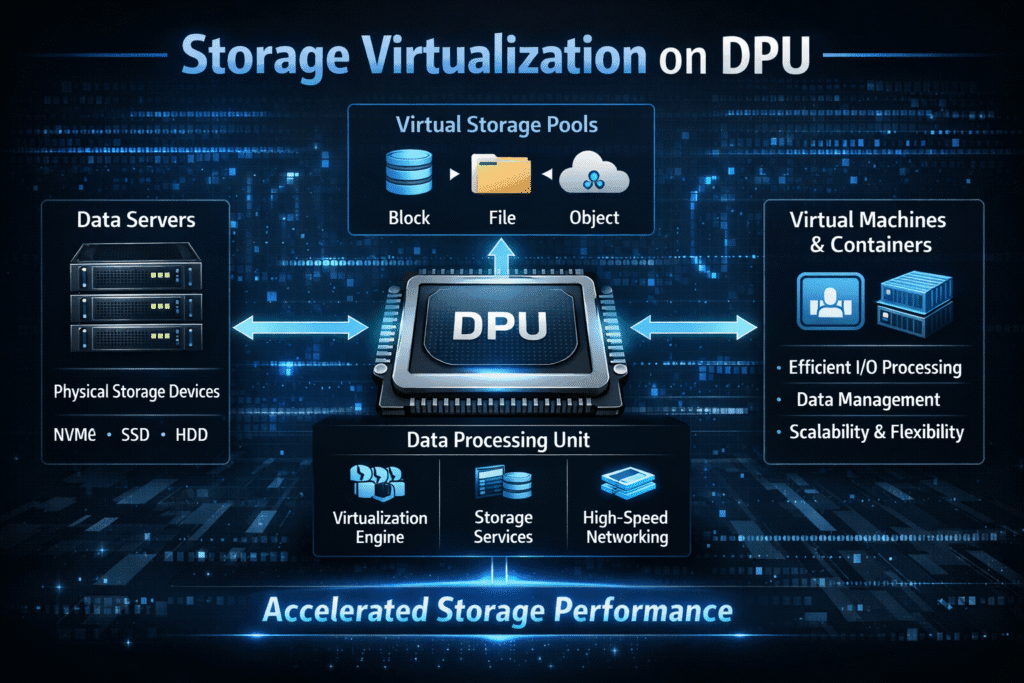

Storage virtualization abstracts physical media into logical constructs like pools, volumes, and namespaces, so teams can provision and govern block devices without coupling workloads to specific drives.

Storage virtualization on DPU places parts of that abstraction, plus enforcement logic, onto a Data Processing Unit in the server I/O path, reducing host CPU overhead and improving isolation for shared infrastructure.

Why Storage Virtualization on DPU Matters

Infrastructure teams evaluate Storage virtualization on DPU when the bottleneck is no longer SSD latency, but the platform tax of packet processing, security work, and storage dataplane overhead on the host. In multi-tenant environments, this tax shows up as uneven p99 behavior, lower workload density, and noisy-neighbor incidents that are hard to reproduce in test clusters.

In bare-metal deployments, the DPU model also supports infrastructure separation, where the host CPU prioritizes application threads while the infrastructure dataplane runs in a more controlled domain.

NVMe/TCP and NVMe-oF in the Virtualization Data Path

NVMe over Fabrics (NVMe-oF) extends NVMe semantics across a network, and NVMe/TCP is the NVMe-oF transport that runs over standard TCP/IP networks. The combination is common in Ethernet-based, disaggregated designs where compute nodes access remote NVMe namespaces and the virtualization layer maps logical volumes onto pooled NVMe devices according to placement rules.
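To make that mapping concrete, here is a minimal Python sketch of how a virtualization layer might translate a logical volume into extents on pooled NVMe devices. The 1 MiB extent size, the round-robin placement rule, and the names `Device`, `LogicalVolume`, `provision`, and `resolve` are hypothetical illustrations, not simplyblock's implementation or any DPU vendor's API.

```python
from dataclasses import dataclass, field

EXTENT_SIZE = 1 << 20  # hypothetical 1 MiB allocation unit


@dataclass
class Device:
    """One NVMe device in the pool, tracked in fixed-size extents."""
    dev_id: str
    capacity_extents: int
    next_free: int = 0  # bump allocator; this sketch never frees extents


@dataclass
class LogicalVolume:
    name: str
    size_bytes: int
    # logical extent index -> (device id, physical extent index)
    extent_map: dict[int, tuple[str, int]] = field(default_factory=dict)


def provision(devices: list[Device], name: str, size_bytes: int) -> LogicalVolume:
    """Allocate extents round-robin across devices that still have free space."""
    needed = -(-size_bytes // EXTENT_SIZE)  # ceiling division
    free = sum(d.capacity_extents - d.next_free for d in devices)
    if needed > free:
        raise ValueError("pool has insufficient free capacity")
    vol = LogicalVolume(name, size_bytes)
    i = 0
    while len(vol.extent_map) < needed:
        dev = devices[i % len(devices)]
        if dev.next_free < dev.capacity_extents:  # skip devices that are full
            logical = len(vol.extent_map)
            vol.extent_map[logical] = (dev.dev_id, dev.next_free)
            dev.next_free += 1
        i += 1
    return vol


def resolve(vol: LogicalVolume, offset: int) -> tuple[str, int]:
    """Translate a logical byte offset into (device id, physical byte offset)."""
    if not 0 <= offset < vol.size_bytes:
        raise ValueError("offset outside volume")
    dev_id, phys_extent = vol.extent_map[offset // EXTENT_SIZE]
    return dev_id, phys_extent * EXTENT_SIZE + offset % EXTENT_SIZE
```

For example, provisioning a 3 MiB volume over two devices with four free extents each places its extents on `nvme0`, `nvme1`, `nvme0`. A production placement rule might also weigh failure domains, device wear, and load.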

Storage virtualization on DPU becomes relevant here when TCP processing, isolation, and policy enforcement consume host cores, or when the environment needs steadier multi-tenant behavior at high queue depths.
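A back-of-envelope model shows when protocol processing turns into a host-core problem: cores consumed ≈ bytes per second × CPU cycles per byte ÷ cycles per second per core. The helper below is hypothetical, and the cycles-per-byte figure is an assumed input you would need to measure on your own hosts; the example values are illustrative, not benchmarks.

```python
def host_cores_for_io(throughput_gbps: float, cycles_per_byte: float, core_ghz: float) -> float:
    """Estimate how many host cores protocol and dataplane work would occupy.

    cores = (bytes/s) * (cycles/byte) / (cycles/s available per core)
    """
    if core_ghz <= 0:
        raise ValueError("core_ghz must be positive")
    bytes_per_s = throughput_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_per_s * cycles_per_byte / (core_ghz * 1e9)


# Illustrative inputs only: 100 Gbit/s at an assumed 1 cycle/byte on 3 GHz cores.
print(f"{host_cores_for_io(100, 1.0, 3.0):.2f} cores")  # prints "4.17 cores"
```

Cores estimated this way are the budget that DPU offload could hand back to application threads; actual savings depend on which parts of the dataplane a given DPU implementation offloads.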

Kubernetes Storage Behavior Under Contention

Kubernetes Storage relies on CSI semantics, so provisioning and attachment workflows stay consistent even when the underlying storage dataplane changes. In production, p99 issues are often contention problems, where shared host CPU time is consumed by networking and storage I/O processing rather than application work.

Storage virtualization on DPU helps by shifting more of that dataplane overhead into an infrastructure domain, keeping CSI workflows unchanged while reducing host contention and tail-latency variance.
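Because tail latency is the metric that surfaces contention, it helps to see why averages hide it. This minimal sketch computes a nearest-rank percentile; the latency samples are synthetic, chosen only to show a mean that looks healthy next to a p99 that does not.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Synthetic data: 98% of I/Os at 100 µs, 2% stalled at 5000 µs by host contention.
latencies_us = [100.0] * 980 + [5000.0] * 20
mean = sum(latencies_us) / len(latencies_us)
print(f"mean={mean:.0f} µs  p50={percentile(latencies_us, 50):.0f} µs  "
      f"p99={percentile(latencies_us, 99):.0f} µs")
# prints "mean=198 µs  p50=100 µs  p99=5000 µs"
```

Tracking p99 per volume or per tenant, rather than node-wide averages, is what makes contention regressions visible.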

Ensuring Fairness with QoS in DPU Storage Virtualization

Storage virtualization is where policy becomes enforceable, not just where devices get pooled. In multi-tenant platforms, the virtualization layer is responsible for guardrails like quotas, priority, and fairness, so one workload cannot monopolize queue depth, bandwidth, or cache.

Storage virtualization on DPU supports this objective by reducing host-side variability that undermines fairness during CPU pressure. When more of the dataplane work is isolated from application scheduling noise, QoS controls tend to behave more consistently for Kubernetes Storage delivered as Software-defined Block Storage.
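One common building block for such guardrails is a per-tenant token bucket, which caps each tenant's sustained rate while allowing a bounded burst. The sketch below is a hypothetical illustration with an explicit clock argument so it stays deterministic. It shows rate limiting only and is not necessarily how simplyblock or any DPU runtime implements QoS; weighted fair scheduling and queue-depth limits would be separate mechanisms.

```python
class TokenBucket:
    """Admits work while tokens remain; refills at `rate` tokens per second up to `burst`."""

    def __init__(self, rate: float, burst: float, now: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so a tenant can burst immediately
        self.last = now

    def try_admit(self, cost: float, now: float) -> bool:
        elapsed = max(0.0, now - self.last)  # no refill if the clock goes backwards
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TenantQoS:
    """One independent bucket per tenant, so one tenant draining its budget cannot starve another."""

    def __init__(self, limits: dict[str, tuple[float, float]], now: float) -> None:
        # limits: tenant -> (sustained rate, burst size); units are hypothetical, e.g. IOPS
        self.buckets = {t: TokenBucket(r, b, now) for t, (r, b) in limits.items()}

    def admit(self, tenant: str, cost: float, now: float) -> bool:
        return self.buckets[tenant].try_admit(cost, now)


qos = TenantQoS({"tenant-a": (10.0, 10.0), "tenant-b": (10.0, 10.0)}, now=0.0)
burst = sum(qos.admit("tenant-a", 1, now=0.0) for _ in range(15))  # 10 admitted, 5 rejected
print(burst, qos.admit("tenant-b", 1, now=0.0))  # prints "10 True": tenant-b is unaffected
```

Keeping one bucket per tenant is the design choice that matters here: admission for one tenant never reads or drains another tenant's budget.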

Understanding the Impact of DPU on Storage Virtualization Models

Storage virtualization delivers the same outcome wherever it runs, namely logical volumes on top of pooled devices, but the operational model changes depending on where the abstraction layer lives. The following comparison summarizes the trade-offs that executives and platform teams usually weigh.

| Virtualization placement | What the host sees | Typical strengths | Typical trade-offs |
|---|---|---|---|
| Array-based (classic SAN) | LUNs exported by an appliance | Centralized control, predictable operational model | CapEx-heavy, fabric complexity, vendor coupling |
| Host-based (kernel/user space) | Local or networked block devices | Fast iteration, flexible on commodity servers | Host CPU and isolation become limiting at scale |
| DPU/IPU-assisted | Virtual controller surface with infrastructure isolation | Lower host overhead, stronger separation, steadier multi-tenant consistency | Requires DPU/IPU operational model and tooling |

Operational Requirements for Storage Virtualization on DPU

Storage virtualization on DPU works best when the boundary between the control plane and the data plane is explicit. The host should keep orchestration (Kubernetes controllers, CSI workflows, placement policy, and change management), while the infrastructure dataplane focuses on the I/O path, isolation primitives, and security enforcement.

Rollouts often fail when DPU firmware and runtime updates are treated like routine NIC updates. Treat them like storage updates: staged upgrades, telemetry validation, and rollback planning, because dataplane regressions commonly show up as tail-latency instability rather than clean failures.
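A staged rollout can encode that rule directly by gating promotion of a DPU firmware or runtime update on tail latency, not only on error counts. The function below is a hypothetical sketch; the name `evaluate_canary` and the 10% p99 regression budget are assumptions to tune against your own telemetry.

```python
import math


def p99(samples: list[float]) -> float:
    """Nearest-rank 99th percentile."""
    ordered = sorted(samples)
    return ordered[math.ceil(99 * len(ordered) / 100) - 1]


def evaluate_canary(baseline_us: list[float], canary_us: list[float],
                    canary_errors: int, max_p99_regression: float = 0.10) -> str:
    """Return 'promote' or 'rollback' for one rollout stage."""
    if not baseline_us or not canary_us:
        raise ValueError("need latency samples from both baseline and canary")
    if canary_errors > 0:
        return "rollback"  # clean failures are the easy case
    # Dataplane regressions often appear only in the tail, so gate on p99 as well.
    if p99(canary_us) > p99(baseline_us) * (1 + max_p99_regression):
        return "rollback"
    return "promote"


baseline = [100.0] * 1000
unstable = [100.0] * 980 + [400.0] * 20  # zero errors, but a fat tail
print(evaluate_canary(baseline, unstable, canary_errors=0))  # prints "rollback"
```

The same check can run at each stage (for example a single canary node, then a larger slice, then the fleet), with the previous firmware image kept available so rollback stays a routine action.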

Simplyblock™ Fit for DPU-Era Infrastructure

Simplyblock positions its platform around NVMe/TCP, Kubernetes Storage, and Software-defined Block Storage, and emphasizes an SPDK-accelerated dataplane for CPU efficiency and predictable latency.

Storage virtualization on DPU fits best when your environment is already standardized on NVMe/TCP and the binding constraint is host overhead, multi-tenant consistency, or p99 variance under mixed workloads, rather than raw SSD capability.

Questions and Answers

Offloading storage tasks such as encryption, compression, and volume management to a DPU reduces CPU load on Kubernetes nodes. This can improve pod density and per-node IOPS while keeping latency low, which makes it a good fit for Kubernetes-native storage.

Running NVMe over TCP on a DPU moves TCP and NVMe/TCP protocol processing off the host CPU, which can raise storage throughput per host core and reduce latency. It also strengthens isolation between tenants, which supports zero-trust multi-tenant designs, and suits high-performance, scale-out workloads in public or private cloud environments.

DPUs can isolate storage traffic and enforce per-tenant security policies in hardware. Combined with data-at-rest encryption, this helps multi-tenant platforms meet both performance and regulatory-compliance requirements with minimal host overhead.

Latency-sensitive workloads like databases, analytics engines, and real-time streaming platforms gain the most from DPU acceleration. When paired with NVMe storage, DPUs reduce contention and scale storage performance efficiently across nodes.

Yes. DPUs can take over many roles of traditional storage controllers by handling virtualization, encryption, and networking in hardware. This shift can simplify the architecture, reduce reliance on dedicated controller appliances, and align well with software-defined storage strategies that aim for flexibility and scalability.