vSwitch / OVS offload on DPU

Terms related to simplyblock

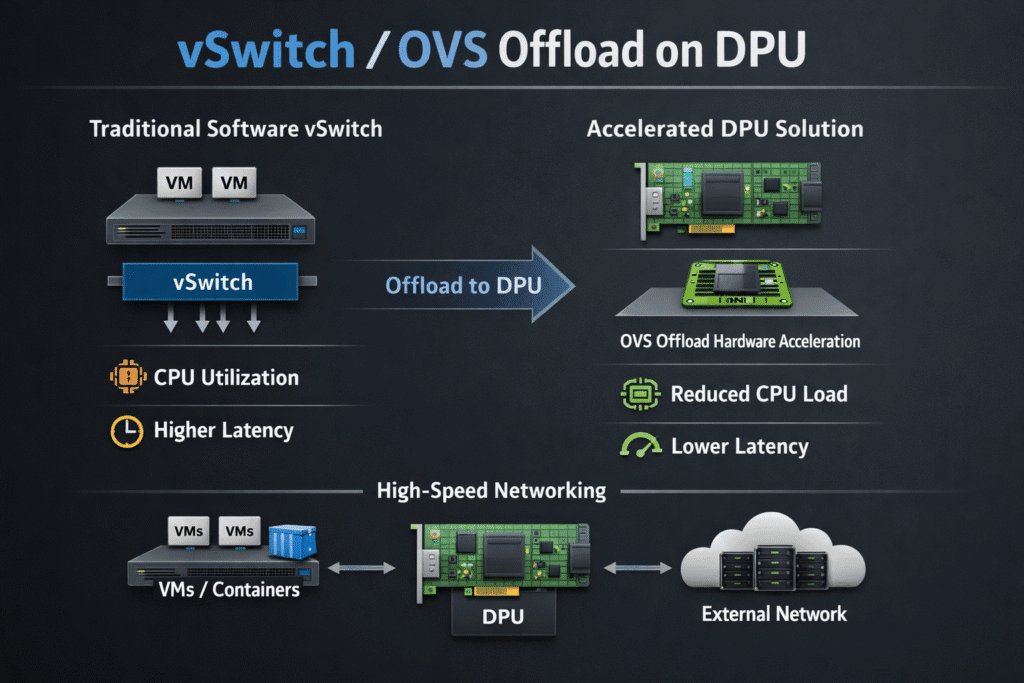

vSwitch / OVS offload on a DPU is an architecture where the Open vSwitch (OVS) control plane remains programmable, but the packet-forwarding data plane is pushed down into DPU or SmartNIC hardware.

The goal is to cut host CPU overhead, keep throughput stable under load, and reduce jitter for east-west traffic in Kubernetes clusters that also run latency-sensitive storage and database workloads.

How vSwitch / OVS offload on DPU works in the data path

OVS is typically split into a control path (flow programming) and a data path (packet forwarding). With offload enabled, OVS still programs flows, but established flows are pushed into the NIC or DPU hardware via mechanisms such as TC flower, SR-IOV representors, and switchdev, depending on the platform and driver capabilities.

The result is a fast path in hardware for steady-state traffic, and a software fallback for misses, exceptions, and features that are not offloadable.

🚀 Deploy vSwitch / OVS Offload on DPU and Free Up Host CPU

Use simplyblock to reduce infrastructure overhead and keep NVMe/TCP and Kubernetes Storage performance predictable at scale.

👉 Use simplyblock for SmartNICs and DPUs →

What vSwitch / OVS offload on DPU changes in Kubernetes

Kubernetes networking often relies on overlays, policy enforcement, and service abstractions that can become CPU-expensive when node density and pod churn increase. With OVS offload, the host spends less time forwarding packets through the kernel or user-space datapaths, which helps on clusters running network-heavy workloads, service meshes, or strict multi-tenant segmentation.

This is commonly evaluated alongside CNI stacks that can integrate with OVS, and with platforms that document and operationalize OVS hardware offload behavior.

Why vSwitch / OVS offload on DPU reduces host CPU overhead

Storage traffic is still network traffic. When a cluster runs Kubernetes Storage alongside high-rate application networking, noisy network forwarding can show up as tail-latency variance, dropped throughput during bursts, or unpredictable CPU contention. Offloading OVS forwarding is one lever that helps stabilize the node’s infrastructure overhead, which is useful when your storage path is NVMe/TCP and your applications expect consistent p99 behavior.

For teams standardizing on Software-defined Block Storage, keeping the host CPU available for applications and storage services is often the difference between “it benchmarks well” and “it holds up under mixed workloads.” This is also why DPU-centric designs are frequently discussed together with user-space acceleration concepts like DPDK (Data Plane Development Kit) and reduced-copy data movement such as Zero-copy I/O.

How vSwitch / OVS offload on DPU affects NVMe/TCP and NVMe-oF traffic

In Kubernetes, OVS is often in the path for east-west traffic, overlays, and policy enforcement, which can consume host CPU and introduce jitter when nodes are busy. When the same nodes also carry storage traffic, that “infrastructure tax” can show up as tail-latency variance, even if storage is provisioned correctly.

NVMe-oF is a way to carry NVMe semantics over a network fabric, and NVMe/TCP is the common Ethernet transport for it in Kubernetes Storage environments. Offloading the vSwitch data plane onto a DPU reduces host forwarding load and keeps packet handling more deterministic, which helps protect NVMe/TCP behavior during bursts, pod churn, and multi-tenant contention without changing the application’s storage interface.

What Usually Gets Offloaded First

Not every feature is offloaded on every platform, but these are common categories that teams attempt to move off the host first:

- Basic L2/L3 forwarding and flow steering for steady-state traffic

- Overlay handling (where supported), including tunnel encapsulation patterns

- Connection tracking acceleration (platform-dependent)

- Policy enforcement primitives (match/action pipelines)

- SR-IOV VF represents forwarding between the host, VFs, and uplinks

vSwitch Data Plane Placement Trade-offs

This table summarizes the most common ways OVS is deployed, and what changes when you introduce a DPU. The useful executive lens is how much host CPU do we spend to get deterministic throughput and isolation, and the useful operator lens is “how much complexity do we add to the NIC, kernel, and lifecycle domain.”

| Approach | Where the vSwitch data plane runs | Strengths | Typical trade-offs | Best fit |

| OVS kernel datapath (no offload) | Host kernel and CPU | Familiar operations, broad feature coverage | Higher CPU overhead, less predictable tail latency | General-purpose clusters, moderate traffic |

| OVS with DPDK (host acceleration) | Host user space (DPDK) | Higher packet rate, lower kernel overhead | Still consumes host cores, tuning and pinning required | Dedicated network-heavy nodes, NFV-style patterns |

| OVS offload on DPU / SmartNIC | DPU or NIC hardware fast path | Lower host CPU cost, strong isolation, stable throughput under contention | Hardware qualification, feature matrix constraints, more lifecycle surface area | Dense multi-tenant clusters, strict SLOs, high east-west traffic |

Operational considerations that affect outcomes

The main failure mode with vSwitch / OVS offload on a DPU is assuming offload is on means everything is offloaded. In practice, you need clear observability into what is in hardware, what is falling back to software, and why.

Good operations teams track flow miss rates, representor behavior, MTU consistency across overlays, and version compatibility between NIC firmware, drivers, OVS, and the CNI stack. This is also where platform-grade Observability helps, because a single node with unexpected software fallback can become a hotspot.

Simplyblock™ and vSwitch / OVS offload on a DPU

Simplyblock aligns with DPU-centric networking because it is designed to keep infrastructure overhead low in environments where storage and networking compete for CPU. For clusters running Kubernetes Storage with Software-defined Block Storage, vSwitch offload helps reduce noisy-neighbor effects on the node, while simplyblock focuses on predictable storage performance over NVMe/TCP.

Simplyblock is also built for disaggregated and hybrid deployment models, where stabilizing node-level CPU and latency behavior improves overall cluster efficiency. DPU and vSwitch offload fit cleanly into that model, especially when you are scaling east-west traffic alongside storage IO.

Related Technologies

These glossary terms are commonly reviewed alongside vSwitch / OVS offload on a DPU in Kubernetes clusters with high east-west traffic and strict latency targets.

Streamline Data Handling with SmartNICs

Infrastructure Processing Unit (IPU)

PCI Express (PCIe)

Tail Latency

Questions and Answers

Offloading vSwitch or OVS to a DPU accelerates east-west traffic between VMs or containers by eliminating CPU bottlenecks. The DPU handles switching at line rate, enabling high-performance microservice communication in environments like Kubernetes or OpenStack.

Offloading OVS to a DPU removes switching overhead from the main CPU, allowing higher throughput and lower latency. It reduces context switches and improves performance for latency-sensitive applications like databases, streaming, and distributed storage.

Yes. Traditional kernel-based switching consumes host resources and adds latency. DPU-based OVS offload bypasses the host OS entirely, enabling near-hardware speeds and better isolation—ideal for multi-tenant environments or secure cloud-native stacks.

Absolutely. DPUs can accelerate virtual switching in Kubernetes, OpenStack, or other cloud-native platforms. They reduce CPU contention for control-plane workloads and enhance pod-to-pod or VM networking without compromising on throughput or latency.

SR-IOV offers basic passthrough networking, while DPU-based OVS offload provides full software-defined switching with advanced features like QoS, ACLs, and VXLAN—all handled by the DPU. This allows better control, observability, and flexibility in software-defined infrastructure.