Ceph


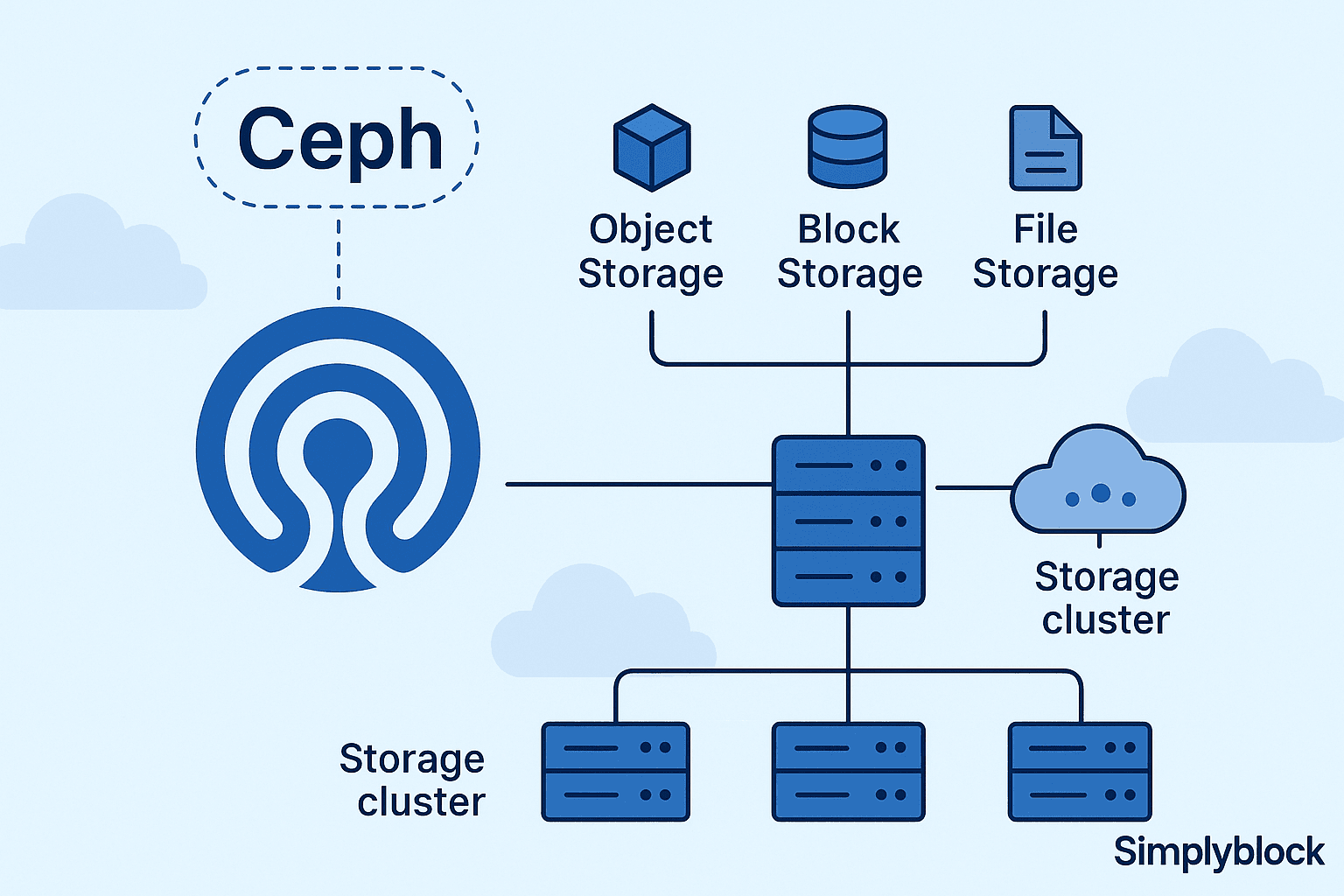

Ceph is an open-source, distributed storage system designed for scalability, reliability, and performance. It provides object, block, and file storage from a single cluster, which makes it a versatile option for enterprises and cloud environments. Its self-healing, self-managing architecture avoids single points of failure, which is how it achieves high availability and fault tolerance.

How Does Ceph Work?

Ceph follows a software-defined storage model: it runs on commodity hardware and uses the CRUSH placement algorithm to decide where data is stored and replicated. At its core is the Reliable Autonomic Distributed Object Store (RADOS), which stores and manages data as objects across multiple nodes. RADOS distributes and replicates data automatically, which provides resilience against hardware failures.
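The idea of computed, replicated placement can be pictured with a small toy model. The sketch below is not Ceph's CRUSH algorithm; it swaps in plain rendezvous (highest-random-weight) hashing to show how every client can derive the same replica set without a central lookup table. The function names, OSD names, and placement-group count are all hypothetical.

```python
import hashlib


def _score(*parts: str) -> int:
    """Stable 64-bit hash of the joined parts (identical on every client)."""
    digest = hashlib.sha256("/".join(parts).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pg_for_object(name: str, pg_count: int) -> int:
    """Map an object name to a placement group by hashing (toy model)."""
    return _score("obj", name) % pg_count


def osds_for_pg(pg: int, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct OSDs for a placement group via rendezvous hashing."""
    ranked = sorted(osds, key=lambda osd: _score("pg", str(pg), osd), reverse=True)
    return ranked[:replicas]


osds = [f"osd.{i}" for i in range(6)]          # hypothetical six-OSD cluster
pg = pg_for_object("vm-disk-0001", pg_count=128)
before = osds_for_pg(pg, osds)

# Simulate losing the first replica holder: the two survivors keep their
# copies and exactly one new OSD is chosen to restore the replica count.
after = osds_for_pg(pg, [o for o in osds if o != before[0]])
print(pg, before, after)
```

In this toy model, a failure only moves the data that lived on the failed device, which is the property that makes self-healing affordable in a large cluster.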

Components of Ceph

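- Ceph Monitors (MONs): Maintain the authoritative maps of cluster state (such as the monitor, OSD, and CRUSH maps) and form a quorum so that clients and daemons see a consistent view.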

- Object Storage Daemons (OSDs): Store actual data and handle replication.

- Metadata Servers (MDS): Manage metadata for CephFS, Ceph’s distributed file system.

- Ceph Clients: Interact with the storage system through interfaces such as RADOS (via librados), CephFS, and RBD.
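Monitors agree on cluster state by majority, which is why they are usually deployed in odd numbers. The arithmetic can be sketched as follows; the helper names are hypothetical and the snippet only illustrates the majority rule, not Ceph's actual consensus implementation.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of a monitor set."""
    if monitors < 1:
        raise ValueError("need at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can be lost while a majority is still reachable."""
    return monitors - quorum_size(monitors)


for n in (1, 3, 4, 5):
    print(f"{n} monitors: quorum={quorum_size(n)}, tolerated failures={tolerated_failures(n)}")
```

Going from three to four monitors does not raise the number of tolerated failures, which is the usual argument for odd-sized monitor sets.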


Key Features of Ceph

Ceph is known for its scalability, flexibility, and fault tolerance. Some of its key features include:

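- Scalability: Designed to scale out to very large clusters, up to the exabyte range, by adding nodes; clients compute data placement themselves, so there is no central lookup service to become a bottleneck.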

- Self-Healing: Automatically detects and recovers from hardware failures.

- Distributed Architecture: Eliminates single points of failure by distributing data across multiple nodes.

- Support for Multiple Storage Interfaces: Includes RADOS (native object storage), the RADOS Gateway (S3- and Swift-compatible object storage), CephFS (file system), and RBD (block storage for virtual machines and containers).

- Thin Provisioning: Allocates physical space only as data is written, so a volume can be provisioned larger than the space it currently consumes.
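Thin provisioning is easiest to see in a toy model. The class below is a minimal sketch and not how RBD stores images; `ThinVolume` and its methods are hypothetical names chosen for illustration. It shows a volume whose provisioned size is fixed up front while physical allocation grows only with writes.

```python
class ThinVolume:
    """Toy block volume that only consumes space for blocks that were written."""

    def __init__(self, size_bytes: int, block_size: int = 4096):
        self.size_bytes = size_bytes
        self.block_size = block_size
        self._blocks: dict[int, bytes] = {}  # block index -> data, allocated lazily

    def _check(self, index: int) -> None:
        if not 0 <= index < self.size_bytes // self.block_size:
            raise IndexError("block outside the provisioned size")

    def write_block(self, index: int, data: bytes) -> None:
        self._check(index)
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block")
        self._blocks[index] = data

    def read_block(self, index: int) -> bytes:
        self._check(index)
        # Unwritten blocks read back as zeros without ever being stored.
        return self._blocks.get(index, bytes(self.block_size))

    @property
    def allocated_bytes(self) -> int:
        return len(self._blocks) * self.block_size


vol = ThinVolume(size_bytes=10 * 1024**3)      # 10 GiB provisioned
vol.write_block(42, b"\x01" * 4096)            # one 4 KiB block written
print(vol.size_bytes, vol.allocated_bytes)
```

The gap between `size_bytes` and `allocated_bytes` is the space that thin provisioning leaves available to other volumes.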

Ceph Use Cases

Ceph is widely adopted in various industries due to its flexibility and reliability. Some of its primary use cases include:

- Cloud Storage: Many cloud providers use Ceph for backend storage.

- Kubernetes Storage: Ceph integrates with Kubernetes via CSI drivers, typically deployed and managed through the Rook operator.

- Big Data Analytics: Supports high-throughput workloads for AI/ML and analytics applications.

- Enterprise Backup and Archiving: Provides cost-effective long-term data storage solutions.
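For the Kubernetes use case, the integration usually surfaces as a StorageClass that points at the Ceph CSI driver. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl` accepts alongside YAML. The provisioner name `rbd.csi.ceph.com` and the `clusterID` and `pool` parameters come from the ceph-csi RBD driver; the StorageClass name and the placeholder values are assumptions, and a real deployment would also need the secret references that are omitted here.

```python
import json


def rbd_storage_class(name: str, cluster_id: str, pool: str) -> dict:
    """Build a minimal StorageClass manifest for the Ceph RBD CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "rbd.csi.ceph.com",
        "parameters": {
            "clusterID": cluster_id,  # placeholder, depends on the cluster
            "pool": pool,             # placeholder RBD pool name
        },
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }


manifest = rbd_storage_class("ceph-rbd-example", "<cluster-id>", "<pool-name>")
print(json.dumps(manifest, indent=2))
```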

Ceph vs Other Storage Solutions

Ceph competes with various distributed storage systems such as MinIO, GlusterFS, and Simplyblock. The table below compares Ceph with two of these alternatives, MinIO and Simplyblock:

| Feature | Ceph | MinIO | Simplyblock |
|---|---|---|---|
| Storage Type | Object, Block, File | Object | Block |
| Scalability | Very High | High | High |
| Self-Healing | Yes | No | Yes |
| Performance | High | High | Very High |
| Kubernetes Support | Yes | Yes | Yes |
| Best For | General-purpose storage | Object storage | NVMe-based high-performance storage |

Challenges of Using Ceph

While Ceph offers significant advantages, it also has some challenges, including:

- Complexity: Requires expertise to deploy and manage.

- High Resource Requirements: Needs substantial CPU and RAM for optimal performance.

- Latency Issues: Can introduce higher latency compared to specialized NVMe-based solutions like Simplyblock.
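The resource cost can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes the commonly cited defaults of a 4 GiB memory target per OSD and three-way replication; both values are tunable, the node layout is invented for illustration, and the helper names are hypothetical.

```python
GIB = 1024**3
TIB = 1024**4


def osd_memory_estimate(osd_count: int, memory_target_bytes: int = 4 * GIB) -> int:
    """RAM budget for the OSD daemons alone (excludes OS, monitors, page cache)."""
    return osd_count * memory_target_bytes


def usable_capacity(raw_bytes: int, replicas: int = 3) -> int:
    """Capacity left for data when every object is stored `replicas` times."""
    return raw_bytes // replicas


# Hypothetical node: 12 OSDs, each backed by a 4 TiB drive.
node_ram = osd_memory_estimate(osd_count=12)
usable = usable_capacity(raw_bytes=12 * 4 * TIB)
print(node_ram // GIB, "GiB of RAM for OSDs")   # 48 GiB of RAM for OSDs
print(usable // TIB, "TiB usable")              # 16 TiB usable
```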

Ceph and Simplyblock – A Modern Alternative

While Ceph remains a strong choice for large-scale storage, latency-sensitive workloads often need more performance than it typically delivers. Simplyblock is an alternative built on NVMe-over-TCP. It targets lower latency, higher IOPS, and simpler management, which makes it a fit for high-performance applications such as databases, AI/ML workloads, and containerized environments.

For a detailed feature comparison, visit the Simplyblock vs. Ceph breakdown to see how they compare in performance, scalability, and efficiency.


Questions and Answers

Ceph is reliable but often complex to operate and optimize. For high-IOPS, low-latency workloads such as databases or analytics, teams are moving to NVMe-over-TCP solutions that deliver better performance and simpler management on commodity hardware.

Ceph is a legacy SDS platform with broad feature sets but higher operational overhead. Newer SDS platforms like Simplyblock offer native NVMe support, seamless Kubernetes integration, and built-in features like snapshots and encryption, making them ideal for cloud-native environments.

Running Ceph via Rook in Kubernetes introduces complexity, high resource usage, and slower recovery times. In contrast, Kubernetes-native storage solutions provide faster provisioning, better tail latency, and easier day-2 operations.

Ceph remains a good choice for bulk object storage or mixed-use workloads where raw scalability is more important than speed. However, for most cloud-native or performance-first environments, purpose-built SDS platforms offer better results.

Ceph is not natively optimized for NVMe. It can use NVMe disks, but its architecture wasn’t designed for ultra-fast devices. Fully leveraging NVMe’s capabilities requires a more lightweight and protocol-efficient approach such as NVMe-over-TCP.