Hazelcast

Hazelcast as a Distributed In-Memory Data Grid

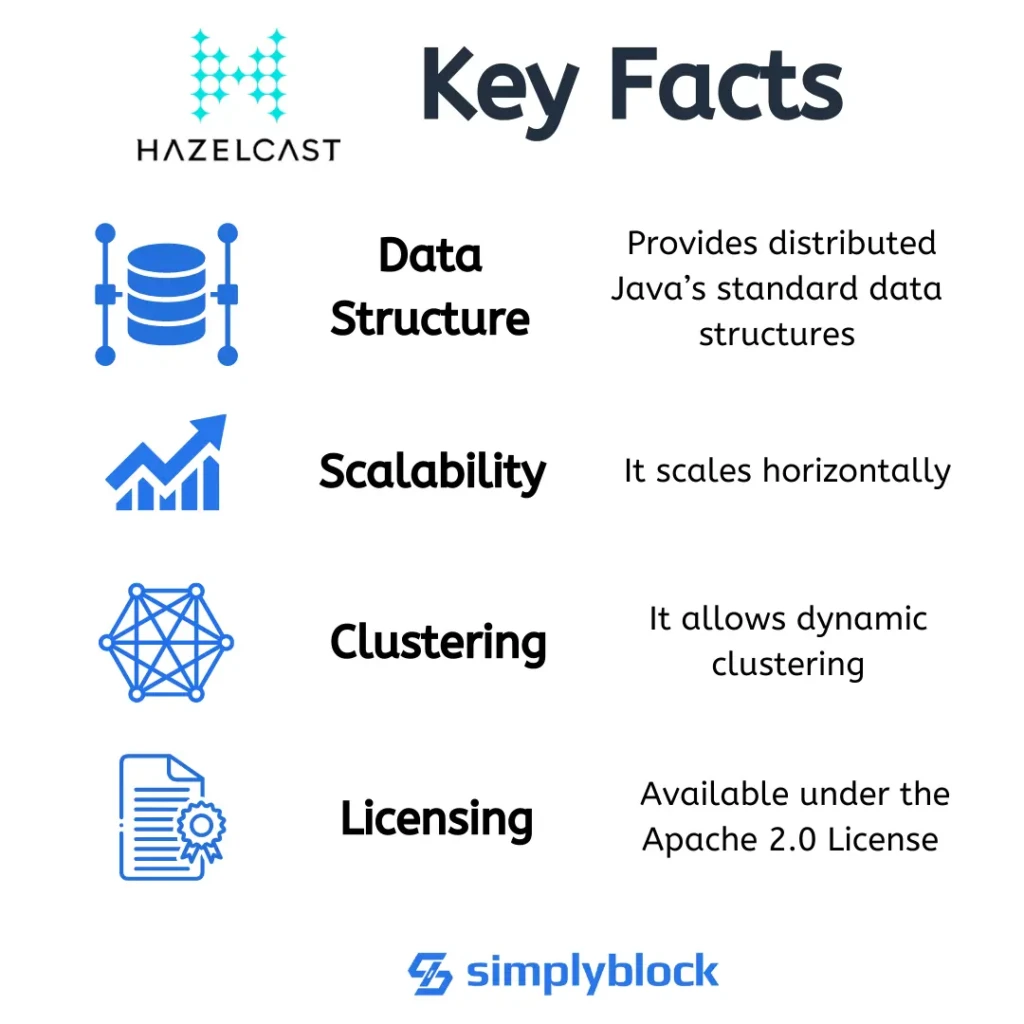

Hazelcast is an open-source, in-memory computing platform that provides low-latency access to distributed data through caching, key-value storage, and stream processing. Designed for real-time applications and scalable architectures, Hazelcast operates as both a distributed cache and an in-memory data grid (IMDG). It offers client libraries for multiple programming languages, including Java, C++, Python, and Go.

Hazelcast helps offload backend systems by holding frequently accessed data close to compute resources. It is often deployed in financial services, telecom, and logistics environments where sub-millisecond response times are required.

Hazelcast Architecture

Hazelcast clusters consist of multiple members that form a peer-to-peer network with no dedicated master node. Each member stores a portion of the data and runs compute operations in parallel, which supports high availability and near-linear scalability as members are added.

Data is stored as key-value pairs and partitioned automatically across cluster members. Hazelcast keeps backup copies of each partition on other members and fails over automatically, so data stays available and consistent when individual nodes fail. Durability across a full cluster restart depends on persistence or an external store such as MapStore.
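The partitioning and backup behavior described above can be modeled in a few lines. This is a simplified sketch, not Hazelcast's implementation: the CRC32 hash, the round-robin placement, and the function names are assumptions made for illustration. The only value taken from Hazelcast itself is the default partition count of 271.

```python
import zlib

PARTITION_COUNT = 271  # Hazelcast's default partition count


def partition_id(key: str) -> int:
    """Map a key to a partition. CRC32 is a stand-in hash (assumption)."""
    return zlib.crc32(key.encode("utf-8")) % PARTITION_COUNT


def assign_partitions(members: list[str]) -> dict[int, tuple[str, str]]:
    """Give each partition a primary owner and one backup on another member.

    Round-robin placement is an illustrative assumption, not the real algorithm.
    """
    if len(members) < 2:
        raise ValueError("need at least two members to place a backup")
    table = {}
    for pid in range(PARTITION_COUNT):
        primary = members[pid % len(members)]
        backup = members[(pid + 1) % len(members)]
        table[pid] = (primary, backup)
    return table


def locate(key: str, table: dict[int, tuple[str, str]]) -> tuple[str, str]:
    """Return the (primary, backup) members responsible for a key."""
    return table[partition_id(key)]


def surviving_owner(key: str, table: dict[int, tuple[str, str]], failed: str) -> str:
    """Return the member that can still serve the key after one member fails."""
    primary, backup = locate(key, table)
    return backup if primary == failed else primary
```

Because every partition has its backup on a different member than its primary, losing any single member in this model leaves one copy of each key reachable.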

Core Features

- Distributed in-memory key-value store

- Pub/sub messaging and distributed queues

- JCache and MapStore integrations

- Native support for SQL-like querying (Jet SQL)

- Stream processing via Hazelcast Jet

- TLS, mutual auth, RBAC, and fine-grained data access controls

- WAN replication and cross-data-center availability

Hazelcast’s in-memory model complements the disaggregated NVMe storage used by simplyblock™, which supports persistent workloads that need ultra-fast response times and scale-out performance.

Hazelcast vs Traditional Caching Solutions

Hazelcast distinguishes itself by blending traditional caching with advanced compute and streaming features. Compared to single-node caches like Memcached or Redis (in standalone mode), Hazelcast offers a fault-tolerant, horizontally scalable runtime with built-in high availability.

Comparison Table

| Feature | Hazelcast | Redis (Standalone) |
|---|---|---|
| Deployment Model | Clustered, peer-to-peer | Single-node or Sentinel-based |
| Persistence | Optional, via MapStore or an external store | Optional (RDB snapshots, AOF) |
| Stream Processing | Built-in (Jet) | External tooling required |
| Query Support | SQL-like, filtering, aggregation | Basic key-based operations |
| Use Case Fit | Low-latency apps, distributed compute | Fast caching |
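To make the "Query Support" row more concrete, the sketch below models how a filtered aggregation can run across partitioned data: each member computes a partial result locally, and the partials are combined. It is a conceptual illustration in plain Python, not Hazelcast's query engine. The `Order` type, the function names, and the sample data are hypothetical. The logic corresponds roughly to a query of the form `SELECT AVG(amount) FROM orders WHERE region = 'EU'`.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Order:  # hypothetical record type for illustration
    order_id: str
    region: str
    amount: float


def local_partial(partition: list[Order], predicate: Callable[[Order], bool]) -> tuple[float, int]:
    """Run the filter on one member's data and return (sum, count)."""
    matching = [order.amount for order in partition if predicate(order)]
    return sum(matching), len(matching)


def distributed_average(partitions: list[list[Order]],
                        predicate: Callable[[Order], bool]) -> Optional[float]:
    """Combine per-partition partial results into a global average."""
    total, count = 0.0, 0
    for partition in partitions:
        partial_sum, partial_count = local_partial(partition, predicate)
        total += partial_sum
        count += partial_count
    return total / count if count else None


# Sample data spread over three simulated members.
partitions = [
    [Order("o1", "EU", 10.0), Order("o2", "US", 99.0)],
    [Order("o3", "EU", 20.0)],
    [Order("o4", "EU", 30.0), Order("o5", "APAC", 5.0)],
]
eu_average = distributed_average(partitions, lambda order: order.region == "EU")
```

A key-only cache would need the application to fetch every entry and filter client-side. Moving the filter and partial aggregation to where the data lives is what keeps this pattern cheap at scale.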

In multi-tenant Kubernetes environments, simplyblock’s QoS and isolation controls allow Hazelcast clusters to be hosted with confidence across multiple teams or business units.

Hazelcast Use Cases

Hazelcast is commonly used in applications where latency and throughput requirements exceed the capabilities of disk-backed systems. Typical deployments include:

- Distributed session management in web-scale applications

- Leaderboards and real-time scoring systems

- Microservices communication via distributed pub/sub

- Fraud detection and alerting using event stream processing

- Real-time analytics pipelines in manufacturing and logistics

- Data offloading from relational systems for faster lookups
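As one illustration of the fraud detection use case, the following sketch applies a sliding-window velocity rule to a stream of card events. It is a plain Python model of the idea, not a Hazelcast Jet pipeline. The `VelocityRule` class, its thresholds, and the assumption that events arrive in timestamp order per card are all hypothetical.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag a card that produces more than max_events within window_seconds.

    Assumes events for each card arrive in non-decreasing timestamp order.
    """

    def __init__(self, window_seconds: float, max_events: int):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self._events = defaultdict(deque)  # card_id -> timestamps in window

    def observe(self, card_id: str, timestamp: float) -> bool:
        """Record one event and return True if the card is now suspicious."""
        window = self._events[card_id]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        cutoff = timestamp - self.window_seconds
        while window and window[0] <= cutoff:
            window.popleft()
        return len(window) > self.max_events
```

Keeping per-key window state in memory is what allows this kind of rule to be evaluated on every event. In a distributed setting the state would be partitioned by `card_id` so that each member handles only its own keys.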

For high-throughput systems backed by durable storage, software-defined NVMe storage can provide fast persistence and recovery for state that Hazelcast externalizes.

Kubernetes and Storage Considerations

Hazelcast supports cloud-native deployments through its Kubernetes Operator and Helm charts. Stateful components, such as persistent queues, MapStore-backed datasets, or Jet pipelines, can benefit from persistent volume claims provisioned through a Container Storage Interface (CSI) driver.

When deploying Hazelcast alongside NVMe over TCP volumes, engineers gain flexibility in scaling stateful workloads with high IOPS, which helps avoid storage bottlenecks in stream- or cache-intensive architectures.

Integration with Simplyblock Storage Architecture

Hazelcast’s memory-first model pairs well with fast persistent backends. For deployments requiring durable backups or fail-safe cache overflow, erasure-coded NVMe storage offers redundancy with minimal capacity overhead.
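The capacity argument for erasure coding can be checked with simple arithmetic. The sketch below compares the raw capacity needed for full replication with that of a k+m erasure-coded layout. The specific schemes (three copies, 4 data + 2 parity chunks) are illustrative assumptions, not simplyblock defaults.

```python
def replication_overhead(copies: int) -> float:
    """Extra raw capacity per unit of usable data when storing full copies."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return float(copies - 1)


def erasure_coding_overhead(data_chunks: int, parity_chunks: int) -> float:
    """Extra raw capacity per unit of usable data for a k+m erasure code."""
    if data_chunks < 1 or parity_chunks < 0:
        raise ValueError("need at least one data chunk and no negative parity")
    return parity_chunks / data_chunks


def raw_capacity_needed(usable_gib: float, overhead: float) -> float:
    """Raw capacity required to store usable_gib at the given overhead."""
    return usable_gib * (1 + overhead)


# Both layouts below tolerate the loss of two devices or nodes.
three_way = raw_capacity_needed(100, replication_overhead(3))      # 3 full copies
ec_4_plus_2 = raw_capacity_needed(100, erasure_coding_overhead(4, 2))  # 4 data + 2 parity
```

Under these assumptions, 100 GiB of backups needs 300 GiB raw with three-way replication and 150 GiB raw with the 4+2 layout.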

Simplyblock can be used to:

- Support streaming data archives for post-processing

- Store Hazelcast backups and snapshots

- Serve as overflow storage for MapStore data

- Enable fast recovery after node failures
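The MapStore pattern mentioned above pairs an in-memory map with a persistent store: writes go through to the store, and reads that miss in memory are loaded from it. The sketch below models that pattern in plain Python with SQLite as a stand-in backend. The `load`, `store`, and `delete` method names mirror the spirit of Hazelcast's Java `MapStore` interface, but the classes themselves are hypothetical and this is not Hazelcast client code.

```python
import sqlite3
from typing import Optional


class SqliteStore:
    """Stand-in persistent backend (assumption: any durable volume would do)."""

    def __init__(self, path: str = ":memory:"):
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS entries (key TEXT PRIMARY KEY, value TEXT)"
        )

    def load(self, key: str) -> Optional[str]:
        row = self._db.execute(
            "SELECT value FROM entries WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else None

    def store(self, key: str, value: str) -> None:
        self._db.execute(
            "INSERT OR REPLACE INTO entries (key, value) VALUES (?, ?)", (key, value)
        )
        self._db.commit()

    def delete(self, key: str) -> None:
        self._db.execute("DELETE FROM entries WHERE key = ?", (key,))
        self._db.commit()


class WriteThroughMap:
    """In-memory map that writes through to, and reads through from, a store."""

    def __init__(self, store: SqliteStore):
        self._store = store
        self._memory = {}

    def put(self, key: str, value: str) -> None:
        self._store.store(key, value)  # persist first, then cache
        self._memory[key] = value

    def get(self, key: str) -> Optional[str]:
        if key in self._memory:
            return self._memory[key]
        value = self._store.load(key)  # read-through on a memory miss
        if value is not None:
            self._memory[key] = value
        return value

    def evict(self, key: str) -> None:
        """Drop the in-memory copy only; the stored copy remains."""
        self._memory.pop(key, None)

    def remove(self, key: str) -> None:
        self._store.delete(key)
        self._memory.pop(key, None)
```

In this model, a fresh `WriteThroughMap` created over the same store can serve previously written keys, which is the recovery property that a fast persistent backend is meant to support.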

Related Terms

Teams often review these glossary pages alongside Hazelcast when they design low-latency, stateful services and set targets for NVMe/TCP, Kubernetes Storage, and Software-defined Block Storage.

Questions and Answers

What is Hazelcast used for?

Hazelcast enables fast data processing by storing data in memory and distributing workloads across nodes. It excels in scenarios like fraud detection, session storage, and streaming analytics, where low latency and high availability are crucial.

How does Hazelcast compare to Redis?

Hazelcast and Redis are both popular for caching, but Hazelcast offers built-in support for distributed computation and clustering without requiring external add-ons. Redis is often favored for simplicity and raw speed, while Hazelcast provides a more comprehensive in-memory data grid with support for distributed objects and services.

Can Hazelcast run on Kubernetes?

Yes, Hazelcast provides native Kubernetes support, including Helm charts and automatic discovery via Kubernetes APIs. It is a good fit for containerized applications and can be paired with Kubernetes storage solutions like simplyblock for high-performance persistent volumes.

Is Hazelcast suitable for event-driven systems?

Hazelcast is well-suited for event-driven systems thanks to its distributed topic and publish/subscribe features. It can handle real-time data streams and processing tasks, which makes it useful for use cases such as fraud detection or live analytics.

What storage works well with Hazelcast for persistence?

For persistent storage of offloaded data or backups, Hazelcast can be paired with high-throughput storage such as NVMe over TCP. This keeps latency low while preserving data beyond the limits of memory.