Marqo


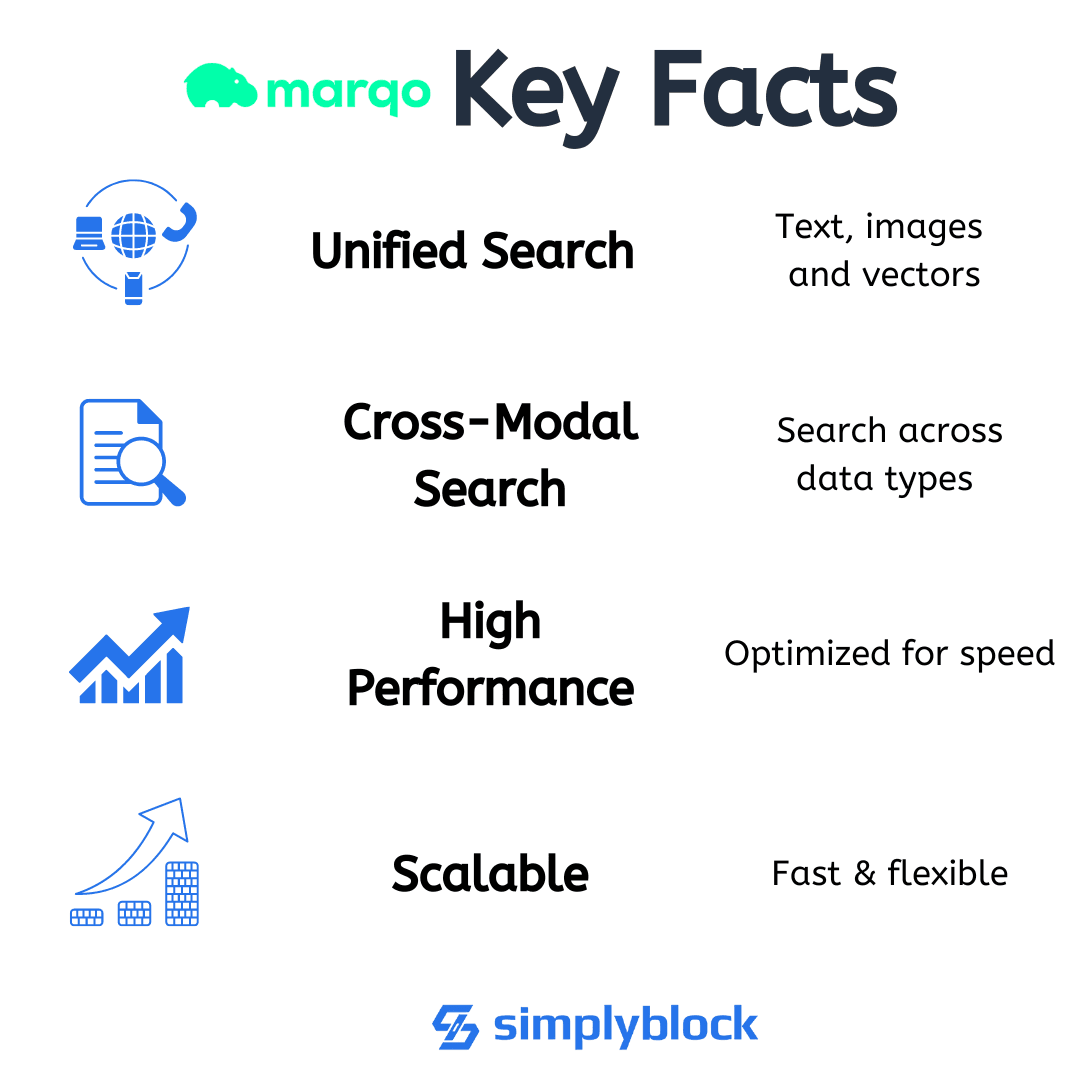

Marqo is an open-source vector search engine optimized for indexing and querying unstructured data using machine learning models. Unlike traditional keyword-based search tools, Marqo enables semantic search, allowing users to retrieve relevant results based on meaning rather than exact terms. It supports multimodal inputs, including text, images, and hybrid content, making it suitable for AI-driven search applications.

By combining vector embeddings with a real-time search engine backend, Marqo returns relevant results in scenarios where traditional keyword search falls short, such as natural language queries, product discovery, and content recommendation.

How Marqo Works

Marqo automatically transforms documents into vector embeddings using integrated machine learning models. These vectors represent the semantic meaning of content and allow for nearest-neighbor searches based on cosine similarity or other distance metrics.

Under the hood, Marqo 1.x runs on top of OpenSearch and uses its scalable architecture for indexing and querying (Marqo 2.0 and later replaced OpenSearch with Vespa as the backend). The engine updates indices in real time, supports full-text search, and handles diverse data types without requiring manual feature engineering.

Each search query is also converted into an embedding, enabling comparison against stored vectors to retrieve semantically similar documents, even if there are no exact keyword matches.
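The embed-then-compare flow described above can be sketched in a few lines. This is a toy illustration and not Marqo's implementation: the `LEXICON` concept table and the `embed`, `cosine`, and `search` functions are hypothetical stand-ins for a learned embedding model and an ANN index.

```python
import math

# Toy "model": maps words to concept dimensions. A real deployment would use a
# learned embedding model; this hand-written lexicon is a hypothetical stand-in.
CONCEPTS = ["outerwear", "rain", "footwear", "cooking"]
LEXICON = {
    "jacket": "outerwear", "coat": "outerwear", "shell": "outerwear",
    "waterproof": "rain", "rain": "rain", "storm": "rain",
    "boots": "footwear", "shoes": "footwear",
    "pan": "cooking", "skillet": "cooking",
}

def embed(text):
    """Turn text into a vector by counting the concepts its words map to."""
    vec = [0.0] * len(CONCEPTS)
    for word in text.lower().split():
        concept = LEXICON.get(word)
        if concept is not None:
            vec[CONCEPTS.index(concept)] += 1.0
    return vec

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def search(query, documents, limit=2):
    """Embed the query, score every document, return the best matches."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]

DOCS = ["storm shell for trail running", "cast iron skillet", "leather boots"]
results = search("waterproof jacket", DOCS)
for score, doc in results:
    print(f"{score:.2f}  {doc}")
```

The query shares no keywords with the top-ranked document; the match comes entirely from the two texts landing close together in the vector space. A production engine replaces the exhaustive scoring loop with an approximate nearest-neighbor index.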

Marqo Use Cases

Marqo is engineered for modern search applications where user intent and content relevance matter more than keyword frequency. Its typical use cases include:

- E-commerce Product Search: Search by product features or natural language descriptions (e.g., “lightweight waterproof jacket for hiking”).

- Enterprise Knowledge Management: Unified search across documentation, support tickets, and media files.

- Multimodal AI Applications: Index and query text-image combinations for intelligent recommendations.

- Content-Based Retrieval: Find similar articles, documents, or posts based on meaning, not metadata.

- Media and Image Search: Search via image inputs using integrated vision models.

In AI-heavy environments, integrating Marqo with high-performance storage backends like simplyblock™ helps keep query latency low at scale, especially for vector-heavy workloads that depend on sub-millisecond storage access times.

Marqo vs. Other Vector Search Engines

Marqo differentiates itself by combining full-text and semantic search into a unified experience. It’s also designed to be more developer-friendly and accessible out of the box.
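One common way to merge a keyword ranking and a vector ranking into a single result list is reciprocal rank fusion (RRF). The sketch below illustrates that general technique only; it is not taken from Marqo's source, and the document IDs, the `reciprocal_rank_fusion` helper, and the constant `k=60` are assumptions chosen for illustration.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document receives 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# Hypothetical outputs of a keyword retriever and a vector retriever.
keyword_ranking = ["doc_b", "doc_a", "doc_c"]
vector_ranking = ["doc_a", "doc_d", "doc_b"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)
```

Because `doc_a` and `doc_b` appear in both lists, they outrank `doc_d` and `doc_c`, which each appear in only one.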

Comparison Table

| Feature | Marqo | Weaviate | Pinecone | Elasticsearch + KNN |
|---|---|---|---|---|
| Open-source | Yes | Yes | No (closed SaaS) | Yes |
| Multimodal Support | Yes | Partial | No | No |
| Full-Text + Vector Search | Unified | Separate | Separate | Separate |
| Backend | OpenSearch (1.x); Vespa (2.x) | Custom DB | Proprietary | Elasticsearch |
| ML Model Integration | Native (zero config) | Plugin-based | External | External |

Marqo is uniquely positioned for organizations seeking both fast deployment and advanced search features in a single open platform.

Architecture and Performance

In the OpenSearch-based architecture (Marqo 1.x), Marqo runs as a stateless API layer that connects to an OpenSearch cluster. Documents are converted into embeddings at index time, and vector similarity search uses OpenSearch’s approximate nearest neighbor (ANN) capabilities.
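For capacity planning, the OpenSearch k-NN documentation gives a rule of thumb for the native memory an HNSW graph needs: about `1.1 * (4 * dimension + 8 * M)` bytes per vector. The sketch below applies that estimate; the function name `hnsw_memory_bytes` and the example figures (one million 768-dimensional vectors, `M = 16`) are assumptions for illustration, not measurements from a Marqo deployment.

```python
def hnsw_memory_bytes(num_vectors, dimension, m):
    """Rough HNSW memory estimate: 1.1 * (4 * d + 8 * M) bytes per vector.

    4 * d covers the float32 vector itself, 8 * M approximates the graph
    links, and the 1.1 factor adds about 10% overhead.
    """
    return 1.1 * (4 * dimension + 8 * m) * num_vectors

# Hypothetical index: 1,000,000 vectors of 768 dimensions with M = 16.
estimate = hnsw_memory_bytes(num_vectors=1_000_000, dimension=768, m=16)
print(f"{estimate / 2**30:.2f} GiB")
```

With these example inputs the estimate comes to roughly 3.3 GiB, which gives a sense of how much index data a node has to read from storage when it loads or reloads the graph.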

Key architectural strengths include:

- Stateless API: Easy to scale horizontally.

- Built-in Model Inference: No need to manage external embedding pipelines.

- Real-time Indexing: New content is searchable in seconds.

- Support for Popular Models: Including CLIP for images and sentence-transformers for text.

To meet performance demands in production environments, vector indices benefit from fast NVMe-backed storage and low-latency software-defined storage (SDS) platforms like simplyblock. NVMe over TCP provides high-speed reads over standard Ethernet, and erasure coding makes writes fault-tolerant, all without specialized hardware.
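The capacity cost of erasure coding compared with plain replication comes down to simple arithmetic. The sketch below assumes a hypothetical 4+2 scheme (four data chunks, two parity chunks) and a 10 TiB index; neither number comes from simplyblock documentation, and the function names are illustrative only.

```python
def erasure_coded_raw_bytes(usable_bytes, data_chunks, parity_chunks):
    """Raw capacity needed to store usable_bytes with k data + m parity chunks."""
    return usable_bytes * (data_chunks + parity_chunks) / data_chunks

def replicated_raw_bytes(usable_bytes, copies):
    """Raw capacity needed to store usable_bytes with full replicas."""
    return usable_bytes * copies

TIB = 2**40
index_size = 10 * TIB  # hypothetical size of the vector index data

ec_raw = erasure_coded_raw_bytes(index_size, data_chunks=4, parity_chunks=2)
rep_raw = replicated_raw_bytes(index_size, copies=3)

print(f"4+2 erasure coding: {ec_raw / TIB:.1f} TiB raw")
print(f"3x replication:     {rep_raw / TIB:.1f} TiB raw")
```

Both layouts in this example survive the loss of two chunks or copies, but the erasure-coded layout needs 1.5 times the usable capacity while triple replication needs 3 times.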

Deploying Marqo in Kubernetes or Cloud Environments

Marqo supports containerized deployment via Docker and Helm charts, making it Kubernetes-ready. In distributed architectures, using a persistent storage backend such as simplyblock for Kubernetes offers:

- Dynamic provisioning with CSI

- Persistent volumes for embedding indexes

- High IOPS for ANN performance

- Resilience through data redundancy, plus capacity efficiency through thin provisioning

This enables teams to build and scale AI search capabilities without bottlenecks in storage I/O or reliability.
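As a rough illustration of dynamic provisioning, the sketch below builds a PersistentVolumeClaim manifest for the index data and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The claim name `marqo-index-data`, the storage class name `simplyblock-csi-sc`, and the 200Gi size are hypothetical placeholders; use the storage class your CSI driver installation actually defines.

```python
import json

def build_pvc(name, storage_class, size):
    """Return a Kubernetes PersistentVolumeClaim manifest as a dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Single-node read/write access is typical for block volumes.
            "accessModes": ["ReadWriteOnce"],
            # Naming a storage class lets the CSI driver provision the volume.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }

# All three arguments are placeholder values, not defaults from any product.
pvc = build_pvc("marqo-index-data", "simplyblock-csi-sc", "200Gi")
manifest = json.dumps(pvc, indent=2)
print(manifest)
```

Saving the printed output to a file and applying it creates the claim; the pod that runs the search backend then mounts the claim by name.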

Related Terms

Teams often review these glossary pages alongside Marqo when comparing vector search stacks and planning storage performance for Kubernetes Storage and Software-defined Block Storage.

Weaviate

Qdrant

Pinecone

Elasticsearch

Questions and Answers

What is Marqo used for?

Marqo is purpose-built for semantic search using machine learning models like transformers. It lets you search unstructured data such as images and text based on meaning, not just keywords, which suits AI-driven applications that need accurate and relevant search results.

How does Marqo work?

Marqo automatically converts input data into vector embeddings using transformer models. It stores and indexes these vectors for similarity-based search. This makes Marqo a good fit for applications like product discovery, document retrieval, and multimodal search experiences.

Can Marqo be deployed in Kubernetes?

Yes, Marqo can be deployed in Kubernetes using containers. To support performance at scale, it benefits from fast Kubernetes-native NVMe storage, which improves indexing speed and lowers query latency for large vector datasets.

Why does storage performance matter for Marqo?

Vector search requires high IOPS and low-latency storage. NVMe over TCP is well suited to workloads like Marqo that perform heavy indexing and large-scale similarity search across embeddings, and it helps keep response times fast even at scale.

Can Marqo be used in multi-tenant environments?

Yes, Marqo can be configured for multi-tenant use, especially when backed by a storage layer with per-tenant encryption-at-rest. This allows secure separation of customer data in AI and SaaS platforms while maintaining high throughput.