SLO


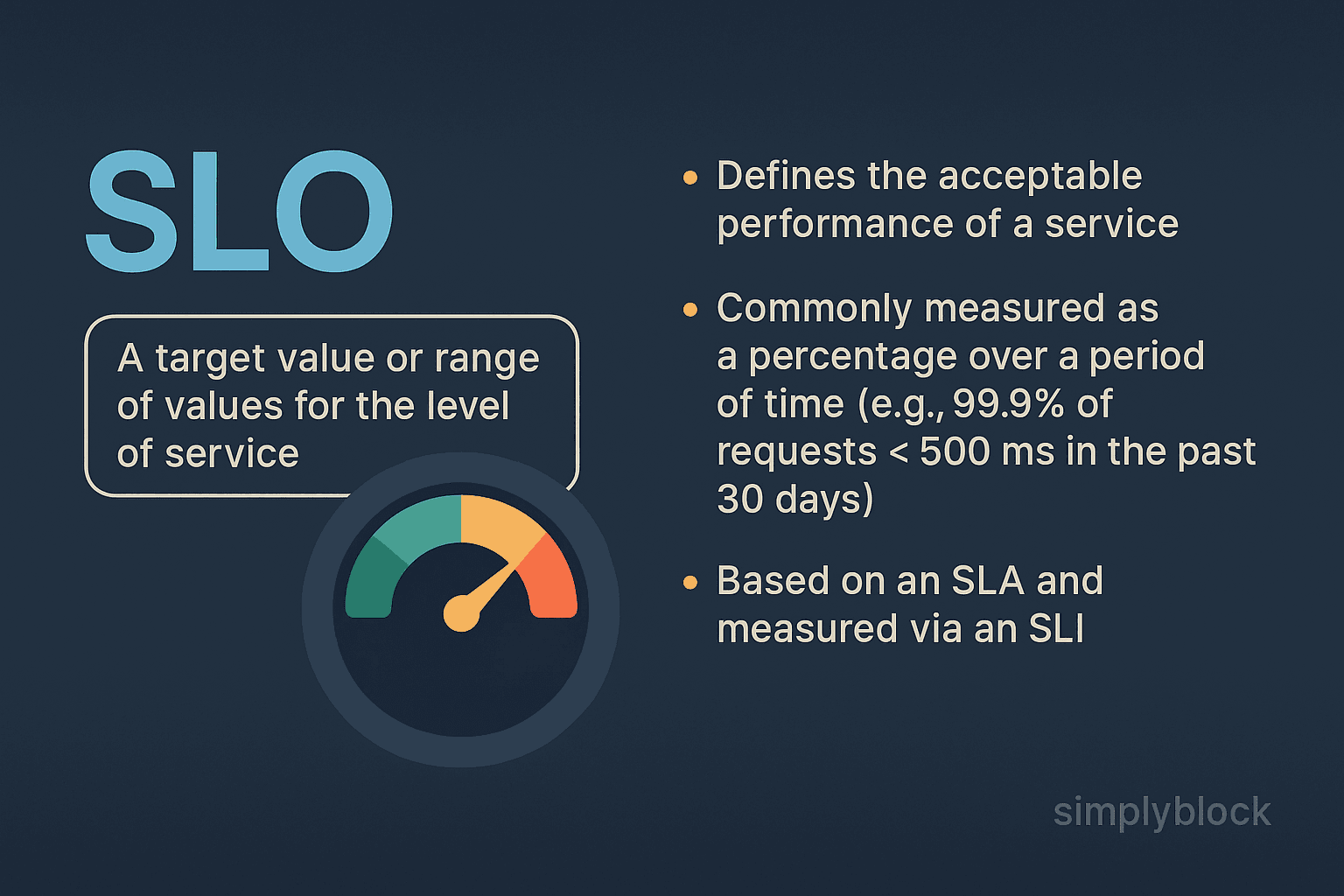

An SLO (Service Level Objective) is a specific, measurable performance target agreed upon between a service provider and a customer, or internally within an engineering team. It defines the acceptable level of service performance over time, such as uptime, latency, or throughput, and is a key part of any Service Level Agreement (SLA).

Unlike an SLA, which is a contractual commitment, an SLO is often operational and internal, used by Site Reliability Engineering (SRE) teams and DevOps practitioners to maintain service reliability and set realistic goals for system behavior. SLOs also serve as the bridge between high-level SLAs and low-level Service Level Indicators (SLIs).

How SLOs Work

SLOs are typically expressed as a target percentage measured over a defined time window. For example:

- “99.9% availability over the last 30 days”

- “95% of API requests must complete within 300 ms.”

Each SLO aligns with one or more SLIs, such as latency, error rate, or system uptime. If an SLO is breached, it may trigger alerts, incident response procedures, or engineering reviews. Unlike SLA breaches, SLO violations usually don’t incur financial penalties, but tracking them helps maintain service health by identifying reliability risks early.
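As a minimal sketch of how an SLI is compared against an SLO, the Python snippet below evaluates the "95% of API requests must complete within 300 ms" example. The `RatioSLO` class, the helper names, and the sample latencies are hypothetical and chosen only for illustration; they are not part of any specific monitoring product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatioSLO:
    """Hypothetical SLO: at least `target` of all events must be 'good'."""
    name: str
    target: float  # e.g. 0.95 means 95%


def latency_sli(latencies_ms, threshold_ms):
    """SLI: fraction of requests that completed within threshold_ms."""
    if not latencies_ms:
        raise ValueError("no requests observed in the window")
    good = sum(1 for ms in latencies_ms if ms <= threshold_ms)
    return good / len(latencies_ms)


def is_met(slo, sli_value):
    """An SLO is met when the measured SLI reaches the target."""
    return sli_value >= slo.target


# Made-up sample window of request latencies in milliseconds.
window = [120, 180, 250, 310, 90, 140, 200, 275, 295, 150]
slo = RatioSLO(name="api-latency-300ms", target=0.95)
sli = latency_sli(window, threshold_ms=300)
print(f"{slo.name}: SLI={sli:.0%}, met={is_met(slo, sli)}")
```

In this sample, 9 of 10 requests finish within 300 ms, so the SLI is 90% and the 95% objective is missed. In practice the window would hold far more requests and come from a metrics system rather than a hard-coded list.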

In distributed systems and software-defined storage platforms, SLOs guide resource allocation, autoscaling, and failover policies to preserve service guarantees across Kubernetes clusters, edge nodes, and hybrid cloud deployments.

Benefits of SLOs

Implementing well-defined SLOs provides several technical and strategic advantages:

- Quantifies Reliability: Converts abstract expectations into measurable metrics.

- Aligns Engineering Focus: Helps DevOps and SRE teams prioritize reliability and user experience.

- Supports SLAs: Acts as a foundation for externally-facing service commitments.

- Prevents Overengineering: Avoids wasting resources on marginal improvements that offer little real benefit.

- Improves Incident Management: Triggers alerts when error budgets are consumed, supporting faster response and resolution.

SLOs are a critical part of systems that serve high-throughput, low-latency applications, especially in Kubernetes, multi-tenant platforms, and distributed databases.
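The error budget mentioned above is the amount of unreliability an SLO still permits. The sketch below, which assumes an availability SLO measured in minutes of downtime, derives the budget for "99.9% availability over 30 days" and the share that remains after some downtime. The function names and the 10.8-minute downtime figure are illustrative assumptions.

```python
def error_budget_minutes(target, window_days):
    """Total downtime allowed by an availability target over the window."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    window_minutes = window_days * 24 * 60
    return (1.0 - target) * window_minutes


def budget_remaining(target, window_days, downtime_minutes):
    """Fraction of the error budget still unspent (negative once overspent)."""
    budget = error_budget_minutes(target, window_days)
    return 1.0 - downtime_minutes / budget


budget = error_budget_minutes(0.999, 30)
print(f"budget: {budget:.1f} min")  # 0.1% of 43,200 minutes
print(f"remaining: {budget_remaining(0.999, 30, 10.8):.0%}")
```

A 99.9% target over 30 days leaves roughly 43.2 minutes of allowed downtime. Once that budget is spent, teams commonly shift effort from feature work to reliability work.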

SLO vs SLI vs SLA

These terms are closely related but serve distinct purposes. Here’s a comparison:

| Term | Stands For | Description | Example |
|---|---|---|---|
| SLA | Service Level Agreement | Legal contract with penalties | 99.9% uptime guarantee |
| SLO | Service Level Objective | Operational performance target | 99.95% success rate |
| SLI | Service Level Indicator | Raw metric/data source | API latency, error rate |

While SLAs are external and enforceable, SLOs are internal targets, and SLIs are the measurable data used to evaluate them.

Use Cases for SLOs

SLOs are used in a variety of technical and operational scenarios:

- Kubernetes Orchestration: Ensuring persistent volumes maintain < 1 ms latency.

- Storage Infrastructure: Maintaining IOPS and throughput for production workloads.

- SaaS Platforms: Guaranteeing login API responses remain under 500 ms for 99.9% of users.

- Multi-Cloud Deployments: Aligning storage and compute SLAs across cloud regions with consistent SLOs.

- Edge Environments: Monitoring service uptime and replication success in latency-sensitive zones.

In modern SDS environments, SLOs help guide how data is distributed, replicated, and recovered, especially when used with erasure coding or NVMe-over-TCP for high-performance workloads.

SLOs in Simplyblock™

Simplyblock enables real-time observability and policy enforcement to help customers define and meet SLOs for their workloads. Capabilities include:

- Real-Time IOPS and Latency Monitoring: Fine-grained metrics to track SLI values.

- Multi-Tenant QoS Enforcement: SLOs enforced per tenant or workload.

- Error Budget Tracking: Engineering teams can monitor deviation from defined SLOs.

- Scalable Alerts: Threshold-based alerts when an SLO is nearing violation.

- Cross-Cloud SLO Policies: Uniform objectives across hybrid and edge deployments.

By aligning platform behavior with SLO definitions, simplyblock helps teams deliver consistent performance and availability, even under failure or peak load conditions.
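One common way to detect that an SLO is "nearing violation" is to watch the burn rate, meaning how quickly the error budget is being consumed relative to what the target allows. The following is a generic sketch of that idea and not simplyblock's API; the function names, the 99.95% target, and the alert threshold of 2.0 are assumptions made for illustration.

```python
def burn_rate(bad_events, total_events, target):
    """Ratio of the observed error rate to the error rate the SLO allows.

    A value of 1.0 spends the budget exactly over the full SLO window;
    higher values exhaust it sooner.
    """
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    if total_events == 0:
        return 0.0  # no traffic, so no budget was spent
    observed_error_rate = bad_events / total_events
    allowed_error_rate = 1.0 - target
    return observed_error_rate / allowed_error_rate


def should_alert(bad_events, total_events, target, threshold=2.0):
    """Alert when the budget is burning at `threshold` times the allowed pace."""
    return burn_rate(bad_events, total_events, target) >= threshold


# Hypothetical hour of traffic against a 99.95% success-rate SLO.
print(should_alert(bad_events=30, total_events=20_000, target=0.9995))
print(should_alert(bad_events=5, total_events=20_000, target=0.9995))
```

With 30 failures in 20,000 requests the burn rate is about 3, which trips the alert; with 5 failures it is about 0.5, which does not. Production setups often evaluate several window lengths at once so that short spikes and slow leaks are both caught.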

Related Terms

Teams often review these glossary pages alongside Service Level Objective (SLO) when they turn latency and availability targets into enforceable controls for production platforms.

- Storage Quality of Service (QoS)

- Tail Latency

- In-Network Computing

- Storage Offload on DPUs


Questions and Answers

An SLO (Service Level Objective) is a measurable performance target—such as uptime, latency, or throughput—that defines the expected behavior of a service. It forms the foundation of an SLA (Service Level Agreement) and helps set realistic expectations for cloud-native storage performance.

SLOs guide how storage systems are architected, monitored, and optimized. For example, an SLO might specify < 1 ms latency or 99.99% availability, which storage platforms built on NVMe over TCP can meet through high-speed, fault-tolerant designs.

In Kubernetes, SLOs are typically upheld through mechanisms such as autoscaling, pod health checks, and CSI-backed persistent volumes. Storage backends must meet SLO targets for IOPS, latency, and availability to support stateful workloads reliably.

SLOs can also cover security and compliance requirements. In regulated environments, they often include guarantees around encryption at rest, secure key management, and data isolation, especially in multi-tenant architectures where compliance is critical.

An SLO is the goal (e.g., 99.95% uptime), while an SLI (Service Level Indicator) is the actual measured value (e.g., current uptime is 99.97%). Together, they help track performance against agreed service benchmarks in software-defined storage systems.