Data Locality

Terms related to simplyblock

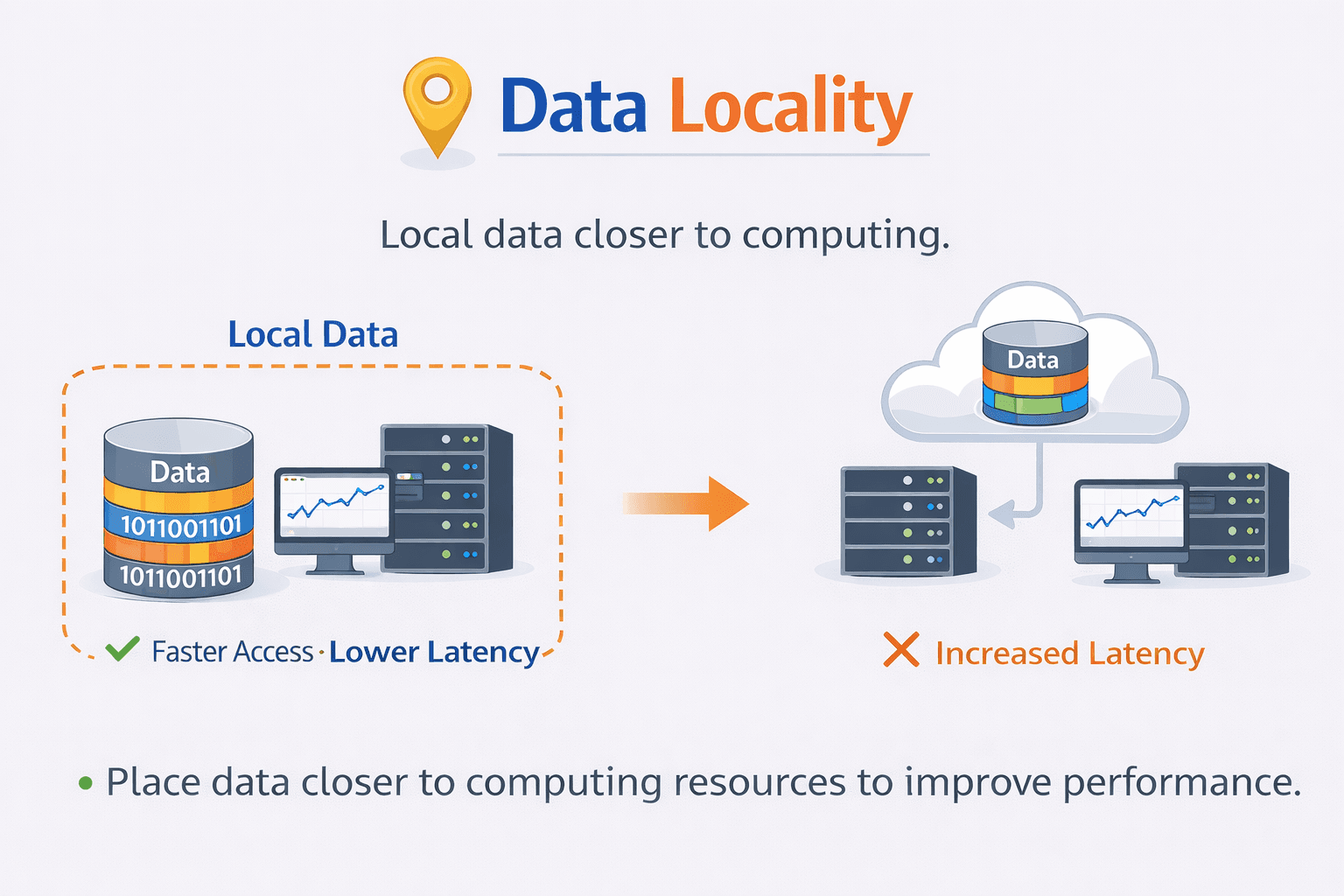

Data locality describes how close your data sits to the compute that uses it. When a workload reads from and writes to storage on the same node, rack, or zone, it gains locality. When most of its I/O must cross the network to reach another node or zone, locality drops.
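To make the node, rack, and zone tiers concrete, here is a minimal Python sketch that classifies one I/O path by the tightest failure domain that compute and data share. The `Location` type and the zone, rack, and node names are hypothetical placeholders, not part of any Kubernetes or simplyblock API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """Hypothetical placement record for a pod or a volume replica."""
    zone: str
    rack: str
    node: str


def locality_level(compute: Location, data: Location) -> str:
    """Return the tightest failure domain that compute and data share."""
    if compute.zone != data.zone:
        return "cross-zone"   # I/O leaves the zone
    if compute.rack != data.rack:
        return "same-zone"    # I/O crosses racks inside one zone
    if compute.node != data.node:
        return "same-rack"    # I/O crosses nodes inside one rack
    return "same-node"        # fully local I/O


if __name__ == "__main__":
    pod = Location("zone-a", "rack-1", "node-1")
    print(locality_level(pod, Location("zone-a", "rack-1", "node-1")))  # same-node
    print(locality_level(pod, Location("zone-a", "rack-2", "node-5")))  # same-zone
    print(locality_level(pod, Location("zone-b", "rack-1", "node-9")))  # cross-zone
```

Checking the zone first matters: rack names are often reused across zones, so two "rack-1" locations in different zones must still count as cross-zone.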

Executives care about data locality because it shapes tail latency, cluster efficiency, and cost. DevOps teams care because locality reduces incidents caused by cross-node traffic and uneven placement. Locality also affects recovery. A failover may keep the service up, but it can still add latency if the data path becomes remote.

In Kubernetes Storage, locality often changes during normal operations. A node drain, a rolling update, or an autoscaler event can move compute faster than storage can follow. That gap explains many “mystery” latency spikes in databases and streaming platforms.

Locality Optimization in Today’s Architectures

Older storage stacks assumed a central array, stable servers, and predictable paths. Cloud-native platforms broke that assumption. Pods move often, and multi-tenant clusters share fabrics and CPUs.

Modern platforms improve locality by aligning three layers: placement policy, storage topology, and the I/O path. A Software-defined Block Storage platform helps because it can expose topology signals, enforce per-tenant QoS, and reduce overhead in the data path.

Bare-metal deployments amplify these gains. Direct access to NVMe devices removes hypervisor noise and lowers jitter, especially for high-IOPS workloads. You also gain clearer NUMA behavior, which supports stable latency under load.

Data Placement in Kubernetes Scheduling

Kubernetes Storage locality depends on where the scheduler places pods and where the storage system serves blocks. If those two decisions drift apart, remote I/O becomes the default.
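The drift between those two decisions can be checked mechanically. The minimal Python sketch below is modeled loosely on the shape of the `nodeAffinity` field that a Kubernetes PersistentVolume can carry: it tests whether a node's labels satisfy a volume's topology constraints. It handles only the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators and ignores `matchFields`, so treat it as an illustration rather than the scheduler's actual logic.

```python
from typing import Any

ZONE_KEY = "topology.kubernetes.io/zone"  # well-known Kubernetes node label


def expression_matches(labels: dict[str, str], expr: dict[str, Any]) -> bool:
    """Evaluate one node selector requirement against a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not handled in this sketch: {op}")


def node_satisfies(labels: dict[str, str], node_affinity: dict[str, Any]) -> bool:
    """Terms are ORed; expressions inside one term are ANDed."""
    terms = node_affinity["required"]["nodeSelectorTerms"]
    return any(
        # An empty term matches nothing, so require at least one expression.
        bool(term.get("matchExpressions"))
        and all(expression_matches(labels, e) for e in term["matchExpressions"])
        for term in terms
    )


if __name__ == "__main__":
    volume_affinity = {"required": {"nodeSelectorTerms": [
        {"matchExpressions": [{"key": ZONE_KEY, "operator": "In", "values": ["zone-a"]}]}
    ]}}
    print(node_satisfies({ZONE_KEY: "zone-a"}, volume_affinity))  # True
    print(node_satisfies({ZONE_KEY: "zone-b"}, volume_affinity))  # False
```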

Topological alignment matters most for StatefulSets, sharded databases, and log-heavy services. These workloads issue frequent reads and writes, so even a small network penalty multiplies quickly. A platform can keep locality high by giving the scheduler usable hints and by honoring them during provisioning and attachment.
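A rough worked example shows how the penalty multiplies, assuming each dependent I/O in a request pays the same fixed extra delay when served remotely. The 40 I/Os, 100 µs penalty, and 2 ms base latency are illustrative assumptions, not measurements.

```python
def added_latency_ms(serial_ios: int, remote_penalty_us: float) -> float:
    """Extra latency when each dependent I/O in a request pays a remote penalty."""
    return serial_ios * remote_penalty_us / 1000.0


def serial_throughput_rps(latency_ms: float) -> float:
    """Requests per second for one client that waits for each request to finish."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    base_ms = 2.0                                   # assumed all-local request latency
    extra_ms = added_latency_ms(40, 100.0)          # 40 dependent I/Os, 100 µs each
    print(extra_ms)                                 # 4.0
    print(serial_throughput_rps(base_ms))           # 500.0
    print(f"{serial_throughput_rps(base_ms + extra_ms):.1f}")  # 166.7
```

Under these assumptions, a penalty that looks negligible per I/O triples request latency and cuts single-client throughput by two thirds.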

Locality is also tied to failure domains. Zonal constraints improve resilience, but they can force cross-node access when the cluster rebalances unevenly. Good designs treat locality and resilience as paired objectives, not competing ones.
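One way to express locality and resilience as paired objectives is a placement rule that keeps the first copy on the pod's own node and puts each additional copy in a different zone. The sketch below is a hypothetical greedy rule for illustration; it is not how simplyblock or any particular CSI driver places replicas.

```python
def place_replicas(pod_node: str, node_zone: dict[str, str], copies: int) -> list[str]:
    """Keep copy 1 on the pod's node; put each extra copy in a zone not used yet."""
    placement = [pod_node]                    # locality: first copy beside the compute
    used_zones = {node_zone[pod_node]}
    for node in sorted(node_zone):            # sorted only to make output deterministic
        if len(placement) == copies:
            break
        if node_zone[node] not in used_zones:  # resilience: one copy per zone
            placement.append(node)
            used_zones.add(node_zone[node])
    if len(placement) < copies:
        raise ValueError("not enough distinct zones for the requested number of copies")
    return placement


if __name__ == "__main__":
    node_zone = {"n1": "zone-a", "n2": "zone-a", "n3": "zone-b", "n4": "zone-c"}
    print(place_replicas("n2", node_zone, copies=3))  # ['n2', 'n3', 'n4']
```

A real placement engine would also weigh capacity and rack spread; the point here is only that the locality rule and the resilience rule can coexist in one policy.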

Data Locality Across NVMe/TCP Fabrics

NVMe/TCP carries NVMe-oF over standard TCP/IP networks, typically Ethernet. It gives teams a practical path to disaggregated storage without requiring RDMA-capable networking everywhere. For many organizations, NVMe/TCP acts as a SAN alternative that still delivers high throughput and stable latency when the platform controls the I/O path.

Locality still matters in NVMe/TCP environments. Local access stays faster than remote access, even with an efficient fabric. A clean user-space data path can reduce the penalty of remote I/O and protect CPU headroom for applications. That CPU efficiency becomes critical when clusters run mixed workloads with different latency goals.
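Because a platform rarely serves every I/O locally, a simple weighted average shows how the local share moves mean latency, and how to solve for the share a latency target requires. The 80 µs and 150 µs figures are assumptions chosen for illustration, and the model ignores queueing, so it understates the effect on p99.

```python
def mean_latency_us(local_fraction: float, local_us: float, remote_us: float) -> float:
    """Average latency when `local_fraction` of I/Os are served locally."""
    return local_fraction * local_us + (1.0 - local_fraction) * remote_us


def required_local_fraction(target_us: float, local_us: float, remote_us: float) -> float:
    """Smallest local fraction that keeps mean latency at or below `target_us`.

    Assumes remote_us > local_us.
    """
    if target_us < local_us:
        raise ValueError("target is below the all-local latency")
    if target_us >= remote_us:
        return 0.0
    return (remote_us - target_us) / (remote_us - local_us)


if __name__ == "__main__":
    local_us, remote_us = 80.0, 150.0   # assumed, not measured
    for fraction in (1.0, 0.9, 0.5):
        print(f"{fraction:.0%} local -> {mean_latency_us(fraction, local_us, remote_us):.0f} µs")
    print(f"{required_local_fraction(100.0, local_us, remote_us):.2f}")  # 0.71
```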

How to Measure Placement Efficiency and Latency Impact

Treat locality as an operational metric, not a theory. Start with application latency percentiles. Then correlate latency spikes with placement events and network indicators.

Useful signals include average latency, p95/p99 latency, queue depth, and CPU consumed per I/O. Add placement context such as pod reschedules, node drains, and volume attach and detach counts. Network telemetry also helps, especially with east-west bandwidth and retransmits.
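A minimal Python sketch of that correlation step: compute nearest-rank percentiles, then flag latency spikes that begin shortly after a placement event such as a node drain or reschedule. The sample data, the 30-second window, and the event format are hypothetical; in practice the inputs would come from your metrics and event pipelines.

```python
import math

Sample = tuple[float, float]   # (timestamp in seconds, latency in ms)
Event = tuple[float, str]      # (timestamp in seconds, description)


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def spikes_after_events(series: list[Sample], events: list[Event],
                        threshold_ms: float, window_s: float) -> list[tuple[float, float, str]]:
    """Samples above threshold that occur within window_s after a placement event."""
    hits = []
    for ts, latency in series:
        if latency <= threshold_ms:
            continue
        for ev_ts, ev_name in events:
            if 0 <= ts - ev_ts <= window_s:
                hits.append((ts, latency, ev_name))
                break  # attribute each spike to the first matching event only
    return hits


if __name__ == "__main__":
    latencies = [1.0] * 98 + [5.0, 9.0]
    print(percentile(latencies, 95), percentile(latencies, 99))  # 1.0 5.0
    series = [(0, 1.0), (60, 1.1), (120, 9.0), (180, 1.0), (300, 8.0)]
    events = [(110, "node-drain node-3")]
    print(spikes_after_events(series, events, threshold_ms=5.0, window_s=30))
    # [(120, 9.0, 'node-drain node-3')]
```

The spike at t=300 is not attributed to the drain, which is the useful signal: it points to a cause other than placement.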

A benchmark should mimic real access patterns. Use small-block random I/O for databases, and use mixed read and write profiles for logs and analytics. Repeat tests during normal cluster activity, not only on an idle lab cluster. That approach shows how locality behaves during churn.
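fio is a common tool for this kind of test. The Python sketch below renders a fio job file with one small-block random profile for databases and one mixed sequential profile for logs; `stonewall` makes the second job wait for the first. The block sizes, read/write mixes, queue depths, runtime, and the `/dev/nvme1n1` device path are illustrative assumptions. Writing to a raw device destroys its data, so point the job only at a scratch volume.

```python
PROFILES = {
    # Small-block random I/O, roughly database-like (assumed mix).
    "db-random": {"rw": "randrw", "rwmixread": "70", "bs": "4k", "iodepth": "32", "numjobs": "4"},
    # Larger mixed sequential I/O, roughly log- or analytics-like (assumed mix).
    "log-mixed": {"rw": "rw", "rwmixread": "30", "bs": "128k", "iodepth": "8", "numjobs": "1"},
}


def fio_job_file(profiles: dict[str, dict[str, str]], device: str, runtime_s: int) -> str:
    """Render a fio job file that runs each profile in turn against `device`."""
    lines = ["[global]", "ioengine=libaio", "direct=1", "time_based",
             f"runtime={runtime_s}", f"filename={device}", "group_reporting", ""]
    for index, (name, options) in enumerate(profiles.items()):
        lines.append(f"[{name}]")
        if index > 0:
            lines.append("stonewall")  # wait for the previous job before starting
        lines.extend(f"{key}={value}" for key, value in options.items())
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    print(fio_job_file(PROFILES, "/dev/nvme1n1", runtime_s=300))
```

Save the output to a file and run it with fio once on a quiet cluster and again during drains or rolling updates to compare the two.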

Practical Tactics to Improve Locality

Most teams improve locality by combining scheduling discipline with storage capabilities that respect topology and protect neighbors.

- Set clear placement intent for each stateful workload, and encode it in affinity rules that match your failure domains.

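- Provision volumes with topology awareness, for example with the WaitForFirstConsumer volume binding mode, so that the scheduler places the pod first and the volume is created in a matching topology.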

- Limit cross-zone attachments unless the workload truly needs them, and document exceptions.

- Apply QoS controls to isolate tenants, especially for shared NVMe/TCP fabrics.

- Keep the I/O path lean to reduce CPU use and jitter during bursts.

- Review reschedule policies and avoid unnecessary pod movement for latency-sensitive tiers.
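The first three tactics map onto standard Kubernetes fields. This Python sketch builds a StorageClass that uses `WaitForFirstConsumer` binding with `allowedTopologies`, plus a pod anti-affinity block that spreads replicas across zones, and prints both as JSON, which kubectl accepts alongside YAML. The provisioner name `csi.example.com`, the class name, the zone names, and the `app` label are hypothetical placeholders.

```python
import json
from typing import Any

ZONE_KEY = "topology.kubernetes.io/zone"  # well-known Kubernetes topology label


def storage_class(name: str, provisioner: str, zones: list[str]) -> dict[str, Any]:
    """StorageClass that delays binding until a pod is scheduled and limits zones."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Provision only after the scheduler has picked a node for the pod.
        "volumeBindingMode": "WaitForFirstConsumer",
        # Keep volumes inside the listed zones to limit cross-zone attachments.
        "allowedTopologies": [
            {"matchLabelExpressions": [{"key": ZONE_KEY, "values": zones}]}
        ],
    }


def spread_affinity(app: str, topology_key: str = ZONE_KEY) -> dict[str, Any]:
    """Value for a pod spec's `affinity` field that separates replicas of `app`."""
    return {
        "podAntiAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {"labelSelector": {"matchLabels": {"app": app}}, "topologyKey": topology_key}
            ]
        }
    }


if __name__ == "__main__":
    # "csi.example.com" is a placeholder; use your CSI driver's provisioner name.
    print(json.dumps(storage_class("local-first", "csi.example.com", ["zone-a", "zone-b"]), indent=2))
    print(json.dumps(spread_affinity("orders-db"), indent=2))
```

Required anti-affinity on the zone key allows at most one replica per zone; switch the key to `kubernetes.io/hostname`, or use a preferred rule, if you run more replicas than zones.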

Data Locality Options Compared

The table below compares common Kubernetes Storage patterns and how they affect locality, resilience, and operations. Use it to choose a default pattern for each workload tier, then standardize the policy.

| Storage pattern | Typical locality | Behavior during node loss | Operational fit | Common use case |
|---|---|---|---|---|
| Node-local media with strict pinning | Very high on one node | Forces recovery or rebuild elsewhere | Requires strong discipline | Edge, caching, fixed-placement apps |
| Topology-aware shared storage | High within a zone or rack | Keeps service running with predictable placement | Balanced | General stateful platforms |
| Disaggregated NVMe/TCP with policy routing | High when policies stay consistent | Preserves access while keeping performance stable | Scales well | Databases, analytics, multi-tenant clusters |

Consistent Performance with Simplyblock™ for Stateful Kubernetes

Simplyblock™ supports consistent locality outcomes by focusing on two levers: placement control and a low-overhead I/O path. Its SPDK-based user-space design reduces context switching and avoids extra data copies, which helps keep latency stable under load. That efficiency matters when the cluster serves many tenants, each with different SLOs.

In Kubernetes Storage, simplyblock can run hyper-converged, disaggregated, or hybrid layouts. Teams can keep data close to compute for latency tiers, while still allowing remote access for mobility and recovery. Multi-tenancy and QoS help protect locality gains when noisy neighbors compete for bandwidth and IOPS.
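QoS controls of this kind are often built on rate limiters. The Python sketch below shows a generic per-tenant token bucket for IOPS; it illustrates the technique only and is not simplyblock's implementation. The tenant names, rates, and burst sizes are hypothetical, and the caller passes timestamps explicitly (for example from `time.monotonic()`) so the behavior stays deterministic.

```python
class TokenBucket:
    """Admit up to `rate` operations per second, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate        # tokens added per second
        self.burst = burst      # bucket capacity
        self.tokens = burst     # start full
        self.last = 0.0         # timestamp of the previous refill

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for elapsed time, then admit the I/O if enough tokens remain."""
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    tenants = {"tenant-a": TokenBucket(rate=1000, burst=100),
               "noisy-tenant": TokenBucket(rate=200, burst=20)}
    # 50 I/Os from each tenant at the same instant: the noisy tenant is capped.
    admitted = {}
    for name, bucket in tenants.items():
        admitted[name] = sum(bucket.allow(now=0.0) for _ in range(50))
    print(admitted)  # {'tenant-a': 50, 'noisy-tenant': 20}
```

Each tenant gets its own bucket, so a burst from one tenant drains only its own tokens and cannot consume another tenant's share.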

NVMe/TCP plays a central role in this model. It lets teams scale out without redesigning the entire network stack, and it supports a clear path to higher-performance fabrics when specific tiers demand it.

Next-Stage Platform Capabilities for Placement-Aware Storage

Placement-aware storage will move from manual tuning to policy automation. Schedulers will consume richer topology hints, and storage systems will publish clearer placement signals. More platforms will also route I/O with intent, not only with availability in mind.

DPUs and IPUs will shape the next phase. Offload can preserve CPU for applications while keeping storage services responsive, even during bursts. As teams adopt disaggregated designs, they will rely more on deterministic QoS to protect latency goals across shared fabrics.

Related Terms

Teams often review these glossary pages alongside Data Locality when they set measurable targets for Kubernetes Storage and Software-defined Block Storage.

- Storage Affinity in Kubernetes

- IO Path Optimization

- Network Storage Performance

- NVMe-oF Target on DPU

Questions and Answers

Data locality reduces latency by ensuring compute resources are close to the data they process. This is especially critical in high-performance environments where minimizing cross-node or cross-zone communication can drastically improve application speed and reduce infrastructure overhead.

Kubernetes supports data locality via node affinity, topology-aware provisioning, and CSI drivers. These mechanisms ensure workloads are scheduled on nodes close to their associated storage, improving performance and reliability for stateful apps.

Yes. When data is accessed across zones or networks, latency increases and throughput drops. To avoid this, modern platforms like simplyblock implement intelligent data placement strategies that co-locate workloads and storage.

Poor data locality can increase cross-zone or inter-region traffic, which cloud providers typically bill as data transfer. By keeping data near compute, organizations can reduce these transfer charges and optimize infrastructure spend. Simplyblock’s topology-aware provisioning supports cloud storage optimization by default.

Use volume affinity rules, storage class zoning, and caching to keep data near compute nodes. In hybrid scenarios, CSI drivers and software-defined volumes let storage backends serve data close to where it’s needed, which maximizes performance across environments.