Rancher Kubernetes


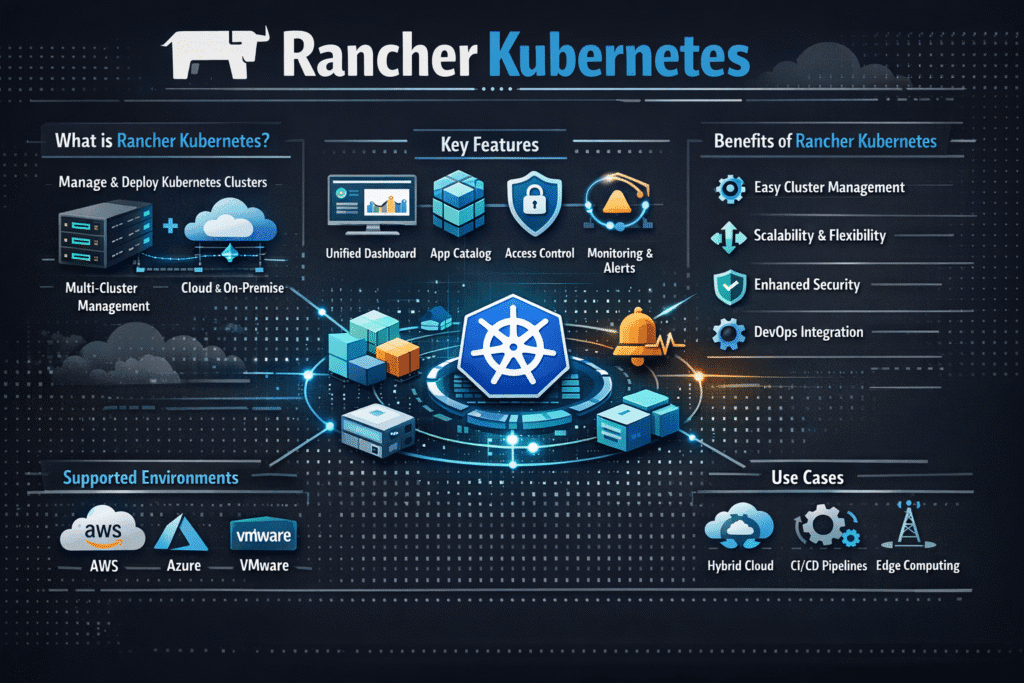

Rancher Kubernetes gives platform teams one place to manage many clusters, across on-prem, edge, and cloud. That control plane helps with access, policy, and app rollout, but it also raises the bar for storage. A single weak storage choice repeats across every cluster, and it shows up as slow deploys, noisy-neighbor events, and long recovery windows.

Stateful apps stress the system in a different way than stateless services. They need clean volume attach, fast rescheduling, and steady latency under load. Kubernetes Storage works best when you standardize StorageClasses, labels, and quota rules across clusters, then back them with a storage layer that stays predictable during upgrades and node drains.

Why teams use Rancher for fleet operations

Rancher fits organizations that run many clusters and want shared guardrails. It shines when you centralize identity, enforce policy, and push app updates through one workflow. It also makes drift visible, which helps when different teams manage different sites.

Storage adds a twist. Cluster fleets tend to mix hardware, networks, and failure domains. A policy that works on one site can fall apart on another site if the storage design depends on local assumptions. This is why storage standards matter as much as cluster standards.
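One way to make storage drift visible is to compare each cluster's StorageClass names against a fleet standard. The sketch below is a minimal illustration under stated assumptions: the class names, cluster names, and the `find_drift` helper are hypothetical, and the inventory is hard-coded here, whereas in practice it would come from each cluster's API.

```python
from typing import Dict, Set

# Hypothetical fleet standard: the StorageClass names every cluster should expose.
STANDARD_CLASSES: Set[str] = {"db-low-latency", "general-balanced", "dev-test"}


def find_drift(
    fleet: Dict[str, Set[str]], standard: Set[str]
) -> Dict[str, Dict[str, Set[str]]]:
    """Return, for each drifting cluster, the missing and the extra StorageClasses."""
    report: Dict[str, Dict[str, Set[str]]] = {}
    for cluster, classes in fleet.items():
        missing = standard - classes  # standard classes the cluster lacks
        extra = classes - standard    # classes outside the fleet standard
        if missing or extra:
            report[cluster] = {"missing": missing, "extra": extra}
    return report


# Illustrative inventory (assumed data, not taken from a real fleet).
fleet = {
    "edge-site-a": {"db-low-latency", "general-balanced", "dev-test"},
    "cloud-east": {"general-balanced", "dev-test", "local-path"},
}

drift = find_drift(fleet, STANDARD_CLASSES)
for cluster, diff in sorted(drift.items()):
    print(cluster, "missing:", sorted(diff["missing"]), "extra:", sorted(diff["extra"]))
```

Clusters that match the standard do not appear in the report, so an empty result means the fleet is aligned on class names. It says nothing about whether the classes behave the same way behind those names.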


Rancher Kubernetes in Kubernetes Storage policy design

Rancher Kubernetes does not replace core Kubernetes storage mechanics. Your workloads still rely on CSI drivers, StorageClasses, PVCs, and access modes. The difference is scale: you apply the same patterns across many clusters, so small design mistakes multiply fast.

A clean policy model starts with tiering. Give databases a low-latency tier, give general services a balanced tier, and keep dev and test in their own pools. Then add limits so one namespace cannot eat the entire queue. Software-defined Block Storage helps here because it lets you express tiers and guardrails in a repeatable way, without tying every site to a single SAN footprint.
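The tiering idea can be sketched as a small generator that emits one StorageClass manifest per tier. The top-level fields are standard Kubernetes StorageClass fields. The provisioner name, the tier names, and the QoS parameter keys are placeholders, because parameter names are driver-specific and should be taken from your CSI driver's documentation.

```python
import json
from typing import Dict, List

# Placeholder provisioner; replace with your CSI driver's registered name.
PROVISIONER = "csi.example.com"

# Hypothetical tiers and QoS parameter keys. StorageClass parameters are
# string-to-string maps, so the limits are written as strings.
TIERS: Dict[str, Dict[str, str]] = {
    "db-low-latency": {"max_iops": "50000", "max_mbps": "1000"},
    "general-balanced": {"max_iops": "10000", "max_mbps": "300"},
    "dev-test": {"max_iops": "2000", "max_mbps": "100"},
}


def storage_class(name: str, qos: Dict[str, str]) -> dict:
    """Build a StorageClass manifest for one tier."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name, "labels": {"tier": name}},
        "provisioner": PROVISIONER,
        "parameters": dict(qos),
        "reclaimPolicy": "Delete",
        # Delay binding until a pod is scheduled, so placement can follow topology.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


manifests: List[dict] = [storage_class(name, qos) for name, qos in TIERS.items()]
print(json.dumps(manifests, indent=2))
```

Generating the manifests from one table keeps tier definitions identical across clusters, which is the point of a fleet standard. The specific limits shown are illustrative numbers, not recommendations.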

Rancher Kubernetes with NVMe/TCP for disaggregated storage

Rancher Kubernetes fleets often move toward disaggregated designs. You keep compute nodes focused on apps, and you scale storage nodes on their own schedule. NVMe/TCP fits that model because it delivers NVMe semantics over standard Ethernet, which most sites already run.

This transport also supports clear ops boundaries. The platform team can manage cluster lifecycle while the storage team manages the storage plane, and both teams can still hit latency targets. When you pair NVMe/TCP with an SPDK-based data path, you reduce CPU waste in the I/O path and protect tail latency during busy periods.

Rancher Kubernetes performance checks that show the real bottlenecks

Start with behavior, not raw speed. Watch how fast pods start when they need storage. Track volume bind time, attach time, and mount time during normal deploys and during node drains. Then look at app latency, especially p95 and p99, because fleet issues often show up as tail spikes first.
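To see why tail percentiles matter more than averages here, the following sketch computes p50, p95, and p99 over a set of attach times using the nearest-rank method. The sample values are made up for illustration, and `percentile` is a small helper written for this example rather than part of any monitoring tool.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Illustrative attach times in seconds; one slow attach hides among fast ones.
attach_seconds = [1.2, 1.1, 1.3, 1.2, 1.4, 1.1, 1.2, 9.8, 1.3, 1.2]

summary = {f"p{p}": percentile(attach_seconds, p) for p in (50, 95, 99)}
print(summary)  # {'p50': 1.2, 'p95': 9.8, 'p99': 9.8}
```

The median looks healthy while the tail exposes the single slow attach. The same helper can be applied to bind and mount times, and comparing results from normal deploys against results from node drains shows whether the tail grows under change.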

Next, test the “stress moments.” Run a rolling upgrade, trigger a node drain, and restore a stateful workload to a new node. These events tell you whether your storage design can keep up with the platform’s change rate. When failures look random, the cause often sits in topology rules, weak QoS, or an overloaded storage network.

One set of tuning moves that improves consistency across clusters

- Split StorageClasses by tier, and keep critical stateful apps off shared dev pools.

- Put clear IOPS and throughput limits in place so one tenant cannot starve others.

- Align topology rules so pods and volumes stay in the same zone or failure domain.

- Validate NVMe/TCP network headroom during peak hours, then retest after growth.

- Drill node drains and upgrades on purpose, and measure attach and mount time each run.
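Two of the checks above reduce to simple arithmetic: whether the per-tenant IOPS limits oversubscribe the pool, and how much network headroom remains at peak. The sketch below shows both, assuming hypothetical tenant names, limits, pool capacity, and link speeds. The figures are placeholders, not measured values.

```python
from typing import Dict


def oversubscription_ratio(limits_iops: Dict[str, int], pool_capacity_iops: int) -> float:
    """Sum of per-tenant IOPS limits divided by what the pool can deliver.

    A ratio above 1.0 means the limits alone cannot stop tenants from
    starving each other when they all burst at once.
    """
    if pool_capacity_iops <= 0:
        raise ValueError("pool capacity must be positive")
    return sum(limits_iops.values()) / pool_capacity_iops


def network_headroom(peak_gbps: float, link_gbps: float) -> float:
    """Fraction of link capacity still free at the observed peak."""
    if link_gbps <= 0:
        raise ValueError("link capacity must be positive")
    return (link_gbps - peak_gbps) / link_gbps


# Hypothetical limits and capacities for illustration.
limits = {"payments-db": 40_000, "analytics": 30_000, "dev": 10_000}
ratio = oversubscription_ratio(limits, pool_capacity_iops=100_000)
headroom = network_headroom(peak_gbps=18.0, link_gbps=25.0)

print(f"oversubscription ratio: {ratio:.2f}")
print(f"network headroom at peak: {headroom:.0%}")
```

Rerunning the same calculation after each capacity change or tenant onboarding gives a cheap regression check between full drain and upgrade drills.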

Storage approaches used with Rancher fleets

The table below compares common ways teams deliver persistent storage in Rancher-managed environments, with a focus on control, performance, and scale.

| Approach | What it does well | Where it breaks | Best fit |
|---|---|---|---|
| Local PVs per cluster | Simple and fast on one node | Weak mobility and slow recovery | Small edge clusters with fixed nodes |
| Cloud block via CSI | Quick start, easy procurement | Cost, caps, and noisy neighbors | Smaller clusters, mixed workloads |
| Legacy SAN via CSI | Familiar ops for some orgs | Rigid scaling and uneven latency | Sites with existing SAN contracts |
| Software-defined Block Storage over NVMe/TCP | Strong latency control and clean scale | Needs tier design and QoS rules | Multi-tenant fleets, databases, analytics |

simplyblock™ fit for Rancher-managed multi-cluster storage

Simplyblock™ supports Kubernetes Storage with NVMe/TCP and Software-defined Block Storage, which matches how Rancher fleets evolve. You can run hyper-converged clusters for smaller sites, disaggregated clusters for larger sites, and mixed layouts in between, without changing the core storage model.

The SPDK-based design helps keep the I/O path efficient, which matters when several clusters share similar workload peaks. Multi-tenancy and QoS controls help keep one team’s burst from turning into another team’s outage. That combination supports fleet standards that hold up during upgrades, reschedules, and growth.

Roadmap signals for Rancher fleet storage standards

Fleet operators keep pushing for fewer moving parts and clearer policy. Expect more focus on topology-aware placement, stronger storage observability, and tighter QoS controls at the volume level.

NVMe/TCP adoption should keep rising as disaggregated designs become the default for larger clusters that want SAN-like outcomes without SAN complexity.

Related Terms

Teams often review these glossary pages alongside Rancher Kubernetes when they standardize CSI behavior, tiering, and performance isolation across many clusters.

- Container Storage Interface (CSI)

- Dynamic Provisioning in Kubernetes

- Storage Affinity in Kubernetes

- OpenShift Persistent Storage

- Rancher vs OpenShift

Questions and Answers

Rancher is an enterprise Kubernetes management platform that simplifies cluster deployment, multi-cluster governance, and user access control. It integrates seamlessly with Kubernetes-native storage providers like Simplyblock for persistent volume provisioning.

Simplyblock’s CSI-based storage architecture works natively with Rancher-provisioned Kubernetes clusters. It supports stateful workload deployments, including databases and analytics apps, with fast, persistent block storage.

Rancher offers centralized visibility and control over multiple Kubernetes clusters. When used with Simplyblock, it simplifies multi-zone and multi-cloud storage orchestration, allowing consistent CSI driver deployment and StorageClass management.

Rancher-managed clusters use upstream Kubernetes, so they support CSI features such as volume snapshots and expansion. Simplyblock provides snapshot-compatible storage to streamline backup, cloning, and scaling workflows under Rancher.

With built-in RBAC, policy enforcement, and access auditing, Rancher enhances operational security. Combined with Simplyblock’s data-at-rest encryption (DARE) for volumes, organizations can keep storage secure and compliant across all managed clusters.