Kubernetes Volume Plugin (in-tree vs CSI)

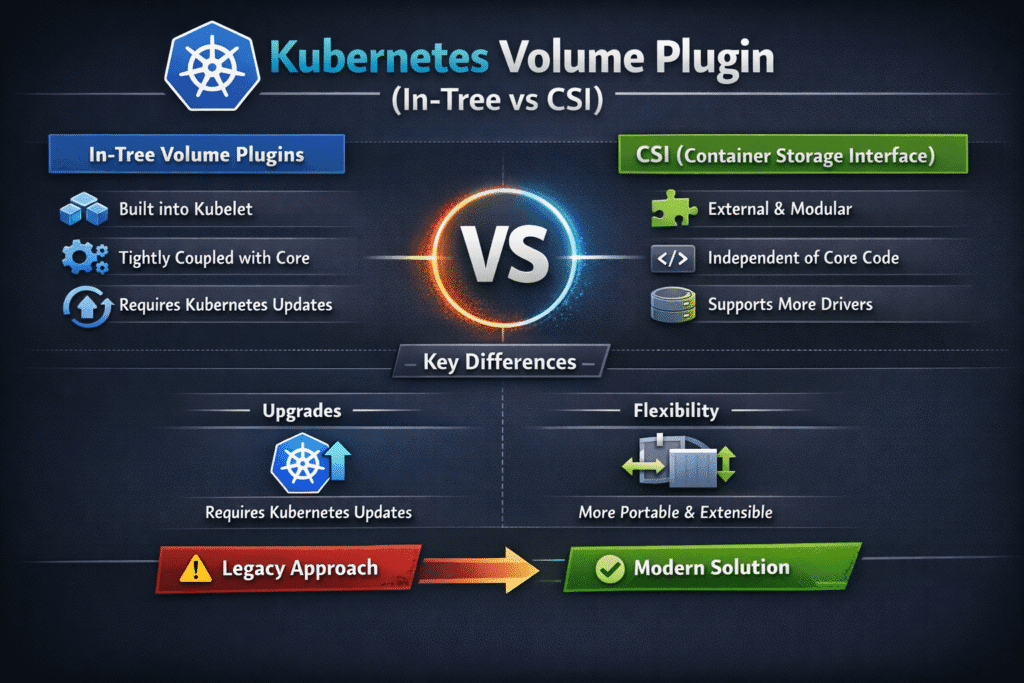

A Kubernetes volume plugin connects Kubernetes to a storage backend so pods can use persistent data. The older “in-tree” model put plugin code inside Kubernetes itself. The CSI (Container Storage Interface) model keeps the driver outside Kubernetes and relies on a standard interface. That shift matters most when you run stateful workloads, because storage upgrades, bug fixes, and rollouts affect uptime, not just feature availability.

Executive teams usually care about two outcomes. They want fewer platform-wide upgrades just to patch storage behavior, and they want cleaner failure domains when a driver misbehaves. Platform teams care about repeatable provisioning, fast attach and mount, and clear logs during incidents. When you standardize on CSI, you make those workflows easier to version, test, and automate across Kubernetes Storage.

Kubernetes Volume Plugin (in-tree vs CSI) – What Actually Changes for Operators

In-tree plugins ship with Kubernetes releases. That tie forces a hard coupling: if you need a storage fix, you often need a cluster upgrade or a vendor patch that follows the Kubernetes cadence. CSI drivers move faster because vendors can release driver updates on their own schedule. Teams then upgrade the driver like any other app in the cluster.

CSI also standardizes the call flow for create, attach, mount, resize, and snapshot actions. That standard helps you set clear runbooks. It also helps you compare vendors, because the driver must follow the CSI spec instead of inventing its own rules.
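As a rough illustration of that call flow, the sketch below maps each lifecycle action to the RPC names defined in the CSI spec. The dictionary and the `rpcs_for` helper are illustrative names, not part of any library, and the mapping is simplified: some RPCs (for example `ControllerPublishVolume` and `NodeStageVolume`) are optional and only called when a driver advertises the matching capability.

```python
# Simplified mapping from lifecycle actions to CSI RPC names.
# Assumption: the driver advertises every optional capability; real drivers
# may skip ControllerPublishVolume or NodeStageVolume.
CSI_CALL_FLOW = {
    "create": ["CreateVolume"],
    "attach": ["ControllerPublishVolume"],
    "mount": ["NodeStageVolume", "NodePublishVolume"],
    "resize": ["ControllerExpandVolume", "NodeExpandVolume"],
    "snapshot": ["CreateSnapshot"],
}


def rpcs_for(actions):
    """Return the RPC names, in order, for a sequence of lifecycle actions."""
    calls = []
    for action in actions:
        calls.extend(CSI_CALL_FLOW[action])
    return calls


if __name__ == "__main__":
    # A pod starting with a freshly provisioned volume.
    for rpc in rpcs_for(["create", "attach", "mount"]):
        print(rpc)
```

A runbook can use a table like this to tie a stuck step to the component that owns it: controller-side RPCs point at the controller plugin and its sidecars, node-side RPCs point at the node plugin and the kubelet.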

The main tradeoff shows up in operations. CSI adds more moving parts: a controller component, a node component on each worker node, and the sidecar containers that run alongside them. Good teams treat those parts as first-class services with version pinning, monitoring, and rollback plans.

Kubernetes Volume Plugin (in-tree vs CSI) in Kubernetes Storage – Reliability, Security, and Change Windows

Storage failures rarely look like “the plugin crashed.” They show up as stuck pods, slow failover, or long maintenance windows. CSI improves your odds because you can isolate problems to the driver release, not the whole cluster. You also gain more room for staged rollouts, like canary driver upgrades on a subset of nodes.

Security teams often like CSI because you can manage the driver as a normal workload, including image scanning, signing, and tighter change control. In-tree code rides along with the Kubernetes binary set, so it inherits that upgrade path, too.

Kubernetes Storage also benefits from clearer boundaries. The kubelet still drives node-side work, but CSI keeps the storage backend logic inside the driver. That separation makes audits and root-cause reviews less messy when incidents hit production.

Kubernetes Volume Plugin (in-tree vs CSI) and NVMe/TCP – Keeping Fast Storage Practical on Ethernet

High-performance storage pushes the plugin model hard. NVMe/TCP offers NVMe semantics over standard Ethernet, which helps teams scale fast block storage without betting on specialized fabrics from day one. CSI fits well here because drivers can evolve with kernel, NIC, and backend changes while the cluster stays stable.

NVMe/TCP also raises a common platform question: where does the CPU go? A driver with a lean data path leaves more CPU for kubelet work, sidecars, and application threads. A heavy path can increase tail latency and reduce pod density on worker nodes. Software-defined Block Storage that focuses on efficiency can help keep the node steady under mixed load.

When you combine NVMe/TCP, Kubernetes Storage, and a CSI-first plan, you can run disaggregated, hyper-converged, or mixed designs without changing the app interface.

How to Measure Plugin-Driven Storage Overhead

Benchmarking storage needs more than peak IOPS. Teams should measure the full lifecycle: time to provision, time to attach, time to mount, and time to recover during node drains. Those timings often decide whether a rollout finishes in minutes or drags into the next change window.
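One way to capture those lifecycle timings is to record a timestamp at each stage and subtract. The sketch below assumes you already collect ISO 8601 timestamps for a handful of events; the event names (`pvc_created`, `pv_bound`, and so on) are hypothetical labels chosen for this example, not Kubernetes field names.

```python
from datetime import datetime

# (phase name, start event, end event). Event names are hypothetical labels.
PHASES = [
    ("provision", "pvc_created", "pv_bound"),
    ("attach", "pod_scheduled", "volume_attached"),
    ("mount", "volume_attached", "volume_mounted"),
]


def phase_durations(events):
    """Return seconds spent in each phase, given ISO 8601 timestamps per event."""
    durations = {}
    for name, start, end in PHASES:
        started = datetime.fromisoformat(events[start])
        finished = datetime.fromisoformat(events[end])
        durations[name] = (finished - started).total_seconds()
    return durations


if __name__ == "__main__":
    sample = {
        "pvc_created": "2024-05-01T10:00:00+00:00",
        "pv_bound": "2024-05-01T10:00:04+00:00",
        "pod_scheduled": "2024-05-01T10:00:05+00:00",
        "volume_attached": "2024-05-01T10:00:12+00:00",
        "volume_mounted": "2024-05-01T10:00:14+00:00",
    }
    print(phase_durations(sample))
```

Collecting the same dictionary during a node drain gives a recovery timing that is directly comparable with the steady-state run.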

A practical test plan mixes steady I/O with real cluster events. Run load that matches your apps, then trigger node drains, reschedules, and rolling upgrades. Track p95 and p99 latency, plus “pod scheduled to ready” time for stateful pods. If those numbers swing during a driver upgrade, the platform needs tighter controls.
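A minimal sketch of the latency comparison, assuming you have raw latency samples from a baseline run and from the driver-upgrade window. It uses the nearest-rank percentile definition, which is one of several common conventions; the function names are illustrative.

```python
def percentile(samples, pct):
    """Nearest-rank percentile. pct must be an integer from 1 to 100."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Integer form of ceil(pct * n / 100), which avoids float rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def latency_swing(baseline_ms, upgrade_ms, pct=99):
    """Ratio of the upgrade-window percentile to the baseline percentile."""
    return percentile(upgrade_ms, pct) / percentile(baseline_ms, pct)


if __name__ == "__main__":
    baseline = list(range(1, 101))        # synthetic samples, 1..100 ms
    upgrade = [2 * x for x in baseline]   # synthetic: every sample doubled
    print(percentile(baseline, 95), percentile(baseline, 99))
    print(latency_swing(baseline, upgrade))
```

A swing well above 1.0 during a driver upgrade is the signal that the rollout needs tighter controls. The threshold that counts as "too much" is a team decision and is not defined here.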

One Migration Checklist That Reduces Risk

- Start with a single StorageClass mapping, then expand tiers after you confirm behavior under load.

- Pin driver and sidecar versions together, and document a rollback path before the first upgrade.

- Run canary driver upgrades on a small node pool, then promote after you validate attach and mount timing.

- Add alerts for mount failures, attach retries, and unusual latency spikes during reschedules.

- Validate performance limits early if you rely on multi-tenancy and QoS.
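The pinning and canary items in the checklist can be combined into a simple promotion gate. The sketch below is one possible shape, not a prescribed process: the component names follow common CSI sidecar image names, the version numbers are placeholders, and the 1.2x tolerance is an assumed threshold you would tune for your own platform.

```python
from statistics import median

# Pinned versions for the driver and its sidecars.
# Version numbers are placeholders for illustration only.
PINNED = {
    "csi-driver": "1.4.2",
    "csi-provisioner": "4.0.0",
    "csi-attacher": "4.5.0",
    "csi-node-driver-registrar": "2.10.0",
}


def version_drift(pinned, running):
    """Return {component: (pinned, running)} for every mismatch."""
    return {
        name: (want, running.get(name))
        for name, want in pinned.items()
        if running.get(name) != want
    }


def can_promote(pinned, running, baseline_attach_s, canary_attach_s, tolerance=1.2):
    """Promote only if versions match the pin set and the canary's median
    attach time stays within `tolerance` times the baseline median."""
    if version_drift(pinned, running):
        return False
    return median(canary_attach_s) <= tolerance * median(baseline_attach_s)


if __name__ == "__main__":
    running = dict(PINNED)
    print(can_promote(PINNED, running, [4, 5, 6], [5, 5.5, 6]))
```

The same gate can be applied to mount timing by passing mount samples instead of attach samples.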

In-Tree vs CSI – Operational Differences That Matter in Production

Before you compare options, align on what “better” means for your org. Most teams weigh upgrade control, feature velocity, and incident blast radius more than they weigh raw throughput.

| Decision Factor | In-tree Plugins | CSI Drivers |
|---|---|---|
| Upgrade cadence | Tied to Kubernetes releases | Independent driver releases |
| Feature delivery | Slower, depends on cluster upgrades | Faster, ships with driver releases |
| Failure isolation | Often affects cluster-wide behavior | Easier to isolate to the driver version or node pool |
| Day-2 operations | Fewer components, less flexible | More components, more control and visibility |
| Fit for NVMe/TCP + SDS | Limited evolution path | Better match for fast-changing stacks |

Simplyblock™ – A CSI-First Path for Predictable Kubernetes Storage

Simplyblock™ targets operators who want predictable storage behavior across change windows. Simplyblock provides Software-defined Block Storage for Kubernetes Storage with NVMe/TCP support, plus controls for multi-tenancy and QoS. That focus helps platform teams keep stateful services stable when they scale clusters, roll nodes, and push frequent app releases.

On the data path, simplyblock leverages SPDK-style user-space principles to reduce overhead and cut extra copies where it matters. Lower overhead often translates into more usable CPU for applications and steadier tail latency under pressure. That mix supports both hyper-converged and disaggregated designs without forcing a new app contract.

What to Expect Next in Plugin and Driver Direction

Kubernetes continues to favor CSI for storage integrations, and vendors keep investing in CSI-first capabilities. Teams should expect more emphasis on safer migrations, clearer driver health signals, and stronger policy controls for performance and placement.

The best outcomes will come from treating the storage stack like a product: version it, test it under disruption, and measure it with SLO-grade metrics.

Related Technologies

Teams often review these glossary pages alongside Kubernetes Volume Plugin (in-tree vs CSI) when they standardize Kubernetes Storage and Software-defined Block Storage.

- CSI Driver vs Sidecar

- CSI Node Plugin

- QoS Policy in CSI

- IO Path Optimization

- Kubernetes Volume Mount Options

Questions and Answers

In-tree plugins are built into the Kubernetes core, while CSI plugins are external and follow the standardized Kubernetes CSI spec. CSI allows storage vendors to develop independent drivers, enabling faster updates and broader feature support.

Kubernetes is phasing out in-tree plugins to improve maintainability, security, and scalability. CSI offers a modular approach and is where newer storage features land: volume snapshots are available only through CSI drivers, and capabilities such as topology awareness and volume expansion continue to be developed there, which matters for stateful Kubernetes workloads.

Yes, Kubernetes provides migration paths for many in-tree plugins. Storage solutions like Simplyblock are designed as block storage replacements for legacy systems, delivering modern CSI-compatible features for production workloads.

CSI separates volume provisioning, attachment, mounting, and expansion into clear lifecycle stages. This modularity enables observability and custom automation—core benefits of the Kubernetes CSI approach over monolithic in-tree plugins.

Where in-tree plugins are still present in a release, they are deprecated, and several have already been removed or redirected to CSI drivers through the CSI migration feature. Migrating to CSI ensures compatibility with the latest Kubernetes features and storage innovations, such as encryption at rest and snapshots.