Storage Resource Quotas in Kubernetes

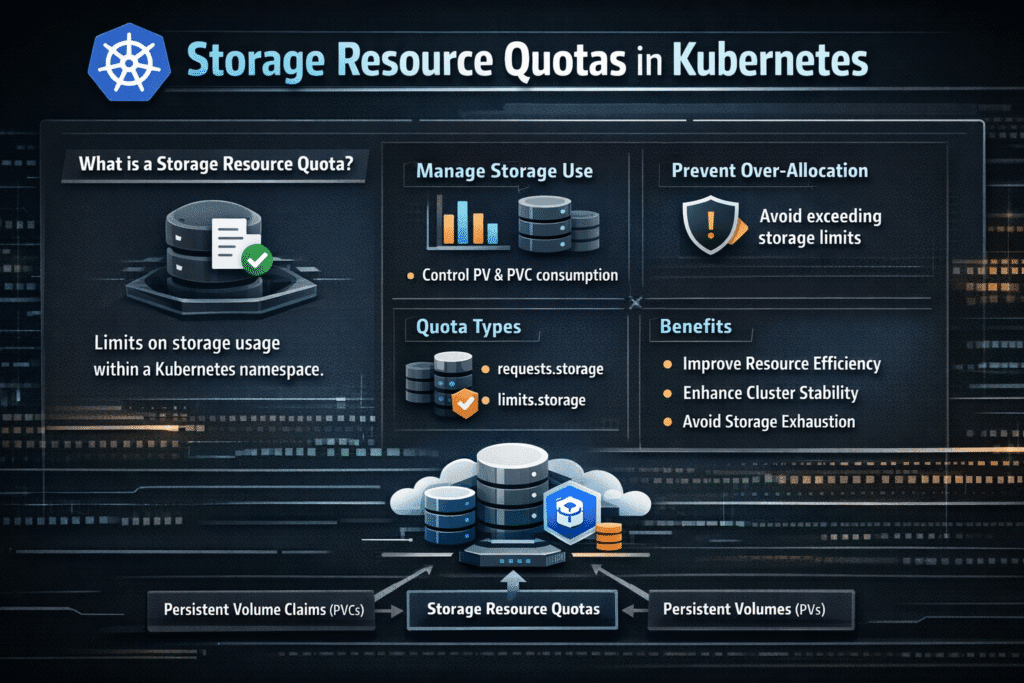

Storage Resource Quotas in Kubernetes limit how much storage a namespace can request and how many storage objects it can create. This prevents one team from draining shared capacity or overwhelming the API with excessive claims. Kubernetes enforces these limits through the ResourceQuota object: when creating or updating a resource would exceed the quota, the API server rejects the request with a 403 Forbidden response.
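
The following minimal Python sketch makes the admission behavior concrete by modeling how a quota check decides whether a new PVC fits. It is a simplified, hypothetical model. The names `NamespaceQuota`, `admit_pvc`, and `QuotaExceeded` are illustrative, not Kubernetes APIs. The model tracks only `requests.storage` and `persistentvolumeclaims`, while the real check runs inside the API server.

```python
from dataclasses import dataclass, field

GI = 1024 ** 3


class QuotaExceeded(Exception):
    """Stands in for the API server's 403 Forbidden quota rejection."""

    status = 403


@dataclass
class NamespaceQuota:
    """Hypothetical mirror of a ResourceQuota's hard limits and current usage."""

    hard: dict
    used: dict = field(default_factory=dict)


def admit_pvc(quota: NamespaceQuota, requested_bytes: int) -> None:
    """Admit one new PVC only if every tracked resource stays within its limit."""
    delta = {"requests.storage": requested_bytes, "persistentvolumeclaims": 1}
    for resource, amount in delta.items():
        if resource not in quota.hard:
            continue  # resources the quota does not track are unconstrained
        projected = quota.used.get(resource, 0) + amount
        if projected > quota.hard[resource]:
            # Message is loosely modeled on, not identical to, the API server's.
            raise QuotaExceeded(
                f"exceeded quota: {resource}, requested {amount}, "
                f"used {quota.used.get(resource, 0)}, limited {quota.hard[resource]}"
            )
    # Every check passed, so charge the usage in one step.
    for resource, amount in delta.items():
        quota.used[resource] = quota.used.get(resource, 0) + amount
```

Take a 100 GiB cap as an example. A 60 GiB claim is admitted. A following 50 GiB claim raises `QuotaExceeded`, because the projected 110 GiB exceeds the hard limit. Usage is charged only after every resource passes, so a rejected request leaves the recorded usage unchanged.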

For executive teams, quotas turn storage from an open-ended bill into a budgeted service. For platform teams, quotas reduce “runaway PVC” incidents, keep Kubernetes Storage predictable, and create clear guardrails for multi-tenant clusters.

Optimizing Storage Resource Quotas in Kubernetes with Platform Controls

Quotas work best when you pair them with defaults and policy. If you set a storage quota but allow pods and PVCs without clear requests, teams hit noisy failures and slow triage. Kubernetes recommends combining quotas with LimitRanges. A LimitRange sets per-object defaults and min/max bounds, while a ResourceQuota caps the namespace total.
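
The split between per-object defaults and namespace totals can be sketched in Python. This minimal model assumes a hypothetical LimitRange of type `Container` with ephemeral-storage defaults of a 1 GiB request and a 2 GiB limit. It also assumes a hypothetical 10 GiB `requests.ephemeral-storage` quota. The model follows Kubernetes defaulting in one respect: a container that sets only a limit gets a request equal to that limit, and the LimitRange `defaultRequest` applies only when neither is set. It ignores init containers, pod overhead, and validation errors.

```python
GI = 1024 ** 3

# Hypothetical LimitRange (type: Container) values; the names mirror
# spec.limits[].defaultRequest and spec.limits[].default.
DEFAULT_REQUEST = {"ephemeral-storage": 1 * GI}
DEFAULT_LIMIT = {"ephemeral-storage": 2 * GI}

# Hypothetical namespace cap, i.e. ResourceQuota hard["requests.ephemeral-storage"].
QUOTA_REQUESTS_EPHEMERAL = 10 * GI


def apply_limit_range_defaults(container: dict) -> dict:
    """Fill a missing ephemeral-storage request/limit on one container spec (in place)."""
    resources = container.setdefault("resources", {})
    requests = resources.setdefault("requests", {})
    limits = resources.setdefault("limits", {})
    for key, default_request in DEFAULT_REQUEST.items():
        if key not in requests:
            # An explicit limit becomes the request; otherwise use defaultRequest.
            requests[key] = limits.get(key, default_request)
    for key, default_limit in DEFAULT_LIMIT.items():
        limits.setdefault(key, default_limit)
    return container


def namespace_requests(pods: list) -> int:
    """Sum ephemeral-storage requests over every container of every pod."""
    return sum(
        c["resources"]["requests"]["ephemeral-storage"] for pod in pods for c in pod
    )


def fits_quota(pods: list) -> bool:
    """True if the defaulted requests stay within the namespace cap."""
    defaulted = [[apply_limit_range_defaults(c) for c in pod] for pod in pods]
    return namespace_requests(defaulted) <= QUOTA_REQUESTS_EPHEMERAL
```

The LimitRange decides what each container asks for. The quota only sums those answers and compares the total against the cap.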

Treat quota design like a tiering plan. Set separate budgets for dev, staging, and production namespaces. Align those budgets with StorageClass tiers and retention rules, so teams do not store long-lived data in a “scratch” tier and later blame the platform.

🚀 Enforce Namespace Storage Budgets Without Breaking Performance SLOs

Use simplyblock to pair NVMe/TCP volumes with QoS caps, so Kubernetes Storage stays predictable under growth.

Storage Resource Quotas in Kubernetes Storage

Quotas shape more than raw GiB. In real Kubernetes Storage operations, teams also cap PVC count, total requested storage, and, in some cases, ephemeral storage consumption. Kubernetes documents that a quota can cap aggregate usage per namespace, for example with requests.storage and requests.ephemeral-storage. A quota can also limit object counts by kind, for example with persistentvolumeclaims.
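
A ResourceQuota that combines these caps can be generated programmatically. The sketch below builds the manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The hard-limit keys are standard ResourceQuota resource names. The namespace `team-a`, the StorageClass `fast-nvme`, the object name `storage-quota`, and all sizes are hypothetical.

```python
import json
from typing import Optional


def storage_quota(namespace: str, total_storage: str, max_pvcs: int,
                  per_class: Optional[dict] = None,
                  ephemeral_requests: Optional[str] = None) -> dict:
    """Build a ResourceQuota manifest (as a dict) capping storage for one namespace."""
    hard = {
        "requests.storage": total_storage,        # sum of all PVC storage requests
        "persistentvolumeclaims": str(max_pvcs),  # number of PVC objects
    }
    # Per-StorageClass caps use the <class>.storageclass.storage.k8s.io/ prefix.
    for class_name, size in (per_class or {}).items():
        hard[f"{class_name}.storageclass.storage.k8s.io/requests.storage"] = size
    if ephemeral_requests is not None:
        hard["requests.ephemeral-storage"] = ephemeral_requests  # node-local scratch
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "storage-quota", "namespace": namespace},
        "spec": {"hard": hard},
    }


if __name__ == "__main__":
    manifest = storage_quota("team-a", "500Gi", 20,
                             per_class={"fast-nvme": "200Gi"},
                             ephemeral_requests="50Gi")
    print(json.dumps(manifest, indent=2))  # e.g. pipe into `kubectl apply -f -`
```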

The biggest operational win comes from stopping quota violations early. The API server rejects an over-quota claim before the claim is persisted, so the storage system never allocates capacity for it and the cluster is not left holding orphaned volumes. This keeps provisioning workflows sane during scale events.

Storage Resource Quotas in Kubernetes and NVMe/TCP

NVMe/TCP raises the stakes on quota discipline. Fast storage lets teams generate more data in less time, and “temporary” datasets can become permanent by accident. Quotas prevent one namespace from consuming the headroom that other workloads need to keep latency stable.

In Software-defined Block Storage deployments, quota policy belongs next to performance controls. When you combine storage budgets with QoS caps, you protect both capacity and tail latency during spikes. Simplyblock positions NVMe/TCP as a core transport for high-performance Kubernetes Storage. That suits clusters that want a SAN alternative without vendor lock-in.

Measuring and Benchmarking Storage Quota Impact

Measure quota outcomes with operational signals, not only capacity charts. Track the PVC creation rate, the percentage of rejected requests, and the time teams spend blocked by quota. Add workload metrics that show the “why,” such as p95 and p99 storage I/O latency. Also track pods that are stuck Pending or restarting because of failed claims.
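
Most of these signals reduce to simple arithmetic. The sketch below computes three things:

- a rejection rate from create/reject counters;
- a nearest-rank p95 or p99 from latency samples;
- the quota resources that have crossed an alert threshold. The 80% default is an arbitrary, hypothetical choice.

Where the counters and samples come from is left open; the function names are illustrative.

```python
import math


def rejection_rate(created: int, rejected: int) -> float:
    """Share of PVC create attempts that the quota check rejected."""
    attempts = created + rejected
    return rejected / attempts if attempts else 0.0


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile for p in (0, 100], e.g. p=99 for p99 latency."""
    if not samples:
        raise ValueError("percentile of an empty sample set")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def near_limit(used: dict, hard: dict, threshold: float = 0.8) -> list:
    """Quota resources whose usage has reached `threshold` of the hard limit."""
    return sorted(
        name
        for name, limit in hard.items()
        if limit > 0 and used.get(name, 0) / limit >= threshold
    )
```

The near-limit check gives teams a warning before requests start failing, instead of after.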

Also measure local ephemeral storage behavior if your workloads write heavily to node-local scratch. Kubernetes documents ephemeral-storage requests and limits for containers. A ResourceQuota can cap a namespace's totals with requests.ephemeral-storage and limits.ephemeral-storage.

Approaches for Improving Quota Outcomes in Shared Clusters

Use quotas as part of a simple operating model that teams can follow without extra meetings.

- Set quotas per namespace for total requested storage and PVC count, and match those to environment tiers.

- Apply LimitRanges to set sane defaults and size bounds, so developers do not guess sizes under pressure.

- Standardize StorageClasses, and map each class to a budget-friendly quota tier.

- Add QoS caps for key namespaces so background jobs do not crush production latency.

- Review quota exceptions on a schedule, and retire “temporary” increases that never roll back.
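
One way to encode this operating model is a small lookup from environment tier to budget, with time-boxed exceptions layered on top. The tier names, budget values, and the `QuotaException` record are hypothetical. The output dict has the shape of a ResourceQuota `spec.hard` block but uses raw byte counts rather than Kubernetes quantity strings.

```python
from dataclasses import dataclass
from datetime import date

GI = 1024 ** 3

# Hypothetical per-tier budgets: (requests.storage in bytes, PVC count).
TIER_BUDGETS = {
    "dev": (100 * GI, 10),
    "staging": (500 * GI, 25),
    "production": (2048 * GI, 100),
}


@dataclass
class QuotaException:
    namespace: str
    extra_storage: int  # bytes granted on top of the tier budget
    expires: date       # every exception carries an end date


def effective_hard(namespace: str, tier: str, exceptions: list, today: date) -> dict:
    """Tier budget plus any unexpired exceptions for this namespace."""
    storage, pvcs = TIER_BUDGETS[tier]
    storage += sum(
        e.extra_storage
        for e in exceptions
        if e.namespace == namespace and e.expires >= today
    )
    return {"requests.storage": storage, "persistentvolumeclaims": pvcs}


def expired(exceptions: list, today: date) -> list:
    """Exceptions past their end date: candidates to retire at the next review."""
    return [e for e in exceptions if e.expires < today]
```

Because each exception carries an expiry date, a temporary increase drops out of the effective budget automatically. The scheduled review then only has to clean up the expired records.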

Quota Models Compared for Kubernetes Storage

The table below compares common quota setups and the trade-offs teams see in day-to-day operations.

| Quota model | What it protects | Common failure mode | Best fit |
|---|---|---|---|
| Capacity-only quota (GiB) | Budget control | Too many small PVCs, API churn | Small teams, low churn |
| Capacity + PVC count | Budget + API stability | Developers hit limits without defaults | Shared clusters without LimitRanges |
| Capacity + count + QoS on Software-defined Block Storage | Budget + stability + latency | Needs clear tier rules | Multi-tenant Kubernetes Storage on NVMe/TCP |

Simplyblock™ for Predictable Storage Resource Quotas in Kubernetes

Simplyblock™ pairs Kubernetes Storage policy with Software-defined Block Storage controls, so quotas connect to real performance and tenant isolation. Simplyblock also targets NVMe/TCP efficiency, which helps teams keep CPU overhead down while they enforce guardrails at scale.

Quota policy stays more effective when storage behavior stays consistent. When a platform can cap throughput and IOPS, and when it can keep locality predictable, teams see fewer “quota is fine, but the cluster is slow” incidents. Simplyblock highlights data locality as a first-class factor for stable performance, which complements quota design in busy namespaces.
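
Throughput and IOPS caps are commonly expressed as a token bucket. Each operation spends a token, tokens refill at the capped rate, and the bucket size bounds bursts. The Python sketch below illustrates that generic technique with hypothetical rate and burst values. It is not simplyblock's QoS implementation.

```python
import time


class TokenBucket:
    """Generic IOPS cap: `rate` operations per second, bursts up to `burst`."""

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so a short burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self, ops: float = 1.0) -> bool:
        """Spend `ops` tokens if available; otherwise the I/O is throttled."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= ops:
            self.tokens -= ops
            return True
        return False
```

Injecting the clock keeps the limiter testable. A real cap would queue or delay throttled I/O rather than simply returning `False`.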

Future Directions and Advancements in Namespace Storage Budgeting

Kubernetes keeps adding stronger policy hooks and clearer signals around enforcement. Teams will push for more “self-service with guardrails,” including automated quota sizing based on service tiers, and better alerting before a namespace hits the wall.

Storage platforms will also tighten the link between quotas and performance budgets. As NVMe/TCP adoption grows, teams will expect quotas to protect not only capacity, but also predictable latency during bursts.

Related Terms

Teams often review these glossary pages alongside Storage Resource Quotas in Kubernetes when they set measurable targets for Kubernetes Storage and Software-defined Block Storage.

- Data Locality

- IO Contention

- Storage Metrics in Kubernetes

- Pod Affinity and Storage

- Kubernetes Capacity Tracking for Storage

Questions and Answers

Storage Resource Quotas in Kubernetes limit how much storage a namespace can request and how many PVCs it can create. They help enforce fair resource allocation and prevent over-provisioning. This is essential in multi-tenant clusters running Kubernetes Stateful workloads.

Quotas are set using ResourceQuota objects that specify hard limits such as requests.storage or persistentvolumeclaims (the PVC count). The API server enforces these limits when a PVC is created, before any Kubernetes CSI driver provisions a volume. Enforcement therefore does not depend on the driver.

Yes. When a PVC is created, Kubernetes checks quota compliance at admission, before the claim is stored and before dynamic provisioning starts. This keeps dynamically provisioned volumes within the namespace's allowed capacity, including those on block storage replacement backends.

Absolutely. You can enforce quotas per StorageClass using keys of the form <storage-class-name>.storageclass.storage.k8s.io/requests.storage and <storage-class-name>.storageclass.storage.k8s.io/persistentvolumeclaims. This helps isolate tenants and tiers in multi-tenant Kubernetes storage.
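
To see how much room each StorageClass has left, you can read a quota's `status.hard` and `status.used` maps, for example from `kubectl get resourcequota <name> -o json`. The sketch below assumes that JSON has already been loaded into a dict. Its quantity parser is a simplified stand-in that handles only plain integers and the binary suffixes Ki, Mi, Gi, and Ti, not the full Kubernetes quantity syntax. The class name `fast-nvme` used in testing is hypothetical.

```python
import re

# Simplified subset of Kubernetes quantity syntax: integers with binary suffixes.
_SUFFIX = {"": 1, "Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4}
_QUANTITY = re.compile(r"(\d+)(Ki|Mi|Gi|Ti)?")
_CLASS_KEY = re.compile(r"(.+)\.storageclass\.storage\.k8s\.io/requests\.storage")


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '200Gi' to bytes (simplified parser)."""
    m = _QUANTITY.fullmatch(q)
    if not m:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(m.group(1)) * _SUFFIX[m.group(2) or ""]


def remaining_by_class(status: dict) -> dict:
    """Bytes still available per StorageClass, from a ResourceQuota status."""
    remaining = {}
    used = status.get("used", {})
    for key, hard in status.get("hard", {}).items():
        m = _CLASS_KEY.fullmatch(key)
        if m:  # only per-class requests.storage keys
            remaining[m.group(1)] = parse_quantity(hard) - parse_quantity(used.get(key, "0"))
    return remaining
```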

By capping storage requests at the namespace level, teams avoid runaway PVCs and gain predictability. Combined with usage tracking, this supports optimizing Amazon EBS volume costs and resource efficiency across Kubernetes clusters.