Kubernetes NodeUnpublishVolume

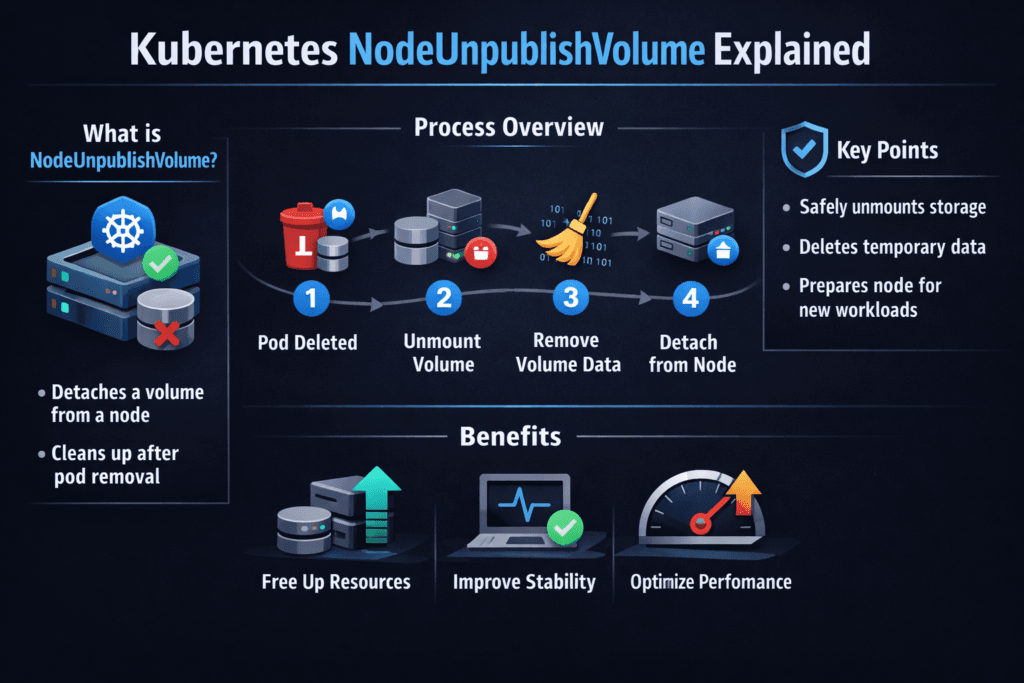

Kubernetes NodeUnpublishVolume is the CSI node-side call that removes a volume mount from a pod’s target path on a worker node. The kubelet triggers it when a pod stops, moves, or gets drained off a node. The CSI specification requires the call to be idempotent, so a repeated request for an already-unpublished volume must succeed without causing damage or new errors.
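To make the idempotency requirement concrete, here is a minimal Python sketch of the control flow a node plugin might follow. It is not taken from any real driver: `node_unpublish`, `is_mounted`, and `unmount` are hypothetical names, and an in-memory set stands in for the node's mount table.

```python
import os
import tempfile


def node_unpublish(target_path, is_mounted, unmount):
    """Idempotent unpublish sketch: safe to call any number of times.

    is_mounted and unmount are injected callables (hypothetical stand-ins
    for a driver's real mount utilities).
    """
    # Nothing at the target path: an earlier call already cleaned up.
    if not os.path.exists(target_path):
        return "OK"
    # Only unmount when something is actually mounted there.
    if is_mounted(target_path):
        unmount(target_path)
    # Remove the now-empty target directory so a retry sees a clean state.
    os.rmdir(target_path)
    return "OK"


# Demo with a fake mount table (a plain set of paths).
mounted = set()
target = os.path.join(tempfile.mkdtemp(), "mount")
os.mkdir(target)
mounted.add(target)

first = node_unpublish(target, mounted.__contains__, mounted.discard)
second = node_unpublish(target, mounted.__contains__, mounted.discard)  # retry
```

Both calls return `"OK"`: the second one finds no target path and exits early, which is the behavior the kubelet relies on when it retries.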

This step sits on the critical path for clean rollouts. If unpublish lags, pods can stick in Terminating, nodes can drain slowly, and stateful apps can miss recovery targets.

Why Unpublish Quality Drives Day-Two Reliability

Unmount problems usually surface when the cluster gets busy. A single stuck mount can block a deployment, delay node maintenance, and raise risk during an outage. The kubelet also chains cleanup work behind a successful unpublish, so one failure can trigger long retry loops and noisy events.

Good platform teams treat unpublish latency as a first-class SLO. They track it, test it under load, and set clear rules for how apps should open and close files.

Kubernetes NodeUnpublishVolume in Kubernetes Storage Lifecycles

In Kubernetes Storage, NodeUnpublishVolume ties together the pod lifecycle and the storage lifecycle. The kubelet calls publish when a pod needs the volume, then calls unpublish when the pod no longer needs it. CSI developer docs describe NodePublishVolume and NodeUnpublishVolume as required node methods for drivers.
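The ordering of node calls can be sketched as a toy state model. This assumes a driver that also implements the optional staging step (NodeStageVolume and NodeUnstageVolume); the class and method names are illustrative only and do not come from any CSI library.

```python
class NodeVolume:
    """Toy model of one volume's node-side CSI state on a single node."""

    def __init__(self):
        self.staged = False
        self.targets = set()  # pod target paths currently published

    def node_stage(self):
        self.staged = True  # staging twice is harmless

    def node_publish(self, target_path):
        if not self.staged:
            raise RuntimeError("publish before stage")
        self.targets.add(target_path)

    def node_unpublish(self, target_path):
        # Idempotent: discarding an unknown path is a no-op.
        self.targets.discard(target_path)

    def node_unstage(self):
        if self.targets:
            raise RuntimeError("volume still published to a pod path")
        self.staged = False


vol = NodeVolume()
vol.node_stage()
vol.node_publish("/pods/a/mount")    # hypothetical target paths
vol.node_publish("/pods/b/mount")
vol.node_unpublish("/pods/a/mount")
vol.node_unpublish("/pods/a/mount")  # repeated call, no error
vol.node_unpublish("/pods/b/mount")
vol.node_unstage()                   # allowed only after the last unpublish
```

The point of the model is the dependency: unstage, and any detach that follows it, can proceed only once every pod path has been unpublished.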

Some volume types add more nuance. CSI ephemeral inline volumes skip the usual provisioning and attach steps, so the node service takes on extra work: the driver typically creates the volume during NodePublishVolume and is expected to clean it up during NodeUnpublishVolume.

Kubernetes NodeUnpublishVolume and NVMe/TCP at Scale

NVMe/TCP makes the data path fast, but it does not make unmounts automatic. Unpublish still depends on the kubelet, the CSI node plugin, the mount table, and the app’s file handles. Under heavy I/O, sloppy shutdown behavior can extend unpublish time and inflate tail latency.

Software-defined Block Storage helps when it enforces QoS and tenant limits. That control keeps one namespace from turning a drain window into a noisy-neighbor event. In large Kubernetes Storage fleets, this mix of NVMe/TCP performance and policy often matters more than peak IOPS.

Benchmarking Kubernetes NodeUnpublishVolume End-to-End

Benchmark the full lifecycle, not only the mount step. Measure time from a delete or eviction event to a completed unpublish and cleared mount directory. Add node drain time, because drains expose hidden coupling between workload shutdown and storage cleanup.

Use a simple two-part test:

- Run steady writes that match production.

- Trigger rolling deletes or drains at a fixed pace, and record unpublish latency, retries, and stuck terminations.

This method catches issues that a single-node test misses, such as mount reference leaks and slow cleanup under pressure.
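One way to turn the recorded measurements into numbers is sketched below. It assumes each sample is a `(delete_ts, unpublish_done_ts, retries)` tuple in seconds, collected by whatever tooling the team already uses; the tuple layout, the function names, and the 60-second "stuck" threshold are assumptions made for this example.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples, stuck_after_s=60.0):
    """Summarize (delete_ts, unpublish_done_ts, retries) samples, in seconds.

    unpublish_done_ts is None when the unpublish never completed during the
    test run. stuck_after_s is an arbitrary threshold chosen for this sketch.
    """
    latencies = [done - start for start, done, _ in samples if done is not None]
    never_done = sum(1 for _, done, _ in samples if done is None)
    slow = sum(1 for lat in latencies if lat > stuck_after_s)
    return {
        "p50_s": percentile(latencies, 50) if latencies else None,
        "p99_s": percentile(latencies, 99) if latencies else None,
        "total_retries": sum(retries for _, _, retries in samples),
        "stuck": never_done + slow,
    }
```

Reporting the tail (p99) and the stuck count next to the median matters here, because drains are usually blocked by the slowest unpublish rather than the typical one.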

Operational Guardrails That Shorten Unmount Time

Use one set of rules across teams so unpublish stays predictable during rollouts and maintenance.

- Set clear shutdown hooks in stateful apps, and close files before exit so the node can unmount quickly.

- Avoid sharing the same mount target across pods on the same node unless you truly need it.

- Keep CSI node components on a single upgrade track to reduce behavior drift.

- Watch kubelet events for repeated unmount errors, and alert before drains stall.

- Enforce storage QoS so background churn cannot starve foreground cleanup work.
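The first guardrail, a shutdown hook that closes files before exit, could look like the following Python sketch. `GracefulShutdown` is a hypothetical helper written for this example, not part of any library. It assumes the app receives SIGTERM from the kubelet and has enough grace period left to flush.

```python
import os
import signal
import threading


class GracefulShutdown:
    """Tracks open files and closes them once SIGTERM has been received."""

    def __init__(self):
        self.stop = threading.Event()
        self._files = []

    def track(self, handle):
        self._files.append(handle)
        return handle

    def install(self):
        # Python only allows signal handlers to be set from the main thread.
        signal.signal(signal.SIGTERM, self._on_term)

    def _on_term(self, signum, frame):
        # Keep the handler tiny: just tell the main loop to stop.
        self.stop.set()

    def close_all(self):
        for handle in self._files:
            if not handle.closed:
                handle.flush()
                os.fsync(handle.fileno())  # push buffered data to the volume
                handle.close()
        self._files.clear()


# Typical use (the path is hypothetical):
#   shutdown = GracefulShutdown()
#   shutdown.install()
#   log = shutdown.track(open("/data/app.log", "a"))
#   while not shutdown.stop.wait(timeout=1.0):
#       ...  # do work
#   shutdown.close_all()
```

Once every handle on the volume is closed and the process exits, the node plugin can unmount the target path without hitting a busy mount.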

Unmount and Detach Strategies Compared

The table below compares common patterns that influence unpublish behavior and operational risk.

| Pattern | What it improves | Where it hurts | Best fit |
|---|---|---|---|
| Fast app shutdown + clean close | Short unpublish time | Needs app discipline | Databases and queues |
| Aggressive drain timeouts | Faster node maintenance windows | Can risk data safety if apps ignore SIGTERM | Stateless-heavy clusters |
| QoS + tiering in Software-defined Block Storage | Predictable latency during drains | Needs tier design | Multi-tenant Kubernetes Storage |
| Ephemeral inline volumes with cleanup on unpublish | Simple short-lived storage | Higher churn rate | CI jobs, scratch workloads |

Kubernetes NodeUnpublishVolume Outcomes with Simplyblock™

Simplyblock™ supports Kubernetes Storage on NVMe/TCP and applies Software-defined Block Storage controls that keep noisy neighbors in check. That foundation helps teams drain nodes and roll stateful updates without turning unmounts into an incident.

Simplyblock also fits disaggregated designs where storage lives on dedicated nodes. In those layouts, clean unpublish behavior protects both the compute side and the storage side during upgrades and failure recovery.

Future Directions for Kubernetes NodeUnpublishVolume

Kubernetes keeps tightening the node lifecycle story, especially around planned shutdown and maintenance flows. Node shutdown handling and better drain behavior push clusters toward safer, faster transitions.

Driver and platform teams will also focus more on clarity and idempotency in node calls. Better metrics around unmount phases should make it easier to spot issues before pods get stuck.

Questions and Answers

NodeUnpublishVolume is part of the CSI lifecycle that unmounts a volume from a specific pod path on a node when the pod is deleted. It’s essential in Kubernetes CSI workflows to safely clean up node-level mounts after use.

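NodeStageVolume prepares a volume for use on the node, typically at a node-wide staging path, and NodePublishVolume then makes it available at a pod’s target path. NodeUnpublishVolume reverses the publish step by unmounting the volume from that pod path, while NodeUnstageVolume later reverses staging. This ordering ensures clean unmounts, especially for persistent volumes in stateful Kubernetes workloads such as databases or queues.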

A failure in NodeUnpublishVolume can leave the volume in use on the node, which holds up the later unstage and detach steps and blocks reattachment on another node. This impacts failover and rescheduling, particularly for high-availability setups on block storage platforms like Simplyblock.

Although ephemeral volumes are short-lived, they still require unpublishing when the pod terminates. This phase is part of the same CSI lifecycle, supported fully by Simplyblock’s Kubernetes CSI implementation.

Node resource contention, kernel errors, or lingering mount points can delay unpublishing. For latency-sensitive apps like PostgreSQL on Simplyblock, reliable unpublishing is essential for rapid pod recovery and consistent performance.