Control Plane vs Data Plane in Storage


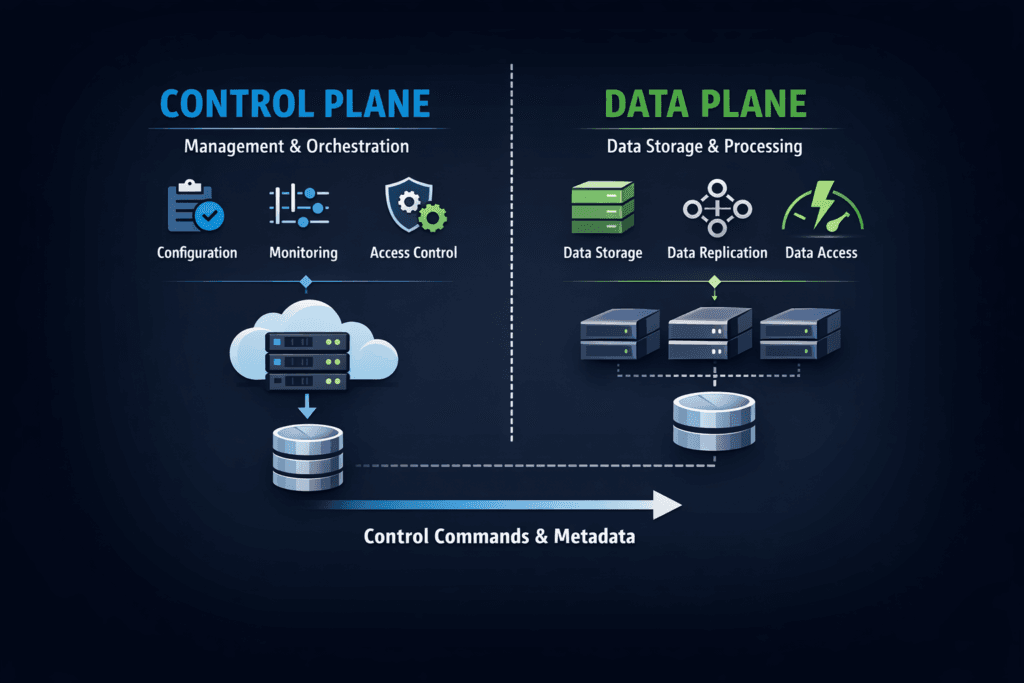

The control plane vs. data plane split in storage separates “decisions” from “I/O.” The control plane creates, attaches, resizes, snapshots, and places volumes. The data plane serves reads and writes and carries the performance burden. When teams mix these roles, they usually get slow provisioning, noisy outages, and confusing troubleshooting.

Executives care because the split shapes risk and cost. A weak control plane slows delivery and increases incident time. A weak data plane turns every database spike into a ticket. In Kubernetes Storage, the split matters even more because CSI automation drives most lifecycle tasks, while applications demand stable tail latency. A Software-defined Block Storage platform lets you tune both planes without buying a new array each time requirements change.

Where the Storage Control Plane and Data Plane Draw the Line

The control plane answers “what should happen” and “where should it run.” It handles policies, placement, health, and orchestration. It also drives safety actions like fencing, failover, and rebuild scheduling.

The data plane answers “how fast can we serve I/O” and “how steady is latency.” It covers the I/O path, protocol processing, queue depth behavior, and CPU cost per I/O. If the data path burns CPU or adds copies, latency drifts up under load.

A simple way to spot the boundary: if an action changes a resource state, the control plane owns it. If an action moves bytes for an app, the data plane owns it.
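The rule of thumb can be written as a tiny classifier. This is a minimal sketch; the operation names and the `owning_plane` helper are hypothetical illustrations, not a simplyblock or CSI API.

```python
from enum import Enum


class Plane(Enum):
    CONTROL = "control"
    DATA = "data"


# Hypothetical operation names, used only to illustrate the rule.
STATE_CHANGING = {"create", "attach", "detach", "resize", "snapshot", "delete", "failover"}
BYTE_MOVING = {"read", "write", "flush"}


def owning_plane(operation: str) -> Plane:
    """State changes belong to the control plane; moving bytes for an app belongs to the data plane."""
    op = operation.lower()
    if op in STATE_CHANGING:
        return Plane.CONTROL
    if op in BYTE_MOVING:
        return Plane.DATA
    raise ValueError(f"unclassified operation: {operation!r}")


for op in ("resize", "write", "snapshot", "read"):
    print(f"{op:>8} -> {owning_plane(op).value} plane")
```

Raising on unknown operations, rather than guessing, keeps the boundary explicit when a new operation type appears.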


Running a Strong Storage Control Plane at Scale

Control planes fail in predictable ways. They thrash on retries, overload themselves with reconciliation loops, or stall on leader-election contention. Those issues do not show up in an IOPS chart, but they still break production.
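Retry thrash is usually tamed by spacing retries out. The text does not prescribe a fix, so the sketch below shows one common technique chosen for illustration: capped exponential backoff with "full jitter", which keeps many controllers retrying the same failed call from hitting the API in lockstep. The base delay, cap, and function name are hypothetical; real platforms tune these differently.

```python
import random
from typing import List, Optional


def backoff_delays(attempts: int, base_s: float = 0.5, cap_s: float = 30.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Capped exponential backoff with full jitter: the delay before retry
    number `attempt` is drawn uniformly from [0, min(cap_s, base_s * 2**attempt)]."""
    if rng is None:
        rng = random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap_s, base_s * (2 ** attempt))
        delays.append(rng.uniform(0.0, ceiling))
    return delays


# Seeded only so the example is reproducible; a real controller would not seed.
for attempt, delay in enumerate(backoff_delays(8, rng=random.Random(42))):
    print(f"retry {attempt}: wait {delay:.2f}s")
```

The jitter matters as much as the exponent: without it, controllers that failed together retry together.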

Good teams set clear SLOs for lifecycle tasks. They measure time-to-provision, time-to-attach, and time-to-resize. They also track error rates on orchestration calls. Fast, steady lifecycle work reduces change risk, especially during node drain, cluster upgrades, and autoscaling.
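To make lifecycle SLOs concrete, the sketch below checks a batch of measured durations against a p95 target and an error-rate ceiling. `LifecycleSLO`, `check_slo`, and the thresholds are hypothetical. Nearest-rank is only one percentile definition, so a monitoring system may report slightly different values.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LifecycleSLO:
    operation: str
    p95_target_s: float    # hypothetical target for 95th-percentile duration
    max_error_rate: float  # hypothetical ceiling on failed calls (0.0 to 1.0)


def nearest_rank_percentile(samples: list[float], pct: float) -> float:
    """Smallest sample such that at least pct% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def check_slo(slo: LifecycleSLO, durations_s: list[float], failures: int) -> list[str]:
    """durations_s holds successful calls only; failures counts failed calls.
    Returns violations; an empty list means the SLO holds."""
    violations = []
    p95 = nearest_rank_percentile(durations_s, 95)
    if p95 > slo.p95_target_s:
        violations.append(f"{slo.operation}: p95 {p95:.1f}s > {slo.p95_target_s:.1f}s")
    error_rate = failures / (len(durations_s) + failures)
    if error_rate > slo.max_error_rate:
        violations.append(f"{slo.operation}: error rate {error_rate:.1%} > {slo.max_error_rate:.1%}")
    return violations


provision = LifecycleSLO("provision", p95_target_s=30.0, max_error_rate=0.01)
durations = [4.2, 5.1, 3.9, 6.0, 41.0, 4.8, 5.5, 4.1, 5.0, 4.4]
print(check_slo(provision, durations, failures=0))
```

A single slow provision in ten pushes p95 over target here, which is exactly the kind of drift an alert should catch before it becomes an incident.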

Kubernetes adds another layer: controllers, sidecars, and API calls. If those parts fall behind, pods wait, even when storage hardware sits idle.

Control Plane vs Data Plane in Kubernetes Storage

In Kubernetes Storage, the control plane often includes CSI controllers, node plugins, and platform policies. It binds PVCs to PVs, manages snapshots, and handles topology rules. It also coordinates the attach and mount steps so the workload can start.
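The attach and mount coordination follows the CSI RPC sequence: `CreateVolume` and `ControllerPublishVolume` run in the controller plugin, then `NodeStageVolume` and `NodePublishVolume` run in the node plugin. The sketch below models that order as a simple tracker. `VolumeLifecycle` is hypothetical, and it assumes a driver that implements all four steps; in the CSI specification the publish and stage steps are optional capabilities.

```python
from typing import List

# Order in which the RPCs run before a pod can use the volume.
CSI_SEQUENCE = [
    "CreateVolume",             # controller plugin: provision the backing volume
    "ControllerPublishVolume",  # controller plugin: attach it to the chosen node
    "NodeStageVolume",          # node plugin: stage it on the node (e.g. connect, format)
    "NodePublishVolume",        # node plugin: mount it at the pod's target path
]


class VolumeLifecycle:
    """Hypothetical tracker for control-plane progress on one volume.
    Data-plane I/O is possible only after every step has completed."""

    def __init__(self, volume_id: str) -> None:
        self.volume_id = volume_id
        self.completed: List[str] = []

    def apply(self, rpc: str) -> None:
        if len(self.completed) == len(CSI_SEQUENCE):
            raise RuntimeError(f"{self.volume_id}: lifecycle already complete")
        expected = CSI_SEQUENCE[len(self.completed)]
        if rpc != expected:
            raise RuntimeError(f"{self.volume_id}: got {rpc}, expected {expected}")
        self.completed.append(rpc)

    @property
    def ready_for_io(self) -> bool:
        return self.completed == CSI_SEQUENCE


vol = VolumeLifecycle("pvc-demo")
for rpc in CSI_SEQUENCE:
    vol.apply(rpc)
print(vol.ready_for_io)  # True
```

Every step before `ready_for_io` is control-plane latency the pod waits on, even though no application byte has moved yet.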

The data plane serves live reads and writes once the pod runs. That path must stay lean under contention because shared clusters create bursts and mixed I/O patterns. A fast backend can still feel slow if the data path adds overhead per I/O or if it shares CPU with noisy pods.
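Per-I/O overhead adds up quickly. A back-of-the-envelope estimate is: CPU cores consumed = IOPS × CPU time per I/O. The per-I/O costs below are hypothetical placeholders chosen to show the arithmetic, not measurements of any specific stack.

```python
def cores_for_io(iops: float, cpu_us_per_io: float) -> float:
    """CPU cores the I/O path consumes: IOPS x CPU-microseconds per I/O / 1e6."""
    return iops * cpu_us_per_io / 1_000_000


# Hypothetical per-I/O CPU costs, for illustration only.
for label, cost_us in (("lean path", 5.0), ("path with extra copies", 20.0)):
    print(f"{label}: {cores_for_io(200_000, cost_us):.1f} cores at 200k IOPS")
```

Those cores are the same cores noisy pods compete for, so a heavier path both costs more and is more exposed to contention.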

The best results come from clear separation. Keep orchestration stable and predictable. Keep the I/O path optimized and isolated.

Control Plane vs Data Plane in Storage with NVMe/TCP

NVMe/TCP sits in the data plane. It carries the I/O workload over standard Ethernet and can deliver high parallelism without specialized fabrics. That makes it a strong fit for scale-out designs, including disaggregated Kubernetes Storage deployments.
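One way to see why parallelism matters is Little's Law: outstanding I/Os = IOPS × latency, so IOPS = outstanding I/Os / latency. The 100-microsecond latency below is a hypothetical figure used only for the arithmetic.

```python
def iops_from_queue_depth(outstanding_ios: int, latency_us: float) -> float:
    """Little's Law rearranged: throughput (IOPS) = concurrency / latency.
    Assumes latency stays flat as concurrency grows, which holds only until
    the device, network, or CPU saturates."""
    return outstanding_ios * 1_000_000 / latency_us


# Hypothetical 100-microsecond round trip, purely for illustration.
for qd in (1, 8, 32):
    print(f"QD {qd:>2}: {iops_from_queue_depth(qd, 100.0):>9,.0f} IOPS")
```

At a fixed latency, throughput scales only with the number of I/Os in flight, which is why a protocol that keeps many queues busy matters for scale-out designs.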

Even with NVMe/TCP, the control plane still decides placement, failover, and recovery pacing. If it schedules rebuilds too aggressively, it can push p99 latency out of bounds. If it delays healing, data stays under-protected longer, and a second failure in that window can cause data loss. The platform needs policy controls that keep the tradeoff explicit.
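A policy control that keeps this tradeoff explicit can be as simple as a feedback loop on rebuild bandwidth. The sketch below uses AIMD (additive increase, multiplicative decrease), a technique chosen here for illustration rather than one the text attributes to any platform. `RebuildPolicy` and all thresholds are hypothetical; the floor keeps healing from stalling, the ceiling and backoff protect p99.

```python
from dataclasses import dataclass


@dataclass
class RebuildPolicy:
    p99_target_ms: float    # hypothetical latency ceiling for foreground I/O
    min_rate_mbps: float    # floor so healing never stalls completely
    max_rate_mbps: float    # ceiling when foreground I/O is quiet
    backoff_factor: float = 0.5


def next_rebuild_rate(policy: RebuildPolicy, current_mbps: float, observed_p99_ms: float) -> float:
    """Halve the rebuild rate when p99 is over target, otherwise add 10% of
    the ceiling; always clamp to [min_rate_mbps, max_rate_mbps]."""
    if observed_p99_ms > policy.p99_target_ms:
        proposed = current_mbps * policy.backoff_factor
    else:
        proposed = current_mbps + 0.1 * policy.max_rate_mbps
    return max(policy.min_rate_mbps, min(policy.max_rate_mbps, proposed))


policy = RebuildPolicy(p99_target_ms=2.0, min_rate_mbps=50.0, max_rate_mbps=1000.0)
print(next_rebuild_rate(policy, 800.0, observed_p99_ms=3.5))  # over target: back off
print(next_rebuild_rate(policy, 400.0, observed_p99_ms=1.2))  # under target: speed up
print(next_rebuild_rate(policy, 60.0, observed_p99_ms=5.0))   # clamped at the floor
```

Raising `min_rate_mbps` favors durability; lowering `p99_target_ms` favors tail latency. Making both visible as parameters is the point.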

A well-built stack treats NVMe/TCP performance as a budget. It spends that budget on the workloads that need it, not on avoidable overhead.

Benchmarking Control Plane vs Data Plane in Storage Under Load

Benchmarking should measure both planes, not just I/O. Start with data-plane tests: steady reads, steady writes, and mixed patterns. Capture p95 and p99 latency, plus bandwidth and IOPS. Then add stress: a node restart, a disk loss, or a rebalance event, while the workload keeps running.
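The data-plane profiles above can be pinned down as fio jobs so every run uses identical parameters. This sketch only builds the argument lists and parses results. The block size, queue depth, mix, runtime, and device path are hypothetical placeholders. The parser assumes fio 3.x JSON output, where completion-latency percentiles sit under `clat_ns.percentile`.

```python
import shlex
from typing import Dict, List

# Hypothetical profiles; tune bs, iodepth, and the read/write mix to the workload.
PROFILES: Dict[str, Dict[str, object]] = {
    "steady-read": {"rw": "randread", "bs": "4k", "iodepth": 32},
    "steady-write": {"rw": "randwrite", "bs": "4k", "iodepth": 32},
    "mixed-70-30": {"rw": "randrw", "bs": "4k", "iodepth": 32, "rwmixread": 70},
}


def fio_command(name: str, device: str, runtime_s: int = 300) -> List[str]:
    """Build (not run) an fio argument list. Write profiles overwrite the
    target, so point `device` at a scratch volume."""
    args = [
        "fio", f"--name={name}", f"--filename={device}",
        "--ioengine=libaio", "--direct=1", "--time_based", f"--runtime={runtime_s}",
        "--output-format=json",
    ]
    args += [f"--{key}={value}" for key, value in PROFILES[name].items()]
    return args


def p99_latency_ms(fio_json: dict, direction: str = "read") -> float:
    """Read p99 completion latency for the first job from fio's JSON output."""
    ns = fio_json["jobs"][0][direction]["clat_ns"]["percentile"]["99.000000"]
    return ns / 1_000_000


print(shlex.join(fio_command("mixed-70-30", "/dev/nvme1n1")))
```

Keeping profiles in one table makes it easy to rerun the same job while injecting a node restart or rebalance event alongside it.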

Next, measure control-plane work during the same window. Track how long provisioning takes when the cluster stays busy. Track attach time during node drain. Track resize time under load. Those numbers often predict incident impact more than peak throughput does.
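Measuring control-plane timings "during the same window" means timing lifecycle calls while the I/O workload is still running. The harness below is a minimal sketch. `fake_provision` and `fake_io_load` are hypothetical stand-ins. In a real test they would be replaced by, for example, creating a PVC and waiting for it to bind, and by the running fio job.

```python
import threading
import time
from typing import Callable, List


def time_under_load(operation: Callable[[], None],
                    background: Callable[[threading.Event], None],
                    runs: int = 5) -> List[float]:
    """Start `background` (the workload stand-in) in a thread, then time
    `operation` (the lifecycle-call stand-in) `runs` times while it is active."""
    stop = threading.Event()
    worker = threading.Thread(target=background, args=(stop,), daemon=True)
    worker.start()
    durations = []
    try:
        for _ in range(runs):
            start = time.perf_counter()
            operation()
            durations.append(time.perf_counter() - start)
    finally:
        stop.set()
        worker.join()
    return durations


def fake_provision() -> None:
    time.sleep(0.05)  # placeholder for a real provision/attach/resize call


def fake_io_load(stop: threading.Event) -> None:
    while not stop.is_set():
        sum(range(10_000))  # placeholder busy work instead of real I/O


print([round(d, 3) for d in time_under_load(fake_provision, fake_io_load)])
```

Comparing these numbers against the same calls on an idle cluster shows how much load inflates lifecycle latency.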

Make the test repeatable. Use the same profile, the same number of pods, and the same resource limits for each run. That discipline makes regressions obvious.
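One way to enforce that discipline is to store the run configuration next to the results and refuse to compare runs whose configurations differ. `RunConfig`, `find_regressions`, the metric names, and the 10% tolerance are hypothetical choices for this sketch. It also assumes lower is better for every metric.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    """Everything that must stay identical between runs for results to be comparable."""
    profile: str
    pods: int
    cpu_limit: str
    memory_limit: str


def find_regressions(baseline: dict, candidate: dict, tolerance: float = 0.10) -> list[str]:
    """Flag metrics that got worse by more than `tolerance`.
    Assumes lower is better (latencies, durations)."""
    if baseline["config"] != candidate["config"]:
        raise ValueError("configs differ; results are not comparable")
    flagged = []
    for metric, base_value in baseline["metrics"].items():
        new_value = candidate["metrics"][metric]
        if new_value > base_value * (1 + tolerance):
            flagged.append(f"{metric}: {base_value} -> {new_value}")
    return flagged


cfg = RunConfig("mixed-70-30", pods=20, cpu_limit="2", memory_limit="4Gi")
base = {"config": cfg, "metrics": {"p99_read_ms": 1.8, "attach_p95_s": 6.0}}
new = {"config": cfg, "metrics": {"p99_read_ms": 1.9, "attach_p95_s": 9.5}}
print(find_regressions(base, new))  # ['attach_p95_s: 6.0 -> 9.5']
```

Note that the flagged regression here is a control-plane metric, not throughput, which is the kind of signal a peak-IOPS chart would miss.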

Practical Ways to Improve End-to-End Results

One change rarely fixes everything. Teams get better outcomes by tightening the interface between orchestration and I/O, then removing waste from each plane:

- Put clear SLOs on provisioning, attach, resize, and snapshot operations, then alert on drift.

- Keep the data path lean by reducing extra data copies and avoiding unnecessary kernel transitions (system calls, interrupts, context switches) where possible.

- Enforce per-volume QoS so background rebuild work does not steal the entire latency budget.

- Separate control traffic from bulk I/O traffic when the network design allows it.

- Test upgrades with real workloads, not synthetic idle clusters.
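Per-volume QoS is often built on a token bucket. Each volume (and the rebuild stream) gets its own bucket, so background work cannot drain the budget that application volumes rely on. This is a minimal sketch of the general technique, not simplyblock's QoS implementation. The rates and burst sizes are hypothetical, and time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """IOPS limiter: tokens refill at `rate` per second up to `burst`;
    each admitted I/O spends one token."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at burst.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical limits: rebuild traffic gets a small, separate budget.
rebuild = TokenBucket(rate=100.0, burst=10.0)
admitted = sum(rebuild.allow(now=0.0) for _ in range(15))
print(admitted)  # 10
```

Requests the bucket rejects would be queued or retried later, so a rebuild burst slows down instead of crowding out application I/O.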

Control-Plane and Data-Plane Architecture Comparison

Before you choose an approach, compare how common designs behave when automation and performance collide. The goal is stable lifecycle work and stable I/O at the same time.

| Design approach | Control plane strength | Data plane strength | Typical failure mode | Best fit |
|---|---|---|---|---|
| Traditional SAN + scripts | Medium | Medium–High | Slow change workflows, hard audits | Static environments |
| CSI-first, generic backend | High | Varies | Good ops, uneven p99 latency | Broad workloads |
| Kernel-heavy SDS | Medium–High | Medium | CPU overhead under contention | Cost-focused clusters |
| User-space NVMe-first SDS | High | High | Needs clear QoS policy | Databases, high I/O |

How Simplyblock™ Keeps Both Planes Tight

Simplyblock™ focuses on Software-defined Block Storage that treats control-plane stability and data-plane speed as first-class goals. Its Kubernetes-native approach supports repeatable lifecycle actions, while the NVMe-first data path targets low jitter for stateful workloads.

The data plane benefits from SPDK-based, user-space I/O design, which helps reduce overhead and improve CPU efficiency. That headroom matters when clusters run busy and multi-tenant. With NVMe/TCP support, simplyblock can scale performance over standard Ethernet while keeping operations consistent across racks and nodes.

On the control-plane side, simplyblock aligns with Kubernetes workflows so teams can automate provisioning, expansion, and protection without turning every change into a ticket storm.

What Comes Next for Storage Plane Separation

Storage platforms will keep pushing more work into efficient data-plane paths while simplifying orchestration. DPUs and IPUs will take on more protocol and security tasks, which can reduce CPU jitter on application nodes. Policy engines will get stricter about QoS during rebuild and rebalance, because tail latency drives user pain.

Kubernetes will also demand tighter behavior from storage stacks. Platform teams want fast rollouts, safe upgrades, and clear audit trails. Systems that keep the control plane calm and the data plane fast will meet that standard.

Related Terms

Teams reference these related terms when separating lifecycle control from live I/O paths in storage.

CSI Performance Overhead

Storage Orchestration

SPDK vs Kernel Storage Stack

Block Storage CSI

Questions and Answers

In storage architectures, the control plane manages provisioning, replication, and policy enforcement, while the data plane handles actual read/write I/O. Modern systems based on a distributed block storage architecture separate these layers to prevent management operations from impacting performance.

The control plane does not process I/O directly, but inefficient orchestration can delay scaling, failover, or provisioning. Platforms built on scale-out storage architecture ensure control-plane tasks remain independent from performance-critical data paths.

The data plane determines latency, throughput, and IOPS. Technologies like NVMe over TCP optimize the data path by reducing protocol overhead and enabling parallel I/O queues over standard Ethernet.

In Kubernetes, the CSI driver operates in the control plane to provision volumes, while application I/O flows directly through the data plane. Simplyblock integrates with Kubernetes via its CSI-based storage architecture to maintain this separation efficiently.

Simplyblock uses a distributed control layer for orchestration while keeping the I/O path direct and optimized. Its software-defined storage platform ensures automation, replication, and encryption policies do not interfere with high-performance data-plane operations.