CSI Ephemeral Volumes

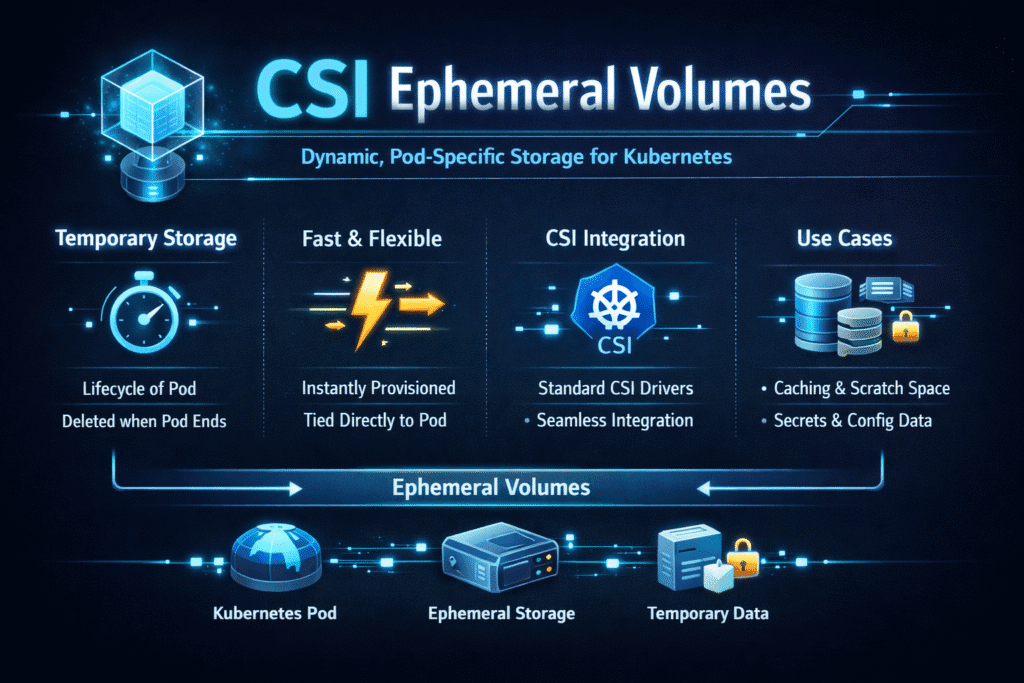

CSI Ephemeral Volumes give a pod a CSI-backed volume that lives and dies with that pod. Kubernetes creates the volume after scheduling, mounts it into the containers, and removes it when the pod ends. Teams use this model for scratch data, build caches, short-lived pipeline stages, and app-side buffering that must act like a real volume, but does not need long-term retention.

This feature sits between simple emptyDir scratch space and full persistent volumes. It keeps the developer flow fast, while still letting platform teams apply storage policy through Kubernetes Storage.

Making Pod-Scoped Storage Safer at Scale

Ephemeral data often looks harmless until a cluster gets busy. Cache churn can spike write load, and short-lived jobs can flood the control plane with create and delete calls. Those effects show up as slower pod start times, noisy-neighbor latency, and sudden drops in throughput for other tenants.

Strong platform design treats ephemeral and persistent I/O as one system. Teams should define where ephemeral volumes belong, how much bandwidth they can consume, and how they share resources with databases and queues. Software-defined Block Storage helps here because it centralizes tiering and QoS, instead of pushing ad hoc limits into each workload spec.

CSI Ephemeral Volumes in Kubernetes Storage

Kubernetes supports multiple ephemeral volume patterns, and CSI adds two important benefits: a real storage backend, plus consistent lifecycle handling through the CSI interface. With CSI Ephemeral Volumes, the pod spec can request storage inline, which keeps YAML simple for teams that do not want PVC objects for short tasks.
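As a rough illustration of an inline request, the sketch below builds a Pod manifest in Python and prints it as JSON. The driver name `csi.example.com`, the `size` attribute, and the helper `inline_csi_pod` are hypothetical placeholders; the accepted `volumeAttributes` keys depend entirely on the driver in use.

```python
import json


def inline_csi_pod(name: str, image: str, driver: str, attributes: dict) -> dict:
    """Build a Pod manifest whose scratch volume is declared inline via `csi`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "build",
                    "image": image,
                    "volumeMounts": [{"name": "scratch", "mountPath": "/scratch"}],
                }
            ],
            "volumes": [
                {
                    "name": "scratch",
                    # No PVC or PV object: the volume is described in the pod spec.
                    "csi": {
                        "driver": driver,  # hypothetical driver name
                        "volumeAttributes": attributes,  # driver-specific string values
                    },
                }
            ],
        },
    }


pod = inline_csi_pod(
    name="ci-build",
    image="busybox:1.36",
    driver="csi.example.com",    # assumption: replace with your CSI driver
    attributes={"size": "2Gi"},  # assumption: attribute keys vary by driver
)
print(json.dumps(pod, indent=2))
```

Because `kubectl` accepts JSON manifests, the printed output can be piped to `kubectl apply -f -`. The request only works if the driver's `CSIDriver` object lists `Ephemeral` in `volumeLifecycleModes`.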

Generic ephemeral volumes take a different route: Kubernetes creates a per-pod PVC from a template in the pod spec, so they can use a StorageClass. That gives platform owners more control over performance and placement, which matters in multi-tenant Kubernetes Storage because it lets you separate “cheap scratch” from “high-IO scratch” without letting teams invent one-off storage configs.
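A minimal sketch of a StorageClass-backed scratch volume follows, again as a Python dictionary that mirrors the pod spec fields. The class names `scratch-standard` and `scratch-fast` and the helper `generic_ephemeral_volume` are assumptions made up for illustration.

```python
import json

# Hypothetical StorageClass names for two scratch tiers.
SCRATCH_TIERS = {"cheap": "scratch-standard", "high-io": "scratch-fast"}


def generic_ephemeral_volume(name: str, storage_class: str, size: str) -> dict:
    """Build a pod `volumes` entry that uses a generic ephemeral volume."""
    return {
        "name": name,
        "ephemeral": {
            # Kubernetes creates a PVC from this template and deletes it with the pod.
            "volumeClaimTemplate": {
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                }
            }
        },
    }


volume = generic_ephemeral_volume("scratch", SCRATCH_TIERS["high-io"], "10Gi")
print(json.dumps(volume, indent=2))
```

Keeping the tier names in one shared mapping is one way to stop teams from hard-coding class names per workload.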

CSI Ephemeral Volumes on NVMe/TCP Networks

NVMe/TCP changes what “ephemeral” feels like. A scratch volume can behave like a fast local disk when the network stays clean and the storage path stays efficient. It can also turn into a bottleneck if jobs land on nodes with a congested east-west path or a mismatched MTU.

When you back ephemeral volumes with NVMe/TCP, align three things: node placement, network policy, and storage QoS. Keep the fast path predictable so short jobs finish on time, and keep tenant bursts from crushing the rest of the cluster. Software-defined Block Storage makes that easier because it can enforce queue limits per tenant and per volume class.

Measuring CSI Ephemeral Volumes Performance

Measure outcomes that match the pod lifecycle. Start with pod startup time, because inline volumes add create and mount steps that can delay readiness. Track p95 and p99 latency during bursty job waves, because tail latency reveals contention faster than average IOPS.
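To see why tail percentiles expose contention that an average hides, here is a small sketch that uses the nearest-rank percentile method. The latency samples are invented for illustration and do not come from any real cluster.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical write latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [1.0] * 90 + [4.0] * 8 + [25.0, 40.0]

mean = sum(latencies_ms) / len(latencies_ms)
p95 = percentile(latencies_ms, 95)
p99 = percentile(latencies_ms, 99)
print(f"mean={mean:.2f} ms  p95={p95} ms  p99={p99} ms")
```

In this made-up sample the mean stays under 2 ms while p99 reaches 25 ms, which is the kind of gap a bursty job wave tends to produce.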

Use two test phases for a clear signal. First, run steady writes that mirror your real workload mix. Next, fire short-lived pods that create and delete volumes at a fixed rate. This method shows whether the platform handles churn or falls into backlogs.
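The churn phase can be reasoned about with a toy model before running it on a cluster. The sketch below assumes a fixed create rate and a constant per-second provisioning capacity, both of which are simplifications; real control planes vary from second to second.

```python
def backlog_over_time(create_rate: int, capacity: int, seconds: int) -> list[int]:
    """Return the number of pending volume operations at the end of each second.

    create_rate: volume create requests arriving per second (fixed).
    capacity: requests the provisioning path completes per second (assumed constant).
    """
    pending = 0
    history = []
    for _ in range(seconds):
        pending += create_rate             # new requests arrive
        pending -= min(pending, capacity)  # the platform drains what it can
        history.append(pending)
    return history


def falls_behind(history: list[int]) -> bool:
    """A backlog that ends higher than it started means the churn is not absorbed."""
    return len(history) >= 2 and history[-1] > history[0]


healthy = backlog_over_time(create_rate=20, capacity=25, seconds=5)
overloaded = backlog_over_time(create_rate=30, capacity=25, seconds=5)
print(healthy, falls_behind(healthy))
print(overloaded, falls_behind(overloaded))
```

When the create rate exceeds capacity, the pending count grows by the difference every second, which matches the backlog pattern the test is meant to reveal.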

Tactics to Keep Ephemeral I/O Predictable

Most wins come from simple guardrails that scale across teams. Use one policy set, then apply it by default.

- Separate “scratch tiers” by StorageClass or backend policy, and keep the names stable across clusters.

- Cap bandwidth and IOPS for bursty namespaces, so build jobs do not starve OLTP traffic.

- Prefer node pools with known-good NIC settings for high-IO ephemeral use.

- Watch create, mount, and delete rates in the control plane, and alert on queueing.

- Keep a tight cleanup policy for failed pods, so orphaned volumes do not pile up.

- Load test restores and retries, because failure paths often cost more than happy paths.
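The idea behind an IOPS cap can be illustrated with a token bucket, a common rate-limiting technique. This is a conceptual sketch only: it is not how simplyblock or any specific storage backend implements QoS, and the `TokenBucket` class and its numbers are assumptions chosen for the example.

```python
class TokenBucket:
    """Allow up to `burst` operations at once, refilled at `rate` operations per second."""

    def __init__(self, rate: float, burst: float):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so an initial burst is allowed
        self.last = 0.0      # timestamp of the previous call, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if an operation arriving at time `now` fits within the cap."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical cap for a build namespace: 5 IOPS sustained, bursts of 10.
bucket = TokenBucket(rate=5.0, burst=10.0)
first_wave = [bucket.allow(now=0.0) for _ in range(15)]
second_wave = [bucket.allow(now=1.0) for _ in range(6)]
print(first_wave.count(True), second_wave.count(True))
```

Timestamps are passed in explicitly so the behavior is deterministic; a real limiter would read a monotonic clock instead.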

Choosing an Ephemeral Volume Model

The table below compares common ephemeral choices and how they behave under load.

| Model | What it’s best at | Main trade-off | Typical use |
|---|---|---|---|
| emptyDir on node disk | Simple, fast setup | Node-local limits and noisy neighbors | Scratch files, temp extracts |
| emptyDir in memory | Low latency | Memory pressure and eviction risk | Small caches, hot buffers |
| CSI Ephemeral Volumes | Pod-scoped volumes with CSI behavior | Extra create/mount work per pod | Build caches, short pipelines |
| Generic ephemeral volumes (StorageClass-backed) | Strong policy control | Needs class standards | Multi-tenant scratch tiers |

Policy-Driven Ephemeral Storage with Simplyblock™

Simplyblock™ helps teams run fast pod-lifetime storage without turning the cluster into a tuning project. With NVMe/TCP support, Kubernetes Storage integration, and Software-defined Block Storage controls, simplyblock can keep short jobs quick while protecting latency-sensitive services.

Platform teams can use storage QoS to bound cache churn, enforce tenant limits, and keep tail latency steady during burst windows. The SPDK-based, user-space data path also reduces CPU overhead in the I/O stack, which matters when high pod density meets high IOPS.

What Changes Next for Pod-Lifetime Storage

Teams want more control without more YAML. Expect tighter links between the scheduler, capacity signals, and ephemeral provisioning decisions. Expect better policy defaults for burst control, too, because build systems and AI pipelines create spiky storage demand.

Acceleration will matter more as clusters scale. DPUs and user-space I/O stacks can free CPU cycles, reduce jitter, and keep NVMe/TCP performance consistent during short-lived bursts.

Questions and Answers

What are CSI Ephemeral Volumes?

CSI Ephemeral Volumes allow volumes to be specified inline within a pod spec, making them suitable for short-lived workloads without persistent storage needs. They are a Kubernetes feature that a CSI driver must explicitly support, and they do not require PersistentVolume or PVC definitions.

When should you use CSI Ephemeral Volumes instead of persistent volumes?

Use CSI Ephemeral Volumes for temporary workloads like init containers, test jobs, or scratch space. Databases and other stateful Kubernetes workloads should use PVCs to ensure volume durability and data persistence.

Do CSI Ephemeral Volumes support snapshots, cloning, or resizing?

No. Since the volume lifecycle is tightly coupled to the pod, features like snapshots, cloning, and resizing are not supported. If you need those capabilities, use PVC-backed persistent volumes, such as the block storage simplyblock provides with full PVC integration.

How can you secure CSI Ephemeral Volumes?

While the volumes are ephemeral by design, you can still enforce security measures such as mounting them read-only. For sensitive workloads, persistent volumes with encryption at rest provide stronger security guarantees over the full lifecycle.

Does simplyblock support CSI Ephemeral Volumes?

Simplyblock’s CSI driver focuses on high-performance, persistent volumes rather than ephemeral storage. For applications that require durability and high IOPS, such as PostgreSQL and other persistent workloads, simplyblock is a good fit.