CSI External Snapshotter


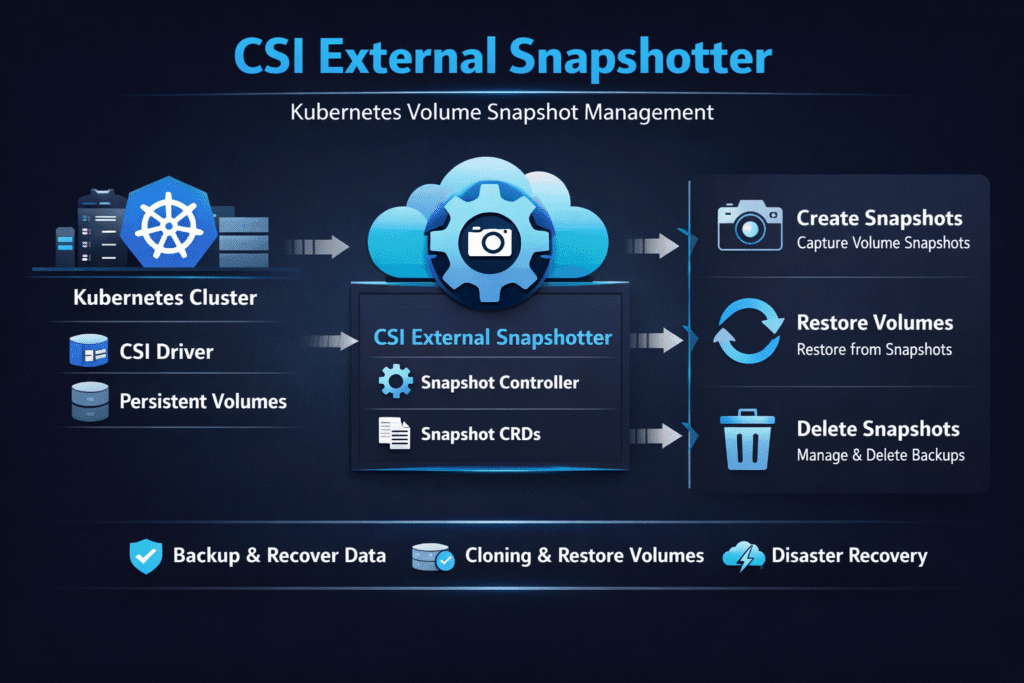

CSI External Snapshotter is a Kubernetes CSI sidecar that connects the Kubernetes snapshot API objects to your storage backend’s snapshot calls. It watches snapshot resources, calls the CSI driver’s snapshot RPCs, and updates status so automation tools can tell when a snapshot is ready, failed, or deleted. Teams rely on it for fast rollback, safe cloning, and repeatable backup flows in Kubernetes Storage, especially when they run stateful services at scale.

Executives tend to care about two outcomes: recovery speed and risk control. The external snapshot path affects both. If snapshots lag, restores lag. If snapshots behave inconsistently, teams lose trust in recovery plans.

Running Snapshot Workflows Reliably at Cluster Scale

A stable snapshot workflow needs clear ownership across the control plane and the data plane. The control plane includes the snapshot CRDs, the snapshot controller, and the snapshot sidecar. The data plane includes the storage system’s copy-on-write logic, metadata handling, and write path.

Treat snapshot automation as a platform feature, not a per-team add-on. Standardize the versions of snapshot components, define a small set of snapshot classes, and enforce guardrails around retention and access. Those steps reduce surprise costs from snapshot sprawl and reduce incident rates tied to “it worked in one cluster” drift.


CSI External Snapshotter in Kubernetes Storage

In Kubernetes Storage, snapshots work like a contract between the API and the backend. Users create a VolumeSnapshot request for a PVC, the snapshot controller binds it to a VolumeSnapshotContent object, and the sidecar triggers create or delete calls on the CSI endpoint. If any layer drifts, teams see stuck snapshot states, failed restores, or long control-plane delays during busy periods.
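As a minimal sketch of the request side of this contract, the snippet below builds a VolumeSnapshot manifest for a PVC and classifies a snapshot object by its status fields. It assumes the `snapshot.storage.k8s.io/v1` API. The helper names and the example names (`pg-data`, `fast-tier`, `databases`) are hypothetical and only serve as illustration.

```python
import json


def volume_snapshot(name, namespace, pvc_name, snapshot_class):
    """Build a VolumeSnapshot manifest that requests a snapshot of one PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def snapshot_state(obj):
    """Classify a VolumeSnapshot object as 'ready', 'failed', or 'pending'.

    'failed' only means that status.error is currently set; the control
    plane may still retry, so treat it as a signal rather than a final state.
    """
    status = obj.get("status") or {}
    if status.get("readyToUse"):
        return "ready"
    if status.get("error"):
        return "failed"
    return "pending"


if __name__ == "__main__":
    # Hypothetical names; kubectl accepts JSON manifests as well as YAML.
    snap = volume_snapshot("pg-data-snap-1", "databases", "pg-data", "fast-tier")
    print(json.dumps(snap, indent=2))
    print(snapshot_state(snap))  # no status yet, so this reports "pending"
```

A snapshot that stays in the pending state well beyond the usual completion time is the "stuck" case described above, and it is worth alerting on.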

Two design choices shape results. First, snapshot classes must map to real tiers and clear retention rules, so teams do not treat every volume the same. Second, the CSI driver must implement snapshot RPCs correctly, because the sidecar cannot “fix” a backend that cannot deliver consistent snapshot semantics.
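One way to keep the class list short is to generate classes from a small tier table. The sketch below is an assumption-laden illustration: the tier names, the `csi.example.com` driver name, and the helper function are all hypothetical. Note that `deletionPolicy` only decides whether the backend snapshot is kept or removed when the Kubernetes object is deleted. Time-based retention still needs separate tooling.

```python
import json

# Hypothetical tier table: tier name -> deletionPolicy for backend snapshots.
# "Retain" keeps the backend snapshot when the Kubernetes object is deleted,
# "Delete" removes it together with the object.
TIERS = {
    "gold": "Retain",
    "bronze": "Delete",
}


def snapshot_class(tier, driver):
    """Build a VolumeSnapshotClass manifest for one of the known tiers."""
    if tier not in TIERS:
        raise ValueError(f"unknown tier: {tier}")
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshotClass",
        "metadata": {"name": f"{tier}-snapshots"},
        "driver": driver,
        "deletionPolicy": TIERS[tier],
    }


if __name__ == "__main__":
    # "csi.example.com" is a placeholder; use the name your CSI driver registers.
    for tier in TIERS:
        print(json.dumps(snapshot_class(tier, "csi.example.com"), indent=2))
```

Rejecting unknown tiers at generation time keeps ad hoc classes from slipping into the cluster.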

When you run multi-tenant Kubernetes Storage, combine snapshot policy with storage QoS rules. That pairing limits blast radius when one tenant triggers a burst of snapshots while another tenant pushes peak writes.

Snapshot Control Planes over NVMe/TCP Fabrics

NVMe/TCP changes the performance envelope for snapshot-heavy workloads. Snapshots often stress metadata, but restores and clones can stress bandwidth and latency. If your storage runs over NVMe/TCP, the platform must keep the network path stable, avoid congestion, and preserve predictable tail latency during snapshot windows.

Software-defined Block Storage platforms can help here because they centralize policy and keep the I/O path consistent across nodes. When the snapshot workflow and the NVMe/TCP data path share the same guardrails, platforms can run backup jobs without derailing production latency.

Measuring and Benchmarking CSI External Snapshotter Performance

Measure what users feel, not only what the storage backend advertises. Snapshot creation time matters, but restore time matters more because it defines recovery speed. Track snapshot queue depth, API event lag, and controller retries to see when the control plane becomes the bottleneck.
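To measure restore time, a test harness needs a PVC that restores from a snapshot and a way to compute elapsed time between two observed events. The following is a sketch under stated assumptions: the helper names, the `ReadWriteOnce` access mode, and the example names are hypothetical, and the timestamps are assumed to be RFC 3339 UTC strings recorded by your own harness (for example, PVC creation and the moment the workload reports ready).

```python
from datetime import datetime


def restore_pvc(name, namespace, snapshot_name, storage_class, size):
    """Build a PVC manifest that restores its contents from a VolumeSnapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            # dataSource tells the provisioner to populate the volume
            # from the named snapshot in the same namespace.
            "dataSource": {
                "apiGroup": "snapshot.storage.k8s.io",
                "kind": "VolumeSnapshot",
                "name": snapshot_name,
            },
        },
    }


def seconds_between(start, end):
    """Elapsed seconds between two RFC 3339 UTC timestamps ending in 'Z'."""
    def parse(ts):
        return datetime.fromisoformat(ts.replace("Z", "+00:00"))
    return (parse(end) - parse(start)).total_seconds()


if __name__ == "__main__":
    pvc = restore_pvc("pg-restore", "databases", "pg-data-snap-1", "fast", "100Gi")
    print(pvc["spec"]["dataSource"])
    print(seconds_between("2024-01-01T00:00:00Z", "2024-01-01T00:01:30Z"))
```

Recording the same pair of timestamps for every restore run makes results comparable across clusters and releases.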

A practical benchmark uses two passes. Start with a steady-state write load that matches your real workload. Then run snapshots at a fixed cadence while you track p95 and p99 latency, snapshot completion time, and restore time into a new PVC. This test shows whether the platform absorbs snapshot churn or amplifies it.
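The comparison between the two passes can be sketched as follows. This example uses the nearest-rank percentile definition, which is one of several common choices, and assumes you have already collected latency samples for each pass. The function names are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def tail_inflation(baseline, during_snapshots, pct=99):
    """Ratio of tail latency during the snapshot pass to the baseline pass.

    A value near 1.0 suggests the platform absorbs snapshot churn;
    larger values suggest it amplifies it.
    """
    return percentile(during_snapshots, pct) / percentile(baseline, pct)


if __name__ == "__main__":
    # Synthetic latencies in milliseconds, for illustration only.
    baseline = list(range(1, 101))
    during = [2 * v for v in baseline]
    print(percentile(baseline, 95), percentile(baseline, 99))
    print(tail_inflation(baseline, during))
```

Reporting the ratio alongside the raw p95 and p99 values makes regressions easier to spot between runs.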

Practical Tuning to Reduce Snapshot Latency and Drift

Most snapshot issues come from version drift, weak policy, or overloaded controllers. Fix those first, then tune the data path.

- Pin snapshot component versions per cluster release train, and upgrade them as a unit.

- Keep a short list of snapshot classes that map to real tiers and retention rules.

- Limit snapshot rights with RBAC so automation cannot snapshot sensitive PVCs by mistake.

- Cap snapshot bursts per namespace to protect latency for other tenants.

- Validate restore speed under load, because restore exposes hidden bottlenecks.

- Align storage QoS with snapshot workflows, so backup jobs do not starve foreground I/O.
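The burst cap from the list above can be sketched as a sliding-window limiter that a backup tool or admission hook consults before it creates a snapshot. This is an illustrative assumption, not a built-in Kubernetes or simplyblock feature; the class name and parameters are hypothetical.

```python
from collections import defaultdict, deque


class SnapshotBurstLimiter:
    """Allow at most max_per_window snapshot requests per namespace
    within any window of window_seconds."""

    def __init__(self, max_per_window, window_seconds):
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # namespace -> request timestamps

    def allow(self, namespace, now):
        """Return True and record the request if the namespace is under its cap."""
        recent = self._recent[namespace]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_per_window:
            return False
        recent.append(now)
        return True


if __name__ == "__main__":
    limiter = SnapshotBurstLimiter(max_per_window=2, window_seconds=60)
    for t in (0, 1, 2, 60):
        print(t, limiter.allow("tenant-a", now=t))
```

Keeping the counters per namespace means one tenant's burst does not consume another tenant's allowance.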

Snapshot Architecture Options Compared

The table below compares common snapshot setups and where teams usually hit scaling limits.

| Option | What it optimizes | Typical pain point | Best fit |
|---|---|---|---|
| Basic snapshots with minimal policy | Fast adoption | Snapshot sprawl and uneven restore behavior | Small clusters, light compliance needs |
| Central snapshot policy with quotas and RBAC | Safety and cost control | More upfront platform work | Shared clusters and regulated workloads |
| Integrated snapshots plus QoS in Software-defined Block Storage | Predictable latency and repeatable restores | Requires clear tier design | Multi-tenant Kubernetes Storage on NVMe/TCP |

Hardening CSI External Snapshotter Operations with Simplyblock™

Simplyblock™ supports Kubernetes Storage snapshots as part of a broader Software-defined Block Storage stack that targets predictable performance. Simplyblock’s NVMe/TCP data path and policy controls help keep snapshot workflows from turning into noisy-neighbor events. Teams can run snapshot automation, enforce QoS, and still keep latency stable for the workloads that pay the bills.

Platform teams also benefit from operational clarity. When snapshot controls, storage tiers, and performance limits live in one system, teams spend less time tracing cross-component blame and more time shipping reliable recovery plans.

Future Directions in Snapshot Automation for Kubernetes

Snapshot workflows keep moving toward tighter control-plane signals and more policy-driven safety. Expect stronger defaults around snapshot class selection, better visibility into snapshot queues, and more consistent restore behavior across storage vendors.

Acceleration will matter, too. As clusters push higher I/O rates, platforms will offload more data-path work to user-space stacks and, in some environments, to DPUs. That shift should reduce CPU contention and help NVMe/TCP stay predictable during snapshot bursts.

Related Terms

Teams often review these glossary pages alongside the CSI External Snapshotter when they standardize Kubernetes Storage and Software-defined Block Storage recovery workflows.

- CSI Snapshot Controller

- Volume Snapshotting

- CSI Driver vs Sidecar

- Dynamic Provisioning in Kubernetes

- OpenShift Volume Snapshots

Questions and Answers

The CSI External Snapshotter is a sidecar component that enables Kubernetes to manage volume snapshots using the CSI spec. It watches VolumeSnapshotContent objects, which the snapshot controller creates from VolumeSnapshot requests, and calls the CSI driver to create or delete snapshots. It plays a key role in Kubernetes CSI architecture.

The snapshot controller and the sidecar use the VolumeSnapshotClass referenced by each VolumeSnapshot to determine which CSI driver and parameters to use. For consistent backups, make sure the driver supports CSI snapshots, as simplyblock’s CSI driver does.

The CSI External Snapshotter does not schedule snapshots; it only manages snapshot creation and deletion. You can combine it with third-party tools for automated backups, especially when protecting stateful Kubernetes workloads.

Simplyblock’s CSI driver supports snapshot functionality and works with the CSI External Snapshotter, enabling snapshot creation, retention, and clone operations on its block storage volumes.

A working setup needs the CSI External Snapshotter, the snapshot controller, a CSI driver with snapshot support, and the VolumeSnapshot, VolumeSnapshotClass, and VolumeSnapshotContent CRDs. Simplyblock’s CSI implementation supports these features alongside encryption at rest and snapshot consistency.