CSI Resize Controller


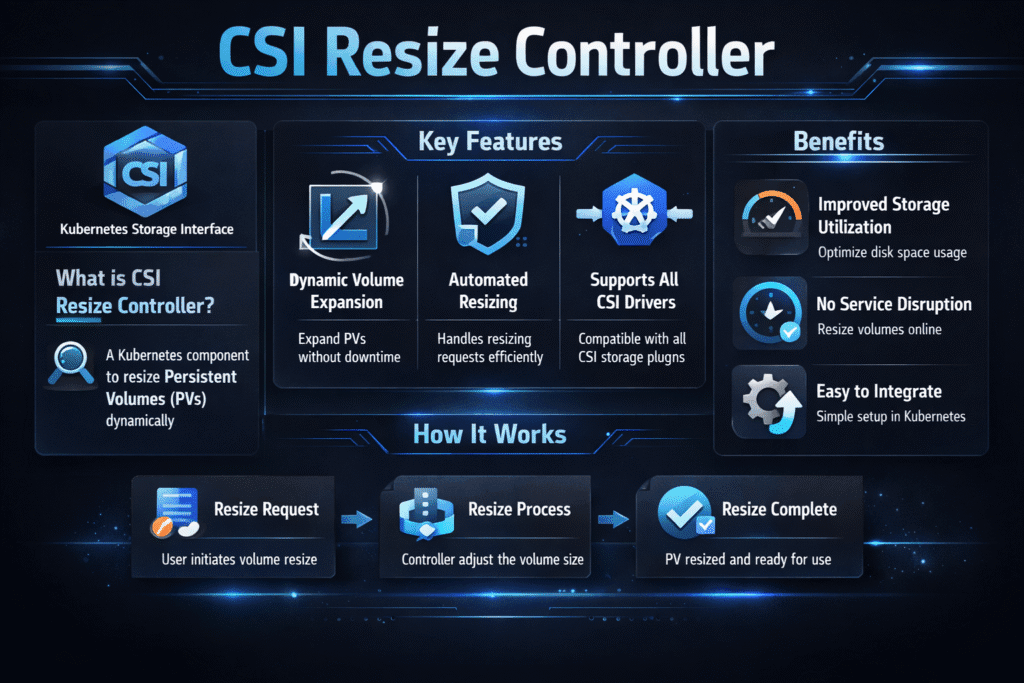

CSI Resize Controller is the Kubernetes control-plane component (deployed as the external-resizer sidecar) that turns a larger PVC request into a real backend volume expansion. It watches for a PVC size increase, calls the CSI driver’s controller-side expand operation, and updates Kubernetes objects so the node can finish the job. When the workflow runs cleanly, teams grow capacity without rebuilding apps or running copy jobs.

Leaders care because resizing lowers risk and cost. Instead of overbuying from day one, teams start with the right size and expand as data grows. Ops teams care because the need to resize often shows up during peak traffic, not during a planned window.

Control-Plane Mechanics Behind the CSI Resize Controller

The resize workflow starts when someone edits a PVC and requests more storage. Kubernetes records the new size, then the resizer sidecar calls ControllerExpandVolume on the CSI driver’s controller endpoint. After the controller-side expansion finishes, the kubelet typically calls NodeExpandVolume so the node plugin grows the filesystem and the extra space becomes usable inside the pod.
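The first step of that workflow, the PVC edit, can be sketched as a small helper that builds the patch body. This is a minimal sketch, not part of any Kubernetes client: `parse_quantity` and `build_resize_patch` are hypothetical names, and the parser assumes whole-number quantities with the common suffixes only.

```python
import json
import re

# Binary and decimal suffixes used by Kubernetes resource quantities.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
    "": 1,
}


def parse_quantity(quantity: str) -> int:
    """Convert a simple quantity such as '20Gi' to bytes (integers only)."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity.strip())
    if not match or match.group(2) not in _SUFFIXES:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(match.group(1)) * _SUFFIXES[match.group(2)]


def build_resize_patch(current: str, requested: str) -> dict:
    """Return a merge patch that raises the PVC storage request."""
    # An expansion request only makes sense when the new size is larger.
    if parse_quantity(requested) <= parse_quantity(current):
        raise ValueError("PVC expansion must request a larger size")
    return {"spec": {"resources": {"requests": {"storage": requested}}}}


if __name__ == "__main__":
    print(json.dumps(build_resize_patch("10Gi", "20Gi")))
```

The printed JSON is the kind of body that could be passed to `kubectl patch pvc <name> --type merge -p '<json>'`. Checking the size client-side simply fails fast before the request reaches the API server.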

Most problems come from mismatched expectations. Some backends resize fast but take longer to rebalance data. Some filesystems grow online, while others need a remount or a restart. Clear StorageClass rules reduce surprises, especially in shared Kubernetes Storage clusters.


CSI Resize Controller in Kubernetes Storage Operations

In Kubernetes Storage, resize becomes a day-two operation that must behave like a routine change. The workflow touches the API server, controller components, the CSI driver, the backend, and the kubelet path. One weak link can turn a simple PVC edit into long “FileSystemResizePending” waits or repeated retries.

Platform teams get better outcomes when they standardize which StorageClasses allow expansion and how they verify completion. They also need clear signals for “done,” such as PVC conditions, controller events, and node-side resize status.
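One way to turn those signals into a single answer is to classify the PVC object itself. The sketch below assumes the PVC is available as a dictionary in the shape the API returns as JSON. `resize_state` and its return labels are hypothetical, and the final check compares quantity strings literally, which assumes the capacity and the request use the same unit formatting.

```python
def resize_state(pvc: dict) -> str:
    """Classify a PVC by how far its resize has progressed."""
    requested = pvc["spec"]["resources"]["requests"]["storage"]
    status = pvc.get("status", {})
    capacity = status.get("capacity", {}).get("storage")
    # Only conditions whose status is "True" are currently active.
    active = {
        cond["type"]
        for cond in status.get("conditions", [])
        if cond.get("status") == "True"
    }

    if "FileSystemResizePending" in active:
        return "waiting-for-node"      # backend grew; filesystem still needs expansion
    if "Resizing" in active:
        return "controller-expanding"  # controller-side expansion in progress
    if capacity == requested:
        return "done"                  # reported capacity matches the request
    return "requested"                 # size was raised, no progress reported yet


if __name__ == "__main__":
    pvc = {
        "spec": {"resources": {"requests": {"storage": "20Gi"}}},
        "status": {
            "capacity": {"storage": "10Gi"},
            "conditions": [{"type": "FileSystemResizePending", "status": "True"}],
        },
    }
    print(resize_state(pvc))
```

A check like this fits a runbook step or a dashboard probe, where the same definition of “done” should apply to every StorageClass.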

CSI Resize Controller Behavior on NVMe/TCP

NVMe/TCP raises the bar for consistency. A fast transport helps keep I/O steady during a resize window, but it also makes control-plane delays stand out. If the controller or backend stalls, the app still waits for its extra capacity, even when the data path itself is fast.

Software-defined Block Storage designs help because they keep policy and performance in one place. When the platform applies QoS limits, a large resize in one namespace won’t steal IOPS from other tenants. That matters most for busy Kubernetes Storage fleets that run databases, queues, and analytics on the same cluster.

Measuring and Benchmarking Resize Outcomes

Measure resize as an operational event, not as a benchmark score. Track time from the PVC edit to a usable filesystem. Watch pod latency during the resize window, because tail latency exposes contention. Pair those signals with controller retry rates and queue depth.

Use a simple test pattern: drive steady writes that match your real workload, trigger resizes at set intervals, then verify filesystem growth and app latency. This approach shows whether the platform absorbs resize work or turns it into jitter.
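The measurements from such a test run can be reduced to a few numbers. The following is a rough sketch under stated assumptions: timestamps are in seconds, latency samples are in milliseconds, and `percentile` and `summarize_resize` are hypothetical helper names using the nearest-rank method.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile; pct is in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize_resize(edit_ts: float, usable_ts: float,
                     baseline_ms: list[float], resize_ms: list[float]) -> dict:
    """Summarize one resize event: duration plus tail latency before and during."""
    return {
        "time_to_usable_s": usable_ts - edit_ts,           # PVC edit to usable filesystem
        "p99_baseline_ms": percentile(baseline_ms, 99),    # steady-state tail latency
        "p99_resize_ms": percentile(resize_ms, 99),        # tail latency in the resize window
    }


if __name__ == "__main__":
    print(summarize_resize(100.0, 142.5, [1.0, 2.0, 3.0], [2.0, 4.0, 9.0]))
```

Comparing the two p99 values per run shows whether the resize window adds jitter, which is the question the test pattern is meant to answer.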

Practical Ways to Reduce Resize Risk and Delays

Most wins come from policy and guardrails, not from deep tuning.

- Standardize on expansion-capable StorageClasses for tier-1 workloads, and document what “online” means per class.

- Apply tenant QoS so resize work in one namespace cannot crowd out foreground I/O.

- Keep CSI sidecars and controller components on a single upgrade track to avoid version drift.

- Validate resize under load, plus a node-drain test, to confirm the full lifecycle stays stable.

- Set approval thresholds for large jumps, so one edit does not trigger hours of backend work.
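The approval-threshold guardrail from the list above could be expressed as a simple policy check. This is an illustrative sketch only: `needs_approval` is a hypothetical function, and the default limits (2x growth or 1 TiB added) are placeholder values, not recommendations from any tool.

```python
def needs_approval(current_bytes: int, requested_bytes: int,
                   max_growth_ratio: float = 2.0,
                   max_growth_bytes: int = 2**40) -> bool:
    """Flag expansions that exceed a relative or an absolute growth limit."""
    if current_bytes <= 0:
        raise ValueError("current size must be positive")
    if requested_bytes <= current_bytes:
        raise ValueError("expansion must increase the size")
    growth = requested_bytes - current_bytes
    # Either limit alone is enough to require a human sign-off.
    return (requested_bytes / current_bytes > max_growth_ratio
            or growth > max_growth_bytes)


if __name__ == "__main__":
    gib = 2**30
    print(needs_approval(100 * gib, 150 * gib))  # modest growth
    print(needs_approval(100 * gib, 300 * gib))  # triples the volume
```

Using both a ratio and an absolute cap covers small volumes that jump several times over as well as large volumes where even a modest percentage means a lot of backend work.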

Resize Controller Approaches Compared

The table below compares common resize setups and what teams usually trade off.

| Approach | What it does well | Typical downside | Best fit |
|---|---|---|---|
| Basic resize with minimal policy | Quick enablement | Uneven outcomes across StorageClasses | Small clusters |
| Resize with strict StorageClass standards | Predictable ops | More platform ownership | Production Kubernetes Storage |
| Resize plus QoS in Software-defined Block Storage | Stable latency under change | Needs tier design and QoS plan | Multi-tenant fleets on NVMe/TCP |

Keeping Resizes Predictable with Simplyblock™

Simplyblock™ supports Kubernetes Storage with NVMe/TCP and Software-defined Block Storage controls that help keep resizing boring. Simplyblock pairs a high-performance data path with policy tools such as multi-tenant QoS, so capacity growth does not turn into a latency event.

Ops teams also benefit from cleaner standards. When the storage platform treats resize as a first-class workflow, teams spend less time chasing edge cases across sidecars, nodes, and backends. They can focus on clear SLO signals: resize time, p99 latency, and error rate.

What’s Next for Online Expansion

The Kubernetes ecosystem continues to harden resize workflows with clearer signaling and better support for secure resize operations. Expect more focus on auth, stronger status reporting, and fewer hidden node-side surprises.

As NVMe/TCP adoption grows, platforms will also push for lower CPU overhead and tighter jitter control. Those gains will matter most during change windows, when clusters run real traffic and still need to expand storage safely.

Questions and Answers

The CSI Resize Controller is a Kubernetes component that handles volume expansion requests for CSI-backed PersistentVolumeClaims. It coordinates the controller-side expansion in the CSI driver with the filesystem resize on the node. This is critical for scaling stateful Kubernetes workloads without downtime.

When a PVC’s size is increased, the controller invokes the ControllerExpandVolume call on the CSI driver. The driver handles backend resizing, followed by a filesystem resize on the node.

Volumes can be expanded while in use if both the CSI driver and the filesystem (e.g., ext4 or xfs) support online expansion. Simplyblock enables seamless scaling of live volumes through its block storage replacement platform, reducing the need for pod restarts or re-attachments.

You must set allowVolumeExpansion: true in the StorageClass and use a CSI driver that supports resizing. For workloads like PostgreSQL on Simplyblock, this enables rapid capacity changes without impacting availability.
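As a small illustration of that setting, the sketch below builds a StorageClass manifest as a dictionary and checks the flag. The class name `fast-expandable` and the provisioner `csi.example.com` are placeholders, not real driver names, and both helper functions are hypothetical.

```python
import json


def expandable_storage_class(name: str, provisioner: str) -> dict:
    """Build a StorageClass manifest that permits PVC expansion."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,      # placeholder; use your CSI driver's name
        "allowVolumeExpansion": True,    # required before PVCs of this class can grow
    }


def allows_expansion(storage_class: dict) -> bool:
    """Return True only when the class explicitly enables expansion."""
    return storage_class.get("allowVolumeExpansion") is True


if __name__ == "__main__":
    manifest = expandable_storage_class("fast-expandable", "csi.example.com")
    print(json.dumps(manifest, indent=2))
    print(allows_expansion(manifest))
```

Because the field is absent by default, a check like `allows_expansion` treats a missing flag as “not expandable”, which matches how an audit of existing StorageClasses would want to read it.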

The same workflow applies on OpenShift, which uses the same Kubernetes CSI spec and supports dynamic volume expansion via the CSI Resize Controller. It works seamlessly with Simplyblock’s supported technologies to provide elastic storage provisioning across clusters.