IO Contention

Terms related to simplyblock

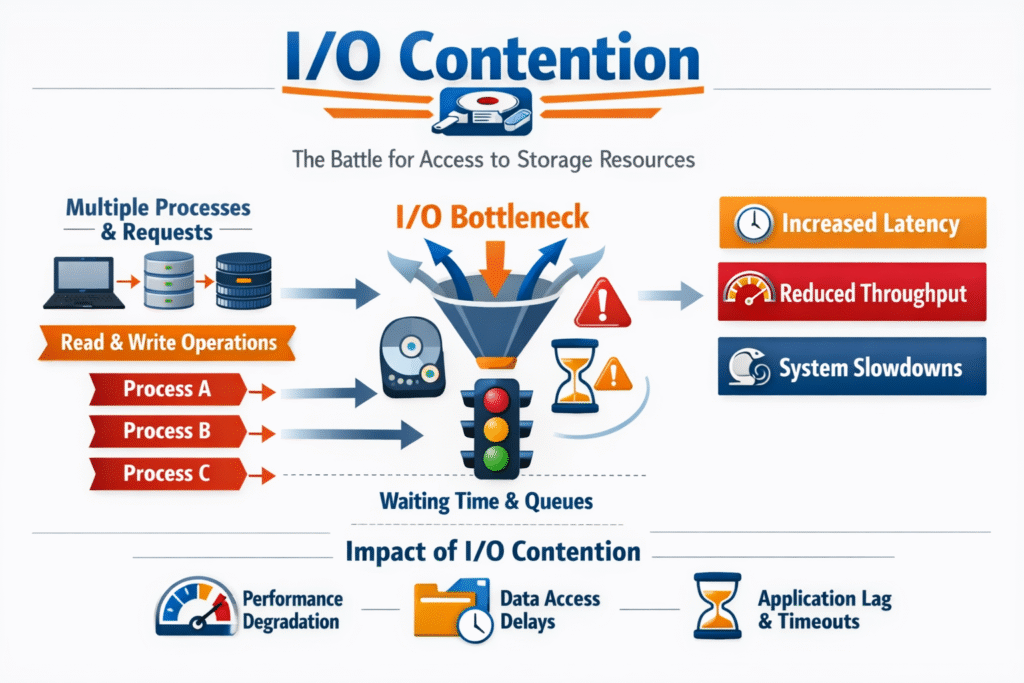

IO Contention happens when multiple applications, VMs, or pods hit the same storage resources at the same time, and the platform cannot complete all requests fast enough. Queues grow, latency rises, and throughput becomes uneven. This shows up first in tail latency, where p95 and p99 numbers spike even if average latency still looks “fine.”

Several bottlenecks can trigger this behavior. Shared controller CPU, saturated network links, oversubscribed NVMe devices, and a noisy neighbor that floods the queue can all push the system past its stable point. Once that point is crossed, adding more concurrency often reduces useful work because requests spend more time waiting than completing.

Executives care because contention breaks predictability. The business then pays twice, once in missed SLOs and again in higher spend from over-provisioning to “make the peaks go away.” DevOps and IT Operations teams feel it as paging noise, performance tickets, and constant tuning.

Optimizing Storage Resource Sharing with Modern Solutions

Modern storage designs reduce contention by shrinking overhead and increasing parallelism end-to-end. NVMe improves command efficiency and supports many queues, which helps when workloads run in parallel. NVMe-oF extends that model across the network, so compute and storage can scale independently.

Software choices matter as much as hardware. Kernel-based stacks add context switches and copies under load, which increases CPU burn when queues grow. User-space, zero-copy designs can keep latency tighter during bursts because they avoid work that does not move data forward.

This is where Software-defined Block Storage delivers value. It gives platform teams policy control over performance, placement, and isolation, rather than hoping shared hardware behaves fairly under pressure.

🚀 Eliminate IO Contention with QoS on NVMe/TCP Storage, Natively in Kubernetes

Use Simplyblock to enforce IOPS and bandwidth limits, prevent noisy neighbors, and stabilize tail latency at scale.

👉 Use Simplyblock Multi-Tenancy and QoS →

How IO Contention Shows Up in Kubernetes Storage

Kubernetes Storage increases the odds of contention because it mixes diverse workloads on shared nodes and shared backends. A deployment can schedule dozens of pods that all land on the same storage path. Autoscaling can also create sudden bursts that look like a denial-of-service event to the storage layer.

Noisy-neighbor effects get worse when multiple tenants share the same pool and the platform lacks strict limits. A batch analytics job can consume the queue and raise latency for an OLTP database. The database then slows down, retries pile up, and the cluster can spiral into a wider incident.

Strong Kubernetes Storage design focuses on isolation and predictable scheduling of I/O. Teams usually get better results when they pair storage classes with clear performance intent, enforce per-tenant limits, and separate hot volumes from general-purpose pools.

Why NVMe/TCP Helps Reduce IO Contention

NVMe/TCP helps in two practical ways. It supports scalable queueing and parallelism over standard Ethernet, and it avoids many legacy protocol costs that add delay under load. Because the transport runs on common network gear, teams can deploy it broadly without specialized fabrics, which reduces “one-off” islands that become new hotspots.

Contention often amplifies CPU overhead. A stack that wastes cycles on copies, interrupts, and kernel transitions leaves fewer cycles for actual application work. SPDK-style user-space processing reduces overhead and can keep CPU efficiency higher when concurrency rises. That typically improves consistency, not just peak numbers.

NVMe/TCP also fits hybrid designs. You can run hyper-converged for latency-sensitive edge clusters, disaggregate for scale, or mix both models when different apps have different bottlenecks.

Measuring and Benchmarking Contention Hot Spots

Measurement should start with latency percentiles, not averages. p95 and p99 tell you how bad waiting gets during bursts. Add queue depth, device utilization, and per-volume IOPS to see where the system saturates.

Benchmarking needs realism. A synthetic test that only runs sequential reads can hide a problem that appears immediately with small-block random writes. Tools like fio can model block sizes, read/write mix, and concurrency, so you can map behavior across workloads instead of guessing.

Good test discipline prevents false confidence. Match the test profile to the application, run long enough to include steady-state effects, and compare results at multiple queue depths. If throughput rises while tail latency explodes, you found a contention cliff.

Approaches for Improving Contention Behavior

The best results come from combining isolation, smarter placement, and a faster data path, rather than tuning a single knob. These actions usually provide the biggest step toward stable performance:

- Set per-tenant and per-volume QoS limits to prevent noisy neighbors.

- Separate hot volumes with placement rules, or move them to dedicated pools.

- Right-size concurrency so workloads do not flood a saturated link or target.

- Reduce overhead in the data path with user-space, zero-copy processing where possible.

- Use protocol choices that match goals, such as NVMe/TCP for broad deployment, or RDMA where the environment supports it.

- Watch tail latency continuously, and automate guardrails before SLOs break.

Tradeoffs Across Common Mitigation Options

The table below compares several common approaches by predictability under load, operational effort, and fit for shared platforms. Use it to weigh noisy-neighbor risk and tail-latency stability against cost and complexity.

| Approach | Predictability Under Load | Operational Effort | Best Fit |

|---|---|---|---|

| Best-effort shared array | Low to medium | Medium | Mixed legacy environments |

| Local NVMe only | Medium | Low | Single-node speed, edge use |

| DIY NVMe-oF stack | Medium to high | High | Specialized teams with time to tune |

| Policy-driven SDS with QoS | High | Low to medium | Multi-tenant platforms and databases |

Predictable IO Contention Control with Simplyblock™

Simplyblock™ targets consistent performance under mixed load by combining an SPDK-based user-space data path with tenant-aware controls. That design reduces overhead in the hot path, which helps when queues build during bursts. It also supports NVMe/TCP and NVMe/RoCEv2, so teams can choose a transport that fits their network and latency goals.

In Kubernetes Storage, simplyblock can run hyper-converged, disaggregated, or in a mixed deployment. That flexibility lets teams place storage services where they can lower contention the most. Multi-tenancy and QoS further protect critical workloads, such as databases, from background jobs that would otherwise steal I/O budget.

Simplyblock fits executives’ goals because it reduces over-provisioning pressure. IT leaders can set performance intent as policy and scale capacity and throughput without rebuilding the platform.

Where Contention Management Is Heading

Data path acceleration will keep moving closer to the network. DPUs and IPUs can take on parts of the storage workload, which reduces host CPU variance and improves consistency during spikes. Transport choice will also become more dynamic as platforms map workloads to the right protocol based on latency targets and fabric readiness.

Automation will matter even more than raw speed. Systems will increasingly tie monitoring to action, such as throttling a batch job when tail latency rises, or shifting workloads to less loaded targets. As more organizations standardize on software-defined control planes, storage will behave more like a schedulable resource, not a black box.

Related Terms

Platform teams review these glossary pages alongside IO Contention because they explain the core signals and control points that keep Kubernetes Storage and Software-defined Block Storage stable under mixed, multi-tenant load.

Zero-Copy I/O

Persistent Storage

Local Node Affinity

NVMe

Questions and Answers

IO contention happens when multiple applications or containers compete for limited storage bandwidth or IOPS. In shared environments like Kubernetes clusters, it leads to latency spikes and degraded performance, especially under peak load.

Using dedicated volumes per workload and deploying topology-aware storage ensures better isolation and reduces IO interference. Pair this with proper pod affinity rules to co-locate high-throughput apps with their local volumes.

While NVMe over TCP provides excellent performance, IO contention can still occur if multiple workloads are not bandwidth-isolated. Proper queue depth tuning and smart provisioning help maintain consistent performance across shared NVMe-backed storage.

Yes. Platforms like Simplyblock use copy-on-write storage with intelligent resource allocation to minimize IO collisions between tenants or services. Combined with snapshots and replicas, this enables scalable, isolated performance even during spikes.

Monitoring tools like iostat, fio Prometheus-based metrics can help detect IO bottlenecks. Look for elevated queue depths and high latency during IO-intensive events. For persistent issues, consider volume-level optimization or scaling storage bandwidth.