Kubelet Volume Manager


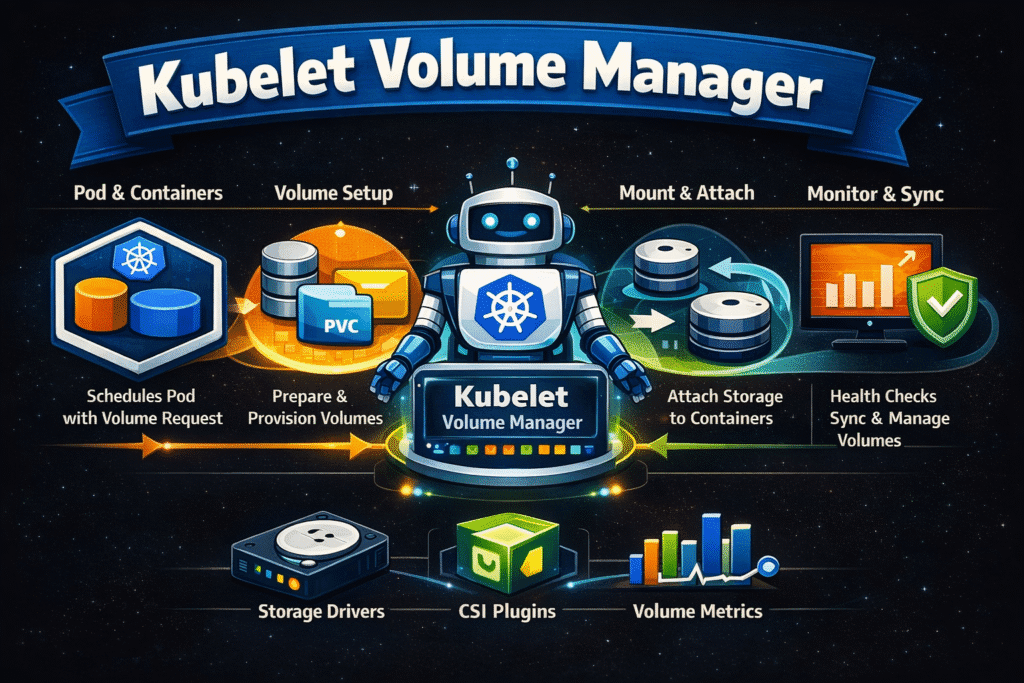

Kubelet Volume Manager is the node-side part of Kubernetes that makes storage usable for pods. It tracks which volumes each pod needs, waits for attachment (which the attach/detach controller usually performs), runs the mount workflows through the configured volume plugins (most often CSI), and keeps the node’s “desired state” and “actual state” aligned. When it performs well, pods start fast, restarts stay predictable, and stateful workloads keep steady I/O. When it falls behind, you see symptoms like slow scheduling-to-ready times, repeated mount retries, and noisy “timeout” events during peak rollout windows.

In practice, Kubelet Volume Manager sits right on the boundary between orchestration and data path. It drives the node-side mount and unmount steps and coordinates with attach and detach, and your storage backend determines how quickly the node can complete those steps. That’s why storage design directly affects Kubernetes Storage reliability, startup behavior, and p99 latency for stateful services.
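The desired-state/actual-state loop can be sketched as a toy reconciler. This is not kubelet's code: `NodeVolumeState`, `reconcile`, and `apply_ops` are hypothetical names, and the real volume manager also waits for attachment, stages devices, and retries failed operations with backoff. The sketch only shows the core idea: diff what scheduled pods need against what the node has, then emit the operations that close the gap.

```python
from dataclasses import dataclass, field


@dataclass
class NodeVolumeState:
    """Toy view of one node: volume name -> set of pod IDs that have it mounted."""
    mounts: dict[str, set[str]] = field(default_factory=dict)


def reconcile(desired: dict[str, set[str]], actual: NodeVolumeState) -> list[tuple[str, str, str]]:
    """Return the (operation, volume, pod) steps that move `actual` toward `desired`."""
    ops: list[tuple[str, str, str]] = []
    # Tear down mounts no scheduled pod needs anymore (this sketch orders unmounts first).
    for vol, pods in sorted(actual.mounts.items()):
        for pod in sorted(pods - desired.get(vol, set())):
            ops.append(("unmount", vol, pod))
    # Set up mounts that scheduled pods need but the node does not have yet.
    for vol, pods in sorted(desired.items()):
        for pod in sorted(pods - actual.mounts.get(vol, set())):
            ops.append(("mount", vol, pod))
    return ops


def apply_ops(ops: list[tuple[str, str, str]], actual: NodeVolumeState) -> None:
    """Pretend every operation succeeds and record the result as actual state."""
    for op, vol, pod in ops:
        if op == "mount":
            actual.mounts.setdefault(vol, set()).add(pod)
        else:
            actual.mounts[vol].discard(pod)
            if not actual.mounts[vol]:
                del actual.mounts[vol]


desired = {"pvc-a": {"pod-1"}, "pvc-b": {"pod-2"}}
actual = NodeVolumeState({"pvc-a": {"pod-1"}, "pvc-c": {"pod-9"}})
ops = reconcile(desired, actual)
print(ops)  # [('unmount', 'pvc-c', 'pod-9'), ('mount', 'pvc-b', 'pod-2')]
apply_ops(ops, actual)
print(reconcile(desired, actual))  # []
```

Running the loop again after the operations succeed yields no work, which is the steady state a healthy node converges to.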

Optimizing Kubelet Volume Manager with Production-Grade Solutions

Teams improve node-side volume behavior by reducing per-volume work and by cutting latency in the storage path. The cleanest way to do that is to align Kubernetes primitives (StorageClass, topology hints, StatefulSets, and CSI) with a storage layer that responds consistently under load.

For execs, the key questions stay simple: How quickly can the platform bring up 100 new pods that each need a PVC? How stable is performance during rolling upgrades? How much time does the team spend debugging mount issues instead of shipping product? A Software-defined Block Storage platform that keeps latency stable and offers policy control tends to move those metrics in the right direction.


Volume Lifecycle Inside Kubernetes Storage

In Kubernetes Storage, the pod spec declares volumes, and controllers handle provisioning and binding. On each node, Kubelet Volume Manager takes over once the scheduler places the pod. It reads the volume information, waits for the volume to be attached if the driver requires attachment, calls the CSI node plugin (or in-tree plugins on legacy setups) to stage and publish the volume as a mounted filesystem or a mapped raw block device, and confirms the result before it starts containers.
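The per-volume order on the node can be modeled as a small state machine. A minimal sketch, assuming a CSI driver that advertises the STAGE_UNSTAGE_VOLUME node capability: NodeStageVolume prepares the device once at a node-global staging path, NodePublishVolume makes it available at the pod's target path, and teardown runs in reverse. The state names (ATTACHED, STAGED, PUBLISHED) and the `replay` helper are hypothetical labels for this sketch, it assumes a single pod per volume, and attachment itself (ControllerPublishVolume) is normally driven by the attach/detach controller rather than kubelet.

```python
# Node-side CSI calls for one volume on one node (simplified: one pod publishes it).
# State names are labels chosen for this sketch, not CSI spec identifiers.
TRANSITIONS = {
    ("ATTACHED", "NodeStageVolume"): "STAGED",
    ("STAGED", "NodePublishVolume"): "PUBLISHED",
    ("PUBLISHED", "NodeUnpublishVolume"): "STAGED",
    ("STAGED", "NodeUnstageVolume"): "ATTACHED",
}


class IllegalTransition(Exception):
    """Raised when a call arrives out of order for the current state."""


def replay(calls: list[str], state: str = "ATTACHED") -> str:
    """Apply CSI node RPC names in sequence and return the final state."""
    for call in calls:
        nxt = TRANSITIONS.get((state, call))
        if nxt is None:
            raise IllegalTransition(f"{call} is not valid in state {state}")
        state = nxt
    return state


startup = ["NodeStageVolume", "NodePublishVolume"]
teardown = ["NodeUnpublishVolume", "NodeUnstageVolume"]
print(replay(startup))             # PUBLISHED
print(replay(startup + teardown))  # ATTACHED
```

Each extra volume adds its own pass through this sequence, which is why volume count per pod shows up directly in startup time.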

This is where bottlenecks show up fast. High PVC churn, many small volumes per pod, and frequent reschedules increase the operation count. If the backend adds jitter, the node sees it as slower volume operations, and your rollout time stretches.
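A back-of-the-envelope model makes the operation-count argument concrete. This is a rough sketch under loud assumptions: every node-side operation takes the same latency, at most `concurrency` operations run at once, and the numbers below (30 pods, 2 ops per volume, 0.5 s per op, concurrency 10) are hypothetical inputs, not measured kubelet or driver values. `estimate_volume_setup` is an illustrative helper.

```python
import math


def estimate_volume_setup(pods: int, volumes_per_pod: int, ops_per_volume: int,
                          op_latency_s: float, concurrency: int) -> tuple[int, float]:
    """Return (total node-side ops, rough wall-clock seconds) for one node.

    Assumes uniform per-op latency and a fixed concurrency limit; it ignores
    that stage must finish before publish for the same volume.
    """
    total_ops = pods * volumes_per_pod * ops_per_volume
    waves = math.ceil(total_ops / concurrency)
    return total_ops, waves * op_latency_s


# 30 pods land on one node; stage + publish = 2 ops per volume (hypothetical numbers).
print(estimate_volume_setup(30, 3, 2, 0.5, 10))  # (180, 9.0)
print(estimate_volume_setup(30, 1, 2, 0.5, 10))  # (60, 3.0)
# Same layout, but backend jitter pushes the per-op latency to 2 s.
print(estimate_volume_setup(30, 3, 2, 2.0, 10))  # (180, 36.0)
```

Both levers show up in the model: fewer volumes per pod cut the operation count, and steadier backend latency keeps each wave short.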

NVMe/TCP Considerations for Node-Side Volume Workflows

NVMe/TCP reduces storage latency and CPU overhead compared to older SCSI-era network approaches in many environments, while staying deployable on standard Ethernet. That matters to Kubelet Volume Manager because faster, steadier volume operations shorten pod “time-to-ready” and reduce retry storms during scale events.

When you combine NVMe/TCP with an SPDK-based, user-space data path, you also cut kernel overhead and context switching. That can translate to better node efficiency, which helps when a node must handle both application CPU and storage-related work. For many teams, this becomes a SAN alternative that fits cloud-native operations without locking in hardware choices.

Measuring and Benchmarking Volume Operation Performance

To measure Kubelet Volume Manager’s impact, track two classes of signals: control-plane timing and data-path behavior.

Control-plane timing starts with the pod lifecycle. Measure “scheduled → containers ready,” then break out “volume setup time” from events and kubelet metrics. Look for spikes during deployments, node drains, or autoscaling. On the storage side, watch attach/mount duration distributions (p50, p95, p99), error rates, and retry counts.
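One way to get the “scheduled → containers ready” number is to diff pod condition timestamps, then summarize many samples as percentiles. A minimal sketch, assuming pod objects shaped like `kubectl get pod -o json` output and a freshly created pod (after container restarts, `lastTransitionTime` on ContainersReady no longer marks first startup). The helper names are hypothetical, the percentile is the simple nearest-rank method, and `mount_seconds` is made-up example input.

```python
import math
from datetime import datetime
from typing import Optional


def parse_ts(ts: str) -> datetime:
    # Condition timestamps look like "2024-05-01T10:00:07Z" (RFC 3339, UTC).
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def scheduled_to_ready_seconds(pod: dict) -> Optional[float]:
    """Seconds from PodScheduled to ContainersReady, or None if not ready yet."""
    conds = {c["type"]: c for c in pod.get("status", {}).get("conditions", [])}
    sched, ready = conds.get("PodScheduled"), conds.get("ContainersReady")
    if not sched or not ready or ready.get("status") != "True":
        return None
    delta = parse_ts(ready["lastTransitionTime"]) - parse_ts(sched["lastTransitionTime"])
    return delta.total_seconds()


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


pod = {"status": {"conditions": [
    {"type": "PodScheduled", "status": "True", "lastTransitionTime": "2024-05-01T10:00:00Z"},
    {"type": "ContainersReady", "status": "True", "lastTransitionTime": "2024-05-01T10:00:07Z"},
]}}
print(scheduled_to_ready_seconds(pod))  # 7.0

mount_seconds = [0.8, 0.9, 1.1, 1.2, 1.3, 1.4, 1.6, 2.0, 4.5, 12.0]
print({p: percentile(mount_seconds, p) for p in (50, 95, 99)})  # {50: 1.3, 95: 12.0, 99: 12.0}
```

With small samples, p95 and p99 collapse onto the worst value, so collect enough rollouts before setting targets on the tail.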

Data-path behavior needs storage latency and throughput context. Run fio against representative PVCs, and correlate IOPS, throughput, and tail latency with kubelet volume operation times. If I/O looks fine but mounts still lag, focus on CSI node plugin performance, mount options, node CPU pressure, and per-node concurrency limits.
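The triage rule above can be sketched in code: pull tail latency from a fio run, then compare it with mount timing. A sketch under assumptions: the parser expects the fio 3.x JSON layout from `fio --output-format=json` (completion latency percentiles under `clat_ns.percentile`, bandwidth in KiB/s), the sample is a trimmed hand-written stand-in rather than real output, and the 2 ms and 10 s targets in the hypothetical `triage` helper are placeholders, not recommendations.

```python
import json


def fio_summary(fio_json: str, direction: str = "read") -> dict:
    """Pull IOPS, bandwidth, and p99 completion latency from fio JSON output.

    Assumes the fio 3.x layout: jobs[].<direction>.clat_ns.percentile["99.000000"].
    """
    stats = json.loads(fio_json)["jobs"][0][direction]
    p99_ns = stats["clat_ns"]["percentile"]["99.000000"]
    return {"iops": stats["iops"], "bw_kib_s": stats["bw"], "p99_ms": p99_ns / 1e6}


def triage(p99_io_ms: float, p99_mount_s: float,
           io_target_ms: float = 2.0, mount_target_s: float = 10.0) -> str:
    """Point at the likely bottleneck; the targets are placeholders."""
    if p99_io_ms > io_target_ms:
        return "data path: investigate backend latency and throughput first"
    if p99_mount_s > mount_target_s:
        return "node side: check CSI node plugin, mount options, node CPU, concurrency limits"
    return "ok"


# Trimmed, hand-written sample shaped like `fio --output-format=json` output.
sample = json.dumps({"jobs": [{"read": {
    "iops": 52000.0, "bw": 208000,
    "clat_ns": {"percentile": {"99.000000": 850000}},
}}]})
summary = fio_summary(sample)
print(summary["p99_ms"])                 # 0.85
print(triage(summary["p99_ms"], 25.0))   # points at the node side
```

Checking the data path first keeps the rule honest: slow mounts on top of slow I/O usually share a backend cause.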

Practical Ways to Improve Volume Manager Throughput

The fastest gains usually come from reducing work per pod and from keeping storage latency consistent during burst events.

- Prefer fewer, larger PVCs over many tiny ones when your app design allows it, because each volume adds its own attach, stage, and publish operations.

- Use a StatefulSet for stable identity and predictable PVC binding when you run stateful apps.

- Choose CSI drivers that expose solid node-side metrics and handle concurrency well.

- Standardize mount options and filesystem choices so nodes don’t drift into “special case” behavior.

- Pin latency-sensitive workloads to storage classes backed by NVMe/TCP, especially when startup time and p99 I/O both matter.

- Apply storage QoS controls so noisy neighbors do not delay volume operations for critical services.

- Avoid unnecessary reschedules by using sane anti-affinity, disruption budgets, and node maintenance planning.
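Mount-option drift is easy to catch with a small check over StorageClass objects. A minimal sketch, assuming items shaped like `kubectl get storageclass -o json` output; `find_mount_option_drift`, the provisioner name `csi.example.com`, and the baseline are hypothetical. Comparing sorted lists treats option order as irrelevant, which is a simplification, since some mount options can override earlier ones.

```python
def find_mount_option_drift(storage_classes: list[dict],
                            baseline: dict[str, list[str]]) -> list[str]:
    """Names of StorageClasses whose mountOptions differ from the baseline for their provisioner."""
    drifted = []
    for sc in storage_classes:
        expected = baseline.get(sc.get("provisioner"))
        if expected is None:
            continue  # provisioner not covered by the baseline; skip it
        actual = sc.get("mountOptions", [])  # the field is optional on a StorageClass
        if sorted(actual) != sorted(expected):
            drifted.append(sc["metadata"]["name"])
    return drifted


# Shaped like `kubectl get storageclass -o json` items; names are hypothetical.
classes = [
    {"metadata": {"name": "nvme-fast"}, "provisioner": "csi.example.com",
     "mountOptions": ["noatime"]},
    {"metadata": {"name": "nvme-legacy"}, "provisioner": "csi.example.com"},
    {"metadata": {"name": "other"}, "provisioner": "other.example.com",
     "mountOptions": ["sync"]},
]
baseline = {"csi.example.com": ["noatime"]}
print(find_mount_option_drift(classes, baseline))  # ['nvme-legacy']
```

A check like this fits naturally in CI or an admission policy, so “special case” classes get flagged before pods depend on them.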

Comparison Table – Storage Options and Node Volume Overhead

The table below summarizes how common storage approaches tend to affect node-side volume operations. Your exact results depend on CSI implementation, network design, and workload mix, but the operational pattern is consistent.

| Storage approach | Typical impact on pod startup and mounts | Operational trade-offs |
|---|---|---|
| Local NVMe (node-local, HostPath-like patterns) | Fast mounts, but limited mobility | Data stays tied to one node, so drains, reschedules, and node failures need app-level replication or restore |
| Traditional SAN / iSCSI-style designs | Often higher latency and more CPU overhead | Familiar tooling, but can bottleneck Kubernetes rollouts |
| NVMe/TCP Software-defined Block Storage | Faster, steadier volume operations at scale | Needs good policy, observability, and sane storage class design |

Keeping Kubelet Volume Manager Volume Ops Stable with Simplyblock™

Simplyblock focuses on predictable Kubernetes Storage behavior by delivering Software-defined Block Storage built for NVMe/TCP and cloud-native control. The design aligns well with what Kubelet Volume Manager needs: low jitter, scalable throughput, and policy guardrails.

Because simplyblock uses an SPDK-based, user-space architecture, it targets high IOPS and low CPU overhead for storage paths. That helps on bare metal and in mixed clusters where nodes already run CPU-heavy workloads. Simplyblock also supports disaggregated and hyper-converged deployments, so you can keep local performance where it matters and scale storage independently when you need it.

For Kubelet Volume Manager outcomes, that usually means shorter, more consistent mount times during rollouts, fewer “slow storage” incident cycles, and a cleaner path to multi-tenant platforms with QoS.

Future Directions for Kubelet Volume Manager and Node-Side Volume Operations

Kubernetes and CSI work is trending toward faster, clearer, and more consistent node-side attach and mount behavior, which directly affects Kubelet Volume Manager timing and retry patterns. Expect better kubelet and CSI metrics that separate node processing delays from backend latency, so teams can set SLOs for “volume setup time” per workload.

Hardware and data-path improvements will also matter. DPUs/IPUs and SPDK-style user-space paths can reduce CPU overhead during volume operations, while NVMe/TCP can lower latency variance, which helps keep pod startup and restarts predictable under rollout spikes.

Related Terms

Teams often review these glossary pages alongside Kubelet Volume Manager when they set targets for Kubernetes Storage and Software-defined Block Storage:

- Container Storage Interface (CSI)

- Kubernetes StatefulSet

- Storage Quality of Service (QoS)

- MAUS Architecture

Questions and Answers

What is the role of the Kubelet Volume Manager in Kubernetes?

The Kubelet Volume Manager handles the mounting, unmounting, and lifecycle tracking of volumes on a Kubernetes node. It ensures that persistent volumes are attached and mounted before pods use them, coordinating with the CSI Node Plugin to maintain state.

How does the Kubelet Volume Manager interact with CSI drivers?

It communicates with CSI Node Plugins to trigger operations like staging, publishing, unpublishing, and unstaging volumes. This is essential for container-attached storage environments where volume states must stay in sync between Kubernetes and the storage backend.

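What happens if the Kubelet Volume Manager fails to mount a volume?

Pods stay in the ContainerCreating state or fail to start. Review the pod's events (for example FailedMount or FailedAttachVolume) along with the kubelet and CSI Node Plugin logs to identify the issue. Correct storage class configuration and node permissions are critical to avoid mount failures.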
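Finding affected pods can be scripted by joining pod status with events. A minimal sketch, assuming pod and event objects shaped like `kubectl get pods -o json` and `kubectl get events -o json` items, and assuming the kubelet has reported container statuses with the waiting reason ContainerCreating. `pods_stuck_on_volumes` is a hypothetical helper, and FailedMount and FailedAttachVolume are the event reasons it looks for.

```python
VOLUME_FAILURE_REASONS = {"FailedMount", "FailedAttachVolume"}


def pods_stuck_on_volumes(pods: list[dict], events: list[dict]) -> list[tuple[str, str]]:
    """(namespace, name) of pods waiting in ContainerCreating that also have volume failure events."""
    waiting = set()
    for pod in pods:
        meta = pod["metadata"]
        for cs in pod.get("status", {}).get("containerStatuses", []):
            if cs.get("state", {}).get("waiting", {}).get("reason") == "ContainerCreating":
                waiting.add((meta.get("namespace", "default"), meta["name"]))
    # Events point at their pod through involvedObject; keep only volume failures.
    failing = {
        (ev["involvedObject"].get("namespace", "default"), ev["involvedObject"]["name"])
        for ev in events
        if ev.get("reason") in VOLUME_FAILURE_REASONS
        and ev.get("involvedObject", {}).get("kind") == "Pod"
    }
    return sorted(waiting & failing)


pods = [
    {"metadata": {"namespace": "db", "name": "pg-0"},
     "status": {"containerStatuses": [{"state": {"waiting": {"reason": "ContainerCreating"}}}]}},
    {"metadata": {"namespace": "db", "name": "pg-1"},
     "status": {"containerStatuses": [{"state": {"running": {}}}]}},
]
events = [
    {"reason": "FailedMount", "involvedObject": {"kind": "Pod", "namespace": "db", "name": "pg-0"}},
    {"reason": "FailedMount", "involvedObject": {"kind": "Pod", "namespace": "db", "name": "pg-1"}},
]
print(pods_stuck_on_volumes(pods, events))  # [('db', 'pg-0')]
```

Requiring both signals filters out pods that hit a transient mount error but already recovered, such as `pg-1` above.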

Can the Kubelet Volume Manager affect performance?

Yes. Inefficient volume state management or delays in mount and unmount operations can increase pod startup and restart times, especially during rollouts. To keep them low, track volume operation timings on each node and make sure your CSI driver and storage backend handle concurrent volume operations well.

How does simplyblock work with the Kubelet Volume Manager?

Simplyblock’s CSI implementation supports all Kubelet volume lifecycle stages, including ephemeral and persistent mounts. It integrates with Kubernetes through its CSI driver, enabling high-throughput block storage with minimal configuration.