Kubernetes Raw Block Volume Support


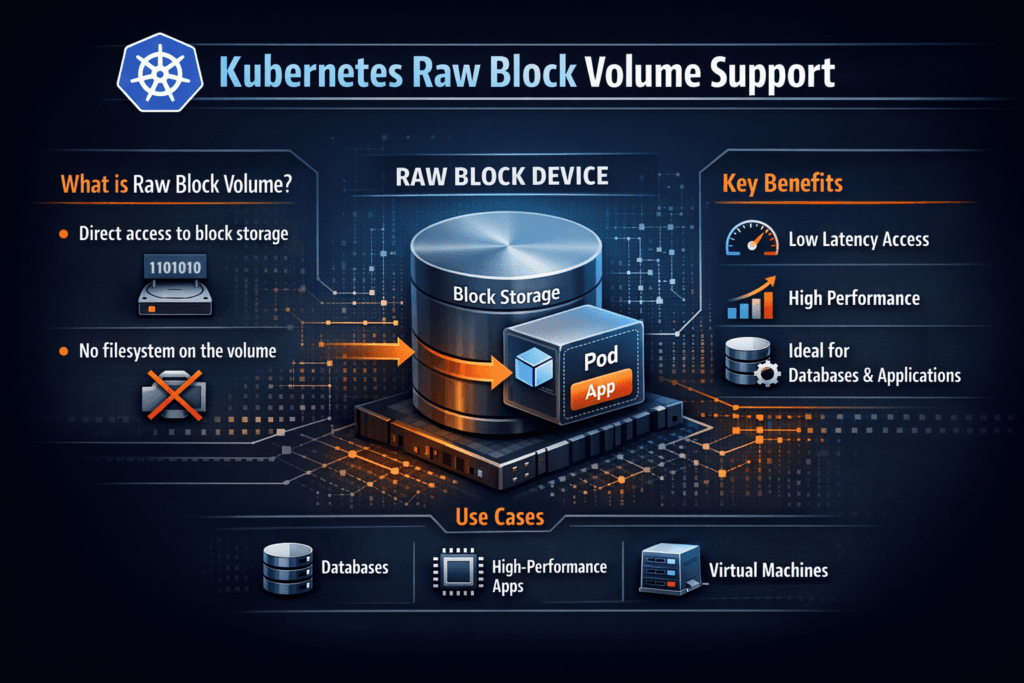

Kubernetes raw block volume support lets a pod use a PersistentVolume as a block device instead of a mounted filesystem. The container receives a device path, and the application decides how to format, align, and write data. This approach fits databases and data services that manage their own layout, caching, checksums, and write-ahead logs.

Platform teams choose raw block volumes to reduce layers in the I/O path and to tighten control over latency swings. A filesystem adds metadata work and journaling, and those costs can show up as jitter during bursts. Raw block access removes the filesystem layer, but it does not remove every bottleneck. Your CSI driver, node CPU budget, storage CPU headroom, and network transport still shape results.

Raw Block Optimization for Enterprise Clusters

You get the best raw block results when the stack keeps I/O direct and stable under concurrency. NVMe media helps, yet the data path often matters more than the device. User-space storage engines can cut context switches and avoid extra memory copies, which improves CPU efficiency and keeps tail latency steadier when the cluster gets busy.

Software-defined Block Storage also helps teams scale without losing control. You can enforce multi-tenant isolation and QoS at the volume layer, even across different clusters and environments. Executives usually prioritize predictable service levels and risk control. DevOps and SRE teams usually prioritize automation, safe upgrades, and repeatable recovery workflows.


Raw Block Volumes in Kubernetes Storage

Raw block volumes still use standard Kubernetes Storage objects such as StorageClasses, PVCs, and PVs. The difference comes from how the workload consumes the volume: it treats the volume as a device instead of a file path. That choice changes day-2 operations.

Device initialization becomes an application concern. Some workloads write a filesystem, some write a partition table, and others write directly to the device. Security posture also changes because the pod accesses a device node. Troubleshooting changes, too, because you investigate alignment, queue depth, and I/O patterns rather than file metadata and mount options.

Many teams see the biggest gains when they run stateful services that tune concurrency and queue depth. Disaggregated designs can amplify that benefit because compute nodes stay lean while storage nodes serve volumes across the network.

Kubernetes Raw Block Volume Support and NVMe/TCP

NVMe/TCP carries NVMe commands over standard IP networks, which makes it practical for Kubernetes clusters on Ethernet. Raw block workloads can drive heavy parallel I/O, so the transport must stay stable during bursts. Good results come from proper bandwidth sizing, consistent congestion handling, and enough CPU on the storage endpoints.
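As a rough illustration of the bandwidth sizing mentioned above, the sketch below estimates the network bandwidth a raw block workload needs from its target IOPS and block size. The function name, the default headroom of 30%, and the example numbers are assumptions for illustration; the estimate covers payload only and ignores TCP and NVMe protocol overhead.

```python
def required_gbps(iops: int, block_size_bytes: int, headroom: float = 0.3) -> float:
    """Estimate payload bandwidth in Gbit/s for a target IOPS and block size.

    headroom is an assumed fractional burst allowance (0.3 means 30% extra).
    Protocol overhead is not modeled, so treat the result as a lower bound.
    """
    if iops < 0 or block_size_bytes <= 0 or headroom < 0:
        raise ValueError("iops and headroom must be >= 0, block size must be > 0")
    payload_bits_per_second = iops * block_size_bytes * 8
    return payload_bits_per_second * (1 + headroom) / 1e9


if __name__ == "__main__":
    # Hypothetical target: 100,000 IOPS at 4 KiB with 25% burst headroom.
    print(f"{required_gbps(100_000, 4096, 0.25):.3f} Gbit/s")
```

A result close to the link speed of a node suggests that bursts will queue in the network before they reach the storage endpoints.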

NVMe/TCP also fits enterprise operations because teams can reuse existing switching and routing practices. That advantage matters when you want a SAN alternative that scales out and still works cleanly with Kubernetes scheduling and policy controls.

Measuring and Benchmarking Kubernetes Raw Block Volume Support Performance

Benchmarking raw block volumes works only when the test matches the workload. Start with the metrics that drive real outcomes: IOPS, throughput, average latency, and p95/p99 latency. Tail latency often decides whether a database meets its SLO.
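To make the difference between average and tail latency concrete, here is a minimal sketch that summarizes a list of per-I/O latency samples. It uses the nearest-rank percentile method, which is one of several common definitions; the function names and sample values are hypothetical.

```python
import math


def percentile(samples, pct):
    """Return the nearest-rank percentile (0 < pct <= 100) of the samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(latencies_us):
    """Return average, p95, and p99 latency for a list of samples."""
    return {
        "avg": sum(latencies_us) / len(latencies_us),
        "p95": percentile(latencies_us, 95),
        "p99": percentile(latencies_us, 99),
    }


if __name__ == "__main__":
    # Hypothetical run: 98 fast I/Os and two slow outliers (microseconds).
    samples = [100] * 98 + [5000, 9000]
    print(summarize(samples))
```

In this made-up sample the average and p95 stay low while p99 lands on an outlier, which is the pattern that tends to break a database SLO without showing up in averages.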

Next, model the I/O profile. OLTP patterns differ from log-structured writes, and streaming ingest behaves differently again. Use the right block size, read/write mix, queue depth, and sync behavior. Then correlate the results with node CPU usage, IRQ pressure, storage CPU, and network drops. If latency rises while CPU is pegged, the platform needs a more efficient data path or stronger isolation.
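One way to keep an I/O profile explicit and repeatable is to generate the benchmark command from named parameters. The sketch below builds an fio command line for a raw block device; fio is an assumption here (the text does not name a tool), and the device path, job name, and default values are hypothetical. Running a write test against a raw device destroys any data on it.

```python
import shlex


def fio_command(device, rw="randrw", read_pct=70, block_size="8k",
                iodepth=32, numjobs=4, runtime_s=60):
    """Build an fio argument list that models one I/O profile on a block device."""
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    return [
        "fio",
        "--name=rawblock-profile",   # hypothetical job name
        f"--filename={device}",      # device path inside the container
        "--ioengine=libaio",
        "--direct=1",                # bypass the page cache
        f"--rw={rw}",
        f"--rwmixread={read_pct}",   # read share for mixed workloads
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
    ]


if __name__ == "__main__":
    # WARNING: a profile with writes overwrites data on the target device.
    print(shlex.join(fio_command("/dev/xvda")))
```

Keeping the profile in code makes it easier to rerun the same test after a change and to compare results alongside CPU and network metrics.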

Failure-path tests matter just as much as steady-state tests. Validate attach/detach timing, node loss behavior, and recovery time so you do not learn those limits in production.

Improving Raw Block Volume Performance

Use these actions to raise throughput and reduce latency variance without fragile tuning:

- Align application I/O sizes and offsets with storage block boundaries to avoid misalignment penalties.

- Tune queue depth and concurrency to match device and transport limits.

- Reserve CPU for storage and networking paths to reduce jitter under contention.

- Size the network for bursts, and keep congestion settings consistent across nodes.

- Enforce per-volume QoS so one tenant cannot saturate queues.

- Prefer user-space, zero-copy data paths when CPU efficiency drives your bottlenecks.
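The alignment item in the list above can be sketched with a few helpers that check whether a request is block-aligned and show which device range a misaligned request actually touches. The 4096-byte block size is an assumption; the real value depends on the device and storage backend.

```python
def align_down(offset: int, block: int) -> int:
    """Round offset down to the nearest multiple of block."""
    return offset - offset % block


def align_up(offset: int, block: int) -> int:
    """Round offset up to the nearest multiple of block."""
    return -(-offset // block) * block


def is_aligned(offset: int, length: int, block: int = 4096) -> bool:
    """True when both the offset and the length are multiples of block."""
    return offset % block == 0 and length % block == 0


def aligned_span(offset: int, length: int, block: int = 4096):
    """Return (start, size) of the whole-block range covering the request."""
    start = align_down(offset, block)
    end = align_up(offset + length, block)
    return start, end - start


if __name__ == "__main__":
    # A 200-byte write at offset 4000 straddles a block boundary,
    # so it touches two 4096-byte blocks instead of one.
    print(is_aligned(4000, 200))
    print(aligned_span(4000, 200))
```

A request that touches more blocks than its size requires usually forces extra read-modify-write work somewhere in the stack, which is where the misalignment penalty comes from.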

Raw Block vs Filesystem Volumes

Before you standardize, compare raw block volumes with filesystem volumes because production operations expose trade-offs quickly.

| Dimension | Raw block device | Mounted filesystem volume |
|---|---|---|
| Application control | App controls layout and writes | Filesystem controls layout and metadata |
| Operational ease | Needs device-aware guardrails | Easier inspection and tooling |
| Latency variance | Often lower with good tuning | Often higher due to filesystem overhead |
| Safety defaults | Higher risk if unmanaged | More guardrails via filesystem semantics |
| Best-fit workloads | Databases, log engines, custom caches | General apps, shared file semantics |

Simplyblock™ for Stable Block I/O

Simplyblock™ targets predictable performance by combining Software-defined Block Storage with an NVMe-first, Kubernetes-native design. The platform supports NVMe/TCP and focuses on an efficient data path that reduces CPU overhead, which helps keep p95 and p99 latency steadier under parallel load.

Raw block workloads can push the storage plane hard with many concurrent operations. Multi-tenancy increases that pressure because one noisy workload can dominate queues. Volume-level QoS and isolation help keep neighbors from stealing performance. Simplyblock supports flexible Kubernetes deployments, including hyper-converged, disaggregated, and mixed layouts, so teams can keep one operating model while scaling compute and storage independently.
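To illustrate the general idea behind volume-level QoS, the sketch below uses a token bucket per volume to cap IOPS. This is a generic rate-limiting technique shown under stated assumptions, not a description of how simplyblock implements QoS; the class name, volume names, and limits are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Admit I/Os at a sustained rate with a bounded burst."""

    def __init__(self, rate_iops: float, burst: float):
        self.rate = float(rate_iops)    # tokens added per second
        self.capacity = float(burst)    # maximum stored tokens
        self.tokens = float(burst)      # start with a full bucket
        self.last = 0.0                 # timestamp of the last refill

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then admit the I/O if tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    # One bucket per volume keeps a noisy tenant from using another's budget.
    limits = {"vol-a": TokenBucket(10, 5), "vol-b": TokenBucket(10, 5)}
    noisy = sum(limits["vol-a"].allow(0.0) for _ in range(20))
    quiet = limits["vol-b"].allow(0.0)
    print(noisy, quiet)
```

Because each volume draws from its own bucket, a burst on one volume exhausts only that volume's budget while its neighbors keep their full allowance.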

Emerging Patterns in Raw Block Storage

Kubernetes storage keeps moving toward policy-driven operations where topology and service targets influence placement and provisioning. Raw block adoption will likely grow as more teams run databases and streaming systems directly on Kubernetes and demand tighter control of I/O behavior.

DPUs and IPUs can also change the equation. Offload for networking and data-plane work can free CPU on nodes and cut jitter. Expect more automation that selects block versus filesystem based on workload signals, plus tighter links between observability data and storage policy.

Related Terms

Teams often review these glossary pages alongside Kubernetes Raw Block Volume Support when they define performance targets and operational guardrails for stateful workloads on Kubernetes.

- Kubernetes CSI Inline Volumes

- Observability

- HostPath

- Disaggregated Storage

- Kubernetes Volume Mode (Filesystem vs Block)

Questions and Answers

Kubernetes Raw Block Volume Support allows pods to access storage as raw block devices instead of mounted filesystems. This matters for performance-critical workloads such as databases that need direct I/O access, which typically run as stateful workloads on Kubernetes.

To use raw block volumes, provision a PVC with volumeMode: Block and reference it through the volumeDevices field (instead of volumeMounts) in your pod spec. When the volume comes from a CSI driver that supports block mode, the driver exposes the raw device directly to the container.
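The two settings named above can be sketched as manifests built in Python and printed as JSON, which kubectl accepts alongside YAML. The volumeMode, volumeDevices, and devicePath fields are part of the Kubernetes API; the object names, StorageClass name, image, size, and device path are hypothetical placeholders.

```python
import json


def block_pvc(name, storage_class, size):
    """Build a PVC manifest that requests a raw block volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "volumeMode": "Block",  # the default is "Filesystem"
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def block_pod(name, image, claim_name, device_path):
    """Build a Pod manifest that maps the claim to a device path."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # volumeDevices replaces volumeMounts for block volumes
                "volumeDevices": [{"name": "data", "devicePath": device_path}],
            }],
            "volumes": [{
                "name": "data",
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


if __name__ == "__main__":
    # All names below are placeholders, not values from a real cluster.
    pvc = block_pvc("raw-data", "example-block-sc", "10Gi")
    pod = block_pod("raw-consumer", "example.com/db:latest", "raw-data", "/dev/xvda")
    print(json.dumps(pvc, indent=2))
    print(json.dumps(pod, indent=2))
```

Inside the container, the application then opens the configured device path directly; no filesystem is created or mounted on its behalf.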

Use raw block volumes when your application requires direct control over block storage, such as in database engines or custom filesystems. For example, PostgreSQL on Simplyblock benefits from the performance advantages of raw block I/O in certain configurations.

Raw block volumes can be provisioned dynamically if the CSI driver supports it. Simplyblock’s block storage replacement supports dynamically provisioned raw block volumes, giving Kubernetes users high-throughput, low-latency storage with full automation.

Encryption can be enabled at the block device level, and resizing is possible through supported CSI drivers. Simplyblock supports encryption at rest even for raw volumes, ensuring security without sacrificing performance.