Kubernetes StatefulSet VolumeClaimTemplates

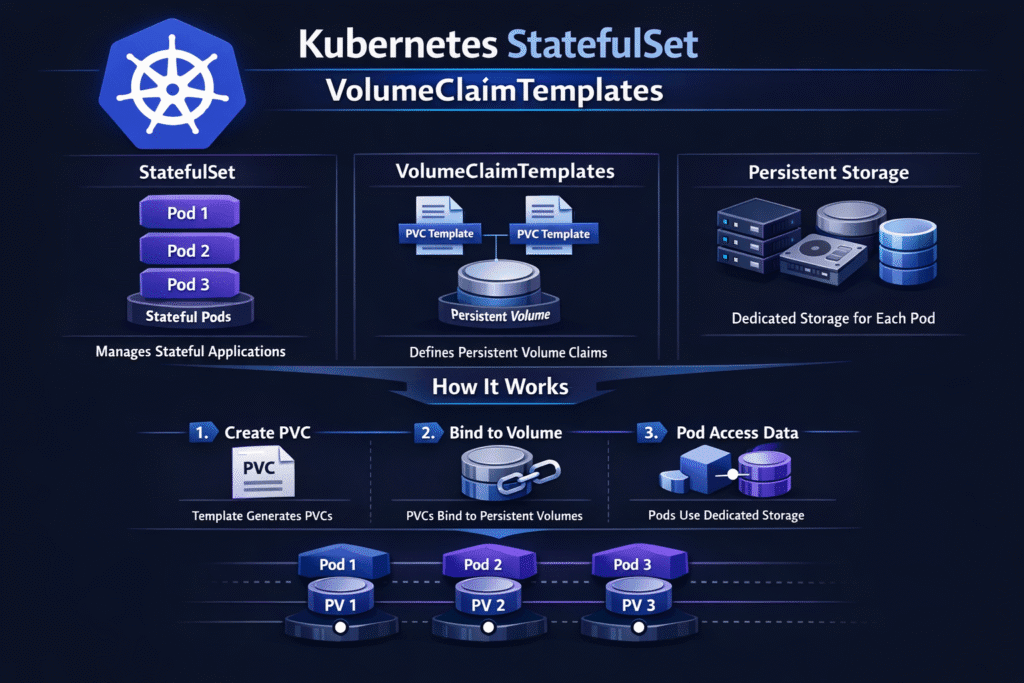

Kubernetes StatefulSet VolumeClaimTemplates define a per-replica storage blueprint that the StatefulSet controller turns into one PersistentVolumeClaim (PVC) per Pod ordinal. That behavior matters because it preserves identity: app-0 keeps its own claim, app-1 keeps its own claim, and so on, even when Kubernetes reschedules Pods.
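The naming rule behind that identity can be sketched in a few lines. The controller names each claim `<template name>-<StatefulSet name>-<ordinal>`; the helper `pvc_names` and the names `app` and `data` are illustrative assumptions, not part of any Kubernetes client library.

```python
def pvc_names(statefulset: str, template: str, replicas: int,
              start_ordinal: int = 0) -> list[str]:
    """Return the PVC names a StatefulSet would own for its replicas.

    Each claim is named <template name>-<StatefulSet name>-<ordinal>,
    which is why a rescheduled Pod finds the same claim again.
    """
    return [
        f"{template}-{statefulset}-{ordinal}"
        for ordinal in range(start_ordinal, start_ordinal + replicas)
    ]


if __name__ == "__main__":
    # Hypothetical StatefulSet "app" with one template called "data".
    for name in pvc_names("app", "data", 3):
        print(name)
```

Because the name depends only on the template, the StatefulSet, and the ordinal, a replacement Pod for `app-1` always resolves to `data-app-1`, regardless of which node it lands on.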

A template typically includes storageClassName, accessModes, and a resources.requests.storage size. Kubernetes then relies on the CSI driver and the StorageClass to provision matching PersistentVolumes (PVs). When your cluster uses Kubernetes Storage for databases, caches, queues, and analytics, the template becomes the contract between the workload and the platform: capacity, performance tier, and lifecycle semantics.
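As a rough illustration of those three fields in context, the sketch below builds a StatefulSet manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The image, the mount path, and the StorageClass name `fast-block` are placeholders assumed for the example.

```python
import json


def statefulset_manifest(name: str, image: str, replicas: int,
                         storage_class: str, size: str) -> dict:
    """Build a minimal StatefulSet with one volumeClaimTemplate."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # headless Service that gives Pods stable DNS names
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Must match the template name below.
                        "volumeMounts": [
                            {"name": "data", "mountPath": "/var/lib/data"}
                        ],
                    }],
                },
            },
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "storageClassName": storage_class,
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


if __name__ == "__main__":
    # All values here are hypothetical placeholders.
    manifest = statefulset_manifest(
        "app", "example.com/app:1.0", 3, "fast-block", "10Gi"
    )
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from one function is one way to keep the template identical across environments; a Helm chart or Kustomize base would serve the same purpose.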

What problem does it solve? It prevents “shared volume chaos” in stateful replicas by giving each Pod its own claim and volume, without forcing operators to hand-create PVC objects.

What problem does it not solve? It does not guarantee performance, latency, or rebuild behavior. Your storage backend still sets those outcomes.

Kubernetes StatefulSet VolumeClaimTemplates, Explained for Platform Teams

Kubernetes StatefulSet VolumeClaimTemplates sit inside the StatefulSet spec and act like a stamp. Every new replica gets a stamped PVC name, plus a PV created by dynamic provisioning, if the StorageClass supports it.

Most teams run into the same operational edges:

You can scale replicas up and down, but by default the PVCs stay: Kubernetes retains the claims when a StatefulSet scales down or is deleted. That design protects data, yet it can surprise cost models. The volumeClaimTemplates field is also immutable once the StatefulSet exists, and Kubernetes does not rewrite existing PVCs, so operators must plan expansions and tier moves with care.
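To make the cost effect concrete, here is a small sketch that lists the claims left behind after a scale-down. It assumes ordinals start at 0 and that claims are retained; the function name `retained_claims` and the sample claim names are made up for the example, and a real audit would read the claim list from the cluster API.

```python
import re


def retained_claims(existing_pvcs: list[str], statefulset: str,
                    template: str, replicas: int) -> list[str]:
    """Return claims whose ordinal is at or beyond the current replica count."""
    pattern = re.compile(
        rf"^{re.escape(template)}-{re.escape(statefulset)}-(\d+)$"
    )
    leftover = []
    for name in existing_pvcs:
        match = pattern.match(name)
        # Claims from other StatefulSets do not match and are ignored.
        if match and int(match.group(1)) >= replicas:
            leftover.append((int(match.group(1)), name))
    return [name for _, name in sorted(leftover)]


if __name__ == "__main__":
    # Hypothetical state after scaling "app" from 5 replicas down to 3.
    claims = [f"data-app-{i}" for i in range(5)] + ["data-other-7"]
    for name in retained_claims(claims, "app", "data", replicas=3):
        print(name)
```

The claims this returns still hold capacity and still bill, which is the gap between replica count and storage spend that cost models tend to miss.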

Governance matters here. Treat the StorageClass behind the template as a policy surface: encryption, snapshot support, topology rules, and volume expansion gates. When your exec team asks why stateful upgrades “feel risky,” the answer often starts with mismatched storage policies across clusters.
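One way to catch mismatched policies is to compare the StorageClass objects that share a name across clusters. The sketch below assumes the two objects have already been loaded as dictionaries (for example, from `kubectl get storageclass <name> -o json`); the provisioner `csi.example.com` and the `encrypted` parameter are hypothetical, since parameters are driver-specific.

```python
# StorageClass fields that act as policy for every PVC stamped from a template.
POLICY_FIELDS = (
    "provisioner",
    "parameters",
    "allowVolumeExpansion",
    "volumeBindingMode",
    "reclaimPolicy",
    "allowedTopologies",
)


def policy_drift(class_a: dict, class_b: dict) -> dict:
    """Return {field: (value_in_a, value_in_b)} for every differing policy field."""
    return {
        field: (class_a.get(field), class_b.get(field))
        for field in POLICY_FIELDS
        if class_a.get(field) != class_b.get(field)
    }


if __name__ == "__main__":
    # Hypothetical classes from a production and a staging cluster.
    production = {
        "provisioner": "csi.example.com",
        "parameters": {"encrypted": "true"},
        "allowVolumeExpansion": True,
        "volumeBindingMode": "WaitForFirstConsumer",
        "reclaimPolicy": "Retain",
    }
    staging = dict(production, allowVolumeExpansion=False, reclaimPolicy="Delete")
    for field, (prod_value, staging_value) in policy_drift(production, staging).items():
        print(f"{field}: production={prod_value!r} staging={staging_value!r}")
```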

Smarter Ways to Provision Stateful Volumes Without Lock-In

Modern platform teams reduce storage friction by standardizing how they express intent and how the storage layer enforces it. CSI gives you a clean contract, but the backend still needs to deliver consistent behavior under node drains, rolling updates, and noisy neighbor pressure.

Software-defined Block Storage helps because it decouples performance and resilience from any one server or array. That also supports SAN alternative designs: you keep block semantics, but you scale like cloud infrastructure. For Kubernetes Storage, that means fewer special cases per workload type and more repeatable templates across environments.

Kubernetes Storage and Kubernetes StatefulSet VolumeClaimTemplates

Kubernetes Storage becomes “real” for stateful apps when four pieces align: StatefulSet identity, a correct template, an appropriate StorageClass, and a storage backend that handles reschedules without drama.

Common failure modes show up fast:

A template points at a StorageClass that delays binding until a Pod is scheduled (volumeBindingMode: WaitForFirstConsumer), so provisioning time lands on the first start of each replica. Another template requests the default Filesystem volume mode, but the workload needs a raw block device (volumeMode: Block) for a database engine’s tuning. Some teams choose a fast tier for production, then forget to mirror that tier in staging, which makes load tests meaningless.
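Two of those failure modes, the volume mode mismatch and the tier mismatch between environments, are easy to check before a manifest ships. The following is a minimal lint sketch; `lint_template`, its arguments, and the class names are assumptions made for the example.

```python
def lint_template(template: dict, *, needs_raw_block: bool,
                  production_class: str) -> list[str]:
    """Return human-readable findings for one volumeClaimTemplate."""
    spec = template["spec"]
    findings = []

    # Kubernetes treats an omitted volumeMode as "Filesystem".
    mode = spec.get("volumeMode", "Filesystem")
    if needs_raw_block and mode != "Block":
        findings.append(f"volumeMode is {mode}, but the workload expects Block")

    storage_class = spec.get("storageClassName")
    if storage_class != production_class:
        findings.append(
            f"storageClassName is {storage_class!r}, "
            f"production uses {production_class!r}"
        )
    return findings


if __name__ == "__main__":
    # Hypothetical staging template for a database that wants raw blocks.
    staging_template = {
        "metadata": {"name": "data"},
        "spec": {
            "storageClassName": "standard",
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": "10Gi"}},
        },
    }
    for finding in lint_template(
        staging_template, needs_raw_block=True, production_class="fast-block"
    ):
        print(finding)
```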

From an operations angle, the cleanest approach uses one template per data role and keeps it boring. Save innovation for the storage layer, not for every YAML file.

NVMe/TCP Considerations for Kubernetes StatefulSet VolumeClaimTemplates

NVMe/TCP changes the performance envelope when you run disaggregated storage. It keeps IP routing and operational simplicity while delivering NVMe semantics over the network. That matters for templates because replicas no longer depend on local disks on a specific node, yet they can still hit low latency.

If you pair a template with an NVMe/TCP-backed class, you can scale stateful replicas while keeping data placement flexible. You also reduce the blast radius of a node event because storage service nodes can evolve independently from compute nodes.

For high-fanout stateful fleets, NVMe/TCP also helps with CPU efficiency when the storage stack avoids kernel-heavy copies. That’s where user-space, zero-copy designs and SPDK-style datapaths become relevant: lower overhead often translates to more headroom per node, not just more IOPS.

What to Measure When Stateful Storage “Feels Slow”

Measure the storage outcomes that match how StatefulSets behave during change:

Track p50 and p99 latency during steady state, and then track the same metrics during reschedules, rolling updates, and node drains. Add a “first-attach to ready” timer because volume attach and mount delays drive real downtime even when the app itself restarts quickly.
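A per-phase comparison like that can be sketched with a nearest-rank percentile over collected latency samples. How the samples are gathered (fio, application metrics, tracing) is left open, and the phase names and millisecond values below are invented for the example.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(phases: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Compute p50 and p99 for each named phase."""
    return {
        phase: {"p50": percentile(values, 50), "p99": percentile(values, 99)}
        for phase, values in phases.items()
    }


if __name__ == "__main__":
    # Made-up latency samples in milliseconds.
    phases = {
        "steady_state": [0.4, 0.5, 0.5, 0.6, 0.7, 0.9],
        "node_drain": [0.5, 0.6, 0.8, 1.2, 4.0, 12.5],
    }
    for phase, stats in summarize(phases).items():
        print(f"{phase}: p50={stats['p50']} ms, p99={stats['p99']} ms")
```

Keeping the phases separate matters because a healthy steady-state p99 can hide a drain-time tail that is an order of magnitude worse.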

IOPS and throughput still matter, but they do not tell the whole story. Watch tail latency and jitter, plus queue depth and throttle events. For multi-tenant clusters, confirm that one namespace cannot steal performance from another.

A practical benchmark uses the same access pattern as the workload. Synthetic tools help, yet replaying the application’s read/write mix catches issues that generic tests miss.

Practical Tuning Moves for Stateful Workloads

The fastest path to better outcomes starts with fewer surprises in provisioning, scheduling, and noisy neighbor control. Use one small checklist, then iterate.

- Match the StorageClass to the workload’s access pattern, not to a generic “fast” label.

- Keep template sizes realistic, and enable expansion where your platform supports it.

- Use topology-aware rules so volumes bind where the network path stays short.

- Apply QoS controls so one replica group cannot drown out another.

- Test rolling updates under load, and record attach, mount, and warmup times.

- Validate snapshots and restore paths, because recovery drills expose hidden latency cliffs.

Provisioning Patterns Compared for StatefulSet Storage

The table below summarizes the tradeoffs operators see most often. Use it to align business risk (downtime, recovery, cost) with the technical pattern you standardize across clusters.

| Pattern | What it Optimizes For | Typical Risk | Day-2 Ops Effort | Fit for Kubernetes Storage |
|---|---|---|---|---|
| VolumeClaimTemplates + CSI dynamic PVs | Automation and repeatability | Template drift vs existing PVCs | Medium | Strong default for most stateful apps |
| Pre-provisioned PVs + static PVCs | Full control of PV placement | Slower scale-out, manual steps | High | Niche cases with strict placement rules |
| Local PVs tied to nodes | Lowest local latency | Node loss impacts recovery | High | Specific ultra-low-latency, high-touch setups |
| Shared RWX filesystem volumes | Simple sharing across Pods | Contention and noisy neighbors | Medium | Better for shared content, not per-replica data |

Simplyblock™ for Repeatable Stateful Volume Behavior

Simplyblock™ targets the core problem behind most StatefulSet incidents: storage performance that changes during cluster events. It provides Software-defined Block Storage for Kubernetes Storage, and it supports NVMe/TCP to deliver NVMe semantics without turning your network into a science project.

Because simplyblock uses an SPDK-based, user-space design, it can reduce CPU overhead in the data path and keep latency tight under load. That shows up when replicas churn during upgrades or when multiple stateful teams share the same platform. Multi-tenancy and QoS controls also help you enforce fairness, which keeps one “busy” database from flattening every other StatefulSet.

For executives, the business outcome is simpler: fewer storage-driven change windows, fewer performance tickets, and a cleaner SAN alternative story for Kubernetes.

Where This Feature Set Is Headed Next

Kubernetes continues to improve how it expresses storage intent, while CSI vendors expand features around cloning, snapshots, online expansion, and topology intelligence. Expect more automation around resizing workflows and safer updates for stateful fleets.

Offload will also matter more. DPUs and IPUs can take on data-plane work, while the control plane keeps orchestration logic. Storage stacks that already align with user-space, zero-copy principles stand to benefit as those architectures become common in production clusters.

Related Technologies

Teams often review these glossary pages alongside Kubernetes StatefulSet VolumeClaimTemplates when they standardize Kubernetes Storage and Software-defined Block Storage.

- Kubernetes StatefulSet

- Kubernetes StorageClass Parameters

- Container Storage Interface (CSI)

- Storage Quality of Service (QoS)

Questions and Answers

In a Kubernetes StatefulSet, volumeClaimTemplates automatically generate a unique PersistentVolumeClaim for each replica. This ensures each Pod in the StatefulSet gets its own persistent volume, pairing stable storage with the StatefulSet's stable network identity, a requirement for Kubernetes Stateful workloads.

Unlike Deployments, which require manual PVC management, StatefulSets use volumeClaimTemplates to auto-provision a volume per replica. This simplifies scaling and storage isolation, and it works with block storage replacement solutions that support dynamic provisioning.

volumeClaimTemplates work with dynamic provisioning when paired with a compatible StorageClass. Simplyblock’s CSI integration allows automated creation of persistent volumes for each Pod using Kubernetes CSI.

Each PVC created via volumeClaimTemplates is bound to its StatefulSet Pod identity and remains intact even after Pod deletion. This persistence is vital for PostgreSQL on Simplyblock and other stateful databases that require durable storage.

volumeClaimTemplates remove the need for manual PVC declarations by generating one PVC per Pod automatically. This is ideal for Kubernetes Stateful workloads that need predictable storage and identity, such as message queues, caches, and databases.