Kubernetes Volume Attachment

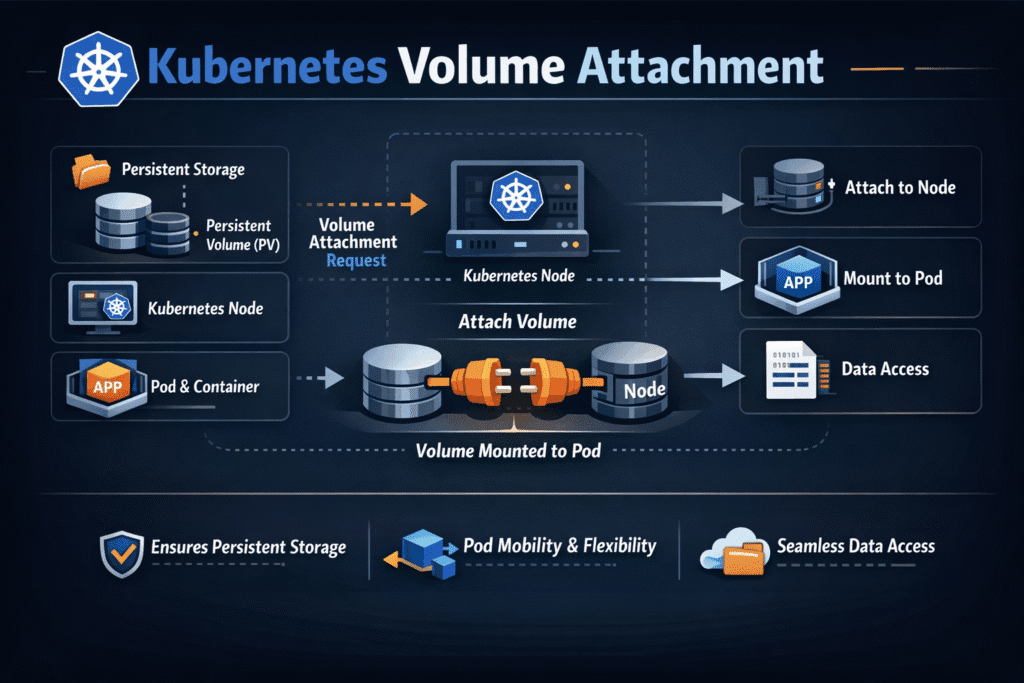

Kubernetes Volume Attachment covers the control-plane steps that connect a persistent volume to a specific node so the kubelet can mount it into a pod. When the attach path runs clean, stateful pods start fast, restarts stay boring, and rolling upgrades finish on time. When the attach path drags, pods sit in ContainerCreating, error rates rise, and on-call teams chase “storage is slow” tickets that really trace back to lifecycle timing.

Kubernetes Storage teams usually care about two things here. They want a predictable time from “pod scheduled” to “volume usable,” and they want attach behavior that stays stable during node drains, upgrades, and autoscaling. Software-defined Block Storage helps because it can keep the data path steady while the cluster moves workloads around.

How Kubernetes Decides to Attach a Volume

The scheduler picks a node for the pod. Next, Kubernetes checks whether the volume needs an attach step for that driver and access mode. If it does, the control plane coordinates an attachment to the chosen node. After that, the kubelet and the CSI node plugin handle the node-side work, such as staging and mounting.
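
The decision described above can be sketched in a few lines of Python. This is a simplified illustration, not Kubernetes source code: `lifecycle_steps` and `has_staging` are hypothetical names, and the only real field modeled is `attachRequired` on the CSIDriver object, which is treated as true when omitted.

```python
def lifecycle_steps(csi_driver_spec: dict, has_staging: bool = True) -> list:
    """Return the ordered storage steps for a pod that already has a node.

    csi_driver_spec mimics the `spec` of a CSIDriver object. Only the
    `attachRequired` field is read; it is assumed True when missing.
    has_staging is a hypothetical flag for drivers that use a staging step.
    """
    steps = []
    if csi_driver_spec.get("attachRequired", True):
        # Control-plane step: connect the volume to the chosen node.
        steps.append("attach")
    if has_staging:
        # Node-side step: prepare the volume once on the node.
        steps.append("stage")
    # Node-side step: mount the volume into the pod.
    steps.append("mount")
    return steps


print(lifecycle_steps({"attachRequired": True}))   # ['attach', 'stage', 'mount']
print(lifecycle_steps({"attachRequired": False}))  # ['stage', 'mount']
```

Drivers that report no attach requirement skip the control-plane step entirely, so their startup time depends only on node-side work.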

This flow sounds simple, but it has sharp edges. A single-writer block volume must detach cleanly before it can attach elsewhere. A busy node can also slow the kubelet’s volume work, even when the storage backend has plenty of headroom. Good platforms treat attach time as a first-class SLO, not as a hidden “startup detail.”
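
The single-writer rule can be illustrated with a toy model. The class and exception names below are hypothetical and exist only for this sketch; real enforcement happens in the Kubernetes control plane and the storage driver.

```python
class MultiAttachError(Exception):
    """Raised when a single-writer volume is still attached elsewhere."""


class SingleWriterAttachments:
    """Toy model: each volume may be attached to at most one node."""

    def __init__(self) -> None:
        self._attached_to = {}  # volume name -> node name

    def attach(self, volume: str, node: str) -> None:
        current = self._attached_to.get(volume)
        if current is not None and current != node:
            # The old node has not released the volume yet.
            raise MultiAttachError(
                f"{volume} is still attached to {current}; detach it first"
            )
        self._attached_to[volume] = node

    def detach(self, volume: str, node: str) -> None:
        # Only the node that holds the volume can release it.
        if self._attached_to.get(volume) == node:
            del self._attached_to[volume]


tracker = SingleWriterAttachments()
tracker.attach("pv-db", "node-a")
try:
    tracker.attach("pv-db", "node-b")  # rejected: node-a still holds it
except MultiAttachError as err:
    print(err)
tracker.detach("pv-db", "node-a")
tracker.attach("pv-db", "node-b")      # succeeds after a clean detach
```

The time between the rejected attach and the successful one is the detach wait, which is why failover speed depends on how quickly the old node lets go.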

Kubernetes Volume Attachment vs Mount and Publish

Kubernetes Volume Attachment ends when the platform finishes the node-level connect step and marks the volume ready for node-side use. Mounting comes next. The kubelet asks the CSI node plugin to publish the volume into the pod’s target path. If your driver uses a staging step, it stages once per node and then publishes per pod.
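
The "stage once per node, publish per pod" behavior can be modeled with a small counter. This is a sketch under assumptions: `NodeVolumeState` and `mount_for_pod` are hypothetical names, and the counters stand in for the CSI `NodeStageVolume` and `NodePublishVolume` calls a real node plugin would receive.

```python
class NodeVolumeState:
    """Toy model of node-side volume work on a single node."""

    def __init__(self) -> None:
        self.staged = set()      # volumes staged on this node
        self.published = set()   # (volume, pod) pairs published
        self.stage_calls = 0     # stands in for NodeStageVolume calls
        self.publish_calls = 0   # stands in for NodePublishVolume calls

    def mount_for_pod(self, volume: str, pod: str) -> None:
        if volume not in self.staged:
            # Staging happens once per volume on this node.
            self.staged.add(volume)
            self.stage_calls += 1
        if (volume, pod) not in self.published:
            # Publishing happens once for every pod that uses the volume.
            self.published.add((volume, pod))
            self.publish_calls += 1


node = NodeVolumeState()
node.mount_for_pod("pv-shared", "pod-1")
node.mount_for_pod("pv-shared", "pod-2")
print(node.stage_calls, node.publish_calls)  # 1 2
```

Two pods on the same node that use the same volume trigger one staging step and two publish steps in this model.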

This split matters for troubleshooting. Attach issues often look like “volume not ready,” while mount issues look like “target path not found” or repeated publish retries. Teams shorten outages when they log and alert on attach time and publish time separately.

Signals That Show Attach Trouble Early

Attach trouble shows up as time, not just as errors. Watch the delay from PodScheduled to ContainersReady, and break it into storage phases. Pay attention to these patterns:

One node pool shows slow attaches after a kernel or NIC change. Another cluster shows multi-attach conflicts during failover. A third environment shows long detach waits during rolling upgrades because it runs close to capacity. Each pattern points to a different fix, and none of them improves with “more IOPS” alone.
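
As a starting point, the overall delay can be computed from pod status conditions. The sketch below assumes the conditions have already been fetched as a list of dictionaries with `type`, `status`, and `lastTransitionTime` fields; the function names and sample timestamps are illustrative. Splitting the total into attach and mount phases needs additional sources such as events or driver metrics, which this example does not cover.

```python
from datetime import datetime


def condition_times(conditions: list) -> dict:
    """Map each condition that is True to its transition timestamp."""
    times = {}
    for cond in conditions:
        if cond.get("status") == "True":
            # Normalize the trailing "Z" so fromisoformat accepts it.
            stamp = cond["lastTransitionTime"].replace("Z", "+00:00")
            times[cond["type"]] = datetime.fromisoformat(stamp)
    return times


def scheduled_to_ready_seconds(conditions: list):
    """Seconds from PodScheduled to ContainersReady, or None if not ready."""
    times = condition_times(conditions)
    if "PodScheduled" not in times or "ContainersReady" not in times:
        return None
    return (times["ContainersReady"] - times["PodScheduled"]).total_seconds()


# Illustrative sample data, not taken from a real cluster.
sample = [
    {"type": "PodScheduled", "status": "True",
     "lastTransitionTime": "2024-05-01T10:00:00Z"},
    {"type": "ContainersReady", "status": "True",
     "lastTransitionTime": "2024-05-01T10:00:42Z"},
]
print(scheduled_to_ready_seconds(sample))  # 42.0
```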

Where Kubernetes Volume Attachment Slows Down in Kubernetes Storage

Kubernetes Volume Attachment slows down for a few repeatable reasons. Node pressure can starve kubelet threads. The attach controller can queue requests when many pods restart at once. A driver can serialize work too much, or it can open too many parallel calls and overload the node. Networked backends can add delay when discovery and reconnect settings vary across nodes.

Workload design can also trigger slow paths. Stateful systems that restart many replicas at once can create attach storms. If a platform lacks clear QoS, background rebuild work can also inflate attach and mount time for unrelated services. Software-defined Block Storage with multi-tenancy controls helps keep those spikes contained.

Kubernetes Volume Attachment with NVMe/TCP on Ethernet

NVMe/TCP can improve the feel of volume lifecycle events because it provides NVMe semantics over standard Ethernet and supports high performance without specialized fabrics. In disaggregated Kubernetes Storage setups, NVMe/TCP also helps keep compute and storage lifecycles separate. That separation reduces the chance that a node upgrade forces a storage migration at the same time.

NVMe/TCP does not fix bad settings, though. If node images differ on multipath, timeouts, or reconnect behavior, attach and reattach can still churn. A clean platform standard makes the attach path repeatable. A CPU-efficient data path also helps, because it leaves headroom for kubelet work during busy periods.

Benchmarks That Catch Attachment Drag Before Production

A useful benchmark includes lifecycle timing, not only throughput. Run load that matches the app, then trigger the same events that cause pain: rolling updates, node drains, and reschedules. Capture the full curve: baseline, disruption, and recovery.

Track these outcomes as plain numbers that teams can agree on: attach time p95, attach time p99, mount time p95, mount time p99, and “scheduled to ready” time. If those numbers stay flat as you scale replicas, the platform can handle real change windows.
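
One way to turn raw timings into those numbers is a nearest-rank percentile. The helper below is a minimal sketch; the sample attach times are made up for illustration, and other percentile definitions (for example, interpolated ones) give slightly different values.

```python
import math


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Illustrative attach times in seconds; one slow outlier.
attach_seconds = [2.1, 2.4, 2.2, 3.0, 2.3, 9.8, 2.5, 2.2, 2.6, 2.4]
print(percentile(attach_seconds, 50))  # 2.4
print(percentile(attach_seconds, 95))  # 9.8
```

In this sample the median looks healthy while p95 exposes the outlier, which is why tail percentiles say more about change windows than averages do.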

Fast Fixes That Reduce Attach Wait Time

- Set rollout rules that limit how many stateful pods restart at once, and avoid attach storms.

- Reserve CPU for kubelet and the CSI node plugin so node pressure does not block volume work.

- Standardize node NVMe/TCP settings so every node behaves the same during reconnect and failover.

- Use clear QoS controls so rebuilds and bulk jobs do not inflate attach and mount time for critical services.

- Validate access mode choices so single-writer volumes do not hit multi-attach conflicts during failover.
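
The first item in the list can be reasoned about with a simple batching sketch. `restart_batches` is a hypothetical helper for planning; in a real cluster the limit comes from the rollout settings of the workload controller rather than from code like this.

```python
def restart_batches(pods: list, max_parallel: int) -> list:
    """Split pods into restart batches of at most max_parallel pods each."""
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    return [pods[i:i + max_parallel] for i in range(0, len(pods), max_parallel)]


replicas = ["db-0", "db-1", "db-2", "db-3", "db-4"]
for batch in restart_batches(replicas, max_parallel=2):
    # Each batch creates at most two concurrent attach requests.
    print(batch)
```

With five replicas and a limit of two, the rollout needs three batches, and no batch asks the attach path to handle more than two volumes at once.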

Attachment Patterns Compared for Stateful Workloads

The table below compares common patterns that influence attach timing and operational risk.

| Pattern | What you gain | What can go wrong | Best fit |
|---|---|---|---|
| Networked block volumes with strict single-writer mode | Clear data safety, simple app model | Detach waits can slow failover | Databases, queues, and leaders |
| Local disks bound to a node | Very low local latency | Node loss complicates recovery | Caches, scratch, and edge cases |
| Shared filesystem volumes | Easy sharing across pods | Contention and noisy neighbors | Shared content, build outputs |
| NVMe/TCP-backed Software-defined Block Storage | Fast networked block I/O and flexible placement | Node config drift can add churn | Large clusters with frequent change |

Simplyblock™ for Predictable Attach and Restart Windows

Simplyblock™ focuses on steady behavior during cluster change. It delivers Software-defined Block Storage for Kubernetes Storage and supports NVMe/TCP for high-performance volumes on Ethernet. That combination helps teams keep attach timing stable as they scale nodes and workloads.

Simplyblock also targets efficiency in the data path. When the platform wastes fewer CPU cycles on storage I/O, kubelet and CSI tasks can run without getting squeezed during busy periods. Multi-tenancy and QoS controls add another layer of safety by keeping background work from spilling into stateful startup and failover paths.

What Changes Next in Volume Attach and Scheduling Signals

Kubernetes continues to improve storage scheduling signals and driver reporting so the scheduler makes better placement choices for stateful pods.

The CSI ecosystem also keeps pushing clearer lifecycle tracking and better visibility into attach and detach steps. Those changes should reduce “mystery delays,” especially in large clusters where attach capacity and node churn intersect.

Related Terms

Teams often review these glossary pages alongside Kubernetes Volume Attachment when they standardize Kubernetes Storage and Software-defined Block Storage.

- CSI Driver vs Sidecar

- Kubelet Volume Manager

- Static Volume Provisioning

- AccessModes in Kubernetes Storage

- Kubernetes Volume Mount Options

Questions and Answers

Kubernetes Volume Attachment is the process of binding a PersistentVolume to a specific node before it can be mounted by a pod. The Kubernetes control plane coordinates this step with the CSI driver, which attaches the volume and makes it accessible on that node.

The VolumeAttachment object tracks the binding of a volume to a node. It is automatically created by the Kubernetes control plane when using CSI drivers, enabling transparent management for Kubernetes Stateful workloads that rely on persistent storage.
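
A VolumeAttachment object can be read programmatically to see where an attach stands. The sketch below assumes the object has already been loaded as a dictionary (for example, from JSON output) and uses the `spec.nodeName`, `spec.source.persistentVolumeName`, `status.attached`, and `status.attachError.message` fields of the `storage.k8s.io/v1` API. The function name, the driver name `csi.example.com`, and the sample values are hypothetical.

```python
def summarize_attachment(va: dict) -> str:
    """Return a one-line summary of a VolumeAttachment dictionary."""
    spec = va.get("spec", {})
    status = va.get("status", {})
    volume = spec.get("source", {}).get("persistentVolumeName", "<unknown>")
    node = spec.get("nodeName", "<unknown>")
    if status.get("attached"):
        return f"{volume} attached to {node}"
    error = (status.get("attachError") or {}).get("message")
    if error:
        return f"{volume} failed to attach to {node}: {error}"
    return f"{volume} attach to {node} pending"


# Illustrative object; all values are made up.
example = {
    "spec": {
        "attacher": "csi.example.com",
        "nodeName": "node-a",
        "source": {"persistentVolumeName": "pv-db"},
    },
    "status": {"attached": False},
}
print(summarize_attachment(example))  # pv-db attach to node-a pending
```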

Attachment can fail due to node taints, zone mismatches, or CSI driver errors. Monitoring and debugging these failures is essential in environments using block storage replacement to ensure storage resilience and availability.

When a pod is moved to another node, Kubernetes safely detaches the volume from the old node before reattaching it. This handoff is secured via the Kubernetes CSI mechanism and is key to maintaining data consistency.

Platforms like PostgreSQL on Simplyblock rely on fast and reliable volume attachment for consistent performance. Using zone-aware scheduling and CSI features helps reduce latency during attachment operations.