Red Hat OpenShift Container Platform


Red Hat OpenShift Container Platform adds enterprise controls on top of Kubernetes, with a strong focus on lifecycle automation, policy, and security. Teams often standardize on it when they want one platform for many clusters, many teams, and strict change control. That choice impacts storage more than most buyers expect, because stateful apps stress every part of the stack during upgrades, node drains, and scale events.

If you run databases, analytics, CI artifact stores, or AI pipelines, you will feel the storage layer first. The platform can ship patches fast, but your apps still need stable I/O and predictable recovery. Kubernetes Storage and Software-defined Block Storage play a central role here, because they let you keep storage policy in code and keep operations repeatable across clusters.

Operating model fit – why platform teams pick it

OpenShift uses operators and guardrails to reduce day-two toil. That approach helps most when you apply it to storage, because storage touches every namespace and every SLO. A good design keeps your storage policies clear, your failure domains well-defined, and your upgrades boring.

Executives usually care about risk and cost. OpenShift reduces risk when teams follow one platform standard, one policy model, and one support path. Cost still rises when storage performance drifts, because teams then overbuy nodes and overprovision volumes to mask latency spikes.


Red Hat OpenShift Container Platform and Kubernetes Storage choices

Red Hat OpenShift Container Platform supports CSI-driven persistent volumes, so storage vendors and platform teams can plug in block, file, or object services through Kubernetes APIs. In practice, most performance issues come from how teams map workloads to StorageClasses, not from the YAML itself. When one StorageClass places many unrelated workloads in the same pool, noisy neighbors show up fast, and p99 latency climbs.
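One way to keep the workload-to-StorageClass mapping explicit is to generate the manifests from a small tier table. The sketch below is only an illustration: the provisioner name `csi.example.com`, the tier names, and the `pool` and `qosMaxIops` parameter keys are hypothetical placeholders, not the parameters of any real CSI driver. Check your driver's documentation for the keys it actually accepts.

```python
import json

# Hypothetical provisioner name; replace with the one your CSI driver registers.
PROVISIONER = "csi.example.com"

# Hypothetical tiers. StorageClass parameter values must be strings.
TIERS = {
    "db-low-latency": {"pool": "nvme-critical", "qosMaxIops": "20000"},
    "general-purpose": {"pool": "nvme-shared", "qosMaxIops": "5000"},
}


def storage_class(name, provisioner, tier_params):
    """Build a StorageClass manifest (storage.k8s.io/v1) as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": dict(tier_params),
        "reclaimPolicy": "Delete",
        # Delay binding until a pod is scheduled, so placement can
        # take node topology into account.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


classes = [storage_class(name, PROVISIONER, params) for name, params in TIERS.items()]

if __name__ == "__main__":
    # Emit one JSON manifest per tier for review or for applying with the cluster CLI.
    for manifest in classes:
        print(json.dumps(manifest, indent=2))
```

Keeping one class per latency tier makes it obvious in review when a critical database claim points at a general-purpose pool.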

Software-defined Block Storage can reduce that drift. It lets you define tiers, enforce QoS, and scale storage without tying the outcome to a single SAN array. It also fits OpenShift well when you run on bare metal and want predictable latency with fewer moving parts than legacy arrays.

Red Hat OpenShift Container Platform with NVMe/TCP for low-latency paths

Red Hat OpenShift Container Platform often runs in mixed networks and mixed hardware fleets, so teams need a storage transport that works on standard Ethernet. NVMe/TCP fits that model. It delivers NVMe semantics over TCP, which helps you keep latency low without forcing specialized fabrics in every site.

An SPDK-based datapath matters in these designs. User-space I/O can cut CPU overhead in the storage path, which helps when you run dense nodes and want to keep more cores for apps. That combination supports Kubernetes Storage at scale, with a clean path to disaggregated layouts, DPUs, and strict QoS.

Red Hat OpenShift Container Platform performance checks that teams trust

Measure outcomes, not hopes. Start with attach time, mount time, and reschedule behavior during a node drain. Then measure I/O with a profile that matches your apps. Use mixed read/write tests, realistic block sizes, and fixed queue depth. Track p50, p95, and p99 latency over time, and correlate spikes with upgrade windows, rebuild activity, and tenant contention.
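As a rough sketch of the percentile tracking described above, the snippet below computes p50, p95, and p99 from raw latency samples using the nearest-rank method and groups them by measurement window. The window labels and sample values are made up for illustration; in practice the samples would come from your benchmark tool's per-I/O latency log.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(samples_ms):
    """Return p50, p95, and p99 for one measurement window."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}


def summarize_windows(windows):
    """Summarize several labeled windows so spikes can be tied to events."""
    return {label: latency_summary(samples) for label, samples in windows.items()}


if __name__ == "__main__":
    # Illustrative latencies in milliseconds, not measured data.
    windows = {
        "steady-state": [0.9, 1.0, 1.1, 1.2, 1.0],
        "node-drain": [1.0, 1.2, 0.9, 1.1, 9.0],
    }
    for label, summary in summarize_windows(windows).items():
        print(label, summary)
```

Comparing the same percentiles across labeled windows makes it easier to see whether a p99 spike lines up with an upgrade, a rebuild, or tenant contention.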

Also, validate the control plane signals. If pods wait on volume binding, you have a placement or topology issue. If pods start fast but latency spikes later, you likely have pool contention, network headroom limits, or weak QoS.
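The two signals above can be turned into a simple first-pass triage rule. This is a minimal sketch under stated assumptions: the function name, the 30-second binding budget, and the 2x spike factor are hypothetical defaults chosen for illustration, not values recommended by OpenShift or any storage vendor.

```python
def classify_storage_symptom(binding_wait_s, baseline_p99_ms, later_p99_ms,
                             binding_budget_s=30.0, spike_factor=2.0):
    """Map observed signals to the likely problem area.

    binding_wait_s:   how long the pod waited for its volume to bind
    baseline_p99_ms:  p99 latency shortly after the pod started
    later_p99_ms:     p99 latency observed later under load
    """
    # Slow binding points at placement or topology, before any I/O happens.
    if binding_wait_s > binding_budget_s:
        return "placement-or-topology"
    # Fast start but a later spike points at contention, network headroom, or QoS.
    if later_p99_ms > spike_factor * baseline_p99_ms:
        return "contention-network-or-qos"
    return "ok"


if __name__ == "__main__":
    print(classify_storage_symptom(120.0, 1.0, 1.0))
    print(classify_storage_symptom(2.0, 1.0, 5.0))
    print(classify_storage_symptom(2.0, 1.0, 1.5))
```

A rule like this only narrows the search; the thresholds need tuning against your own baselines.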

Practical ways to improve steady performance

- Define separate StorageClasses for distinct latency tiers, and keep critical databases off general-purpose pools.

- Enforce QoS per tenant or per workload so one job cannot consume the whole queue.

- Match replication and failure domains to business RPO and RTO, and keep the policy consistent across clusters.

- Validate network headroom for NVMe/TCP during peak hours, not just in a lab window.

- Re-test during upgrades and node drains, because those events expose hidden bottlenecks fast.
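To illustrate the per-tenant QoS point in the list above, here is a minimal token-bucket sketch that caps each tenant's I/O rate independently. It is a generic rate-limiting technique shown for intuition only, not a description of how any particular storage product enforces QoS; the class name, tenant names, and limits are hypothetical.

```python
class TokenBucket:
    """Admit requests at up to rate_iops per second, with a bounded burst."""

    def __init__(self, rate_iops, burst):
        self.rate_iops = float(rate_iops)
        self.burst = float(burst)
        self.tokens = float(burst)  # start with a full bucket
        self.last_ts = 0.0

    def allow(self, now, cost=1.0):
        """Return True if a request arriving at time `now` (seconds) is admitted."""
        elapsed = max(0.0, now - self.last_ts)
        # Refill for the elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_iops)
        self.last_ts = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def admitted(bucket, now, requests):
    """Count how many of `requests` back-to-back I/Os are admitted at time `now`."""
    return sum(1 for _ in range(requests) if bucket.allow(now))


if __name__ == "__main__":
    # One bucket per tenant, so a noisy tenant only drains its own budget.
    buckets = {"batch": TokenBucket(rate_iops=2, burst=5),
               "db": TokenBucket(rate_iops=2, burst=5)}
    print("batch at t=0:", admitted(buckets["batch"], 0.0, 10))
    print("db at t=0:", admitted(buckets["db"], 0.0, 3))
    print("batch at t=1:", admitted(buckets["batch"], 1.0, 10))
```

Because each tenant has its own bucket, the batch job's burst is throttled without affecting the database tenant's requests.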

Comparison table – OpenShift storage options side by side

The table below compares common storage approaches teams use with OpenShift, with an emphasis on operational friction and performance control.

| Approach | Strength | Trade-off | Best fit |
|---|---|---|---|
| OpenShift Data Foundation style platform storage | Tight platform integration | Can add resource overhead and tuning work | Teams that want one integrated stack |
| Cloud block CSI volumes | Fast to start, easy procurement | Costs and throughput caps can surprise at scale | Small to mid clusters, bursty workloads |
| Legacy SAN with CSI | Familiar ops model for some orgs | Slow change control, rigid scaling, and latency variance | Sites with existing SAN contracts |
| Software-defined Block Storage over NVMe/TCP | Strong latency control, flexible layouts | Needs clear tier and QoS design | Databases, analytics, multi-tenant platforms |

Predictable OpenShift storage outcomes with simplyblock™

Simplyblock supports Kubernetes Storage on OpenShift with NVMe/TCP and Software-defined Block Storage, so platform teams can keep performance policy consistent across clusters. It supports hyper-converged, disaggregated, and mixed layouts, which helps when you standardize OpenShift across sites that do not share the same server profile.

The SPDK-based datapath helps reduce CPU overhead in the storage path, which preserves cores for application threads and lowers latency variance under load. Multi-tenancy and QoS controls help keep noisy neighbors from dominating shared queues, so stateful workloads behave the same during normal traffic, node drains, and rolling upgrades.

OpenShift storage roadmap signals to plan around

Platform teams want fewer surprises during upgrades and a clearer link between policy and I/O behavior. Expect more emphasis on topology-aware placement, stronger observability for CSI paths, and wider use of NVMe/TCP as disaggregated designs become the default for larger clusters.

More organizations will also push for consistent QoS across tenants and namespaces, because shared OpenShift clusters keep growing in scope. Storage stacks that can express performance tiers as code and enforce them at runtime will fit that direction best.

Related Terms

Teams often review these glossary pages alongside Red Hat OpenShift Container Platform when they standardize storage for regulated, multi-team clusters.

- OpenShift Container Storage

- Kubernetes Block Storage

- Software-Defined Storage (SDS)

- OpenShift Data Foundation vs Ceph

- OpenShift CSI Driver Operator

- OpenShift Elastic Block Storage Integration

- OpenShift StorageClass Templates

Questions and Answers

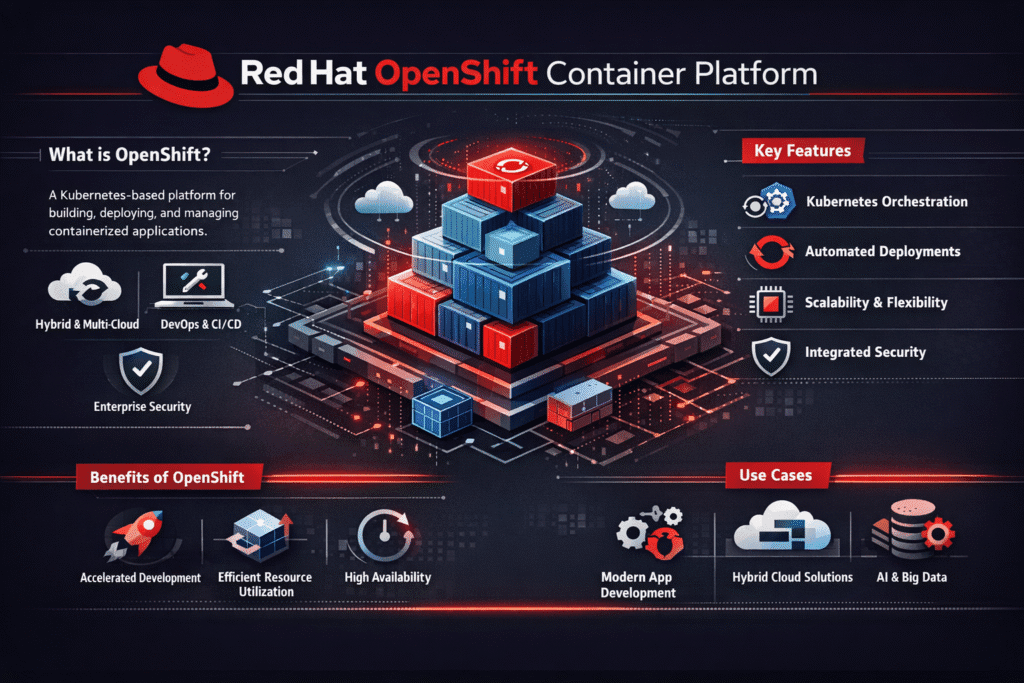

What is OpenShift, and how does it differ from vanilla Kubernetes?

OpenShift is a Kubernetes-based enterprise platform that adds features like built-in CI/CD, developer tooling, and tighter security policies. Unlike vanilla Kubernetes, OpenShift includes opinionated defaults and enterprise support. It’s frequently used with persistent storage solutions that meet strict production SLAs.

Does OpenShift support CSI-based storage?

Yes. OpenShift fully supports CSI drivers via the Kubernetes Container Storage Interface. Solutions like Simplyblock integrate through Kubernetes-native CSI support, allowing dynamic volume provisioning, snapshots, and topology-aware storage within OpenShift clusters.

How does OpenShift handle persistent storage compared with plain Kubernetes?

OpenShift uses Kubernetes StorageClasses and PVCs, but includes stricter security constraints like SELinux labels and SCCs (Security Context Constraints). Storage solutions must support these features to run effectively in OpenShift, making compliant CSI integration essential.

Is Simplyblock compatible with stateful workloads on OpenShift?

Yes. Simplyblock’s CSI-based platform is compatible with OpenShift’s dynamic provisioning workflows and meets the needs of containerized stateful workloads like PostgreSQL and MongoDB running inside OpenShift environments.

Can OpenShift provide secure, multi-tenant storage?

Absolutely. OpenShift supports encrypted storage, volume-level access controls, and SELinux-based isolation. When used with providers like Simplyblock, you can enable encryption at rest and enforce multi-tenant volume security without extra tooling.