Static Volume Provisioning


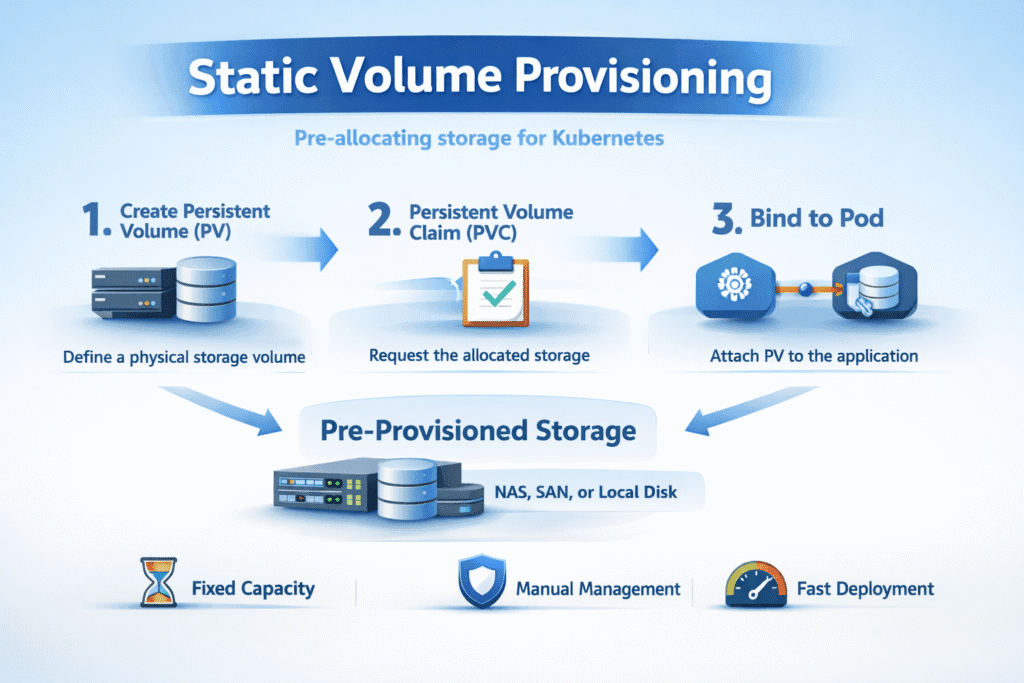

Static Volume Provisioning means a platform team creates a PersistentVolume (PV) in advance, and Kubernetes later binds an app’s PersistentVolumeClaim (PVC) to that pre-created capacity.

This pattern fits regulated environments, migration projects, and shared clusters where teams require approved storage pools, fixed placement, and consistent reclaim behavior. Static Volume Provisioning does not guarantee performance on its own, as the data path, isolation controls, and transport determine latency and throughput in Kubernetes Storage.

Static Volume Provisioning Operations That Scale Beyond Hand-Built PVs

Static PVs work best when teams manage them as governed inventory, not one-off YAML. A small set of PV templates, clear tier labels, and automated validation reduce drift. This approach also supports Software-defined Block Storage, where many workloads share back-end resources, and the platform team must enforce fair use.

Strong ops hygiene starts with explicit reclaim policies, consistent naming, and a clear split between “reserved” volumes and “general” volumes. Many teams keep static PVs for the highest control tier and use dynamic workflows for everything else, because automation removes most manual binding issues.


How Static PV Binding Behaves in Kubernetes Storage

Kubernetes binds a PVC to a PV by matching requested capacity, access modes, storage class name, volume mode, and any label selector on the claim. Static provisioning shifts responsibility to the admin, so every mismatch creates operational load for the platform team. Reclaim behavior also matters more in static setups, because teams often keep data after release to support audits, restores, or migrations.

Most production clusters still use CSI-based attachment and mount behavior behind the scenes. CSI keeps the interface stable across backends, and it reduces custom node-side scripting.
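The matching rules in this section can be modeled roughly as follows. This is a simplified sketch for reasoning about mismatches before applying manifests, not the controller's actual implementation: it ignores node affinity, pre-bound `claimRef` handling, `matchExpressions`, and most quantity formats, and it treats a missing `storageClassName` as an empty string. The function names and the sample objects are hypothetical.

```python
# Simplified model of PV/PVC matching. Assumption: only binary suffixes
# (Ki, Mi, Gi, Ti) or plain byte counts appear in the manifests.
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse a small subset of Kubernetes quantities, e.g. '100Gi' or '1024'."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def mismatches(pv: dict, pvc: dict) -> list[str]:
    """Return the reasons this PV cannot satisfy this PVC (empty list means a match)."""
    reasons = []
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]

    have = to_bytes(pv_spec["capacity"]["storage"])
    want = to_bytes(pvc_spec["resources"]["requests"]["storage"])
    if have < want:
        reasons.append("capacity too small")

    # Every access mode the claim asks for must be offered by the volume.
    if not set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"]):
        reasons.append("access modes not offered")

    if pv_spec.get("storageClassName", "") != pvc_spec.get("storageClassName", ""):
        reasons.append("storageClassName differs")

    if pv_spec.get("volumeMode", "Filesystem") != pvc_spec.get("volumeMode", "Filesystem"):
        reasons.append("volumeMode differs")

    wanted = pvc_spec.get("selector", {}).get("matchLabels", {})
    labels = pv.get("metadata", {}).get("labels", {})
    if any(labels.get(key) != value for key, value in wanted.items()):
        reasons.append("selector labels differ")

    return reasons


# Hypothetical objects: a 100Gi gold-tier PV and a claim that asks for 200Gi.
example_pv = {
    "metadata": {"labels": {"tier": "gold"}},
    "spec": {
        "capacity": {"storage": "100Gi"},
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "",
    },
}
example_pvc = {
    "spec": {
        "resources": {"requests": {"storage": "200Gi"}},
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "",
        "selector": {"matchLabels": {"tier": "gold"}},
    },
}

print(mismatches(example_pv, example_pvc))  # ['capacity too small']
```

Returning a list of reasons instead of a boolean makes the check usable in a pipeline: a PVC that would sit in Pending can be rejected with a specific message before it reaches the cluster.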

Static PVs on NVMe/TCP for SAN Alternatives

Static provisioning pairs well with NVMe/TCP when the organization wants a SAN alternative on standard Ethernet and still needs deterministic volume identity. Teams can pre-map namespaces, apply strict access controls, and assign PVs that match each workload tier and tenant boundary.

NVMe/TCP also supports disaggregated architectures, where compute and storage scale independently. That pattern matters for database fleets and streaming platforms that outgrow node-local disks. With the right backend, this design improves utilization, reduces storage overbuy, and keeps scaling predictable.

Performance Signals That Matter for Static Volume Provisioning

Static provisioning includes a control-plane flow and a data-plane flow. The control plane covers PVC-to-PV binding time, attach time, and mount time. The data plane covers IOPS, bandwidth, and tail latency under contention.

Most teams track three numbers per tier: steady throughput, p95 latency, and p99 latency. They also track noisy-neighbor behavior, because contention in a shared pool undermines predictability sooner than raw bandwidth limits do. Many teams use fio for block testing, since it makes queue depth, block size, and read/write mix explicit.
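To make the p95 and p99 figures concrete, the sketch below computes them with the nearest-rank method. The latency samples are synthetic and chosen only for illustration; they are not measurements from any system, and the function name is hypothetical. Other percentile definitions (for example, interpolating ones) give slightly different values on small samples.

```python
def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range 1..100")
    ordered = sorted(samples)
    # Integer ceiling of (pct / 100) * n, which avoids floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


# Synthetic latencies in milliseconds: mostly fast, with a small slow tail.
latencies_ms = [0.2] * 90 + [0.5] * 5 + [2.0] * 4 + [10.0]

mean_ms = sum(latencies_ms) / len(latencies_ms)
p95_ms = percentile(latencies_ms, 95)
p99_ms = percentile(latencies_ms, 99)
print(f"mean={mean_ms:.3f} ms  p95={p95_ms} ms  p99={p99_ms} ms")
```

In this made-up sample the mean stays under 0.4 ms while p99 is 2.0 ms, which is the kind of gap that averages hide and that per-tier reporting is meant to expose.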

Practical Tuning Levers for Faster Static PV Outcomes

Static PVs often fail on consistency, not peak speed. Teams get better results when they standardize PV definitions and align them to workload classes.

Use this short checklist to reduce variance without adding process overhead:

- Standardize PV templates with labels for tier, topology, and reclaim policy.

- Keep node coupling rare, and avoid node-local paths for critical state unless the failure model fits.

- Enforce I/O isolation with per-tenant QoS to prevent one namespace from consuming the entire pool.

- Benchmark with realistic queue depth and report p99, not only averages.

- Validate the full path, including network MTU, multipathing choices, and CPU overhead in the I/O stack.
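Parts of the checklist above can be enforced automatically before a PV manifest is applied. The sketch below is one possible lint step, assuming manifests are already loaded as Python dictionaries. The label keys under `example.com/` are hypothetical placeholders for whatever convention a platform team adopts, and the rule set is deliberately small.

```python
# Hypothetical label keys; replace them with the team's own convention.
REQUIRED_LABELS = (
    "example.com/tier",
    "example.com/topology",
    "example.com/reclaim",
)
ALLOWED_RECLAIM = {"Retain", "Delete"}


def lint_pv(pv: dict) -> list[str]:
    """Return a list of policy problems found in a PersistentVolume manifest."""
    problems = []
    labels = pv.get("metadata", {}).get("labels", {})
    spec = pv.get("spec", {})

    for key in REQUIRED_LABELS:
        if key not in labels:
            problems.append(f"missing label {key}")

    # Require an explicit policy instead of relying on the API default.
    policy = spec.get("persistentVolumeReclaimPolicy")
    if policy not in ALLOWED_RECLAIM:
        problems.append("reclaim policy must be set explicitly to Retain or Delete")
    elif labels.get("example.com/reclaim") not in (None, policy):
        problems.append("reclaim label disagrees with spec")

    # hostPath and local volume sources tie the data to a single node.
    if "hostPath" in spec or "local" in spec:
        problems.append("node-coupled volume source; confirm the failure model")

    return problems


# Hypothetical manifest that is missing the topology label and uses hostPath.
candidate = {
    "metadata": {
        "name": "pv-gold-001",
        "labels": {"example.com/tier": "gold", "example.com/reclaim": "Retain"},
    },
    "spec": {
        "capacity": {"storage": "100Gi"},
        "persistentVolumeReclaimPolicy": "Retain",
        "hostPath": {"path": "/mnt/data"},
    },
}

for problem in lint_pv(candidate):
    print(problem)
```

A check like this fits naturally in CI, where a non-empty result fails the merge request that introduces the PV.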

Static vs Dynamic vs Node-Local – What Changes in Practice

The table below contrasts the operational and performance impact of the common provisioning models, since many teams mix them inside one cluster.

| Factor | Static Volume Provisioning | Dynamic Provisioning | Node-Local (HostPath / local disk) |
|---|---|---|---|
| Admin control | High | Policy-driven | High, but risky |
| Automation | Low | High | Medium |
| Portability | High with CSI | High with CSI | Low |
| Performance potential | High (backend-dependent) | High (backend-dependent) | High on one node only |
| Primary risk | Drift and manual errors | Misconfigured classes | Node lock-in and security exposure |

Predictable Static PV Tiers with Simplyblock™

Static provisioning becomes easier to defend when the backend delivers stable performance under mixed workloads. Simplyblock focuses on Software-defined Block Storage for Kubernetes Storage, with NVMe/TCP support and an SPDK-based user-space data path that targets lower CPU overhead and tighter latency behavior. PV and PVC workflows remain standard Kubernetes objects, so teams can keep their governance model while improving the data path.

Two capabilities matter most for static PV environments. Multi-tenant isolation and QoS policies help keep “gold” PV tiers steady when other tenants spike traffic. NVMe/TCP enables disaggregated deployments that scale storage without forcing compute refresh cycles.

Where Static Volume Provisioning Is Headed Next

Teams will keep static provisioning for regulated data, migration workloads, and reserved capacity tiers, because those use cases require pre-approval and explicit placement.

Platform teams also treat static PVs as capacity products with tier rules, not as exceptions. Expect tighter policy controls around labels, topology, and reclaim behavior. Expect broader adoption of disaggregated NVMe-oF designs as more teams standardize SAN alternatives on Ethernet.

Related Terms

Teams often review these glossary pages alongside Static Volume Provisioning when they standardize Kubernetes Storage and Software-defined Block Storage tiers.

- Persistent Volume (PV)

- Persistent Volume Claim (PVC)

- Storage Quality of Service (QoS)

- Dynamic Provisioning in Kubernetes

Questions and Answers

Static provisioning is the manual process of pre-creating PersistentVolumes (PVs) and binding them to PersistentVolumeClaims (PVCs). It’s used when external storage already exists or needs tight control, which is common in lift-and-shift scenarios.

Static provisioning is ideal when existing data must be preserved or when external systems require manual volume creation. It’s useful in backup and restore processes or for migrating legacy workloads into Kubernetes, where dynamic provisioning isn’t feasible.

Statically provisioned volumes can be secure and resilient, but this depends on the storage backend. With Simplyblock’s infrastructure, even statically defined volumes can be secured using encryption at rest and integrated into systems that support replication, redundancy, and performance policies.

Static provisioning is not necessarily the better choice. It offers more manual control but increases operational overhead and the risk of human error. For modern, fast-scaling workloads, dynamic CSI provisioning is generally preferred unless legacy systems dictate otherwise.

You must manually create a PersistentVolume and either define a matching claimRef or use selectors to bind it to a PVC. Tools like kubectl and CI/CD pipelines can be used for automation. For specific examples, refer to the Simplyblock CSI deployment guide.
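As a rough illustration of the claimRef approach, the sketch below builds a pre-bound PV and PVC pair and prints them as a JSON list, which kubectl accepts as input alongside YAML. Every name here is a placeholder: the namespace, object names, the `csi.example.com` driver, and the volume handle are assumptions for illustration, not values from the Simplyblock CSI driver or any real cluster.

```python
import json

# Hypothetical names; substitute values from your own environment.
NAMESPACE = "payments"
PV_NAME = "pv-gold-001"
PVC_NAME = "orders-db-data"

pv = {
    "apiVersion": "v1",
    "kind": "PersistentVolume",
    "metadata": {"name": PV_NAME, "labels": {"tier": "gold"}},
    "spec": {
        "capacity": {"storage": "100Gi"},
        "accessModes": ["ReadWriteOnce"],
        "volumeMode": "Filesystem",
        "persistentVolumeReclaimPolicy": "Retain",
        # An empty class on both objects keeps dynamic provisioning out of the way.
        "storageClassName": "",
        # Reserve this PV for exactly one claim.
        "claimRef": {"namespace": NAMESPACE, "name": PVC_NAME},
        # Placeholder CSI source; driver name and handle are not real.
        "csi": {"driver": "csi.example.com", "volumeHandle": "vol-0001"},
    },
}

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": PVC_NAME, "namespace": NAMESPACE},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "volumeMode": "Filesystem",
        "storageClassName": "",
        # Point the claim at the reserved PV by name.
        "volumeName": PV_NAME,
        "resources": {"requests": {"storage": "100Gi"}},
    },
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}
print(json.dumps(manifest, indent=2))
```

Assuming the script is saved as `make_pv.py` (a hypothetical filename) and the namespace already exists, the output could be piped into `kubectl apply -f -`. Setting both `claimRef` on the PV and `volumeName` on the PVC reserves the pair for each other, so no other claim can take the volume first.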