Storage Control Plane

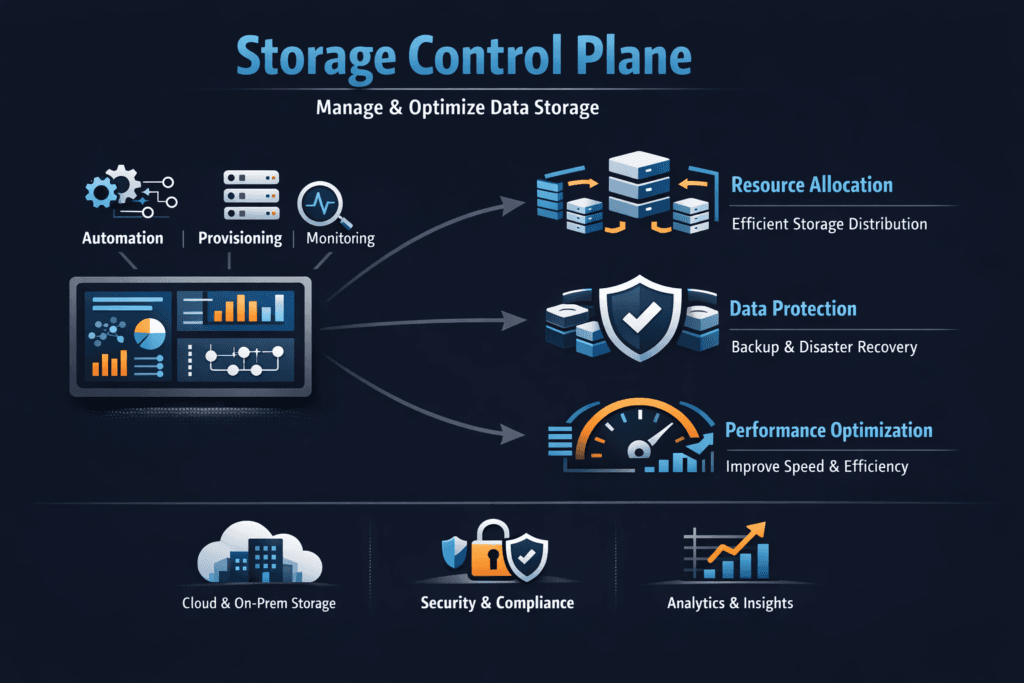

A Storage Control Plane is the “brains” that plans, provisions, and governs storage, while the data plane moves reads and writes. In Kubernetes, the Storage Control Plane decides how a PersistentVolumeClaim maps to a real volume, where that volume should live, how it should replicate, and what policies apply during node churn. This is the layer that turns intent into action.

Leaders usually ask two questions: “Can we scale without outages?” and “Can we keep performance steady when teams share the cluster?” A strong Storage Control Plane answers by enforcing policy, tracking health, and automating lifecycle tasks across Kubernetes Storage and Software-defined Block Storage.

Storage Control Plane upgrades that reduce operational friction

Modern platforms treat storage policy as code. They attach policy to volumes, not to ticket queues. That shift reduces drift between clusters and limits late-night “why did this PVC behave differently?” incidents.

CPU efficiency also matters. Kernel-heavy paths and extra copies waste cycles that apps could use. Storage stacks that pair tight orchestration with SPDK-style, user-space I/O often hold steadier latency under load, especially in multi-tenant environments.

Storage Control Plane in Kubernetes Storage

In Kubernetes Storage, the control plane covers provisioning, topology-aware placement, attach and mount flows, expansion, and snapshots. The CSI framework defines the contract between Kubernetes and storage backends, but your backend determines how cleanly those workflows run in production. If attach times spike during a rollout, the Storage Control Plane often sits at the center of the issue.

For platform teams, three signals summarize control-plane health: provisioning success rate, attach and mount latency, and recovery time after failures. For executives, those signals translate to delivery risk, upgrade risk, and outage risk. When the control plane stays fast and consistent, application teams ship changes without fighting storage.
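As a rough illustration of those three signals, the sketch below reduces a list of volume events to a success rate, a median attach time, and a worst-case recovery time. The `VolumeEvent` record and its field names are hypothetical; a real setup would fill them from whatever metrics or event stream the cluster already exposes.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class VolumeEvent:
    """One provisioning attempt. All field names are hypothetical."""
    provisioned: bool                         # did the claim end up bound to a volume?
    attach_seconds: Optional[float]           # attach + mount time, None if never attached
    recovery_seconds: Optional[float] = None  # time to become usable again after a failure


def summarize(events: list[VolumeEvent]) -> dict[str, float]:
    """Reduce raw events to the three control-plane health signals."""
    if not events:
        raise ValueError("no events to summarize")
    attach = [e.attach_seconds for e in events if e.attach_seconds is not None]
    recovery = [e.recovery_seconds for e in events if e.recovery_seconds is not None]
    return {
        "provisioning_success_rate": sum(e.provisioned for e in events) / len(events),
        "median_attach_seconds": median(attach) if attach else float("nan"),
        "worst_recovery_seconds": max(recovery) if recovery else float("nan"),
    }


# Made-up sample data, purely for illustration.
events = [
    VolumeEvent(True, 4.0),
    VolumeEvent(True, 6.0, recovery_seconds=30.0),
    VolumeEvent(False, None),
    VolumeEvent(True, 5.0, recovery_seconds=45.0),
]
report = summarize(events)
print(report)
```

Tracking the same three numbers per cluster and per release makes regressions visible before they turn into delivery or outage risk.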

Storage Control Plane and NVMe/TCP

NVMe/TCP is the NVMe-oF transport that runs over standard TCP/IP networks, so it works on ordinary Ethernet without RDMA-capable NICs or a dedicated fabric. A Storage Control Plane can use NVMe/TCP pools to deliver SAN-like shared block storage without locking you into a traditional array. The key is how the control plane manages placement, isolation, and rebuild behavior under load.

Many teams standardize on NVMe/TCP for general Kubernetes Storage, then add RDMA only where the latency budget forces it. That approach keeps operations simpler while still giving a path to ultra-low latency tiers. When you combine NVMe/TCP with Software-defined Block Storage, you also gain policy-based volume workflows that scale across clusters.

Measuring and Benchmarking Control Plane Outcomes

Benchmarking should include control-plane timing, not only data-plane throughput. A storage backend can post high IOPS while still causing slow rollouts if provisioning and attach steps lag.

Useful benchmarks track PVC-to-PV bind time under concurrency, attach and mount time during node drains, snapshot and clone completion time at scale, and end-to-end application readiness time after reschedules. Run tests during real cluster events. A calm lab run rarely matches production, because production mixes deployments, autoscaling, and noisy neighbors.
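A minimal sketch of how PVC-to-PV bind time could be summarized once it has been collected. It assumes the test harness has already recorded a creation timestamp and a "reported Bound" timestamp for each claim (for example by watching claim status during the test); the sample timestamps and helper names are made up for illustration.

```python
import math
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # RFC 3339 style UTC timestamps


def bind_seconds(created: str, bound: str) -> float:
    """Seconds between claim creation and the moment it was observed as Bound."""
    delta = datetime.strptime(bound, TIME_FORMAT) - datetime.strptime(created, TIME_FORMAT)
    return delta.total_seconds()


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# (created, bound) pairs recorded by a hypothetical test harness.
samples = [
    ("2024-01-01T10:00:00Z", "2024-01-01T10:00:02Z"),
    ("2024-01-01T10:00:00Z", "2024-01-01T10:00:03Z"),
    ("2024-01-01T10:00:01Z", "2024-01-01T10:00:05Z"),
    ("2024-01-01T10:00:01Z", "2024-01-01T10:00:21Z"),
]
durations = [bind_seconds(created, bound) for created, bound in samples]
p50 = percentile(durations, 50)
p95 = percentile(durations, 95)
print(f"bind time p50={p50}s p95={p95}s")
```

Reporting a high percentile next to the median matters here: a single slow bind during a node drain barely moves the median but is exactly what delays a rollout.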

Tactics that Improve Day-2 Storage Behavior

Most improvements come from reducing variance and removing manual steps. Keep changes simple, measure them, and roll them out through standard templates.

- Standardize StorageClasses with clear defaults for replication, snapshots, and expansion.

- Enforce multi-tenancy with QoS so one namespace cannot starve others.

- Use topology-aware placement to reduce cross-zone hops and surprise latency.

- Test node drains and upgrades in staging with the same policies as production.

- Prefer backends that keep the control plane responsive during rebuilds and resyncs.
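One way to keep StorageClasses standardized is to lint their manifests before they reach a cluster. The sketch below checks a manifest, already parsed into a Python dict, against a small set of team defaults. `allowVolumeExpansion` and `volumeBindingMode` are standard StorageClass fields; the provisioner name and the `replicas` and `qosTier` parameters are hypothetical placeholders, since parameter names depend on the backend.

```python
# Team-wide defaults. WaitForFirstConsumer delays binding until a pod is
# scheduled, which lets placement take the pod's topology into account.
REQUIRED_FIELDS = {
    "allowVolumeExpansion": True,
    "volumeBindingMode": "WaitForFirstConsumer",
}
# Hypothetical backend parameters; real names vary by CSI driver.
REQUIRED_PARAMETERS = ("replicas", "qosTier")


def lint_storage_class(manifest: dict) -> list[str]:
    """Return a list of human-readable problems; empty means compliant."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if manifest.get(field) != expected:
            problems.append(f"{field} should be {expected!r}")
    parameters = manifest.get("parameters") or {}
    for key in REQUIRED_PARAMETERS:
        if key not in parameters:
            problems.append(f"parameters.{key} is missing")
    return problems


compliant = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "standard-block"},
    "provisioner": "csi.example.com",  # placeholder driver name
    "allowVolumeExpansion": True,
    "volumeBindingMode": "WaitForFirstConsumer",
    "parameters": {"replicas": "2", "qosTier": "gold"},
}
drifted = {**compliant, "volumeBindingMode": "Immediate", "parameters": {"replicas": "2"}}

print(lint_storage_class(compliant))
print(lint_storage_class(drifted))
```

Running a check like this in CI is one way to apply the same policies in staging and production, so a drain test in staging exercises the same settings that production will see.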

Trade-offs Across Common Architectures

The table below compares common approaches, focusing on the control-plane experience during provisioning, upgrades, and failure events.

| Architecture choice | Control-plane strength | Operational fit | Typical risk |
|---|---|---|---|
| Local PV on NVMe | Fast and simple for single-node intent | Best for pinned workloads | Reschedules can break assumptions |
| Traditional SAN | Centralized workflows | Fits legacy estates | Shared contention, slower change cycles |
| General-purpose SDS | Flexible policies | Broad use cases | Needs tuning to avoid jitter |
| NVMe/TCP Software-defined Block Storage | Strong policy + scale-out | Kubernetes-first teams | Requires clear QoS and placement rules |

Achieving Consistent Storage Control Plane Outcomes with Simplyblock™

Simplyblock™ aligns the Storage Control Plane with Kubernetes-first operations, so platform teams can standardize how volumes get created, placed, expanded, and protected. It also pairs that orchestration layer with an SPDK-based, user-space data path that targets low overhead and strong tail-latency control, especially under mixed workloads.

This matters when multiple teams share the same infrastructure. Simplyblock supports multi-tenancy and QoS, so platform owners can protect critical services from noisy neighbors. It also supports flexible deployment models, including hyper-converged and disaggregated layouts, so teams can choose the right split between compute and storage without rebuilding the platform.

Where Storage Control Plane Design Is Headed

The next wave focuses on two goals: lower CPU cost per I/O and tighter policy enforcement across fleets. Expect more automation around placement, faster rebuild workflows, and more offload to DPUs/IPUs for NVMe-oF targets and data movement.

As that happens, the Storage Control Plane will act more like a scheduler for storage intent, not just a provisioning tool, while the data plane keeps pushing closer to “local-NVMe feel” over Ethernet.

Related Terms

Teams often review these pages alongside Storage Control Plane when they tune Kubernetes Storage workflows.

- CSI Control Plane vs Data Plane

- CSI Resize Controller

- Kubernetes Volume Attachment

- Distributed Block Storage Architecture

Questions and Answers

The storage control plane manages provisioning, replication, scaling, and policy enforcement, while the data plane handles actual I/O. In distributed systems, the control plane coordinates cluster state and volume lifecycle within a distributed block storage architecture.

The control plane orchestrates operations like volume creation, snapshots, and failover. The data plane processes read/write requests directly between applications and storage nodes. Simplyblock separates these layers within its software-defined storage platform to maintain performance and reliability.

In Kubernetes, the control plane handles dynamic provisioning through CSI. Slow control-plane operations can delay pod scheduling and scaling. Simplyblock integrates via its CSI-based architecture to streamline persistent volume lifecycle management.

The control plane affects storage performance only indirectly. It doesn’t process I/O, but inefficient control-plane operations can slow scaling, replication setup, or failover. Architectures built on scale-out storage design aim to keep control tasks from bottlenecking performance-critical workloads.

Simplyblock automates provisioning, replication, and encryption policies through a distributed control layer while keeping the data path optimized with NVMe over TCP. This separation ensures high performance without operational complexity.