IOPS (Input/Output Operations Per Second)

Terms related to simplyblock

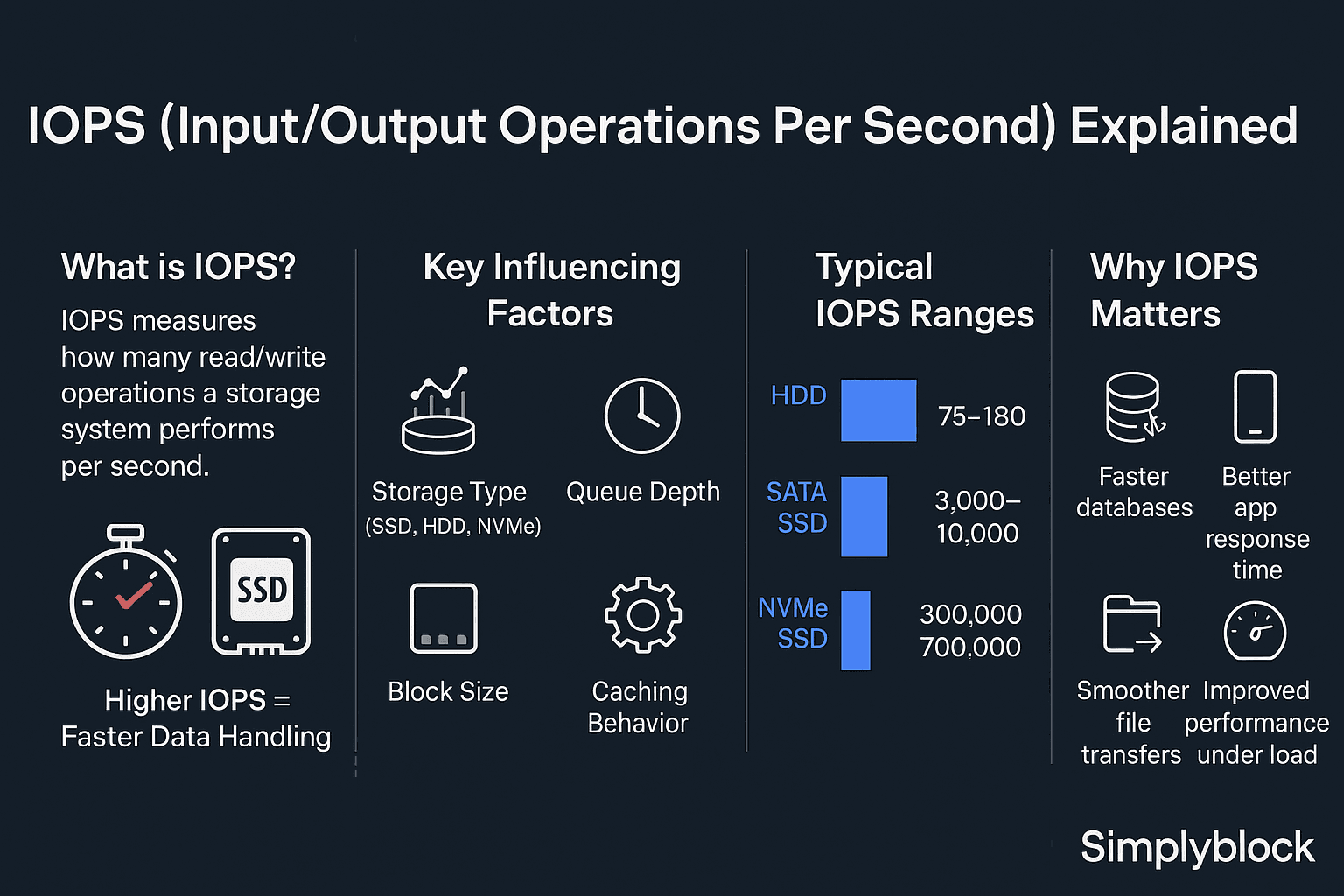

IOPS stands for Input/Output Operations Per Second. It’s a measure of how many read and write operations your storage system can handle each second. While most teams focus on storage capacity or bandwidth, IOPS is what determines how fast your system responds under load.

If IOPS is too low, apps slow down: database queries lag and backup windows stretch. Even with decent CPU and memory, your entire stack can feel sluggish. High IOPS, on the other hand, keeps systems responsive even when traffic or workload spikes.

How IOPS Works Under the Hood

IOPS reflects how many I/O operations a device can complete in a single second. These operations are either reads or writes, and they’re influenced by multiple factors—not just hardware specs.

Storage type makes a big difference. SSDs, including those used in Proxmox storage setups, typically handle far more IOPS than traditional hard drives. Queue depth matters too: it defines how many operations can be in flight at once. Latency, block size, and caching behavior also shape how efficiently I/O gets processed. The raw number alone doesn't tell the full story, but it gives a reliable signal of how your storage behaves under stress.
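The relationship between queue depth, latency, and IOPS can be approximated with Little's Law: if a device keeps a steady number of operations in flight, the IOPS it completes is roughly that queue depth divided by the average per-operation latency. The sketch below is a back-of-the-envelope model, not a benchmark; the function name `estimate_iops` is hypothetical, and real devices stop scaling once they saturate.

```python
def estimate_iops(queue_depth: int, avg_latency_s: float) -> float:
    """Approximate steady-state IOPS via Little's Law: in-flight ops / average latency."""
    if queue_depth <= 0 or avg_latency_s <= 0:
        raise ValueError("queue_depth and avg_latency_s must be positive")
    return queue_depth / avg_latency_s


if __name__ == "__main__":
    # Hypothetical device with a 100 µs average latency, at a few queue depths.
    for qd in (1, 8, 32):
        print(f"QD={qd:>2}: ~{estimate_iops(qd, 100e-6):,.0f} IOPS")
```

Under this model, 100 µs latency gives about 10,000 IOPS at queue depth 1 and about 320,000 at queue depth 32, which is why deep queues matter for fast drives.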


IOPS vs Throughput vs Latency – Know the Difference

IOPS is often confused with throughput and latency, but the three measure different things. Throughput is the amount of data transferred per second, usually in MB/s. Latency is the time a single read or write takes to complete. IOPS is the count of read/write operations completed every second.

| Metric | What It Measures | Why It Matters |
|---|---|---|
| IOPS | Number of operations per second | Best for small, random workloads |
| Throughput | Total data moved per second (MB/s) | Useful for large, sequential tasks |
| Latency | Time to complete one operation | Impacts responsiveness |
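IOPS and throughput are linked by block size: throughput ≈ IOPS × block size. The helpers below are illustrative names, not part of any tool, and "MB" here means 10^6 bytes.

```python
def throughput_mb_s(iops: float, block_size_bytes: int) -> float:
    """Throughput in MB/s (decimal, 10^6 bytes) for a given IOPS rate and block size."""
    return iops * block_size_bytes / 1_000_000


def iops_for_throughput(mb_s: float, block_size_bytes: int) -> float:
    """IOPS needed to sustain a target throughput (MB/s) at a given block size."""
    return mb_s * 1_000_000 / block_size_bytes


if __name__ == "__main__":
    # The same IOPS figure means very different throughput at small vs large blocks.
    print(f"{throughput_mb_s(10_000, 4096):,.2f} MB/s at 4 KiB")
    print(f"{throughput_mb_s(10_000, 1_048_576):,.2f} MB/s at 1 MiB")
```

At 10,000 IOPS, 4 KiB blocks move about 41 MB/s while 1 MiB blocks move about 10,486 MB/s, which is why IOPS is the limiting metric for small, random I/O and throughput for large, sequential I/O.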

Each metric has its place, but for workloads like databases, VMs, or messaging systems, IOPS is often the one that makes or breaks performance.

Real-World IOPS – Storage Type Comparison

Not all storage is created equal. Here’s how IOPS performance typically stacks up across storage types:

| Storage Type | Typical IOPS (Random Read/Write) | Typical Latency | Ideal Use Case |
|---|---|---|---|
| HDD (7200 RPM) | 75–200 | 5–10 ms | Backup, archival storage |
| SATA SSD | 5,000–100,000 | ~100 µs | General-purpose VMs, web apps |
| NVMe SSD | 300,000–1,000,000+ | ~20–50 µs | Databases, real-time workloads |

If you’re still using HDDs for production workloads, you’re likely paying a performance penalty. Even basic SSDs offer a massive leap, and NVMe is essential for high-throughput, low-latency tasks.
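To make the comparison concrete, the sketch below estimates how long one million random operations would take at a representative IOPS figure for each storage type. The figures are picked from within the ranges in the table for illustration only; they are not measurements, and real devices vary with queue depth, block size, and read/write mix.

```python
# Representative IOPS values chosen from within the table's ranges (illustrative, not measured).
REPRESENTATIVE_IOPS = {
    "HDD (7200 RPM)": 150,
    "SATA SSD": 50_000,
    "NVMe SSD": 500_000,
}


def seconds_for_ops(num_ops: int, iops: float) -> float:
    """Time needed to complete num_ops I/O operations at a sustained IOPS rate."""
    return num_ops / iops


if __name__ == "__main__":
    ops = 1_000_000  # hypothetical workload: one million random reads
    for name, iops in REPRESENTATIVE_IOPS.items():
        print(f"{name:<15} {seconds_for_ops(ops, iops):>10,.1f} s")
```

At these assumed rates, the HDD needs roughly 6,667 seconds (close to two hours), the SATA SSD 20 seconds, and the NVMe SSD 2 seconds.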

What Impacts IOPS – The Factors That Actually Matter

- Read vs. write mix – Writes are typically slower and more resource-intensive than reads.

- Block size – Smaller blocks can increase IOPS, but not always throughput.

- Queue depth – Shallow queues limit parallelism, reducing overall efficiency.

- Storage type – SSDs and NVMe drives far outperform spinning disks.

- Workload pattern – Random I/O workloads are bound mainly by IOPS, while sequential ones depend more on throughput.

- Caching – Proper caching reduces demand on physical storage.

- Drivers and firmware – Outdated or misconfigured software can limit hardware potential.
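The block-size trade-off in the list above can be modeled with two ceilings: a maximum IOPS and a maximum bandwidth. Whichever limit is reached first caps the device. This is a simplified model using made-up limits for a hypothetical drive (500,000 IOPS, 3 GB/s); real drives also shift with read/write mix, queue depth, and caching.

```python
def effective_iops(block_size_bytes: int, max_iops: float, max_bandwidth_bytes_s: float) -> float:
    """IOPS a device can sustain when both an IOPS ceiling and a bandwidth ceiling apply."""
    bandwidth_bound = max_bandwidth_bytes_s / block_size_bytes
    return min(max_iops, bandwidth_bound)


if __name__ == "__main__":
    # Hypothetical drive limits (assumptions, not vendor specs).
    MAX_IOPS = 500_000
    MAX_BW = 3_000_000_000  # bytes per second

    for bs in (4096, 16_384, 131_072, 1_048_576):
        iops = effective_iops(bs, MAX_IOPS, MAX_BW)
        print(f"bs={bs // 1024:>5} KiB  IOPS={iops:>9,.0f}  throughput={iops * bs / 1e6:>7,.0f} MB/s")
```

Under these assumptions, 4 KiB blocks are IOPS-bound (500,000 IOPS, about 2,048 MB/s), while 16 KiB and larger blocks are bandwidth-bound: IOPS falls, but throughput rises to the 3,000 MB/s ceiling.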

These factors often interact in complex ways, especially in Kubernetes environments where workloads shift dynamically and storage needs vary minute to minute.

IOPS in the Cloud vs On-Prem – What Changes

On-prem setups tie IOPS directly to physical hardware. If you need more, you either upgrade disks or build larger RAID arrays. You manage everything—performance, scaling, and fault tolerance.

In the cloud, things shift. Providers like AWS let you provision IOPS separately from disk size, for example on Amazon EBS gp3 and io2 volumes. That's more flexible, but it also introduces variability. Shared infrastructure, noisy neighbors, and network latency can all impact actual IOPS performance, even if you're paying for higher numbers.

This is where cloud cost optimization becomes critical—allocating the right level of performance without overspending.

How Simplyblock Makes IOPS Easier to Manage

Managing IOPS at scale can get complicated—especially in Kubernetes, Proxmox, or hybrid environments. Simplyblock simplifies that. It delivers high IOPS performance with NVMe-over-TCP and integrates directly with Kubernetes via CSI, so storage fits cleanly into your deployment workflow.

It also handles replication, failover, and thin provisioning out of the box. No need for complex tuning or custom logic. Whether you’re running CI/CD pipelines, multi-tenant workloads, or clustered deployments, Simplyblock gives you speed and control—without the overhead.

Why IOPS Deserves Your Attention

IOPS isn’t just another benchmark—it directly affects how your systems feel in real-world use. If your storage can’t keep up, everything slows down: apps stall, databases crawl, and users wait. But with the right IOPS planning, you avoid all of that.

As you scale distributed systems or run stateful services in containers, IOPS becomes critical. Whether you’re handling persistent volumes, transactional databases, or backup and recovery, predictable storage performance matters.

Getting it right isn’t just about buying better drives—it’s about understanding the entire stack, from firmware to the hypervisor layer.

Questions and answers

How is IOPS measured?

IOPS is measured by counting how many input/output operations a storage system completes per second, including both read and write requests. Tools like fio simulate workloads for benchmarking. For deeper insights, check our guide on IOPS, throughput, and latency.
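That counting definition is easy to see in code. The sketch below (Unix-only, because it uses `os.pread`) issues random 4 KiB reads against a file for a fixed time and divides completed operations by elapsed seconds. It runs single-threaded at queue depth 1 and goes through the OS page cache, so it overstates device IOPS and is no substitute for fio; `measure_read_iops` is a hypothetical helper name.

```python
import os
import random
import tempfile
import time


def measure_read_iops(path: str, block_size: int = 4096, duration_s: float = 1.0) -> float:
    """Issue random block-aligned reads for duration_s seconds; return completed ops per second."""
    num_blocks = os.path.getsize(path) // block_size
    if num_blocks == 0:
        raise ValueError("file is smaller than one block")
    fd = os.open(path, os.O_RDONLY)
    try:
        ops = 0
        start = time.perf_counter()
        deadline = start + duration_s
        while time.perf_counter() < deadline:
            offset = random.randrange(num_blocks) * block_size
            os.pread(fd, block_size, offset)  # one read operation
            ops += 1
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return ops / elapsed


if __name__ == "__main__":
    # 64 MiB scratch file; most reads will hit the page cache.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(os.urandom(64 * 1024 * 1024))
        path = f.name
    try:
        print(f"~{measure_read_iops(path):,.0f} read IOPS (cached, single thread, QD=1)")
    finally:
        os.remove(path)
```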

How does NVMe over TCP improve IOPS compared to iSCSI?

NVMe over TCP is designed for low latency and high parallelism, allowing more operations per second than iSCSI. In real-world benchmarks, IOPS increased by over 30% when switching from iSCSI to NVMe/TCP, without changing the underlying hardware.

Why is high IOPS important?

High IOPS ensures faster data access for demanding applications like databases, analytics engines, and file servers. Low IOPS can cause performance bottlenecks. That's why modern workloads benefit from NVMe storage solutions that offer significantly higher IOPS.

What factors affect IOPS performance?

IOPS performance depends on block size, queue depth, storage protocol, and device type. NVMe over Fabrics handles parallel operations more efficiently than legacy protocols and interfaces like iSCSI or SATA.

How do you benchmark IOPS?

Benchmarking tools such as fio or vdbench simulate I/O workloads to measure IOPS under different conditions. Test with queue depths and block sizes that match your actual usage patterns.
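The advice about realistic queue depths and block sizes translates naturally into a parameter sweep. The sketch below only builds fio command lines for each combination of block size and queue depth; it does not run them. The options used (`--rw`, `--bs`, `--iodepth`, `--ioengine=libaio`, `--direct=1`, `--time_based`, `--output-format=json`) are standard fio options, but the target path, 1G file size, 60-second runtime, and sweep values are assumptions to adjust for your environment. The `libaio` engine is Linux-specific.

```python
from itertools import product


def fio_sweep(filename: str, block_sizes: list[str], queue_depths: list[int],
              rw: str = "randread", runtime_s: int = 60) -> list[list[str]]:
    """Build one fio argument list per (block size, queue depth) combination."""
    commands = []
    for bs, qd in product(block_sizes, queue_depths):
        commands.append([
            "fio",
            f"--name={rw}-bs{bs}-qd{qd}",
            f"--filename={filename}",
            f"--rw={rw}",
            f"--bs={bs}",
            f"--iodepth={qd}",
            "--ioengine=libaio",   # Linux asynchronous I/O engine
            "--direct=1",          # bypass the page cache so the device itself is measured
            f"--runtime={runtime_s}",
            "--time_based",
            "--size=1G",
            "--output-format=json",
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical scratch file path; never point write tests at a device holding data.
    for cmd in fio_sweep("/mnt/test/fio.dat", ["4k", "64k"], [1, 16, 64]):
        print(" ".join(cmd))
```

Each run's JSON output contains per-job `read` and `write` sections with an `iops` field, which makes it straightforward to compare results across the sweep.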