Weaviate

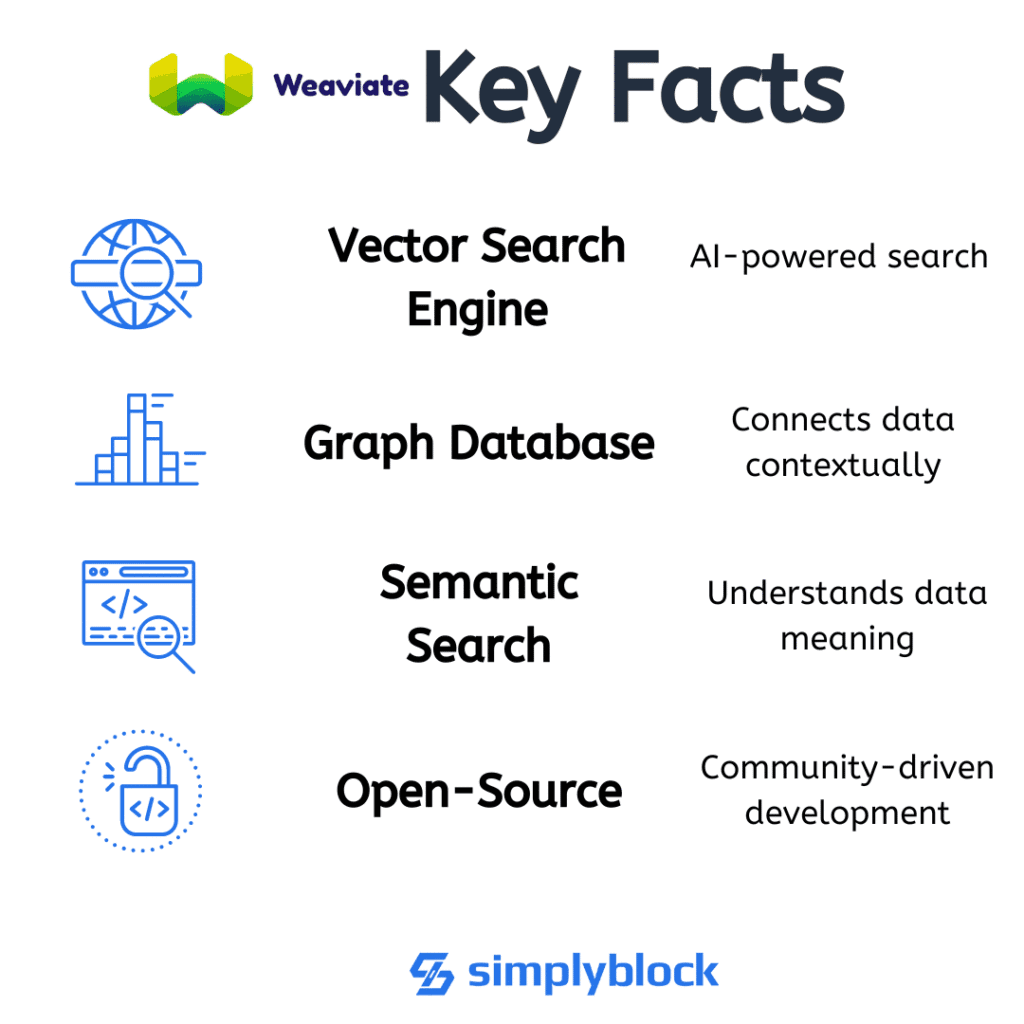

Weaviate is an open-source, cloud-native vector database for storing, indexing, and querying high-dimensional vectors produced by machine learning models. Unlike traditional databases, which organize structured data in rows and columns, Weaviate enables semantic search over vector embeddings: numerical representations of unstructured data such as text, images, or audio. It is widely used in AI, search, recommendation, and natural language processing (NLP) applications.

How Weaviate Works

At its core, Weaviate combines a vector index built on HNSW (Hierarchical Navigable Small World) graphs with a modular architecture that can call external machine learning models or use its built-in model inference capabilities. Key components include:

- Vector Index: Stores vector embeddings for similarity-based search

- Data Objects: JSON objects that follow a schema, each with an optional vector attached

- Modules: Extend functionality, including OpenAI, Cohere, Hugging Face, and custom inference APIs

- GraphQL API: Offers intuitive querying across structured metadata and semantic vectors

- Hybrid Search: Combines full-text (BM25) with vector-based search

Weaviate supports local and remote inference, enabling real-time search over large unstructured datasets.
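As a rough illustration of how a hybrid query can blend keyword and vector signals, the sketch below min-max normalizes a set of BM25 scores and a set of vector-similarity scores, then mixes them with a weight `alpha`. The function names, document IDs, and scores are invented for this example, and the fusion shown is a simplified assumption rather than Weaviate's actual implementation.

```python
def min_max(scores):
    """Scale a {doc_id: score} map into the range [0, 1]."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores are equal, so treat every document as a full match.
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(bm25_scores, vector_scores, alpha=0.5):
    """Blend keyword and vector scores.

    alpha=1.0 uses only the vector score, alpha=0.0 only the keyword score.
    A document missing from one result set contributes 0 for that signal.
    """
    kw = min_max(bm25_scores)
    vec = min_max(vector_scores)
    fused = {
        doc: alpha * vec.get(doc, 0.0) + (1 - alpha) * kw.get(doc, 0.0)
        for doc in set(kw) | set(vec)
    }
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores returned by a keyword search and a vector search.
bm25 = {"doc-a": 7.2, "doc-b": 3.1, "doc-c": 0.4}
vectors = {"doc-a": 0.62, "doc-b": 0.91, "doc-d": 0.55}

ranking = hybrid_rank(bm25, vectors, alpha=0.5)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

With equal weighting, a document that scores well on both signals (`doc-b` here) can outrank one that leads on keywords alone. Shifting `alpha` toward 0 or 1 moves the ranking toward pure keyword or pure vector ordering.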

Weaviate vs Traditional Databases

Vector databases like Weaviate differ significantly from relational or NoSQL databases. Here’s a comparison:

| Feature | Weaviate | Relational/NoSQL DBs |
|---|---|---|
| Data Model | Vector-based + JSON schema | Row/Document-based |
| Search Type | Semantic (vector similarity) | Keyword, primary key |
| Indexing | HNSW graph for nearest neighbors | B-Trees, hash indexes |
| AI Integration | Built-in and pluggable ML inference | External services |
| Query Language | GraphQL + REST | SQL or NoSQL API |

Weaviate is optimized for workloads that require contextual understanding rather than exact keyword matching.
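The difference between exact keyword matching and vector similarity can be shown with a small, self-contained sketch. The three-dimensional embeddings below are made up for illustration (real embedding models produce hundreds or thousands of dimensions), and the brute-force scan stands in for what an HNSW index approximates at scale.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical documents with hand-picked toy embeddings.
documents = {
    "Used car listings": [0.9, 0.1, 0.0],
    "Automobile dealership near me": [0.8, 0.2, 0.1],
    "Fresh pasta recipes": [0.0, 0.1, 0.9],
}

query_text = "car"
query_vector = [0.85, 0.15, 0.05]  # assumed embedding of the query

# Keyword search: only titles containing the exact token match.
keyword_hits = [t for t in documents if query_text in t.lower().split()]

# Vector search: rank every title by similarity and keep the top two.
ranked = sorted(
    documents,
    key=lambda t: cosine_similarity(query_vector, documents[t]),
    reverse=True,
)
semantic_hits = ranked[:2]

print("keyword:", keyword_hits)
print("semantic:", semantic_hits)
```

The keyword search returns only the title containing the token "car", while the similarity ranking also surfaces the "Automobile" title because its vector lies close to the query vector.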

Storage Requirements for Weaviate

Weaviate is write-heavy during ingest and latency-sensitive during search, especially when scaling across large datasets and vector dimensions. Storage infrastructure must support:

- Low-latency random reads: For vector similarity lookups

- High IOPS: To ingest embeddings and metadata efficiently

- Snapshot support: For backups, cloning, and model versioning

- Durability and fault-tolerance: Especially in multi-zone or hybrid setups

- Scalability: Across nodes and cloud zones

Deploying Weaviate on NVMe over TCP with simplyblock™ enhances performance at scale by providing sub-millisecond latencies and parallel I/O across CPU cores.
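To get a feel for how dataset size and vector dimensions translate into capacity, a back-of-the-envelope estimate can help. The sketch below assumes 4-byte float32 values and uses placeholder figures for graph links per vector and bytes per link; these are illustrative assumptions, not Weaviate defaults, and real deployments also store object data, inverted indexes, and write-ahead logs.

```python
GIB = 1024 ** 3


def estimate_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw embedding storage, assuming float32 values by default."""
    return num_vectors * dimensions * bytes_per_value


def estimate_graph_bytes(num_vectors, links_per_vector=64, bytes_per_link=8):
    """Rough HNSW graph overhead; both defaults are placeholder assumptions."""
    return num_vectors * links_per_vector * bytes_per_link


# Example: 10 million embeddings with 768 dimensions each.
n, d = 10_000_000, 768
vector_gib = estimate_vector_bytes(n, d) / GIB
graph_gib = estimate_graph_bytes(n) / GIB

print(f"vectors: {vector_gib:.1f} GiB")
print(f"graph:   {graph_gib:.1f} GiB")
print(f"total:   {vector_gib + graph_gib:.1f} GiB")
```

Under these assumptions the raw vectors alone come to roughly 28.6 GiB, which is why both dataset size and dimensionality matter when planning volumes.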

Weaviate in Kubernetes and Cloud-Native Environments

Weaviate is built for cloud-native deployment and provides Helm charts for Kubernetes orchestration. However, stateful vector indexes must persist across pods, which makes Kubernetes-native block storage critical.

With simplyblock, Weaviate benefits from:

- Persistent NVMe volumes provisioned via CSI driver

- Volume snapshots for dataset versioning or model rollback

- High availability across availability zones

- Thin provisioning and volume cloning for staging environments

- Consistent performance across hybrid-cloud and edge environments
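As one way to picture the snapshot workflow, the sketch below builds a Kubernetes `VolumeSnapshot` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The snapshot name, PVC name, namespace, and snapshot class are hypothetical; the actual names depend on how the Helm release and the CSI driver are configured in a given cluster.

```python
import json


def volume_snapshot_manifest(name, pvc_name, snapshot_class, namespace="default"):
    """Build a VolumeSnapshot object for the snapshot.storage.k8s.io/v1 API."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


manifest = volume_snapshot_manifest(
    name="weaviate-data-snap-1",              # hypothetical snapshot name
    pvc_name="weaviate-data-weaviate-0",      # hypothetical PVC name
    snapshot_class="example-snapshot-class",  # hypothetical snapshot class
    namespace="weaviate",                     # hypothetical namespace
)

print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied to a cluster that has a CSI snapshot controller and a matching `VolumeSnapshotClass` installed.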

Weaviate Use Cases

Weaviate is widely adopted in AI-driven, search-centric applications, including:

- Semantic Search Engines: Contextual document or media retrieval

- Recommendation Systems: Vector matching for user/item similarity

- Conversational AI: Retrieval-Augmented Generation (RAG) backends

- E-commerce: Visual or text-based product discovery

- Enterprise Knowledge Graphs: Linking unstructured internal data sources

When paired with high-performance software-defined storage (SDS), Weaviate can deliver low-latency responses for AI inference and data lookup.

Simplyblock™ Enhancements for Weaviate

Running Weaviate on simplyblock infrastructure adds operational resilience and storage performance:

- NVMe-over-TCP for parallel vector search across nodes

- Copy-on-write snapshotting for version control of indexes

- Advanced erasure coding to reduce replication costs

- Multi-tenant QoS and secure volume isolation

- Cloud-neutral storage support for hybrid deployments

This allows Weaviate to scale with user demand without incurring performance degradation or operational complexity.
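The cost argument for erasure coding over replication comes down to simple arithmetic. The sketch below compares the raw capacity needed to store the same usable data under three-way replication and under a 4+2 erasure coding scheme; both schemes are illustrative assumptions, not a statement of simplyblock's default configuration.

```python
def replication_raw_capacity(usable_tb, copies=3):
    """Raw capacity when every block is stored `copies` times."""
    return usable_tb * copies


def erasure_coding_raw_capacity(usable_tb, data_chunks, parity_chunks):
    """Raw capacity when data is split into data chunks plus parity chunks."""
    return usable_tb * (data_chunks + parity_chunks) / data_chunks


usable_tb = 100  # hypothetical amount of index and object data

replicated = replication_raw_capacity(usable_tb, copies=3)
coded = erasure_coding_raw_capacity(usable_tb, data_chunks=4, parity_chunks=2)

print(f"3x replication: {replicated} TB raw")
print(f"4+2 erasure coding: {coded} TB raw")
```

Both layouts in this example tolerate the loss of two devices, but the erasure-coded layout needs half the raw capacity of the replicated one.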

Related Terms

Teams often review these glossary pages alongside Weaviate when they’re tightening ingest-to-index timelines, keeping recovery workflows simple, and preventing storage-path bottlenecks from showing up as query latency.

- CSI Snapshot Controller

- Copy-On-Write (CoW)

- Zero-Copy I/O

- NVMe Multipathing

Questions and Answers

What is Weaviate and what is it used for?

Weaviate is an open-source vector database that enables semantic search across text, images, and more using built-in machine learning models. It is well suited to AI-driven applications such as recommendation engines, natural language search, and multi-modal data indexing.

Can Weaviate run on Kubernetes?

Yes, Weaviate supports containerized deployment and runs well on Kubernetes. For high-performance use cases, pair it with NVMe-powered Kubernetes storage for fast indexing, responsive queries, and reliable persistent volumes.

Why use NVMe over TCP with Weaviate?

Vector workloads like Weaviate require high IOPS and low latency. NVMe over TCP offers the speed and scalability needed for real-time AI search, especially when handling large embedding sets or concurrent queries.

How is vector data secured in Weaviate?

Weaviate supports TLS and authentication but relies on the infrastructure layer for storage encryption. To secure vector data, use encryption at rest at the volume level, which supports compliance requirements and secure multi-tenant deployments.

Is Weaviate scalable for large deployments?

Yes, Weaviate is built for horizontal scalability and sharding. Combined with software-defined storage, it supports large-scale deployments with flexible performance tuning and infrastructure control.